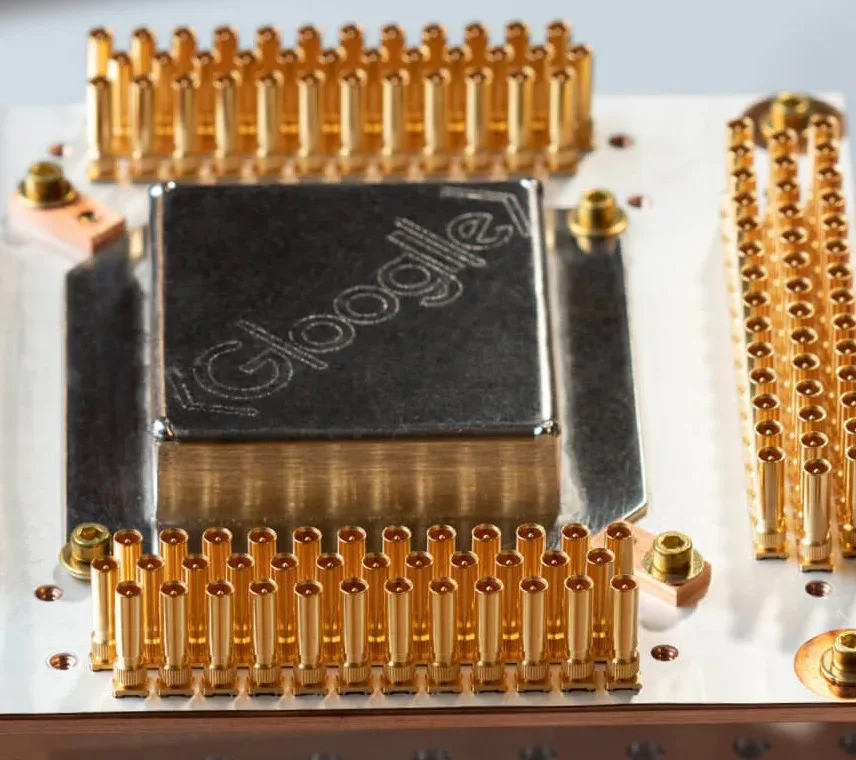

Google recently showed that its Sycamore quantum computer can detect and fix computational errors. The result is an important step toward bringing large-scale quantum computing to market, because current systems generate more errors than they can fix. The error correction techniques Google demonstrated could vastly improve the computational power of quantum computers.

What is error correction?

Error correction is a standard feature of computing, whether classical or quantum. In classical computing, data is stored as ones and zeroes, the only two possible states. Transmitting that data with extra parity bits, which warn when a 0 has flipped to a 1 or vice versa, means these errors can be found and fixed.
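To make the parity idea concrete, here is a minimal sketch in Python. It uses the Hamming(7,4) code, which is our choice of illustration and is not mentioned in the reporting: a single parity bit can only reveal that some bit flipped, while three overlapping parity bits are enough to locate one flipped bit among seven and flip it back. The function names `encode` and `decode` are hypothetical.

```python
def encode(data):
    """Encode 4 data bits as a 7-bit Hamming(7,4) codeword."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over codeword positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def decode(codeword):
    """Fix at most one flipped bit, then return the 4 data bits."""
    c = list(codeword)
    # Recompute each parity check; a 1 means that check failed.
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # The failed checks spell out the 1-based position of the error.
    position = s1 + 2 * s2 + 4 * s4  # 0 means no error was detected
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


word = encode([1, 0, 1, 1])
word[4] ^= 1          # simulate one bit flipping in transit
print(decode(word))   # [1, 0, 1, 1]
```

This scheme only handles one flipped bit per codeword; two or more flips in the same codeword would be miscorrected.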

In quantum computing, the quantum bit, or qubit, has a more complex state. A qubit can exist in a superposition of 1 and 0, and if you try to measure that state directly, you destroy the data. The proposed solution to this problem is to cluster many physical qubits into one “logical qubit”.

Logical qubits have been created before, but until now they had not been used for error correction.

How did Google AI Quantum tackle the error correction problem?

A Google AI Quantum team led by Julian Kelly has demonstrated this error correction concept on Google’s Sycamore quantum computer. The team created logical qubits made of up to 21 physical qubits each, and found that logical qubit error rates dropped exponentially with each additional physical qubit.
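The exponential drop can be illustrated with a classical stand-in. The sketch below is not the Sycamore experiment: it assumes a plain repetition code in which each of `n` copies of a bit flips independently with probability `p`, and the value is recovered by majority vote. The function name `logical_error_rate` and the 5% error rate are assumptions chosen for illustration.

```python
from math import comb


def logical_error_rate(n, p):
    """Chance that a majority vote over n copies gives the wrong bit.

    Assumes n is odd and each copy flips independently with probability p.
    The vote fails when more than half of the copies have flipped.
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


# Each step up in size shrinks the logical error rate by a similar factor,
# which is what an exponential drop looks like.
for n in (3, 5, 7, 9, 11):
    print(n, logical_error_rate(n, 0.05))
```

In this toy model there is no measurement noise and every bit is a data bit, so the numbers are not comparable with those from real hardware.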

The team says it was able to measure the extra qubits in a way that did not collapse the encoded state. Those measurements still gave the scientists enough information to work out whether errors had occurred.
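A classical analogy shows how a measurement can reveal errors without revealing the stored value. The sketch below assumes a simple repetition code and checks only whether neighbouring bits agree; the names `syndrome` and `with_flip` are hypothetical, and real quantum hardware performs the equivalent check with dedicated measurement qubits rather than by reading the data directly.

```python
def syndrome(bits):
    """Parity of each neighbouring pair: 1 marks a disagreement."""
    return [a ^ b for a, b in zip(bits, bits[1:])]


def with_flip(bits, index):
    """Return a copy of bits with one position flipped."""
    damaged = list(bits)
    damaged[index] ^= 1
    return damaged


logical_zero = [0, 0, 0, 0, 0]
logical_one = [1, 1, 1, 1, 1]

# The same error produces the same syndrome for both encoded values,
# so the checks locate the error without saying which value is stored.
print(syndrome(with_flip(logical_zero, 2)))  # [0, 1, 1, 0]
print(syndrome(with_flip(logical_one, 2)))   # [0, 1, 1, 0]
```

The two 1s in the syndrome sit on either side of the flipped bit, which is how a decoder would know which bit to repair.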

“This is basically our first half step along the path to demonstrate that. A viable way of getting to really large-scale, error-tolerant computers. It’s sort of a look ahead for the devices that we want to make in the future.”

Julian Kelly, researcher at Google AI Quantum

What are the future challenges?

Although the team has demonstrated this solution in concept, a big engineering challenge remains. Adding more physical qubits to each logical qubit has its own downside, because each physical qubit is itself prone to errors. As a result, the chance of a logical qubit encountering an error rises as the number of qubits inside it grows.

This process has a breakeven point called the threshold: the point past which error correction catches more problems than the extra qubits introduce. Google’s error correction does not yet meet that threshold. Getting there will require less noisy physical qubits that encounter fewer errors, and more of them devoted to each logical qubit. Google’s team believes it will take around 1000 physical qubits to make a single logical qubit. Sycamore currently has only 54 physical qubits.
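The threshold idea can be seen in a toy classical model. The sketch below assumes a repetition code decoded by majority vote, where each bit flips independently with probability `p`; the function name `majority_vote_failure` and the sample error rates are assumptions. In this model the breakeven sits at `p = 0.5`, a property of the toy model only. Thresholds for real quantum codes are far lower and depend on the code and the hardware.

```python
from math import comb


def majority_vote_failure(n, p):
    """Chance that more than half of n bits flip (n odd, flip rate p)."""
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


for p in (0.1, 0.6):
    small = majority_vote_failure(3, p)
    large = majority_vote_failure(21, p)
    verdict = "more bits help" if large < small else "more bits hurt"
    print(f"p={p}: n=3 -> {small:.4f}, n=21 -> {large:.4f} ({verdict})")
```

Below the breakeven, growing the code suppresses errors; above it, every added bit makes the encoded value less reliable, which is why less noisy physical qubits have to come first.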

“If we couldn’t do this we’re not going to have a large scale machine. I applaud the fact they’ve done it, simply because without this, without this advance, you will still have uncertainty about whether the roadmap towards fault tolerance was feasible. They removed those doubts.”

Peter Knight at Imperial College London