Researchers Jun-Jie Zhang and Deyu Meng have studied the vulnerability of neural networks to small, non-random perturbations known as adversarial attacks. These attacks can cause the networks to produce high-confidence yet erroneous predictions. The researchers found a mathematical similarity between this vulnerability and the uncertainty principle in quantum physics. This suggests that neural networks face an inherent trade-off between accuracy and robustness, and that there may be an intrinsic limit on how precisely both features and attacks can be measured at the same time. The finding could have implications for the reliability of technologies that rely on deep learning.

The Vulnerability of Neural Networks: A Quantum Perspective

Neural networks, despite their widespread success in various domains, have been found to be inherently vulnerable to small, non-random perturbations, often referred to as adversarial attacks. These attacks, which are derived from the gradient of the loss function with respect to the input, expose a systemic fragility within the network structure. This article explores the mathematical congruence between this vulnerability and the uncertainty principle of quantum physics, offering an interdisciplinary perspective on the limitations of neural networks.
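
To make the idea of a gradient-derived perturbation concrete, here is a minimal sketch using NumPy. It assumes a toy logistic-regression model rather than a deep network, and it uses the sign of the input gradient scaled by a step size (the construction known as the fast gradient sign method). The article does not say which attack the study uses, so the model, the weights `w` and `b`, the input `x`, and the step `epsilon` are all hypothetical values chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    """Logistic function mapping a score to a probability."""
    return 1.0 / (1.0 + np.exp(-z))

def loss_and_input_gradient(w, b, x, y):
    """Binary cross-entropy loss of a logistic model and its gradient w.r.t. the input x."""
    p = sigmoid(np.dot(w, x) + b)
    loss = -(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))
    grad_x = (p - y) * w  # closed-form d(loss)/dx for logistic regression
    return loss, grad_x

def gradient_sign_attack(w, b, x, y, epsilon):
    """Move each input coordinate by epsilon in the direction that increases the loss."""
    _, grad_x = loss_and_input_gradient(w, b, x, y)
    return x + epsilon * np.sign(grad_x)

# Hypothetical toy model and input (assumptions, not taken from the study).
w = np.array([2.0, -3.0, 1.0])
b = 0.1
x = np.array([0.5, -0.2, 0.3])
y = 1.0  # true label

x_adv = gradient_sign_attack(w, b, x, y, epsilon=0.4)

loss_clean, _ = loss_and_input_gradient(w, b, x, y)
loss_adv, _ = loss_and_input_gradient(w, b, x_adv, y)

print("clean probability of class 1:   ", sigmoid(np.dot(w, x) + b))
print("attacked probability of class 1:", sigmoid(np.dot(w, x_adv) + b))
print("loss before and after attack:   ", loss_clean, loss_adv)
```

In this toy setting the perturbation is bounded by `epsilon` in every coordinate, yet it is enough to push the predicted probability across the 0.5 decision threshold. The step size is large relative to the input here only because the example has three features; attacks on image classifiers typically spread a much smaller change over many pixels.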

The Inherent Trade-off Between Accuracy and Robustness

Deep neural networks have been lauded for their ability to approximate a continuous function to any desired level of accuracy. However, recent empirical and theoretical evidence suggests that minor, non-random perturbations can easily disrupt these networks, leading to high-confidence yet erroneous predictions. This raises significant concerns about the reliability of technologies that rely on state-of-the-art deep learning. The question then arises: is the observed trade-off between accuracy and robustness an intrinsic and universal property of these networks? If so, this would warrant a comprehensive exploration into the foundations of deep learning.

The Uncertainty Principle in Neural Networks

The study uncovers an intrinsic characteristic of neural networks: their vulnerability shares a mathematical equivalence with the uncertainty principle in quantum physics. The equivalence appears when gradient-based attacks on the inputs are treated as the conjugate variables of those inputs. The network cannot measure both quantities, the input and its conjugate variable, with arbitrary certainty at the same time, and this leads to the observed accuracy-robustness trade-off. The phenomenon, similar to the uncertainty principle in quantum physics, offers a more precise understanding of the limitations inherent in neural networks.

Conjugate Variables as Attacks

In quantum mechanics, the concept of conjugate variables plays a critical role in understanding the fundamentals of particle behavior. By analogy, the features of the input data provided to a neural network can be conceptualized as feature operators, while the gradients of the loss function with respect to these inputs can be viewed as attack operators. This analogy leads to an uncertainty relation for neural networks that mirrors Heisenberg’s uncertainty principle in quantum mechanics. The relation suggests that there is an intrinsic limit on how precisely features and attacks can both be measured at the same time, which reveals an inherent vulnerability of neural networks.
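
For reference, the quantum relation that the analogy draws on can be written down explicitly. In standard notation, with position $x$, momentum $p$, and reduced Planck constant $\hbar$, Heisenberg’s principle reads:

$$\Delta x \, \Delta p \ge \frac{\hbar}{2}$$

As a schematic sketch only, the network analogue described above would take the same shape, with the spread of a feature operator $\hat{x}_i$ and the spread of its attack operator $\hat{p}_i$ bounded from below:

$$\Delta x_i \, \Delta p_i \ge c, \qquad c > 0$$

The symbols $\hat{x}_i$, $\hat{p}_i$, and the constant $c$ are illustrative notation assumed here. The exact definitions and the value of the bound are those of the study and are not given in this article.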

The Manifestation of the Uncertainty Principle in Neural Networks

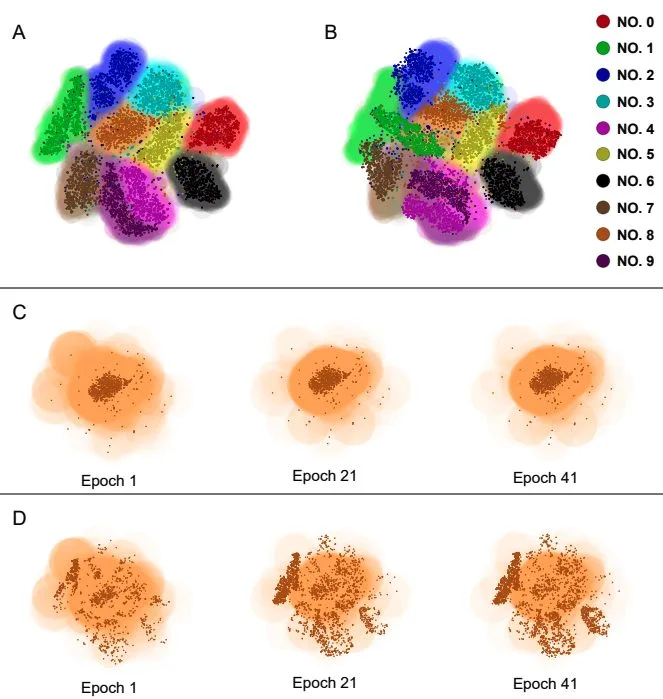

The study visualizes how the uncertainty principle manifests within neural networks, using the MNIST dataset as a representative example. The visualization reveals a trade-off: a reduction in the effective radius of the trained class corresponds to an increase in the effective radius of the attacked points. These two radii can be read as visual representations of the two uncertainties, highlighting the balance between precision and vulnerability in neural networks. According to the study, this uncertainty relation is what drives the accuracy-robustness trade-off.
