Researchers from the University of Technology Sydney and the Sydney Quantum Academy are exploring the intersection of Large Language Models (LLMs) and Quantum Machine Learning (QML). Their study focuses on implementing the Transformer architecture, which underlies ChatGPT, within a quantum computing paradigm. The team has designed quantum circuits that implement adapted versions of the Transformer’s core components and of the generative pre-training phase. The research aims to bridge the gap between QML advancements and state-of-the-art language models.

What is the Intersection of Large Language Models and Quantum Machine Learning?

The intersection of Large Language Models (LLMs) and Quantum Machine Learning (QML) is a relatively new field of study. LLMs such as ChatGPT have changed how people interact with Artificial Intelligence (AI) and what they expect of it, but the integration of these models with the emerging field of QML is still in its early stages. The paper, authored by Yidong Liao and Christopher Ferrie of the Centre for Quantum Software and Information at the University of Technology Sydney and the Sydney Quantum Academy, addresses this niche by detailing a framework for implementing the Transformer architecture, which underlies ChatGPT, within a quantum computing paradigm.

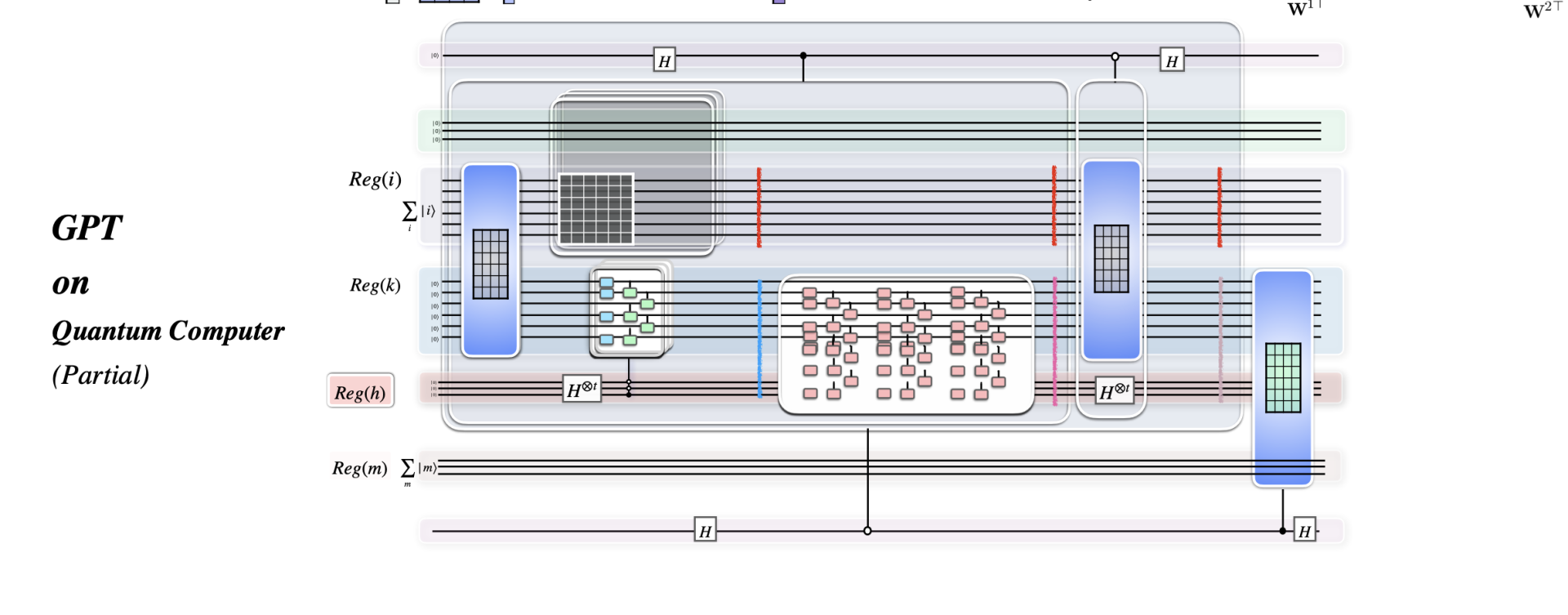

The researchers design quantum circuits that implement adapted versions of the Transformer’s core components and of the generative pre-training phase. Despite rapid progress in QML, little research so far connects QML advancements to state-of-the-art language models. The paper aims to bridge that gap by exploring a quantum implementation of GPT, the model family on which ChatGPT is built, and in doing so to open new avenues for QML research.

How is the Transformer Architecture Implemented on a Quantum Computer?

The Transformer architecture is a critical component of ChatGPT. The authors detail a quantum circuit design both for the architecture itself and for its generative pre-training. Rather than running the classical model unchanged, the circuits implement adapted versions of the Transformer’s core components.

The FeedForward Network (FFN) is a fully connected feedforward module that operates separately and identically on each position of the input sequence. It is applied after the multi-head attention module and the residual connection. On a quantum computer, the FFN is implemented using a series of controlled parameterised quantum circuits, and the implementation is evaluated using the Parallel Swap test.
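For orientation, the classical module being adapted can be sketched in a few lines of NumPy. This is a minimal sketch of a standard position-wise FFN, not the quantum circuit from the paper; the dimensions, the random weights, and the ReLU activation are assumptions chosen for illustration.

```python
import numpy as np

def position_wise_ffn(x, w1, b1, w2, b2):
    """Apply the same two-layer network to every row (position) of x."""
    # ReLU is an assumption made for brevity; other activations fit the same shape.
    hidden = np.maximum(0.0, x @ w1 + b1)
    return hidden @ w2 + b2

# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 4, 8, 32
x = rng.normal(size=(seq_len, d_model))
w1 = rng.normal(size=(d_model, d_ff))
b1 = np.zeros(d_ff)
w2 = rng.normal(size=(d_ff, d_model))
b2 = np.zeros(d_model)

out = position_wise_ffn(x, w1, b1, w2, b2)

# "Separately and identically": processing position 0 on its own
# gives the same vector as processing the whole sequence at once.
row0 = position_wise_ffn(x[:1], w1, b1, w2, b2)
print(out.shape, np.allclose(out[0], row0[0]))
```

The two weight matrices `w1` and `w2` are the pieces that a quantum version would have to replace with trainable circuits.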

What is the Role of Quantum Circuits in this Implementation?

Quantum circuits are the building blocks of this implementation. To realise the FFN, the authors construct a trainable unitary made up of a series of controlled parameterised quantum circuits. The circuit’s input state is evaluated using the Parallel Swap test for each input and parameter.
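The idea behind a swap test can be illustrated with a small state-vector simulation. The sketch below implements the standard single swap test, which is an assumption here: the paper's Parallel Swap test may differ in its details. The function name `swap_test_p0` is hypothetical. For normalised states a and b, the ancilla is measured in |0> with probability (1 + |<a|b>|^2) / 2.

```python
import numpy as np

def swap_test_p0(a, b):
    """Probability of measuring the ancilla in |0> after a standard swap test."""
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    n = a.size
    # Qubit ordering (a convention of this sketch): ancilla, register A, register B.
    psi = np.kron(np.array([1.0, 0.0]), np.kron(a, b)).astype(complex)
    h = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
    h_anc = np.kron(h, np.eye(n * n))
    # SWAP exchanges the two registers: |i>|j> -> |j>|i>.
    swap = np.zeros((n * n, n * n))
    for i in range(n):
        for j in range(n):
            swap[j * n + i, i * n + j] = 1.0
    # Controlled-SWAP: identity if the ancilla is |0>, SWAP if it is |1>.
    zeros = np.zeros((n * n, n * n))
    cswap = np.block([[np.eye(n * n), zeros], [zeros, swap]])
    psi = h_anc @ cswap @ h_anc @ psi
    # The first n*n amplitudes belong to the ancilla-|0> branch.
    return float(np.sum(np.abs(psi[: n * n]) ** 2))

same = swap_test_p0(np.array([1.0, 0.0]), np.array([1.0, 0.0]))
orth = swap_test_p0(np.array([1.0, 0.0]), np.array([0.0, 1.0]))
print(same, orth)  # the squared overlap is recovered as 2 * p0 - 1
```

On hardware the probability is estimated from repeated measurements rather than read off the state vector, so the overlap is only known up to sampling error.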

The FFN circuit begins by applying a Hadamard gate to the bottom ancillary qubit, followed by a controlled unitary, which produces an intermediate output state. Controlled rotation and uncomputation are then applied. To the resulting state, the circuit applies a further trainable unitary: a parameterised quantum circuit that implements the FFN’s second weight matrix.
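The first step described above, a Hadamard gate on an ancilla followed by a controlled unitary, can be sketched as a state-vector calculation. This is an illustration under stated assumptions, not the paper's circuit: the trainable unitary is stood in for by a single-qubit RY rotation, the helper names `ry` and `hadamard_then_controlled` are hypothetical, and the ancilla is placed first in the tensor product purely as a convention. Starting from |0>|psi>, the result is (|0>|psi> + |1>U|psi>) / sqrt(2).

```python
import numpy as np

def ry(theta):
    """Single-qubit RY rotation, used here as a stand-in parameterised unitary."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

def hadamard_then_controlled(u, psi):
    """Hadamard on the ancilla, then apply u to the data register if ancilla is |1>."""
    dim = psi.size
    h = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
    # Ancilla starts in |0>; it is the first tensor factor in this sketch.
    state = np.kron(np.array([1.0, 0.0]), psi)
    state = np.kron(h, np.eye(dim)) @ state
    zeros = np.zeros((dim, dim))
    controlled_u = np.block([[np.eye(dim), zeros], [zeros, u]])
    return controlled_u @ state

theta = np.pi / 2  # hypothetical trainable parameter
psi = np.array([1.0, 0.0])
u = ry(theta)
state = hadamard_then_controlled(u, psi)

# The two halves of the state vector are the ancilla-|0> and ancilla-|1> branches.
print(np.allclose(state[:2], psi / np.sqrt(2)),
      np.allclose(state[2:], (u @ psi) / np.sqrt(2)))
```

Changing `theta` changes only the ancilla-|1> branch, which is what makes the unitary trainable in this kind of construction.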

How Does this Research Contribute to the Field of Quantum Machine Learning?

The research contributes to Quantum Machine Learning by exploring a quantum implementation of GPT, the model family on which ChatGPT is built. The authors aim to bridge the gap between advancements in QML and state-of-the-art language models, and in doing so to open new avenues for QML research.

Its main contribution is a framework for implementing the Transformer architecture within a quantum computing paradigm, including quantum circuit designs for adapted versions of the Transformer’s core components and for the generative pre-training phase.

What are the Future Implications of this Research?

The implications are still speculative, but potentially broad. Integrating quantum computing with LLMs could open new avenues for research in QML, and a quantum implementation of GPT could eventually lead to advances in both AI and quantum computing.

The research could also pave the way for more advanced quantum circuits and for implementing more complex architectures on a quantum computer. The techniques it introduces may carry over to other areas of quantum computing and AI.

Publication details: “GPT on a Quantum Computer”

Publication Date: 2024-03-14

Authors: Yidong Liao and Christopher Ferrie

Source: arXiv (Cornell University)

DOI: https://doi.org/10.48550/arxiv.2403.09418