Apple researchers have developed a new method for building performant multimodal large language models (MLLMs). Their model, Apple MM1, uses a mix of image caption, interleaved image-text, and text-only data for pre-training, which they found crucial for achieving state-of-the-art results. They also found that the image encoder and image resolution had a significant impact, while the design of the vision-language connector was less critical. The Apple MM1 model, which can scale up to 30 billion parameters, demonstrated enhanced in-context learning and multi-image reasoning capabilities.


Building Multimodal Large Language Models: The Apple MM1

What is an LLM?

Large Language Models (LLMs) drew worldwide attention with the release of ChatGPT from OpenAI. LLMs are advanced artificial intelligence systems designed to understand, generate, and interact with human language. They are trained on vast datasets comprising text from the internet, books, articles, and other sources. This extensive training enables them to grasp the nuances of language, including grammar, context, and even cultural references.

What is an MLLM?

MLLMs (multimodal LLMs), on the other hand, extend LLMs by processing and integrating information from multiple data types, including but not limited to text, images, audio, and video. This multimodal approach allows MLLMs to handle inputs in which different types of information complement each other. For instance, an MLLM can analyze a document that contains both text and images, using the relationship between the visual and textual information to generate more accurate summaries or responses.

The development of Multimodal Large Language Models (MLLMs) has been a significant advancement in the field of artificial intelligence. These models, which can process image and text data, have shown impressive results across various benchmarks. However, the process of building these models and the factors contributing to their performance are poorly understood. This article summarizes a comprehensive study on the construction of MLLMs, focusing on the Apple MM1 model developed by a team of researchers at Apple.

The construction of MLLMs involves several key components, including the image encoder, the vision-language connector, and the pre-training data. The image encoder converts visual data into a format the model can process. The vision-language connector is the component that integrates the processed visual data with the text data. The pre-training data is the information the model is trained on before fine-tuning for specific tasks.
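To make the data flow between these components concrete, here is a minimal sketch in plain Python. Everything in it is a hypothetical stand-in rather than MM1's actual implementation: `image_encoder` emits random features instead of running a real vision model, `connector` is a single linear projection (the article does not say which connector design MM1 uses), and the dimensions are toy values.

```python
import random

def image_encoder(image, patch_size, vision_dim, rng):
    """Stand-in encoder: emit one feature vector per non-overlapping patch."""
    rows, cols = len(image), len(image[0])
    n_patches = (rows // patch_size) * (cols // patch_size)
    # A real encoder computes features from pixels; random values keep the sketch short.
    return [[rng.random() for _ in range(vision_dim)] for _ in range(n_patches)]

def connector(visual_tokens, weight):
    """Project each visual token from vision_dim to llm_dim with a linear map."""
    columns = list(zip(*weight))  # weight has shape vision_dim x llm_dim
    return [[sum(v * w for v, w in zip(token, col)) for col in columns]
            for token in visual_tokens]

def build_input_sequence(image, text_embeddings, patch_size, weight, rng):
    """Return the projected image tokens followed by the text token embeddings."""
    visual = image_encoder(image, patch_size, len(weight), rng)
    return connector(visual, weight) + text_embeddings

rng = random.Random(0)
image = [[0.0] * 4 for _ in range(4)]                          # toy 4x4 "image"
weight = [[rng.random() for _ in range(5)] for _ in range(3)]  # vision_dim=3, llm_dim=5
text = [[0.0] * 5 for _ in range(2)]                           # two text token embeddings
sequence = build_input_sequence(image, text, 2, weight, rng)
print(len(sequence), len(sequence[0]))  # 4 image tokens + 2 text tokens, each of size 5
```

The point of the sketch is the interface: the language model only ever sees a single sequence of vectors in its own embedding size, and the connector is what makes the image features fit that shape.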

The study found that the image encoder and the pre-training data are crucial for the performance of MLLMs. The image encoder’s resolution and the number of image tokens significantly impact the model’s performance. On the other hand, the design of the vision-language connector was found to be of comparatively negligible importance.
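Resolution and token count are linked. As a rough illustration, assuming a ViT-style encoder that cuts a square image into non-overlapping patches, the number of image tokens grows with the square of the resolution. The patch size of 14 below is an assumption chosen for the example; the article does not state MM1's patch size, and a connector may pool these tokens down to a smaller number.

```python
def num_image_tokens(resolution, patch_size):
    """Tokens produced by a ViT-style encoder on a square image, before any pooling."""
    if resolution % patch_size != 0:
        raise ValueError("resolution must be a multiple of patch_size")
    per_side = resolution // patch_size
    return per_side * per_side

for res in (224, 336, 448):
    print(res, num_image_tokens(res, patch_size=14))
# 224 256
# 336 576
# 448 1024
```

Doubling the resolution quadruples the token count, which is why higher-resolution inputs give the model more visual detail at a noticeably higher compute cost.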

The type of pre-training data used also plays a significant role in the model’s performance. The study found that a mix of image-caption, interleaved image-text, and text-only data is crucial for achieving state-of-the-art results across multiple benchmarks.
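One simple way to realize such a mix is to draw each training example's source at random according to fixed weights. The sketch below shows that idea only. The weights in `MIXTURE` are placeholders for illustration, not the ratio reported by the study, and the source names are hypothetical labels.

```python
import random
from collections import Counter

# Placeholder weights for illustration only; not the ratio used in the study.
MIXTURE = {"image_caption": 0.4, "interleaved": 0.4, "text_only": 0.2}

def sample_sources(mixture, n, seed=0):
    """Draw n data-source names, each chosen with probability given by its weight."""
    rng = random.Random(seed)
    names = list(mixture)
    weights = [mixture[name] for name in names]
    return rng.choices(names, weights=weights, k=n)

batch = sample_sources(MIXTURE, n=10_000)
counts = Counter(batch)
for name in MIXTURE:
    print(name, counts[name] / len(batch))  # observed share, close to the configured weight
```

Changing the weights and retraining is how one would test which mix works best, the kind of ablation the study describes.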

The researchers also explored the impact of scaling up the model by using larger language models and mixture-of-experts (MoE) models. The scaled-up models outperformed most comparable prior work, demonstrating the potential of scaling up MLLMs.
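For readers unfamiliar with MoE, the core idea is that a router sends each token to a small number of expert sub-networks and combines their outputs. Below is a generic top-k routing sketch, not MM1's configuration: the article gives no routing details, so the number of experts, the choice of `k=2`, and the toy experts are all assumptions. Real implementations also differ in details such as where the softmax is applied and how expert load is balanced.

```python
import math

def top_k_route(router_logits, k):
    """Return [(expert_index, weight)] for the k highest-scoring experts."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    # Softmax over the selected logits only, so the k weights sum to 1.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_layer(token, experts, router_logits, k=2):
    """Weighted sum of the outputs of the k selected experts for one token."""
    output = [0.0] * len(token)
    for index, weight in top_k_route(router_logits, k):
        expert_out = experts[index](token)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output

# Four toy experts that each scale the token by a different factor.
experts = [lambda t, s=s: [s * x for x in t] for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 2.0]  # hypothetical router scores for one token
routes = top_k_route(logits, k=2)
mixed = moe_layer([1.0, 1.0], experts, logits, k=2)
print(routes, mixed)
```

Only two of the four experts run for this token, which is how MoE models add parameters without a proportional increase in compute per token.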

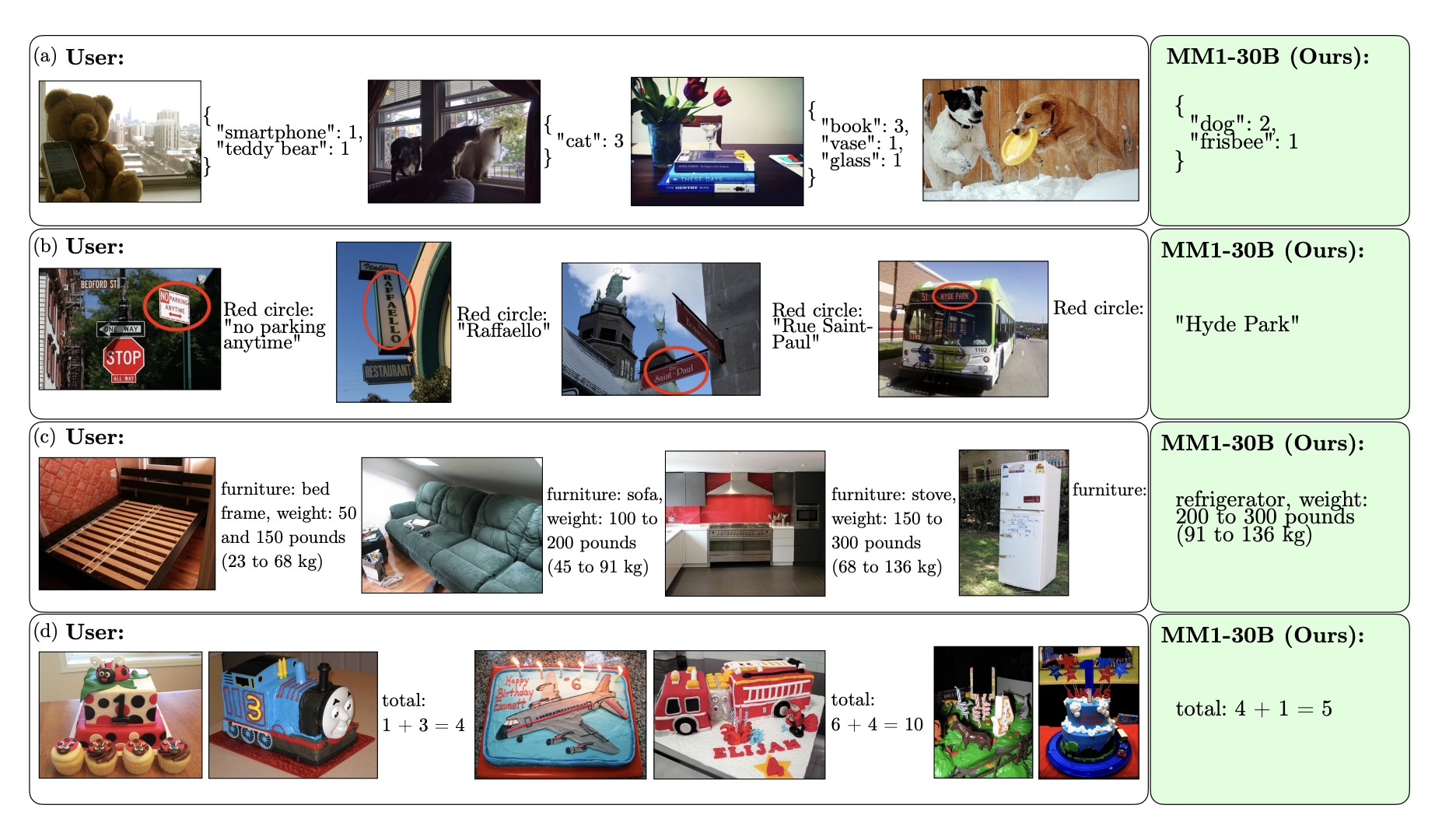

Thanks to large-scale multimodal pre-training, MLLMs exhibit enhanced capabilities such as in-context prediction, multi-image reasoning, and few-shot learning after instruction tuning. These capabilities make MLLMs a powerful tool for various applications in artificial intelligence.

The Apple MM1 study provides valuable insights into the construction of MLLMs and the factors that contribute to their performance. The findings suggest that careful consideration of the image encoder and the pre-training data is crucial for building performant MLLMs. The study also demonstrates the potential of scaling up these models to achieve better performance. These insights could guide future research in the field and contribute to developing more advanced MLLMs.

Apple MM1 Comparisons with Existing Models
