Some of the world’s leading chip makers and designers, such as ARM, AMD and NVIDIA, are not actively building quantum chips. Intel, by contrast, has been working on its semiconductor-based quantum chips for a while now. NVIDIA is known for producing graphics cards, or GPUs, which are now used well beyond graphics to speed up computational workflows and accelerate certain applications. It should come as no surprise that NVIDIA sees a future in quantum and has now released a framework, or SDK, for simulating quantum circuits.

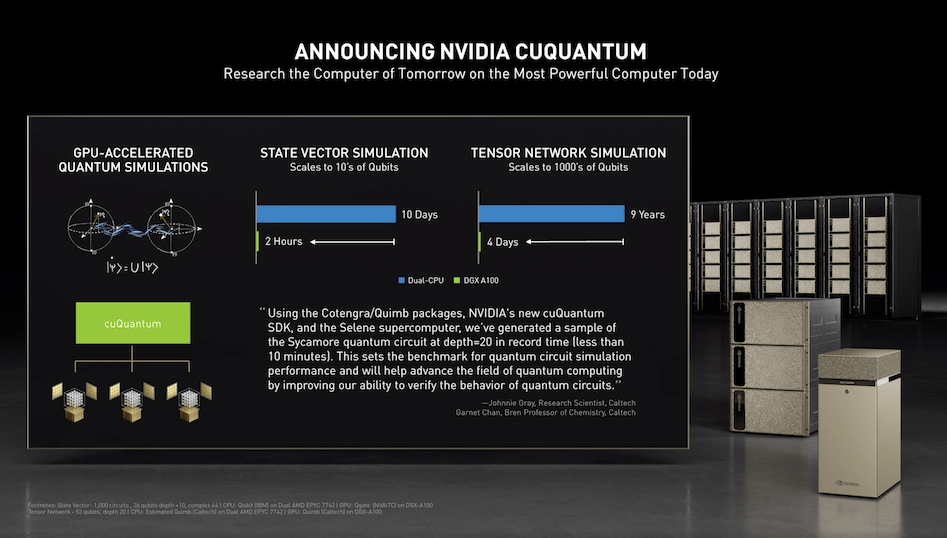

According to NVIDIA, cuQuantum is an SDK of optimised libraries and tools for accelerating quantum computing workflows. The chip maker is not actually making a push towards a quantum device (as far as we know), but developers can use cuQuantum to speed up quantum circuit simulations based on state vector, density matrix, and tensor network methods by orders of magnitude. A great many quantum circuits never run on actual quantum hardware and must therefore be simulated. So the news that a major chip maker is supporting quantum, by helping users speed up the development of quantum algorithms, will be welcome to quantum developers. Of course, the eventual aim is that quantum computers will ultimately be impossible to simulate, because they can offer a massive speed-up over classical algorithms.
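To make "state vector simulation" concrete, here is a minimal CPU-only sketch in plain NumPy. It does not use cuQuantum or any NVIDIA API; the helper names (`apply_single_qubit_gate`, `apply_cnot`) and the convention that qubit 0 is the most significant bit are assumptions made purely for illustration. It prepares a two-qubit Bell state, which is the kind of gate-by-gate update that a GPU-accelerated simulator performs on far larger vectors.

```python
import numpy as np

def apply_single_qubit_gate(state, gate, target, n_qubits):
    """Apply a 2x2 gate to the `target` qubit of an n-qubit state vector."""
    psi = state.reshape([2] * n_qubits)            # one axis per qubit
    psi = np.tensordot(gate, psi, axes=([1], [target]))
    psi = np.moveaxis(psi, 0, target)              # restore the axis order
    return psi.reshape(-1)

def apply_cnot(state, control, target, n_qubits):
    """Flip the `target` qubit wherever the `control` qubit is 1."""
    psi = state.reshape([2] * n_qubits).copy()
    idx = [slice(None)] * n_qubits
    idx[control] = 1                               # select the control = 1 slice
    # The control axis is dropped by the integer index, so later axes shift by one.
    t_axis = target if target < control else target - 1
    psi[tuple(idx)] = np.flip(psi[tuple(idx)], axis=t_axis).copy()
    return psi.reshape(-1)

n_qubits = 2
state = np.zeros(2 ** n_qubits, dtype=np.complex128)
state[0] = 1.0                                     # start in |00>

hadamard = np.array([[1, 1], [1, -1]], dtype=np.complex128) / np.sqrt(2)

state = apply_single_qubit_gate(state, hadamard, target=0, n_qubits=n_qubits)
state = apply_cnot(state, control=0, target=1, n_qubits=n_qubits)

probabilities = np.abs(state) ** 2                 # measurement probabilities
print(probabilities)
```

The vector holds one complex amplitude per basis state, so its length doubles with every added qubit. That doubling is why large simulations benefit from GPU memory bandwidth and parallelism.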

There is already a growing array of tools and technologies in the quantum ecosystem that allow developers to program in languages such as Python and C#, using frameworks such as Qiskit, Cirq and many more.

“This sets the benchmark for quantum circuit simulation performance and will help advance the field of quantum computing by improving our ability to verify the behaviour of quantum circuits”

Garnet Chan, chemistry professor at Caltech, on NVIDIA’s development

Using its Selene supercomputer, NVIDIA has used cuQuantum (the SDK) and A100 Tensor Core GPUs to generate a sample from a full-circuit simulation of the Google Sycamore processor in 10 minutes. In late 2019, this task was thought to take days on millions of CPU cores. Such a speed-up is likely to be of interest to quantum researchers around the globe.

To give some idea of the simulation ability: an NVIDIA DGX A100 with eight NVIDIA A100 80GB Tensor Core GPUs can simulate up to 36 qubits, delivering an orders-of-magnitude speed-up over a dual-socket CPU server on leading state vector simulations. That is potentially hundreds of times faster than existing CPUs. As you’d expect, the performance is optimised for NVIDIA hardware, and developers can work in a high-level language such as Python while still accessing the raw power of the chip.
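The 36-qubit figure can be sanity-checked with some back-of-the-envelope arithmetic. The sketch below assumes single-precision complex amplitudes (8 bytes each), decimal gigabytes, and no workspace overhead; none of these details are stated in the announcement, and the function names are illustrative only.

```python
def state_vector_bytes(n_qubits, bytes_per_amplitude=8):
    """Memory needed to store all 2**n amplitudes of an n-qubit state vector."""
    return (2 ** n_qubits) * bytes_per_amplitude

def max_qubits(memory_bytes, bytes_per_amplitude=8):
    """Largest qubit count whose full state vector fits in the given memory."""
    n = 0
    while state_vector_bytes(n + 1, bytes_per_amplitude) <= memory_bytes:
        n += 1
    return n

# Assumption: eight GPUs with 80 GB each, counted as decimal gigabytes.
gpu_memory_bytes = 8 * 80 * 10**9

for n in (34, 35, 36, 37):
    needed = state_vector_bytes(n)                 # complex64: 8 bytes per amplitude
    verdict = "fits" if needed <= gpu_memory_bytes else "does not fit"
    print(f"{n} qubits: {needed / 10**9:.1f} GB ({verdict})")

print("max qubits, complex64: ", max_qubits(gpu_memory_bytes, 8))
print("max qubits, complex128:", max_qubits(gpu_memory_bytes, 16))
```

Under these assumptions, a 36-qubit state vector needs about 550 GB and fits in the 640 GB of combined GPU memory, while 37 qubits (about 1,100 GB) would not. Each extra qubit doubles the requirement, which is why state vector simulation runs out of memory so quickly.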

The first production release of cuQuantum is expected later this year (2021). In the meantime, more information can be found on the cuQuantum website, and a version is available to download now for users to explore.