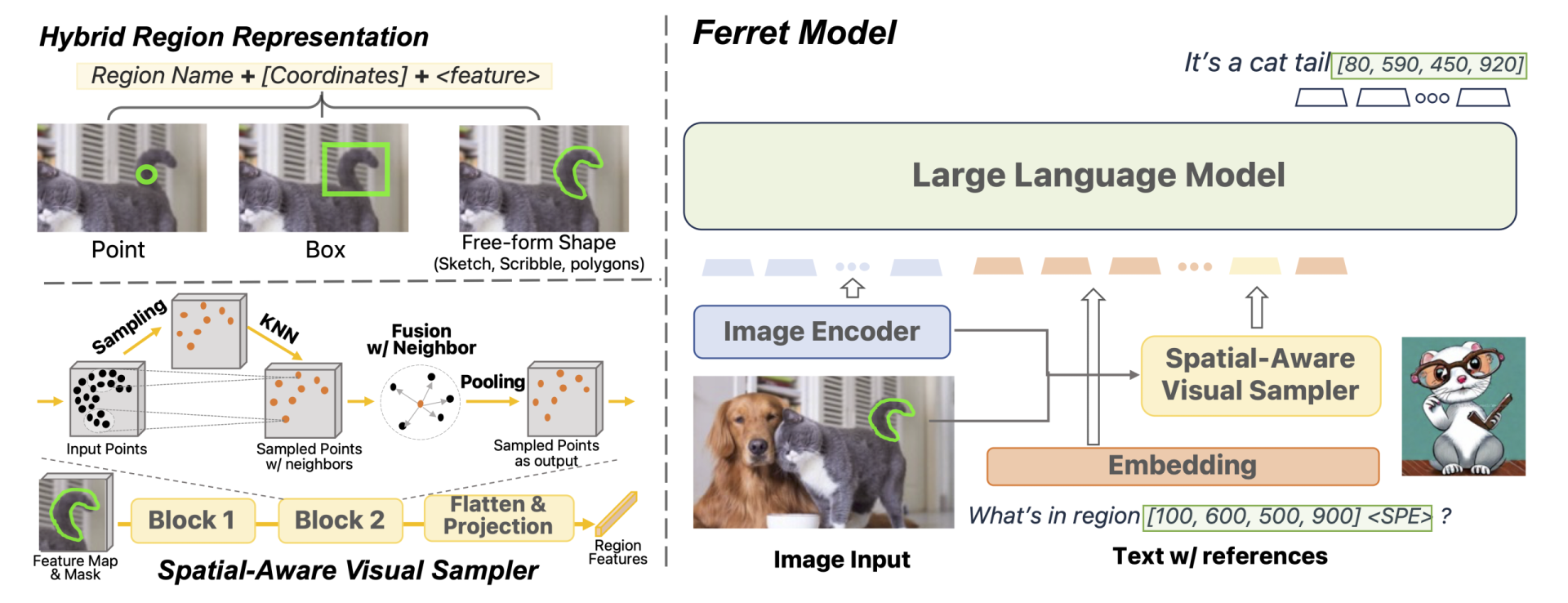

Ferret, a new end-to-end Multimodal Large Language Model (MLLM), has been developed by Apple and researchers Haoxuan You, Haotian Zhang, Zhe Gan, Xianzhi Du, Bowen Zhang, Zirui Wang, Liangliang Cao, Shih-Fu Chang, and Yinfei Yang. The model uses a hybrid region representation and a spatial-aware visual sampler for fine-grained, open-vocabulary referring and grounding. The team also created GRIT, a large-scale, hierarchical, robust ground-and-refer instruction tuning dataset, and Ferret-Bench, a multimodal evaluation benchmark.

Introduction to Ferret: A Groundbreaking MLLM

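Ferret is an end-to-end Multimodal Large Language Model (MLLM) that can refer to and ground anything, anywhere in an image, at any granularity. Here, referring means understanding a region that the user points to, and grounding means locating in the image the objects that a piece of text describes. The team’s research paper gives an in-depth look at the model’s capabilities and potential applications.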

Ferret’s key contributions to MLLM research are a hybrid region representation and a spatial-aware visual sampler. The hybrid representation describes an image region with both discrete coordinates and continuous visual features, and the sampler extracts those features from regions of varying shape and sparsity. Together they enable fine-grained, open-vocabulary referring and grounding: the model can accept points, bounding boxes, and free-form shapes as input and is not limited to a fixed set of object categories. This allows more precise and nuanced language and vision processing.
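The idea of a hybrid region representation can be illustrated with a small sketch. This is not the paper's implementation: the names `HybridRegion` and `hybrid_region`, the `num_bins` quantization, and the plain mean pooling (a simple stand-in for the spatial-aware visual sampler) are all assumptions made for illustration. The sketch pairs a discrete part (quantized bounding-box coordinates of a free-form mask) with a continuous part (a feature vector pooled over the masked pixels).

```python
from dataclasses import dataclass
from typing import List, Tuple

Mask = List[List[int]]                 # H x W binary mask, 1 = inside the region
FeatureMap = List[List[List[float]]]   # H x W x C image features


@dataclass
class HybridRegion:
    """Hypothetical container for the two parts of a region description."""
    box_bins: Tuple[int, int, int, int]  # discrete part: quantized x1, y1, x2, y2
    feature: List[float]                 # continuous part: pooled region feature


def hybrid_region(mask: Mask, features: FeatureMap, num_bins: int = 1000) -> HybridRegion:
    """Build a discrete-plus-continuous description of a free-form region.

    Assumption: mean pooling over masked pixels replaces the paper's
    spatial-aware visual sampler, and num_bins is an illustrative choice.
    """
    height, width = len(mask), len(mask[0])
    points = [(x, y) for y in range(height) for x in range(width) if mask[y][x]]
    if not points:
        raise ValueError("region mask is empty")

    xs = [x for x, _ in points]
    ys = [y for _, y in points]

    def quantize(value: int, size: int) -> int:
        # Map a pixel index to one of num_bins discrete coordinate values.
        return min(num_bins - 1, value * num_bins // size)

    box_bins = (
        quantize(min(xs), width),
        quantize(min(ys), height),
        quantize(max(xs), width),
        quantize(max(ys), height),
    )

    # Average each feature channel over the pixels inside the mask.
    channels = len(features[0][0])
    pooled = [
        sum(features[y][x][c] for x, y in points) / len(points)
        for c in range(channels)
    ]
    return HybridRegion(box_bins, pooled)


# Toy 4x4 image whose 2-channel feature at (x, y) is simply [x, y].
demo_features = [[[float(x), float(y)] for x in range(4)] for y in range(4)]
# An L-shaped free-form region that a single box would only approximate.
demo_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
demo_region = hybrid_region(demo_mask, demo_features, num_bins=4)
print(demo_region)
```

In this sketch the coordinates alone cannot distinguish the L-shaped region from its enclosing box, while the pooled feature depends on exactly which pixels are selected, which is the motivation for carrying both parts.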

The GRIT Dataset

Alongside the Ferret model, the team built the GRIT dataset, a large-scale, hierarchical, robust ground-and-refer instruction tuning dataset with approximately 1.1 million entries. GRIT serves as Ferret’s instruction tuning data: it supplies the examples from which the model learns to refer and ground.

Ferret-Bench: A Multimodal Evaluation Benchmark

The team also introduced Ferret-Bench, a multimodal evaluation benchmark whose tasks jointly require referring/grounding, semantics, knowledge, and reasoning. In other words, a single task tests whether a model can refer to and ground objects and concepts, understand what they mean, apply relevant knowledge, and reason about them, rather than measuring each skill in isolation.

Summary

The Ferret model, the GRIT dataset, and the Ferret-Bench benchmark together advance referring and grounding in MLLMs. They enable more precise and nuanced language and vision processing, which could extend what AI systems can do with images. The team’s research paper describes all three in detail and is a useful resource for anyone interested in MLLMs.

- The article discusses Ferret, an end-to-end Multimodal Large Language Model (MLLM) developed by Haoxuan You, Haotian Zhang, Zhe Gan, Xianzhi Du, Bowen Zhang, Zirui Wang, Liangliang Cao, Shih-Fu Chang, and Yinfei Yang.

- The model uses a hybrid region representation and a spatial-aware visual sampler to enable fine-grained and open-vocabulary referring and grounding in MLLMs.

- The team also created the GRIT Dataset, a large-scale, hierarchical, robust ground-and-refer instruction tuning dataset, containing approximately 1.1 million entries.

- Additionally, they developed Ferret-Bench, a multimodal evaluation benchmark that requires referring/grounding, semantics, knowledge, and reasoning.

- Ferret aims to refer to and ground anything, anywhere, at any granularity: it accepts any form of region reference as input and can ground anything in its response.