Microsoft has developed a new family of small language models (SLMs) called Phi-3, which it describes as the most capable and cost-effective SLMs available. These models, including Phi-3-mini, outperform models twice their size while requiring fewer computing resources. They are designed for simpler tasks and can be fine-tuned to meet specific needs. The Phi-3 models will be available in the Microsoft Azure AI Model Catalog and on Hugging Face and Ollama. Microsoft’s Ronen Eldan, Sebastien Bubeck, Sonali Yadav, and Luis Vargas were key individuals involved in this development.

The Emergence of Small Language Models

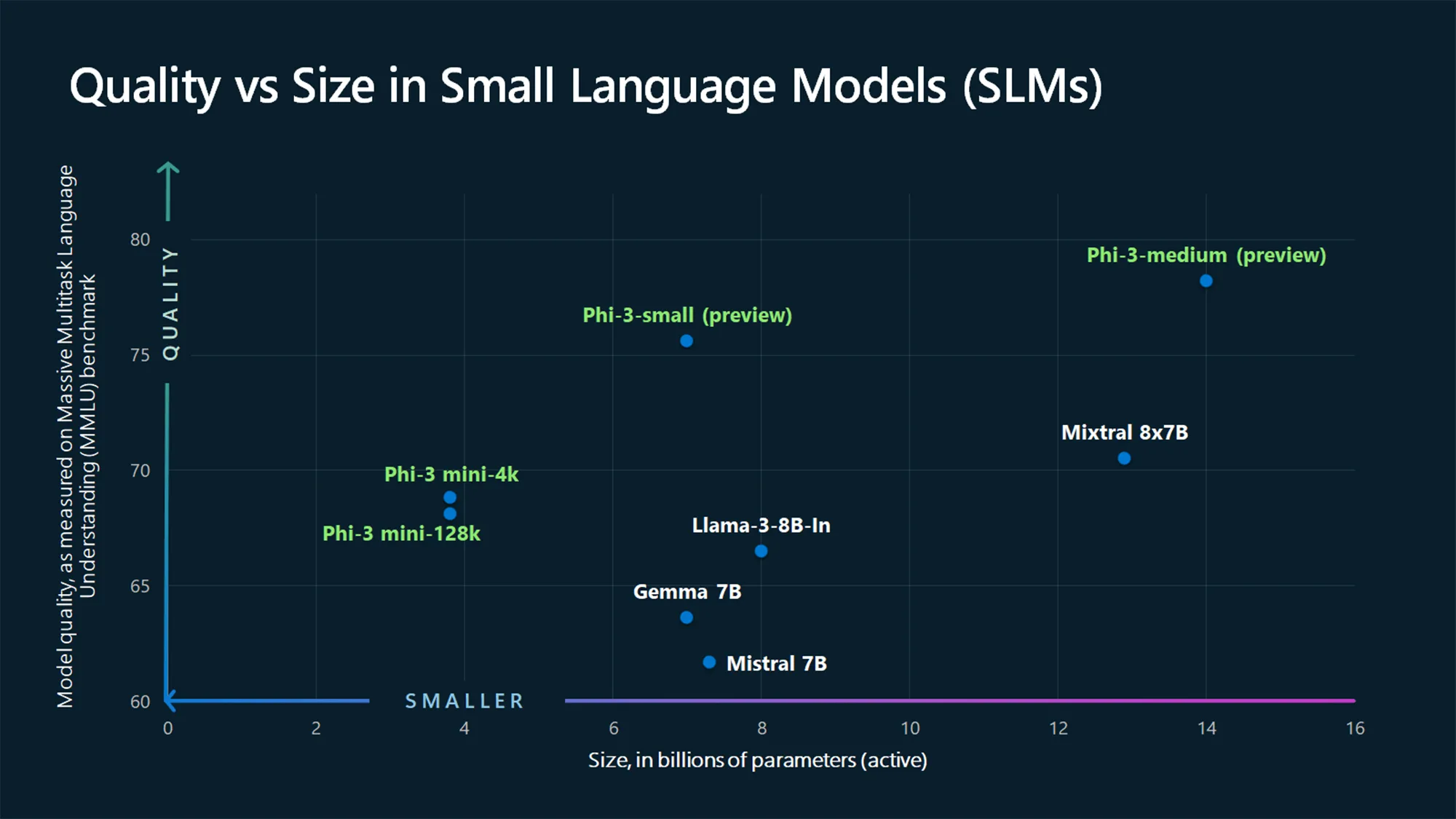

One persistent challenge in artificial intelligence (AI) is the size of large language models (LLMs), which, while powerful, require significant computing resources to operate. This has led to the development of small language models (SLMs), which offer many of the same capabilities as LLMs but are smaller and trained on less data. Microsoft, for instance, recently announced the Phi-3 family of open models, which it touts as the most capable and cost-effective SLMs available. According to Microsoft, these models outperform others of the same size and the next size up across various benchmarks, thanks to training innovations developed by its researchers.

The Potential of Small Language Models

SLMs are designed to perform well on simpler tasks, and they are more accessible and easier to use for organizations with limited resources. They can also be fine-tuned to meet specific needs. The choice between a large and a small language model depends on an organization’s specific needs, the complexity of the task, and the available resources. SLMs suit organizations building applications that run locally on a device, as well as tasks that either do not require extensive reasoning or need a quick response. They may also suit regulated industries and sectors that need high-quality results but want to keep data on their own premises.

The Role of High-Quality Data in Training Small Language Models

The development of SLMs that deliver outsized results in a small package was enabled by a highly selective approach to training data. Instead of training on raw web data, Microsoft researchers built a discrete dataset starting from a list of 3,000 words. They repeatedly asked a large language model to write a children’s story using one noun, one verb, and one adjective from the list, producing millions of tiny children’s stories. This dataset, dubbed “TinyStories,” was used to train very small language models of around 10 million parameters. The researchers then extended this approach, selecting data the way a teacher breaks down difficult concepts for a student, which makes the material much easier for a language model to read and understand.
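The word-sampling step described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Microsoft’s code: the tiny word lists, the prompt wording, and the function names `make_story_prompt` and `make_prompts` are all assumptions, and the call to an actual large language model is omitted.

```python
import random

# Hypothetical stand-in vocabulary; the real list had about 3,000 words.
# These few words are illustrative only.
NOUNS = ["dog", "ball", "tree", "cake", "boat"]
VERBS = ["jump", "share", "find", "sing", "climb"]
ADJECTIVES = ["happy", "tiny", "shiny", "brave", "sleepy"]


def make_story_prompt(rng: random.Random) -> tuple[str, dict[str, str]]:
    """Sample one noun, one verb and one adjective and build a story prompt.

    The prompt wording is an assumption, not the one Microsoft used.
    """
    words = {
        "noun": rng.choice(NOUNS),
        "verb": rng.choice(VERBS),
        "adjective": rng.choice(ADJECTIVES),
    }
    prompt = (
        "Write a short story that a young child could understand. "
        f"The story must use the noun '{words['noun']}', "
        f"the verb '{words['verb']}' and the adjective '{words['adjective']}'."
    )
    return prompt, words


def make_prompts(n: int, seed: int = 0) -> list[str]:
    """Generate n prompts; each would be sent to a large model to get one story."""
    rng = random.Random(seed)  # seeded so the same seed yields the same prompts
    return [make_story_prompt(rng)[0] for _ in range(n)]


if __name__ == "__main__":
    for p in make_prompts(3, seed=42):
        print(p)
```

Because each prompt draws a fresh combination, the variety of stories grows quickly with the vocabulary: this toy list allows 5 × 5 × 5 = 125 combinations, and a 3,000-word list allows vastly more.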

Managing and Mitigating Risks in Developing Small Language Models

While starting with carefully selected data helps reduce the likelihood of models returning unwanted or inappropriate responses, it is not sufficient to guard against all potential safety challenges. As with all its generative AI model releases, Microsoft’s product and responsible AI teams used a multi-layered approach to manage and mitigate risks in developing the Phi-3 models. This included providing further examples and feedback on how the models should ideally respond, which builds in another safety layer and helps the models generate high-quality results. Each model also undergoes assessment, testing, and manual red-teaming, in which experts identify and address potential vulnerabilities.

Choosing the Right-Size Language Model for the Right Task

Even SLMs trained on high-quality data have limitations. They are not designed for in-depth knowledge retrieval, where LLMs excel thanks to their greater capacity and training on much larger data sets. LLMs are also better than SLMs at complex reasoning over large amounts of information because of their size and processing power. However, Microsoft’s ongoing conversations with customers suggest that some companies may offload tasks that are not too complex to small models. For instance, a business could use Phi-3 to summarize the main points of a long document or to extract relevant insights and industry trends from market research reports. Another organization might use Phi-3 to generate copy for marketing or sales teams, such as product descriptions or social media posts.
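The trade-offs discussed in this section and in “The Potential of Small Language Models” can be condensed into a rule of thumb. The sketch below is purely hypothetical: the `TaskProfile` fields, the `choose_model_size` function, and especially the order in which the rules are checked (deployment constraints first) are assumptions for illustration, not Microsoft guidance.

```python
from dataclasses import dataclass


@dataclass
class TaskProfile:
    """Hypothetical description of a workload; field names are illustrative."""
    needs_complex_reasoning: bool     # reasoning over large amounts of information
    needs_broad_knowledge: bool       # in-depth knowledge retrieval
    must_run_on_device: bool          # local or offline deployment
    data_must_stay_on_premises: bool  # e.g. regulated industries


def choose_model_size(task: TaskProfile) -> str:
    """Return 'small' or 'large' using the article's rules of thumb."""
    # Assumption: hard deployment constraints take precedence over task complexity.
    if task.must_run_on_device or task.data_must_stay_on_premises:
        return "small"
    # Complex reasoning and in-depth knowledge retrieval are where LLMs excel.
    if task.needs_complex_reasoning or task.needs_broad_knowledge:
        return "large"
    # Simpler tasks (summarizing, extracting insights, drafting copy) can be offloaded.
    return "small"
```

For example, summarizing a long document on a laptop that must work offline maps to `"small"`, while open-ended analysis across a large body of knowledge with no deployment constraints maps to `"large"`.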
