Researchers Yunzhe Zheng and Keita Kanno have proposed a method to minimize readout-induced noise in early fault-tolerant quantum computers. The noise, which arises while reading the state of a quantum bit (qubit), degrades the accuracy of the logical state of the quantum system. The proposed method, called generalized syndrome measurement (GSM), requires only a single-shot measurement on a single ancilla, reducing readout overhead, idling time, and logical error rate. This method could broaden the applications of early fault-tolerant quantum computing, paving the way for more powerful and scalable quantum computers.

What is the Challenge with Early Fault-Tolerant Quantum Computers?

Quantum computers, with their potential to solve complex problems far beyond the reach of classical computers, have been the focus of intense research and development. However, early fault-tolerant quantum computers face a significant challenge: readout-induced noise. This noise, which arises during the process of reading the state of a quantum bit (qubit), is a major contributor to the inaccuracy of the logical state of the quantum system.

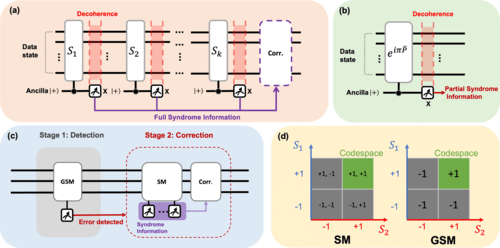

Reading out the state of a qubit is technically challenging and error-prone. For superconducting qubits, state-of-the-art readout takes from hundreds of nanoseconds to several microseconds, while the coherence time of scalable near-term hardware is only tens of microseconds. While the ancilla qubit (a helper qubit used in quantum error correction) is being measured, all data qubits sit idle and therefore suffer from decoherence noise. In addition, the state-of-the-art readout assignment error is on the order of 0.01, and it cannot be mitigated without repeated readouts.
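To get a feel for these numbers, here is a rough back-of-the-envelope sketch. It assumes a simple exponential-decay model, p = 1 - exp(-t / T), for the chance that an idling data qubit decoheres during a readout of duration t when the coherence time is T. The model, the function name, and the specific values (0.3, 1, and 3 microseconds of readout against a 30 microsecond coherence time) are illustrative assumptions picked from the ranges quoted above, not figures from the paper.

```python
import math


def idle_error_probability(idle_time_us: float, coherence_time_us: float) -> float:
    """Estimate the decoherence probability of one idling qubit.

    Assumes simple exponential decay: p = 1 - exp(-t / T).
    This is an illustrative model, not the noise model used in the paper.
    """
    return 1.0 - math.exp(-idle_time_us / coherence_time_us)


# Illustrative values only: readout durations within the quoted range,
# and a coherence time of 30 microseconds ("tens of microseconds").
for readout_us in (0.3, 1.0, 3.0):
    p = idle_error_probability(readout_us, coherence_time_us=30.0)
    print(f"readout {readout_us:>4} us -> idle error per data qubit ~ {p:.3f}")
```

Under these assumptions, a one-microsecond readout already costs each idling data qubit an error probability of roughly 3%, and that cost is paid again for every additional readout in the cycle.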

How Does Quantum Error Correction Work?

Quantum error correction (QEC) codes provide a general framework to tackle noise by encoding a logical qubit into multiple physical qubits. This extra Hilbert space allows the detection and correction of quantum errors, providing the possibility for large-scale quantum computing. To diagnose physical errors in QEC codes, syndrome measurement (SM) is required to gain the necessary information for identifying both the location and type of errors.
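As a small illustration of how a syndrome identifies the location of an error, the sketch below uses the parity-check matrix of the classical [7,4] Hamming code, on which the Steane code is built. It is a classical stand-in that tracks only a single bit-flip on seven qubits; a real syndrome measurement extracts these parity bits through ancilla qubits and handles phase-flip errors with a second set of checks. The function names are hypothetical.

```python
# Parity-check matrix of the [7,4] Hamming code.
# Column j (counting from 1) is the number j written in binary.
H = [
    [0, 0, 0, 1, 1, 1, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [1, 0, 1, 0, 1, 0, 1],
]


def syndrome(error):
    """Return the three parity-check bits for a 7-bit error pattern."""
    return [sum(h * e for h, e in zip(row, error)) % 2 for row in H]


def locate_single_error(s):
    """Read the syndrome as a binary number.

    Returns the 1-indexed position of a single flipped qubit,
    or 0 if the syndrome is trivial (no error detected).
    """
    return s[0] * 4 + s[1] * 2 + s[2]


error = [0, 0, 0, 0, 1, 0, 0]  # a bit-flip on qubit 5
s = syndrome(error)
print(s, locate_single_error(s))  # prints: [1, 0, 1] 5
```

Each syndrome bit here would correspond to one ancilla readout in hardware, which is why the cost of readout matters so much for the full cycle.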

However, because mid-circuit readouts remain highly error-prone on near-term quantum hardware, readout-induced noise significantly degrades the fidelity of the logical state after syndrome measurement.

What is the Proposed Solution to Minimize Readout-Induced Noise?

Researchers Yunzhe Zheng and Keita Kanno propose a different method for syndrome extraction, namely generalized syndrome measurement (GSM), that requires only a single-shot measurement on a single ancilla. This method can detect the error in the logical state with minimized readout-induced noise. By adopting this method as a pre-check routine for quantum error correcting cycles, the readout overhead, the idling time, and the logical error rate during syndrome measurement can be significantly reduced.

The researchers numerically analyze the performance of their protocol using the Iceberg code and the Steane code under realistic noise parameters based on superconducting hardware, and demonstrate its advantage in the near-term scenario. Because mid-circuit measurements are still error-prone on near-term quantum hardware, their method could broaden the applications of early fault-tolerant quantum computing.

How Does Generalized Syndrome Measurement Work?

The GSM method involves a circuit scheme that only requires a single readout to extract partial information and detect the error, thereby minimizing the readout-induced idling error. The quantum error correction cycle with generalized syndrome measurement is divided into two stages: detection and correction.

First, the GSM routine checks whether an error has occurred. If no error is detected, the logical state is confirmed to be in the code space and the correction stage is skipped. If an error is detected, the GSM routine is followed by the canonical SM, which extracts the full syndrome information so that the appropriate correcting operations can be applied.
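The two-stage cycle can be sketched as plain control flow. The code below is a minimal sketch, not the authors' circuit: `detect`, `measure_full_syndrome`, and `correct` are hypothetical callables standing in for the GSM readout, the canonical syndrome measurement, and the recovery operation. It also assumes that the canonical SM costs one ancilla readout per stabilizer generator (six for the Steane code), and the 5% detection probability is an illustrative value, not a result from the paper.

```python
def qec_cycle(detect, measure_full_syndrome, correct) -> int:
    """Run one detect-then-correct cycle and return the number of readouts used.

    detect: hypothetical callable returning True if the single-shot check flags an error.
    measure_full_syndrome: hypothetical callable returning a list of syndrome bits.
    correct: hypothetical callable applying a recovery based on the syndrome.
    """
    readouts = 1  # the detection stage always uses exactly one readout
    if not detect():
        return readouts  # no error flagged: skip the correction stage
    syndrome = measure_full_syndrome()  # assumed: one readout per syndrome bit
    correct(syndrome)
    return readouts + len(syndrome)


def expected_readouts_per_cycle(p_detect: float, n_stabilizers: int) -> float:
    """Average readouts per cycle when the check fires with probability p_detect."""
    return 1 + p_detect * n_stabilizers


# Illustrative comparison for a code with six stabilizer generators.
print(expected_readouts_per_cycle(p_detect=0.05, n_stabilizers=6))
print(qec_cycle(lambda: False, lambda: [0] * 6, lambda s: None))
```

Under these assumptions, a cycle that rarely flags an error averages close to one readout, compared with six for measuring every stabilizer in every cycle, which is where the reduction in readout overhead and idling time comes from.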

What are the Limitations of Existing Quantum Error Correction Protocols?

Quantum error correction protocols that do not require ancilla readout have been studied before. However, these protocols require the ability to reset qubits, an operation that is generally as noisy as readout on near-term quantum hardware. In principle, this approach merely shifts the overhead from readout to reset, and it only helps on the limited set of hardware where reset is much less error-prone than readout.

What is the Potential Impact of this Research?

Generalized syndrome measurement could significantly reduce readout-induced noise in early fault-tolerant quantum computers. This could broaden the applications of early fault-tolerant quantum computing and pave the way for more powerful and scalable quantum computers. The research is a step toward overcoming one of the technical challenges of quantum computing, bringing the full potential of the technology closer.

Publication details: “Minimizing readout-induced noise for early fault-tolerant quantum computers”

Publication Date: 2024-05-06

Authors: Yunzhe Zheng and Keita Kanno

Source: Physical Review Research

DOI: https://doi.org/10.1103/physrevresearch.6.023129