The Quantum Approximate Optimization Algorithm (QAOA) is a method that can help solve combinatorial problems often found in finance and vehicle routing. It works by mapping the cost function to a spin-Hamiltonian and finding its ground state. Machine learning plays a crucial role in QAOA, particularly in mitigating hardware noise in quantum computations. However, quantum computers are still very noisy and error mitigation methods are hard to implement. Researchers Stefan H Sack and Daniel J Egger have shown a QAOA on nonplanar random regular graphs with up to 40 nodes, enabled by machine learning-based error mitigation, marking a significant step towards the practical application of quantum computing.

What is Quantum Approximate Optimization and How Does it Work?

Quantum Approximate Optimization Algorithm (QAOA) is a method that may assist in solving combinatorial problems, which are frequently encountered in practical settings such as finance and vehicle routing. The algorithm works by mapping the cost function to a spin-Hamiltonian and finding its ground state with a suitable variational ansatz. Many problems of practical interest are nonplanar, but common superconducting qubit architectures have a grid or heavy-hexagonal coupling map.

Recently, QAOA experiments with a connectivity matching the hardware coupling map have been reported for 27 and 127 qubits with up to QAOA depth two. However, these instances are very sparse and classical solvers perform well, especially on sparse problems. While brute-force classical simulation methods of quantum circuits can handle up to around 50 qubits, tensor-product-based methods are capable of simulating much larger QAOA circuits.

How Does Machine Learning Aid in Quantum Approximate Optimization?

Machine learning plays a crucial role in quantum approximate optimization. In particular, it can mitigate hardware noise in quantum computations. Researchers have adjusted the probabilities estimated from measurements of quantum circuits with neural networks, showing an effective reduction in errors with a method that scales exponentially with system size.

A scalable method to error mitigate observables rather than the full state vector with linear regression has also been proposed. This method efficiently generates training data by computing expectation values of Clifford circuits on noiseless simulators and noisy quantum hardware. Another method learns noise mitigation from Clifford data, error-mitigating a quantum circuit by simulating multiple versions of it in which non-Clifford gates are replaced with gates that are efficient to simulate classically. These methods have successfully mitigated noise on both real quantum hardware and simulations of imperfect quantum computers.

What are the Challenges in Quantum Approximate Optimization?

Quantum computers are increasing in size and quality but are still very noisy. Error mitigation extends the size of the quantum circuits that noisy devices can meaningfully execute. However, state-of-the-art error mitigation methods are hard to implement and the limited qubit connectivity in superconducting qubit devices restricts most applications to the hardware’s native topology.

In Zero-Noise Extrapolation (ZNE), multiple logically equivalent copies of a circuit are run under different noise amplification factors. Based on the noisy results, extrapolation to the zero-noise limit produces a biased estimation of the noiseless expectation value. However, pulse-based ZNE is almost impossible for users of a cloud-based quantum computer to implement due to the onerous calibration.

Probabilistic Error Cancellation (PEC) learns a sparse model of the noise. The non-physical inverse of the noise channel is applied through a quasi-probability distribution to recover an unbiased expectation value. However, the large shot overhead of PEC can be prohibitive.

How Can Quantum Approximate Optimization be Improved?

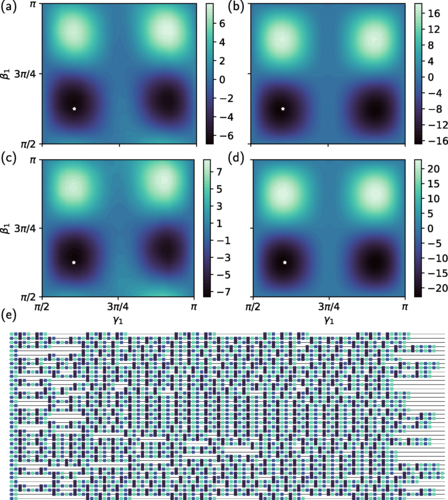

The researchers, Stefan H Sack from the Institute of Science and Technology Austria and Daniel J Egger from IBM Quantum, IBM Research Europe, have shown a quantum approximate optimization algorithm (QAOA) on nonplanar random regular graphs with up to 40 nodes, enabled by a machine learning-based error mitigation. They used a swap network with careful decision-variable-to-qubit mapping and a feedforward neural network to optimize a depth-two QAOA on up to 40 qubits.

They observed a meaningful parameter optimization for the largest graph, which requires running quantum circuits with 958 two-qubit gates. Their paper emphasizes the need to mitigate samples and not only expectation values in quantum approximate optimization. These results are a step towards executing quantum approximate optimization at a scale that is not classically simulable.

Reaching such system sizes is key to properly understanding the true potential of heuristic algorithms like QAOA. This research is a significant step towards the practical application of quantum computing in solving complex problems.

What is the Future of Quantum Approximate Optimization?

The future of quantum approximate optimization lies in overcoming the challenges and limitations of current quantum computing technology. The research by Sack and Egger emphasizes the need to mitigate samples and not only expectation values in quantum approximate optimization.

Their work is a step towards executing quantum approximate optimization at a scale that is not classically simulable. Achieving such system sizes is key to properly understanding the true potential of heuristic algorithms like QAOA.

As quantum computers increase in size and quality, and as error mitigation methods continue to improve, the potential for quantum approximate optimization to solve complex problems in fields such as finance and vehicle routing will only increase. The integration of machine learning techniques in quantum computing also opens up new avenues for research and development in this field.

Publication details: “Large-scale quantum approximate optimization on nonplanar graphs with machine learning noise mitigation”

Publication Date: 2024-03-01

Authors: Stefan Sack and Daniel J. Egger

Source: Physical review research

DOI: https://doi.org/10.1103/physrevresearch.6.013223