Mistral AI has released Mixtral 8x7B, a high-quality sparse mixture of experts model (SMoE) with open weights. The model outperforms Llama 2 70B on most benchmarks and matches or outperforms GPT3.5. Mixtral can handle a context of 32k tokens and is proficient in English, French, Italian, German and Spanish. It also shows strong performance in code generation. The model is pre-trained on data extracted from the open web and is licensed under Apache 2.0. Mistral AI has also submitted changes to the vLLM project to enable the community to run Mixtral with a fully open-source stack.

“Today, the team is proud to release Mixtral 8x7B, a high-quality sparse mixture of experts model (SMoE) with open weights. Licensed under Apache 2.0. Mixtral outperforms Llama 2 70B on most benchmarks with 6x faster inference. It is the strongest open-weight model with a permissive license and the best model overall regarding cost/performance trade-offs. In particular, it matches or outperforms GPT3.5 on most standard benchmarks.”

Mistral AI Team

Mistral AI’s New Open Model: Mixtral 8x7B

Mistral AI, an artificial intelligence company, has released Mixtral 8x7B, a high-quality sparse mixture of experts model (SMoE) with open weights. The model is licensed under Apache 2.0 and outperforms Llama 2 70B on most benchmarks with 6x faster inference. According to Mistral AI, it is the strongest open-weight model with a permissive license and the best model overall regarding cost/performance trade-offs. In particular, it matches or outperforms GPT3.5 on most standard benchmarks.

Mixtral 8x7B’s Capabilities

Mixtral 8x7B can handle a context of 32k tokens and is multilingual, supporting English, French, Italian, German, and Spanish. It shows strong performance in code generation and can be fine-tuned into an instruction-following model that achieves a score of 8.3 on MT-Bench.

Mixtral’s Sparse Architecture

“Mistral AI continues its mission to deliver the best open models to the developer community. Moving forward in AI requires taking new technological turns beyond reusing well-known architectures and training paradigms. Most importantly, it requires making the community benefit from original models to foster new inventions and usages.”

Mistral AI Team

Mixtral is a sparse mixture-of-experts network. It is a decoder-only model where the feedforward block picks from a set of 8 distinct groups of parameters. At every layer, for every token, a router network chooses two of these groups (the “experts”) to process the token and combine their output additively. This technique increases the number of parameters of a model while controlling cost and latency, as the model only uses a fraction of the total set of parameters per token. Mixtral has 46.7B total parameters but only uses 12.9B parameters per token.
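The routing step described above can be illustrated with a minimal sketch in plain Python. This is not Mistral AI's implementation: the toy two-layer ReLU experts, the tiny dimensions, the random weights, and the helper names (`make_expert`, `moe_layer`) are all hypothetical. Weighting the two selected outputs by a softmax over their router logits is also an assumption; the article only states that the outputs are combined additively.

```python
import math
import random

NUM_EXPERTS = 8  # from the article: 8 groups of parameters per feedforward block
TOP_K = 2        # from the article: the router picks two experts per token


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def make_expert(dim, hidden, rng):
    """Hypothetical toy expert: a two-layer ReLU feedforward network."""
    w_in = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(hidden)]
    w_out = [[rng.gauss(0, 0.1) for _ in range(hidden)] for _ in range(dim)]

    def expert(x):
        h = [max(0.0, v) for v in matvec(w_in, x)]
        return matvec(w_out, h)

    return expert


def moe_layer(x, router_weights, experts, top_k=TOP_K):
    """Route one token vector through its top_k experts and sum the results."""
    logits = matvec(router_weights, x)  # one router logit per expert
    ranked = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)
    chosen = ranked[:top_k]
    # Assumption: gate weights are a softmax over the selected logits only.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)  # only the chosen experts are evaluated
        out = [o + gate * v for o, v in zip(out, y)]
    return out, chosen, gates


rng = random.Random(0)
dim, hidden = 4, 8
experts = [make_expert(dim, hidden, rng) for _ in range(NUM_EXPERTS)]
router_weights = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(NUM_EXPERTS)]
token = [rng.gauss(0, 1) for _ in range(dim)]

output, chosen, gates = moe_layer(token, router_weights, experts)
print("experts used:", chosen)
print("gate weights:", gates)
```

Only two of the eight expert functions are called for the token, which is why the per-token compute scales with the active parameters rather than with the total.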

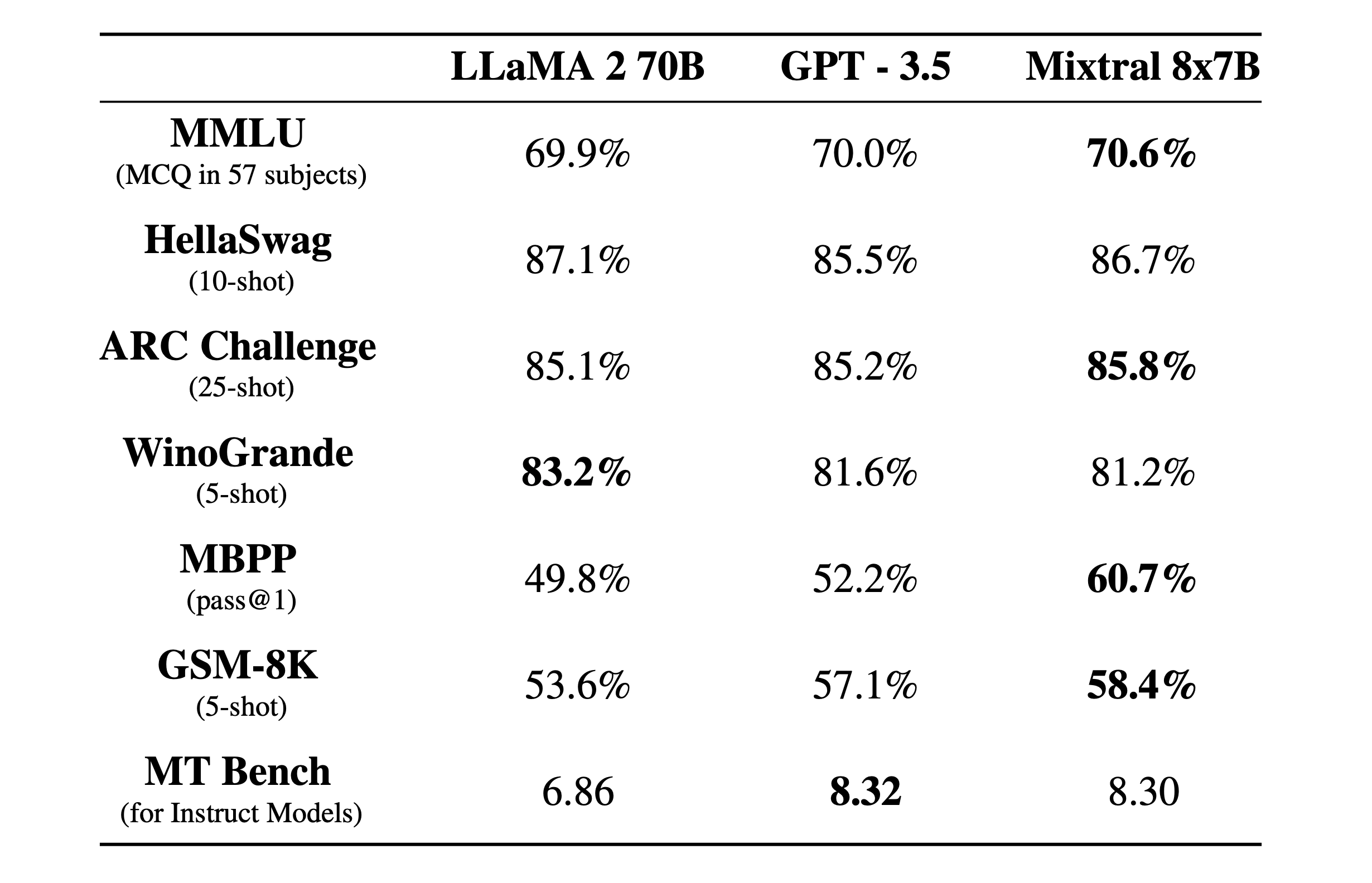
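The parameter counts quoted above (46.7B total, 12.9B per token) can be roughly reproduced with a back-of-the-envelope calculation. The hyperparameters below are assumptions taken from the publicly released Mixtral 8x7B model configuration, not from this article, and the breakdown assumes a gated feedforward expert with three weight matrices and grouped-query attention. The function name `count_params` is hypothetical.

```python
# Assumed hyperparameters (published model configuration, not this article).
DIM = 4096          # model (embedding) dimension
HIDDEN_DIM = 14336  # feedforward hidden dimension of each expert
N_LAYERS = 32
N_HEADS = 32
N_KV_HEADS = 8      # grouped-query attention
HEAD_DIM = 128
VOCAB_SIZE = 32000
NUM_EXPERTS = 8
EXPERTS_PER_TOKEN = 2


def count_params(experts_used):
    """Count parameters when `experts_used` experts per layer are included."""
    expert = 3 * DIM * HIDDEN_DIM  # gated FFN: three weight matrices
    attention = (
        DIM * N_HEADS * HEAD_DIM             # query projection
        + 2 * DIM * N_KV_HEADS * HEAD_DIM    # key and value projections
        + N_HEADS * HEAD_DIM * DIM           # output projection
    )
    router = DIM * NUM_EXPERTS               # one logit per expert
    norms = 2 * DIM                          # two normalisation layers
    per_layer = experts_used * expert + attention + router + norms
    embeddings = 2 * VOCAB_SIZE * DIM        # input embedding + output head
    final_norm = DIM
    return N_LAYERS * per_layer + embeddings + final_norm


total = count_params(NUM_EXPERTS)
active = count_params(EXPERTS_PER_TOKEN)
print(f"total:  {total / 1e9:.1f}B")   # total:  46.7B
print(f"active: {active / 1e9:.1f}B")  # active: 12.9B
```

Under these assumptions the attention, router, and embedding weights are shared by every token, and only the expert weights differ between the total and per-token counts. This is also why the name "8x7B" does not add up to 56B parameters.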

Performance Comparison

Mistral AI compared Mixtral with the Llama 2 family and the GPT3.5 base model. Mixtral matches or outperforms both Llama 2 70B and GPT3.5 on most benchmarks. Compared to Llama 2, it is more truthful (73.9% vs 50.2% on the TruthfulQA benchmark) and presents less bias on the BBQ benchmark. Overall, Mixtral displays more positive sentiments than Llama 2 on BOLD, with similar variances within each dimension.

Multilingual Benchmarks and Instructed Models

Mixtral 8x7B masters French, German, Spanish, Italian, and English. The company also released Mixtral 8x7B Instruct alongside Mixtral 8x7B. This model has been optimised through supervised fine-tuning and direct preference optimisation (DPO) for careful instruction following. On MT-Bench, it reaches a score of 8.30, which Mistral AI says makes it the best open-source model, with a performance comparable to GPT3.5.

Deployment and Usage

To enable the community to run Mixtral with a fully open-source stack, Mistral AI has submitted changes to the vLLM project, which integrates MegaBlocks CUDA kernels for efficient inference. SkyPilot allows the deployment of vLLM endpoints on any instance in the cloud. Mixtral 8x7B is currently being used behind Mistral AI’s endpoint mistral-small, which is available in beta.

Summary

Mistral AI has released Mixtral 8x7B, a high-quality sparse mixture of experts model (SMoE) that outperforms Llama 2 70B on most benchmarks and handles English, French, Italian, German and Spanish. The base model is pre-trained on data from the open web, and the accompanying Instruct variant has been optimised for instruction following. Mixtral also displays more positive sentiments and less bias than Llama 2.

- Mistral AI has released Mixtral 8x7B, a high-quality sparse mixture of experts model (SMoE) with open weights.

- Mixtral outperforms Llama 2 70B on most benchmarks and is six times faster in inference.

- It matches or outperforms GPT3.5 on most standard benchmarks, making it the best model in terms of cost/performance trade-offs.

- Mixtral can handle a context of 32k tokens and is multilingual, supporting English, French, Italian, German and Spanish.

- It shows strong performance in code generation and can be fine-tuned into an instruction-following model that achieves a score of 8.3 on MT-Bench.

- Mixtral is a sparse mixture-of-experts network, a decoder-only model where the feedforward block picks from a set of 8 distinct groups of parameters.

- It has 46.7B total parameters but only uses 12.9B parameters per token, processing input and generating output at the same speed and for the same cost as a 12.9B model.

- Mixtral is pre-trained on data extracted from the open web.

- Mistral AI has also submitted changes to the vLLM project to enable the community to run Mixtral with a fully open-source stack.

- CoreWeave and Scaleway teams provided technical support during the training of the models.