Researchers at the University of Tsukuba are using Intel oneAPI, a unified programming framework, to drive multi-hetero acceleration, in which an NVIDIA GPU and an Intel FPGA work simultaneously. The team has built a oneAPI environment in which a single programming language, Data Parallel C++ (DPC++), manages the different accelerators.

The researchers are also exploring the use of Field Programmable Gate Arrays (FPGAs) alongside GPUs to overcome the limitations of GPU-only acceleration in high-performance computing. They have proposed a concept called CHARM (Cooperative Heterogeneous Acceleration with Reconfigurable Multidevices), which aims to maximize overall application performance by using GPUs and FPGAs in a complementary manner.

What is Intel oneAPI and How Does it Work with Multi-hybrid Acceleration Programming?

Intel oneAPI is a programming framework that supports various accelerators such as Graphics Processing Units (GPUs), Field Programmable Gate Arrays (FPGAs), and multicore Central Processing Units (CPUs). It is primarily focused on high-performance computing (HPC) applications. Users write their code in a single language, Data Parallel C++ (DPC++), and target this heterogeneous programming environment with it. However, the same code is not always easy to apply across different accelerators, especially non-Intel devices such as NVIDIA and AMD GPUs.

The researchers from the University of Tsukuba, Wentao Liang, Norihisa Fujita, Ryohei Kobayashi, and Taisuke Boku, have successfully constructed a oneAPI environment that uses DPC++ alone to handle true multi-hetero acceleration, driving an NVIDIA GPU and an Intel FPGA simultaneously. Their paper discusses how this was achieved and what kinds of applications can be targeted.

Why is the Use of Accelerators in High-Performance Computing Becoming More Common?

The use of accelerators in high-performance computing has become more common than ever before. Among these accelerators, the Graphics Processing Unit (GPU) has emerged as an important component and often surpasses the traditional Central Processing Unit (CPU) in both performance and power efficiency. This transition is particularly evident in highly parallelized tasks and physical simulations.

However, not all operations in an application are highly parallelizable; some are fine-grained or require a lot of communication. GPUs are inherently ill-suited to such operations, and under Amdahl’s Law the portion that cannot be accelerated becomes the bottleneck for overall performance. This means that the benefits of GPU offloading may be limited.
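To make the Amdahl's Law limit concrete, the following minimal sketch computes the overall speedup when only part of an application is offloaded. The 90% offloadable fraction and the 50x GPU speedup are illustrative assumptions, not figures from the paper, and `amdahl_speedup` is a hypothetical helper name.

```python
def amdahl_speedup(offload_fraction: float, accel_speedup: float) -> float:
    """Overall speedup when `offload_fraction` of the runtime is accelerated
    by `accel_speedup` and the remainder runs at its original speed."""
    if not 0.0 <= offload_fraction <= 1.0:
        raise ValueError("offload_fraction must be in [0, 1]")
    if accel_speedup <= 0.0:
        raise ValueError("accel_speedup must be positive")
    remaining_fraction = 1.0 - offload_fraction
    return 1.0 / (remaining_fraction + offload_fraction / accel_speedup)


# Assumed numbers: 90% of the runtime suits the GPU, which runs it 50x faster.
overall = amdahl_speedup(0.9, 50.0)

# Even an infinitely fast GPU cannot beat 1 / (1 - 0.9), i.e. about 10x.
upper_bound = 1.0 / (1.0 - 0.9)

print(f"overall speedup: {overall:.2f}x, upper bound: {upper_bound:.1f}x")
```

With these assumed numbers, the 10% of the runtime left on the CPU caps the whole application below 10x, no matter how fast the GPU becomes.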

How Can Field Programmable Gate Arrays (FPGAs) Address the Limitations of GPUs?

To address the limitations of GPUs, attention has focused on the use of Field Programmable Gate Arrays (FPGAs). An FPGA is an adaptable hardware device that can be reconfigured through programming to perform specific functions efficiently. This reconfigurability makes it possible to build accelerators that specialize in computations for which GPUs are not suitable. However, the floating-point arithmetic and memory performance that FPGAs can deliver as hardware fall short of what GPUs provide, so a complete switch from GPU offloading to FPGA offloading is not a good idea.

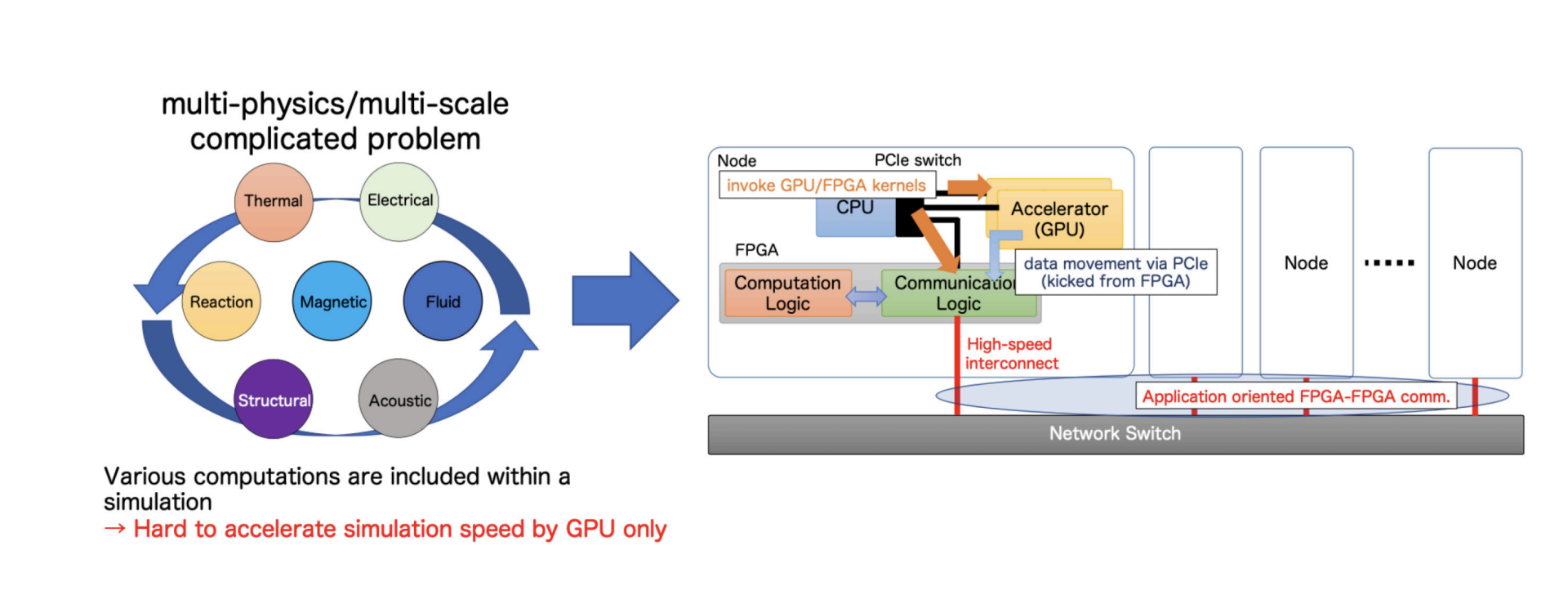

Therefore, the researchers propose using GPUs and FPGAs together as high-performance computing accelerators, each where it fits best, in order to accelerate all the subcomputations of large-scale applications. Leveraging FPGA technology in parallel with GPUs can have a powerful synergistic effect and result in significant performance gains, especially in applications such as multiphysics simulation.

What is the CHARM Concept and How Does it Maximize Overall Application Performance?

The concept of assigning different workloads to GPUs and FPGAs in order to achieve higher performance is called CHARM (Cooperative Heterogeneous Acceleration with Reconfigurable Multidevices). The key point of CHARM is not merely to accelerate applications with one specific kind of accelerator, GPU or FPGA, but to use both in a complementary manner to maximize overall application performance.

Under CHARM, GPUs handle the coarse-grained parallel parts of an application, whereas multiple FPGAs connected by a high-speed interconnect autonomously perform communication and computation in the areas where CPUs and GPUs are inefficient. The researchers have shown that significant speedups can be achieved if CHARM is properly applied to the target application.
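The complementary effect can be illustrated with a simple generalization of Amdahl's Law in which each part of the runtime has its own speedup. This is only a back-of-the-envelope model, not the researchers' evaluation method; the 90/10 workload split and the 50x and 10x speedups are assumed values, and `cooperative_speedup` is a hypothetical helper name.

```python
def cooperative_speedup(fractions, speedups):
    """Overall speedup when runtime fraction fractions[i] is accelerated
    by speedups[i]. The fractions must sum to 1."""
    if len(fractions) != len(speedups):
        raise ValueError("fractions and speedups must have the same length")
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    if any(s <= 0.0 for s in speedups):
        raise ValueError("speedups must be positive")
    return 1.0 / sum(f / s for f, s in zip(fractions, speedups))


# Assumed workload: 90% coarse-grained parallel work, 10% fine-grained or
# communication-heavy work that a GPU does not accelerate.
workload = [0.9, 0.1]

# GPU only: the GPU-unfriendly 10% stays at its original speed (1x).
gpu_only = cooperative_speedup(workload, [50.0, 1.0])

# GPU plus FPGA: the FPGA is assumed to run the remaining 10% ten times faster.
gpu_plus_fpga = cooperative_speedup(workload, [50.0, 10.0])

print(f"GPU only: {gpu_only:.1f}x, GPU + FPGA: {gpu_plus_fpga:.1f}x")
```

In this model, a modest speedup on the small GPU-unfriendly part raises the overall figure far more than a faster GPU could, which is the intuition behind using both devices in a complementary manner.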

What are the Challenges and Solutions in Implementing the CHARM Concept?

The most important issue to be resolved at this time is the programming method for CHARM. GPU-FPGA accelerated computation is currently implemented with a mixture of CUDA and OpenCL: the computation kernels running on GPUs are written in CUDA and those running on FPGAs are written in OpenCL. This multilingual programming places a heavy burden on programmers, so a more usable programming environment is required.

The researchers focus on Intel oneAPI as the key to solving this issue. Because it is a unified programming framework, accelerators and the host can be programmed and controlled using the same programming language and APIs. However, since Intel oneAPI is basically targeted at Intel CPUs, GPUs, and FPGAs, it is not easy to apply it to non-Intel devices such as NVIDIA and AMD GPUs. Moreover, because it does not assume programs that handle different computing devices simultaneously, there are technical issues that must be resolved before Intel oneAPI can be applied to the CHARM concept.

Publication details: “Using Intel oneAPI for Multi-hybrid Acceleration Programming with GPU and FPGA Coupling”

Publication Date: 2024-01-11

Authors: W. X. Liang, Norihisa Fujita, Ryohei Kobayashi, Taisuke Boku, et al.

DOI: https://doi.org/10.1145/3636480.3637220