Analog In-Memory Computing (AIMC) is a promising technology for deep learning inference because it performs computation inside the memory itself, which improves both energy efficiency and latency. However, AIMC faces challenges related to circuit mismatches and memory device nonidealities. The Near-Memory Digital Processing Unit (NMPU) is proposed to overcome these. The NMPU, implemented in a 14 nm CMOS technology, provides a 1.39× speedup, a 78% smaller area, and competitive power consumption compared with FP16-based implementations. Despite its potential, further research is needed to fully realize its capabilities and address the remaining challenges.

What is Analog In-Memory Computing (AIMC) and what is its importance in deep learning?

Analog In-Memory Computing (AIMC) is an emerging technology gaining traction for its speed and energy efficiency in deep learning inference. Deep learning, a subset of artificial intelligence (AI), involves the use of neural networks with many layers (deep neural networks) to model and understand complex patterns. Inference is the process of making predictions using a trained deep-learning model.

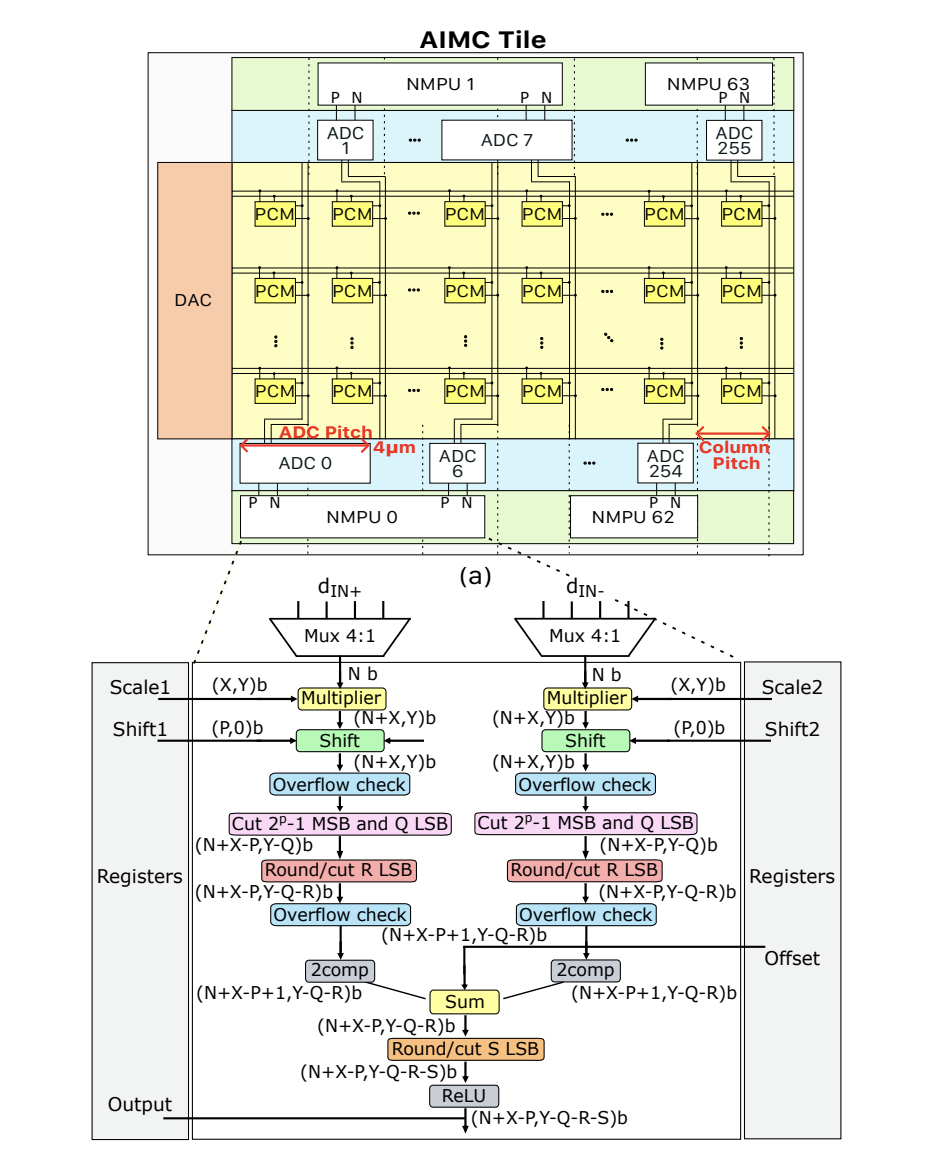

AIMC is a non-von Neumann architecture that performs computational tasks within the memory itself, a paradigm known as In-Memory Computing (IMC). This approach tackles the von Neumann bottleneck, that is, the cost of moving data back and forth between separate memory and processing units, leading to significant improvements in both energy efficiency and latency. The Matrix-Vector Multiplication (MVM) operation, which dominates the computations of deep neural network inference, can be performed by mapping offline-trained network weights onto AIMC tiles.
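To make the weight-mapping idea concrete, here is a minimal sketch in plain Python. It assumes a weight matrix that is larger than a single tile, splits it into blocks, lets each block compute its own partial MVM, and accumulates the partial results. The tile size and the function names (`mvm`, `tiled_mvm`) are illustrative assumptions, not details from the paper.

```python
def mvm(weights, x):
    """Reference matrix-vector product y = W x for a list-of-rows matrix."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def tiled_mvm(weights, x, tile_rows=2, tile_cols=2):
    """Compute y = W x by splitting W into blocks of limited size.

    Each block stands in for one tile (hypothetical 2x2 size here). Partial
    results from blocks that share the same output rows are accumulated.
    """
    n_out, n_in = len(weights), len(weights[0])
    y = [0.0] * n_out
    for r0 in range(0, n_out, tile_rows):
        for c0 in range(0, n_in, tile_cols):
            # Cut out the block of weights and the matching slice of inputs.
            block = [row[c0:c0 + tile_cols] for row in weights[r0:r0 + tile_rows]]
            partial = mvm(block, x[c0:c0 + tile_cols])  # one tile's MVM
            for i, p in enumerate(partial):
                y[r0 + i] += p
    return y


W = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
x = [1, 0, -1]
print(tiled_mvm(W, x))  # [-2.0, -2.0, -2.0]
```

The tiled result matches the direct product `mvm(W, x)`; on real hardware each block's MVM would happen in the analog domain rather than in a Python loop.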

What are the Challenges and Solutions in AIMC?

Despite its potential, AIMC faces challenges associated with circuit mismatches and nonidealities linked to the memory devices. To address these issues, a certain amount of digital post-processing is required. This is where the Near-Memory Digital Processing Unit (NMPU) comes in. The NMPU, based on fixed-point arithmetic, is proposed to overcome these limitations.

The NMPU achieves competitive accuracy and higher computing throughput than previous approaches while minimizing the area overhead. It supports standard deep learning post-processing steps such as the Rectified Linear Unit (ReLU) activation and Batch Normalization.
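As an illustration of how Batch Normalization and ReLU can be carried out in fixed-point arithmetic, the sketch below folds inference-time batch normalization into a single scale and offset, quantizes both to integers, and applies them followed by ReLU. The number of fractional bits and all function names are hypothetical choices made for this example; the article does not describe the NMPU's actual number formats or datapath.

```python
import math

FRAC_BITS = 8  # hypothetical number of fractional bits, not from the paper


def to_fixed(value, frac_bits=FRAC_BITS):
    """Quantize a real number to a signed fixed-point integer."""
    return int(round(value * (1 << frac_bits)))


def fold_batch_norm(gamma, beta, mean, var, eps=1e-5):
    """Fold inference-time batch norm into y = scale * x + offset."""
    scale = gamma / math.sqrt(var + eps)
    offset = beta - scale * mean
    return scale, offset


def bn_relu_fixed(x_int, scale_fx, offset_fx):
    """Apply scale and offset in integer arithmetic, then ReLU.

    x_int is an integer input (for example an ADC count). The result is a
    fixed-point integer with the same fractional bits as scale_fx.
    """
    acc = x_int * scale_fx + offset_fx
    return max(acc, 0)  # ReLU clamps negative values to zero


scale, offset = fold_batch_norm(gamma=1.0, beta=0.5, mean=2.0, var=4.0)
scale_fx, offset_fx = to_fixed(scale), to_fixed(offset)
y_fx = bn_relu_fixed(10, scale_fx, offset_fx)
print(y_fx / (1 << FRAC_BITS))  # 4.5, close to (10 - 2) / 2 + 0.5
```

Because the folded scale and offset are computed once offline, the per-element work at inference time reduces to one integer multiply, one add, and one comparison.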

How does the NMPU Work?

The NMPU design was physically implemented in a 14 nm CMOS technology. Using data from an AIMC chip, the authors demonstrate that a simulated AIMC system with the proposed NMPU outperforms existing Floating Point 16 (FP16) based implementations: it provides a 1.39× speedup, a 78% smaller area, and competitive power consumption.

The NMPU also achieves inference accuracies of 86.65% and 65.06%, a drop of just 0.12% and 0.4% relative to the FP16 baseline, when benchmarked with a ResNet9 network trained on CIFAR-10 and a ResNet32 network trained on CIFAR-100, respectively.

What is the Role of the AIMC Tile?

A typical AIMC tile comprises a crossbar array of memristive devices such as Phase Change Memory (PCM). The synaptic weights are programmed as the conductance value of these devices. The digital input to the tile is converted to voltage or pulse duration values using Digital-to-Analog Converters (DACs) or pulse-width modulation units respectively, and the output from the array is converted to digital values using Analog-to-Digital Converters (ADCs).
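The signal chain described above (digital input, DAC, crossbar, ADC, digital output) can be sketched as a small idealized model. Everything here is an assumption made for illustration: the 8-bit resolutions, the normalized voltage and current ranges, the function names, and the use of signed conductance values as a stand-in for however the hardware encodes negative weights. Device noise and other nonidealities are deliberately left out.

```python
def dac(code, n_bits=8, v_max=1.0):
    """Map a signed integer input code to a voltage in [-v_max, v_max]."""
    full_scale = (1 << (n_bits - 1)) - 1
    code = max(-full_scale, min(full_scale, code))  # clip to the DAC range
    return v_max * code / full_scale


def crossbar_currents(voltages, conductances):
    """Sum currents per column: I_j = sum_i V_i * G[i][j]."""
    n_cols = len(conductances[0])
    return [sum(v * row[j] for v, row in zip(voltages, conductances))
            for j in range(n_cols)]


def adc(current, n_bits=8, i_max=1.0):
    """Quantize a column current to a signed integer code, with clipping."""
    full_scale = (1 << (n_bits - 1)) - 1
    code = round(current / i_max * full_scale)
    return max(-full_scale, min(full_scale, code))


def tile_mvm(input_codes, conductances):
    """Idealized tile: DAC on every row, analog summation, ADC on every column."""
    voltages = [dac(c) for c in input_codes]
    return [adc(i) for i in crossbar_currents(voltages, conductances)]


# Three input rows, two output columns (weights stored as conductances).
G = [[0.5, 0.2],
     [0.25, -0.2],
     [0.1, 0.1]]
print(tile_mvm([127, -127, 0], G))
```

The multiplication happens through Ohm's law in each device and the summation through Kirchhoff's current law along each column, which is why the whole MVM takes a single analog step in this model.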

However, one key challenge is the substantial nonuniformity in the ADCs' conversion behavior, that is, in their transfer curves. To address this, AIMC tiles require additional data post-processing per column through an affine correction procedure.
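A per-column affine correction amounts to giving every column its own gain and offset. The sketch below shows one plausible way to obtain and apply them: fit the two parameters per column by least squares from calibration measurements, then correct each ADC output with its column's pair. The calibration method, the sample values, and the function names are assumptions for this example; the article does not say how the correction parameters are derived.

```python
def fit_affine(measured, expected):
    """Least-squares fit of expected = gain * measured + offset for one column."""
    n = len(measured)
    mean_m = sum(measured) / n
    mean_e = sum(expected) / n
    var_m = sum((m - mean_m) ** 2 for m in measured)
    cov = sum((m - mean_m) * (e - mean_e) for m, e in zip(measured, expected))
    gain = cov / var_m
    offset = mean_e - gain * mean_m
    return gain, offset


def correct_columns(adc_codes, params):
    """Apply each column's own (gain, offset) pair to its ADC output."""
    return [g * code + o for code, (g, o) in zip(adc_codes, params)]


# Hypothetical calibration data: ideal outputs and what two mismatched
# ADCs actually reported for them.
ideal = [0, 10, 20]
column_readings = [
    [4, 9, 14],    # column 0: compressed and shifted transfer curve
    [-2, 8, 18],   # column 1: pure offset error
]
params = [fit_affine(readings, ideal) for readings in column_readings]
print(params)                            # [(2.0, -8.0), (1.0, 2.0)]
print(correct_columns([9, 8], params))   # [10.0, 10.0]
```

After correction, both columns report the same value for the same ideal output, which is the purpose of the per-column step.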

What is the Future of AIMC and NMPU?

The research conducted by the team from IBM Research Europe and ETH Zurich demonstrates the potential of AIMC and NMPU in deep learning inference. The NMPU’s ability to achieve high computational efficiency and low latency in AIMC systems makes it a promising technology for future AI applications.

However, as with any emerging technology, further research and development are needed to fully realize its potential and address any challenges that may arise. The team’s work is a significant step forward in this direction, contributing to the ongoing advancements in the field of AI and deep learning.

Publication details: “A Precision-Optimized Fixed-Point Near-Memory Digital Processing Unit for Analog In-Memory Computing”

Publication Date: 2024-02-12

Authors: Elena Ferro, Athanasios Vasilopoulos, Corey Lammie, Manuel Le Gallo et al.

Source: arXiv (Cornell University)

DOI: https://doi.org/10.48550/arxiv.2402.07549