Hybrid Quantum Vision Transformers, a new class of models based on the vision transformer architecture, could reduce training and operating time while maintaining predictive power. Transformer architectures have been widely adopted since their introduction in 2017, despite their computational expense. Quantum machine learning models may offer a way around the computational challenges posed by classical transformers. In a recent study, quantum-classical hybrid vision transformers were developed and tested on a high-energy physics problem, achieving performance comparable to classical models with a similar number of parameters. As quantum hardware matures, such hybrid models may become increasingly efficient and powerful.

What are Hybrid Quantum Vision Transformers?

Hybrid Quantum Vision Transformers are a new type of model based on vision transformer architectures. Vision transformers are considered state-of-the-art for image classification tasks, but they require extensive computational resources for both training and deployment, and the problem grows more severe as the amount and complexity of the data increase. Quantum-based vision transformer models could alleviate this issue by reducing training and operating time while maintaining the same predictive power.

Although current quantum computers cannot yet handle high-dimensional tasks, they are a promising candidate for more efficient computation in the future. In the study, several variations of a hybrid quantum vision transformer were constructed for a classification problem in high-energy physics: distinguishing photons from electrons in an electromagnetic calorimeter. The findings indicate that the hybrid models can achieve performance comparable to their classical analogs with a similar number of parameters.

What is the History and Application of Transformer Architectures?

The first transformer architecture was introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need”. It outperformed the existing state-of-the-art models by a significant margin on the English-to-German and English-to-French newstest2014 translation benchmarks. Since then, the transformer architecture has been adopted in numerous fields and has become the go-to model for applications such as sentiment analysis and question answering.

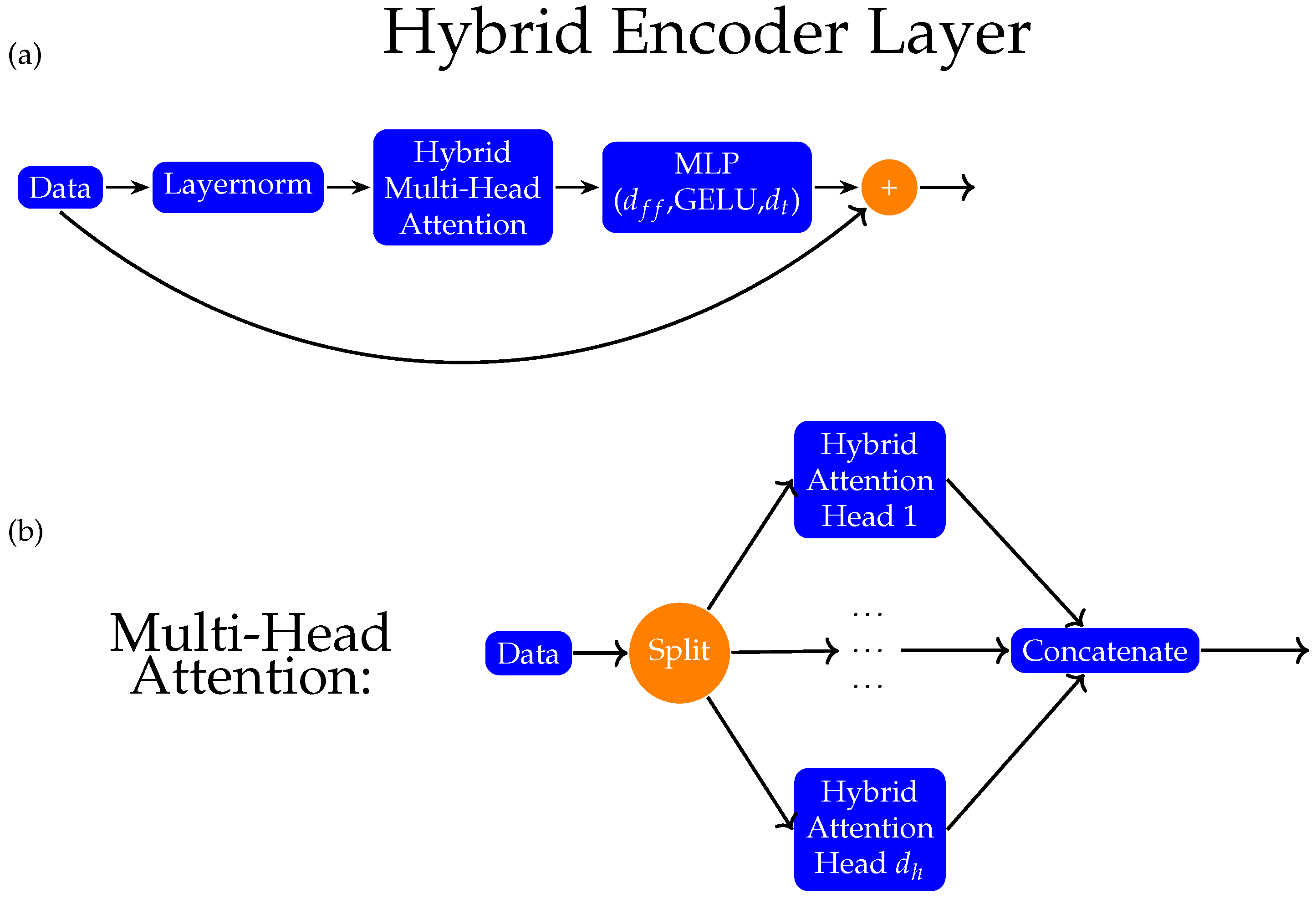

The vision transformer architecture is essentially the transformer architecture applied to image classification. The image is split into fixed-size patches (16×16 pixels in the original paper), and each flattened patch is treated as a token. The model uses only the encoder part of the transformer and attaches a multilayer perceptron (MLP) head to classify images. This architecture was first introduced by Dosovitskiy et al. in the paper “An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale”. The authors showed that on a range of benchmark datasets, a vision transformer can outperform the state-of-the-art ResNet152x4 model while requiring less computation time to pretrain.
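The patch-to-token step can be sketched in a few lines. This is a minimal illustration, assuming a single-channel image stored as nested Python lists whose height and width are divisible by the patch size. `patchify` is a hypothetical helper, and the learned linear projection, class token and position embeddings that a real vision transformer adds afterwards are left out.

```python
from typing import List

Image = List[List[float]]  # single-channel image: a list of pixel rows


def patchify(image: Image, patch: int = 16) -> List[List[float]]:
    """Split an H x W image into non-overlapping patch x patch tiles and
    flatten each tile row-major into one vector (one token per tile)."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, h, patch):  # visit tiles in raster order
        for left in range(0, w, patch):
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens


img = [[float(r * 32 + c) for c in range(32)] for r in range(32)]
toks = patchify(img, patch=16)
print(len(toks), len(toks[0]))  # 4 256
```

With the default 16×16 patches, a 224×224 input becomes 14 × 14 = 196 tokens, which is the sequence length the encoder then has to process.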

What are the Challenges with Transformer Architectures?

Despite their advantages, transformer architectures are known to be computationally expensive to train and operate. Specifically, the compute and memory demands of self-attention grow quadratically with the input length, because every token is compared with every other token. A number of studies have tried to approximate self-attention to reduce this quadratic cost, and others have proposed modifications to the architecture with the same aim. As the amount of data grows, these problems are exacerbated, so alternative architectures with similar performance but lower resource demands will be needed.
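The source of the quadratic cost is easiest to see in a bare-bones implementation. The sketch below is single-head scaled dot-product self-attention in pure Python; as a simplification (an assumption, not how trained models work) the learned query, key and value projections are dropped, so Q = K = V = x. The n × n score matrix is the part that scales quadratically.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention with Q = K = V = x.

    Learned projections are omitted (a simplification for illustration).
    Returns (output, weights), where weights is an n x n matrix.
    """
    n, d = len(x), len(x[0])
    scale = 1.0 / math.sqrt(d)
    # Every token is scored against every token: n * n dot products of length d.
    scores = [[scale * sum(a * b for a, b in zip(x[i], x[j])) for j in range(n)]
              for i in range(n)]
    weights = [softmax(row) for row in scores]
    # Each output row is a weighted average of all n value rows.
    out = [[sum(weights[i][j] * x[j][k] for j in range(n)) for k in range(d)]
           for i in range(n)]
    return out, weights


# The weight matrix alone has n * n entries, so doubling n quadruples it.
for n in (196, 392, 784):
    print(n, n * n)  # 196 38416, then 392 153664, then 784 614656
```

Approximate-attention methods of the kind mentioned above aim to avoid materializing this full n × n matrix.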

Can Quantum Machine Learning be a Solution?

A quantum machine learning model might be one of those alternatives. Although quantum hardware is still in its infancy, a large body of research focuses on algorithms that could run on it. The main appeal of quantum algorithms is that some of them are already known to have computational advantages over classical algorithms for specific problems. For instance, Shor’s algorithm can factor integers superpolynomially faster than the best known classical methods. Furthermore, some studies suggest that quantum machine learning can lead to computational speedups.

How are Quantum-Classical Hybrid Vision Transformers Developed?

In the study, a quantum-classical hybrid vision transformer architecture was developed and demonstrated on a problem from experimental high-energy physics. This field is an ideal testing ground because collider physics data are highly complex and computational resources are a major bottleneck. Specifically, the model was used to classify the parent particle (photon or electron) of an electromagnetic shower event.
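This summary does not describe exactly where the quantum circuits sit inside the architecture. A common pattern in hybrid models, used here as an assumption rather than as the paper’s design, is to replace a small classical linear map with a variational quantum circuit whose outputs are measured expectation values. The sketch simulates such a circuit classically in pure Python. `quantum_layer`, the angle encoding, the single layer of trainable RY rotations and the CNOT entangler are all hypothetical, illustrative choices.

```python
import math
from typing import List, Sequence

State = List[float]  # real amplitudes suffice: RY and CNOT have real matrices


def ry(state: State, angle: float, qubit: int, n: int = 2) -> State:
    """Apply RY(angle) to one qubit; qubit 0 is the most significant index bit."""
    c, s = math.cos(angle / 2), math.sin(angle / 2)
    bit = 1 << (n - 1 - qubit)
    new = state[:]
    for i in range(len(state)):
        if i & bit == 0:  # pair amplitude of |..0..> with |..1..>
            a0, a1 = state[i], state[i | bit]
            new[i] = c * a0 - s * a1
            new[i | bit] = s * a0 + c * a1
    return new


def cnot(state: State, control: int, target: int, n: int = 2) -> State:
    """Flip the target qubit on basis states where the control qubit is 1."""
    cb, tb = 1 << (n - 1 - control), 1 << (n - 1 - target)
    new = state[:]
    for i in range(len(state)):
        if i & cb:
            new[i ^ tb] = state[i]
    return new


def expval_z(state: State, qubit: int, n: int = 2) -> float:
    """Expectation value of Pauli Z on one qubit."""
    bit = 1 << (n - 1 - qubit)
    return sum(a * a * (-1.0 if i & bit else 1.0) for i, a in enumerate(state))


def quantum_layer(x: Sequence[float], theta: Sequence[float]) -> List[float]:
    """Hypothetical 2-qubit variational layer: angle-encode x, apply trainable
    RY(theta), entangle with a CNOT, and read out <Z> on each qubit."""
    state = [1.0, 0.0, 0.0, 0.0]  # start in |00>
    for q in range(2):
        state = ry(state, x[q], q)      # data encoding
        state = ry(state, theta[q], q)  # trainable rotation
    state = cnot(state, 0, 1)
    return [expval_z(state, 0), expval_z(state, 1)]


print(quantum_layer([0.3, 1.1], [0.2, -0.4]))
```

With a single rotation layer the outputs have a closed form, cos(x₀ + θ₀) and cos(x₀ + θ₀)·cos(x₁ + θ₁), which makes a convenient sanity check. Practical hybrid models use more qubits and repeated layers, and train θ with gradient methods such as the parameter-shift rule.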

What are the Results of Using Quantum-Classical Hybrid Vision Transformers?

The results of using quantum-classical hybrid vision transformers are promising. The hybrid models were benchmarked against classical vision transformers and achieved comparable performance with a similar number of parameters. This suggests that quantum-classical hybrid vision transformers could be a viable answer to the computational challenges posed by traditional transformer architectures.

What is the Future of Quantum-Classical Hybrid Vision Transformers?

The outlook for quantum-classical hybrid vision transformers is promising. As quantum hardware continues to develop, these models may become increasingly efficient and powerful. At the same time, as the amount of data keeps growing, the need for more efficient models will only increase. Quantum-classical hybrid vision transformers could therefore play a significant role in the future of image classification and other high-dimensional tasks.

Publication details: “Hybrid Quantum Vision Transformers for Event Classification in High Energy Physics”

Publication Date: 2024-03-13

Authors: Eyup B. Unlu, M Cara, Gopal Ramesh Dahale, Zhongtian Dong, et al.

Source: Axioms

DOI: https://doi.org/10.3390/axioms13030187