The development of AI-based software engineering tools is hindered by the need for high-quality data, which is often commercially sensitive and therefore hard for open-source projects to obtain. To address this, researchers have proposed a governance framework centered on Federated Learning (FL), a machine learning approach in which models are trained where the data resides, so that participants share model updates rather than raw data. The framework aims to foster the joint development of open-source AI code models while protecting privacy and security. The researchers also provide guidelines for developers and highlight the need for more high-quality code data, stronger community support, and more efficient participation models.

What are the Opportunities and Challenges of Open-Source AI-based Software Engineering Tools?

The field of software engineering (SE) has been significantly transformed by the advent of Large Language Models (LLMs). These models have been instrumental in advancing various SE tasks, particularly code understanding. However, the development and enhancement of AI-based SE tools within the software engineering community face significant barriers, primarily because these tools need high-quality data. Such data is often commercially valuable or sensitive, which makes it less accessible to open-source AI-based SE projects.

Collaborative development of AI-based SE models depends on drawing on as many sources of high-quality data as possible. In practice, however, limited data accessibility is a major barrier to developing and improving AI-based SE tools within the software engineering community. Researchers therefore need ways for open-source AI-based SE models to draw on resources held by different organizations.

Addressing this challenge, the researchers have proposed a solution to facilitate access to diverse organizational resources for open-source AI models, ensuring privacy and commercial sensitivities are respected. They introduce a governance framework centered on federated learning (FL), designed to foster the joint development and maintenance of open-source AI code models while safeguarding data privacy and security.

How Can Federated Learning Improve Open-Source AI-based Software Engineering Tools?

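Federated Learning (FL) is a machine learning approach in which multiple participants train a shared model while their data stays on the devices or servers where it was collected. Instead of sending raw data to a central server, each participant trains locally and shares only model updates, which a server aggregates into a global model. Keeping raw data local reduces (though does not eliminate) the exposure of private or commercially sensitive code, making FL a promising fit for open-source AI-based SE models that need access to high-quality data held by different organizations.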
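To make the mechanism concrete, here is a minimal sketch of one common FL algorithm, Federated Averaging (FedAvg), on a toy linear-regression task. The model, the synthetic client data, and the helper names (`local_train`, `fedavg_round`, `make_client`) are illustrative assumptions rather than the researchers' setup; this summary does not name the specific algorithms they evaluated.

```python
import random
from typing import List, Tuple

# Hypothetical toy setup: each client holds private (x, y) pairs for a 1-D
# linear model y ~ w * x + b. Raw data never leaves the client; only the
# locally updated parameters (w, b) are sent to the server.
Data = List[Tuple[float, float]]
Params = Tuple[float, float]


def local_train(params: Params, data: Data, lr: float = 0.1, epochs: int = 5) -> Params:
    """Run a few epochs of full-batch gradient descent on one client's data (MSE loss)."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fedavg_round(global_params: Params, clients: List[Data]) -> Params:
    """One FedAvg round: every client trains locally, then the server
    averages the returned parameters weighted by local dataset size."""
    updates = [(local_train(global_params, data), len(data)) for data in clients]
    total = sum(n for _, n in updates)
    w = sum(p[0] * n for p, n in updates) / total
    b = sum(p[1] * n for p, n in updates) / total
    return w, b


def make_client(n: int, seed: int) -> Data:
    """Generate hypothetical synthetic data from y = 3x + 1 plus small noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in xs]


if __name__ == "__main__":
    clients = [make_client(n, seed) for seed, n in enumerate([50, 120, 30])]
    params: Params = (0.0, 0.0)
    for _ in range(100):
        params = fedavg_round(params, clients)
    print(f"w={params[0]:.2f}, b={params[1]:.2f}")
```

Weighting by local dataset size means clients with more data pull the global model further toward their own updates. The loop converges near the true parameters here because all three clients sample from the same underlying relationship; with heterogeneous client data, local updates can drift apart and convergence becomes harder.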

The researchers have proposed a governance framework centered on FL, designed to foster the joint development and maintenance of open-source AI code models. The framework lets organizations collaborate on AI-based SE models and draw on more sources of high-quality data, while respecting privacy and commercial sensitivities.

In addition to the governance framework, the researchers also present guidelines for developers on AI-based SE tool collaboration. These guidelines cover data requirements, model architecture updating strategies, and version control. Given the significant influence of data characteristics on FL, the researchers also examine the effect of code data heterogeneity on FL performance.
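As an illustration of the version-control guideline, the sketch below records each aggregated global model as a content-addressed version that links to its parent and lists the contributing organizations, much like a commit history. The schema (`ModelVersion`, `ModelLedger`, and their fields) is a hypothetical design for illustration, not the guideline the researchers actually give.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class ModelVersion:
    """Hypothetical record describing one released global model."""
    round_id: int
    parent: Optional[str]      # version_id of the previous global model (None for the first)
    clients: Tuple[str, ...]   # organizations whose updates were aggregated this round
    algorithm: str             # aggregation algorithm name, e.g. "FedAvg"
    params_digest: str         # SHA-256 of the serialized model parameters

    @property
    def version_id(self) -> str:
        # Content-addressed ID: changing any field, including the parent, changes the ID.
        payload = json.dumps(
            [self.round_id, self.parent, list(self.clients), self.algorithm, self.params_digest]
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


class ModelLedger:
    """Hypothetical append-only history of global model versions."""

    def __init__(self) -> None:
        self.versions: Dict[str, ModelVersion] = {}
        self.head: Optional[str] = None

    def commit(self, round_id: int, clients: List[str], algorithm: str, params: List[float]) -> str:
        """Record a new global model whose parent is the current head."""
        digest = hashlib.sha256(json.dumps(params).encode("utf-8")).hexdigest()
        version = ModelVersion(round_id, self.head, tuple(sorted(clients)), algorithm, digest)
        self.versions[version.version_id] = version
        self.head = version.version_id
        return self.head

    def history(self) -> List[ModelVersion]:
        """Walk parent links from the newest version back to the first."""
        chain: List[ModelVersion] = []
        current = self.head
        while current is not None:
            version = self.versions[current]
            chain.append(version)
            current = version.parent
        return chain


if __name__ == "__main__":
    ledger = ModelLedger()
    ledger.commit(1, ["org-a", "org-b"], "FedAvg", [0.10, -0.20])
    ledger.commit(2, ["org-a", "org-b", "org-c"], "FedAvg", [0.12, -0.18])
    for v in ledger.history():
        print(v.round_id, v.version_id, v.parent, v.clients)
```

Because each ID hashes the parent ID, any participant can verify that a released model descends from a specific chain of aggregation rounds, and sorting client IDs keeps the ID independent of the order in which updates arrived.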

What are the Experimental Findings on the Use of Federated Learning?

The researchers conducted experiments to examine the potential for employing Federated Learning in the collaborative development and maintenance of AI-based software engineering models. Their experiments covered six data-distribution scenarios, four code models, and the four most common federated learning algorithms.
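Data-distribution scenarios in FL experiments are usually simulated by splitting one dataset across clients unevenly. The sketch below uses Dirichlet label skew, a widely used technique in FL research, assuming each code sample carries a categorical label such as its programming language. This summary does not say whether any of the six scenarios used this scheme, and the function names are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List


def dirichlet(alpha: float, k: int, rng: random.Random) -> List[float]:
    """Sample a k-dimensional symmetric Dirichlet(alpha) vector via normalized Gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def partition_by_label(labels: List[str], num_clients: int, alpha: float, seed: int = 0) -> List[List[int]]:
    """Split sample indices across clients with label skew controlled by alpha.

    Small alpha: each client sees mostly a few labels (highly non-IID).
    Large alpha: each client's label mix approaches the global one (near IID).
    """
    rng = random.Random(seed)
    by_label: Dict[str, List[int]] = {}
    for idx, lab in enumerate(labels):
        by_label.setdefault(lab, []).append(idx)

    clients: List[List[int]] = [[] for _ in range(num_clients)]
    for idxs in by_label.values():
        rng.shuffle(idxs)
        props = dirichlet(alpha, num_clients, rng)
        # Turn this label's proportions into monotone cut points over its samples.
        cuts, acc = [], 0.0
        for p in props[:-1]:
            acc += p
            cuts.append(int(round(acc * len(idxs))))
        bounds = [0] + cuts + [len(idxs)]
        for c in range(num_clients):
            clients[c].extend(idxs[bounds[c]:bounds[c + 1]])
    return clients


if __name__ == "__main__":
    labels = ["python"] * 300 + ["java"] * 300 + ["c"] * 300
    for alpha in (0.1, 100.0):
        parts = partition_by_label(labels, num_clients=3, alpha=alpha)
        print(alpha, [dict(Counter(labels[i] for i in p)) for p in parts])
```

Sweeping `alpha` gives a single knob for moving from near-IID to strongly skewed splits, which is one way to study how code data heterogeneity affects FL performance.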

The results suggest that FL holds promise for this kind of collaborative development. The researchers also discuss the key issues that must be addressed in the co-construction process and outline directions for future research.

What are the Limitations of Current Open-Source Code Models?

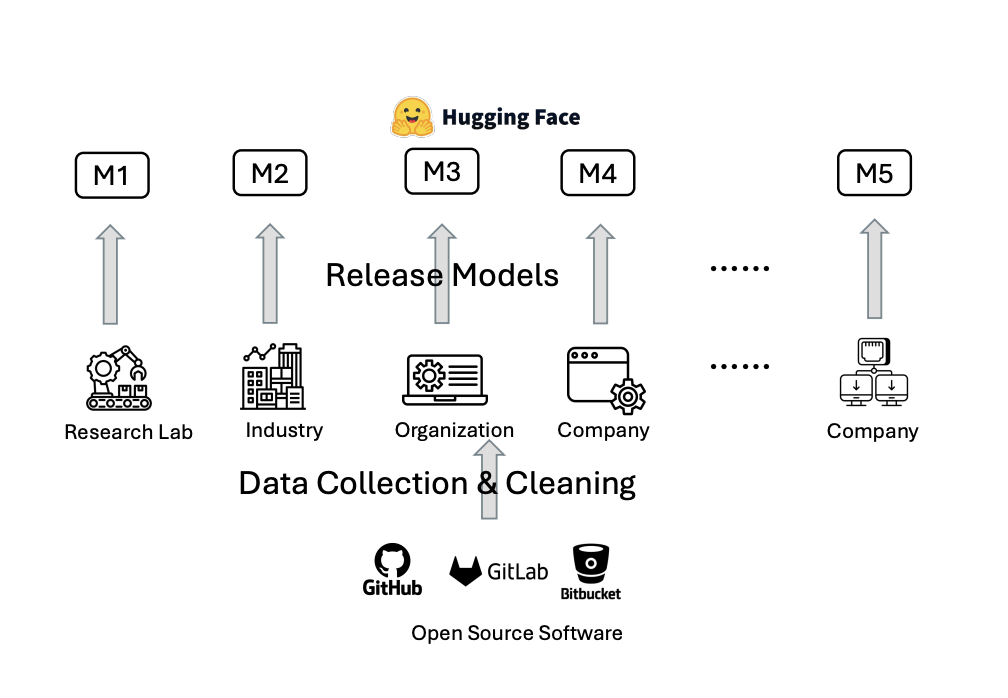

Current open-source code models are mainly developed and published by a single team using open-source data. This way of developing and sharing models has three significant limitations: limited access to high-quality code data, a lack of strong community support, and inefficient use of training hardware resources.

Firstly, data quality is crucial for the performance of AI models. Most open-source code models rely on publicly available code datasets. However, the growth rate of high-quality open-source code data has not kept up with the pace at which LLM capabilities are improving.

Secondly, although open-source code models are widely applied in many areas of software engineering, such as vulnerability detection, they still lack the strong open-source community support typical of traditional software engineering tools. These models also resemble isolated information islands: individual entities independently train and release models using open-source data.

Lastly, the current single-party participation model is economically inefficient: different developers or teams redundantly train models on the same datasets, even though training AI models consumes substantial resources.

What are the Future Research Directions for Open-Source AI-based Software Engineering Tools?

The researchers highlight the need for future research to address the key issues in the co-construction process of AI-based software engineering models. They also emphasize the need for more high-quality code data to develop more powerful code models.

Furthermore, they call for stronger open-source community support for these models, which currently lags behind the support enjoyed by traditional software engineering tools. They also highlight the need for more efficient participation models that reduce redundant training and resource consumption.

In conclusion, the researchers propose a governance framework centered on federated learning to foster the joint development and maintenance of open-source AI code models. They believe that this approach, along with stronger community support and more efficient participation models, can overcome the current limitations and significantly advance the field of AI-based software engineering.

Publication details: “Open-Source AI-based SE Tools: Opportunities and Challenges of Collaborative Software Learning”

Publication Date: 2024-04-09

Authors: Zhengxi Lin, Wei Ma, Tao Lin, Yaowen Zheng, et al.

Source: arXiv (Cornell University)

DOI: https://doi.org/10.48550/arxiv.2404.06201