BlueQubit has developed a novel method for loading data in quantum machine learning (QML) applications that reduces the impact of hardware noise and error. Using Q-CTRL’s AI-driven error suppression package, Fire Opal, the company loaded complex probability distributions onto as many as 20 qubits and demonstrated up to an 8x improvement in performance.

The method, called Hierarchical Learning for Quantum ML, breaks the learning process into steps, starting with a few qubits and gradually adding more. This approach avoids common challenges of loading data with deep circuits. BlueQubit tested the technique on three IBM Quantum device configurations and saw significant improvements in data-loading outcomes.

Quantum Machine Learning: Overcoming Data Loading Challenges with Fire Opal

Quantum machine learning (QML) is a rapidly evolving field that seeks to leverage the computational power of quantum computers to enhance machine learning algorithms. However, the process of loading classical data into quantum systems, a crucial step in QML applications, is often hindered by hardware noise and error. This article discusses how BlueQubit, a quantum computing company, used Fire Opal, an AI-driven error suppression package developed by Q-CTRL, to mitigate these issues and improve data loading performance.

Innovating Data-Loading Techniques for Quantum Machine Learning

The advent of AI has spurred interest in quantum machine learning techniques, which promise to reduce computational burdens and improve training efficiency. In QML applications, the data representing the problem must be encoded into a quantum state, which serves as an input for subsequent quantum operations. However, quantum computers are not efficient “big data” systems, making the development of efficient and accurate data-loading routines crucial for realizing the potential of quantum computing.
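As a minimal illustration (an assumption, not BlueQubit’s code), one common target for loading a probability distribution p over 2^n outcomes is the n-qubit state whose amplitudes are √p(x). Measuring that state in the computational basis then reproduces p by the Born rule. The sketch below builds those amplitudes for a toy 2-qubit distribution; the helper name is hypothetical. The state carries 2^n amplitudes, which is why preparing an arbitrary state exactly becomes expensive as n grows.

```python
import math

def amplitudes_from_distribution(probs):
    """Hypothetical helper: real amplitudes sqrt(p(x)) for a distribution over 2**n outcomes."""
    n_outcomes = len(probs)
    n_qubits = n_outcomes.bit_length() - 1
    if n_outcomes == 0 or n_outcomes != 2 ** n_qubits:
        raise ValueError("the number of outcomes must be a power of two")
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probs must be a normalized probability distribution")
    return [math.sqrt(p) for p in probs]

# Toy 2-qubit distribution over the basis states |00>, |01>, |10>, |11>.
probs = [0.1, 0.4, 0.4, 0.1]
amps = amplitudes_from_distribution(probs)

# Born rule: measurement probabilities are the squared amplitudes.
born = [a ** 2 for a in amps]
print([round(b, 6) for b in born])
```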

Current data-loading techniques rely primarily on variational algorithms, which are compatible with near-term hardware. However, these methods often struggle to converge, and the number of operations they require scales poorly as qubits are added. To address these issues, BlueQubit developed a novel data-loading method that avoids both “barren plateaus” (regions of the training landscape where gradients become vanishingly small, typically shrinking exponentially with the number of qubits) and the exponential growth of required operations with data size.

Hierarchical Learning for Quantum Machine Learning

BlueQubit’s method employs a type of QML algorithm known as a Quantum Circuit Born Machine (QCBM), so called because its output distribution is given by the Born rule: the probability of each measured bitstring is the squared magnitude of its amplitude. The goal of QCBMs and other generative modeling techniques is to learn the overall probability distribution of a dataset from only a sample of its data points. This makes them efficient for data loading: the model is trained on a subset of the data, and the learned distribution stands in for the rest.
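To make the QCBM idea concrete, here is a small pure-Python sketch under stated assumptions. It uses a layered ansatz of RY rotations followed by a CNOT chain, an illustrative choice since BlueQubit’s actual circuit is not described here, and simulates it as a real-valued statevector. The model distribution comes from the Born rule. The parameters are fitted by central-difference gradient descent on the squared error to an empirical histogram built from a sample of a hidden target, mirroring the idea of learning from sample points only. All function names, the loss and the hyperparameters are hypothetical.

```python
import math
import random

def apply_ry(state, n, q, theta):
    """Apply RY(theta) to qubit q of an n-qubit real statevector (qubit 0 = most significant bit)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    bit = 1 << (n - 1 - q)
    new = state[:]
    for i in range(len(state)):
        if not i & bit:  # i has qubit q = 0; j is its partner with qubit q = 1
            j = i | bit
            new[i] = c * state[i] - s * state[j]
            new[j] = s * state[i] + c * state[j]
    return new

def apply_cnot(state, n, control, target):
    """Swap amplitudes that differ only in the target bit whenever the control bit is 1."""
    cbit, tbit = 1 << (n - 1 - control), 1 << (n - 1 - target)
    new = state[:]
    for i in range(len(state)):
        if i & cbit and not i & tbit:
            j = i | tbit
            new[i], new[j] = state[j], state[i]
    return new

def qcbm_probs(params, n, layers):
    """Born-rule output distribution of the layered RY + CNOT-chain circuit."""
    state = [0.0] * (2 ** n)
    state[0] = 1.0  # start in |0...0>
    for layer in range(layers):
        for q in range(n):
            state = apply_ry(state, n, q, params[layer * n + q])
        for q in range(n - 1):
            state = apply_cnot(state, n, q, q + 1)
    return [a * a for a in state]  # amplitudes stay real, so |a|^2 = a^2

def squared_error(p, t):
    return sum((pi - ti) ** 2 for pi, ti in zip(p, t))

def train(target, n, layers, steps=500, lr=0.05, eps=1e-4):
    """Central-difference gradient descent on the squared error (illustrative optimizer)."""
    params = [0.1] * (n * layers)
    for _ in range(steps):
        grad = []
        for k in range(len(params)):
            plus, minus = params[:], params[:]
            plus[k] += eps
            minus[k] -= eps
            diff = (squared_error(qcbm_probs(plus, n, layers), target)
                    - squared_error(qcbm_probs(minus, n, layers), target))
            grad.append(diff / (2 * eps))
        params = [p - lr * g for p, g in zip(params, grad)]
    return params

# Learn from a finite sample of a hidden 2-qubit distribution, not from the distribution itself.
rng = random.Random(0)
hidden = [0.1, 0.4, 0.4, 0.1]
samples = rng.choices(range(4), weights=hidden, k=2000)
empirical = [samples.count(x) / len(samples) for x in range(4)]

n, layers = 2, 2
initial_loss = squared_error(qcbm_probs([0.1] * (n * layers), n, layers), empirical)
params = train(empirical, n, layers)
final_loss = squared_error(qcbm_probs(params, n, layers), empirical)
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
```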

BlueQubit further enhanced this process by breaking down the learning of the probability distribution into a series of steps, starting with fewer qubits and gradually introducing more. This method, known as Hierarchical Learning for Quantum ML, avoids some of the typical challenges of data loading with deep circuits, such as the emergence of barren plateaus in the training process.
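The coarse-to-fine idea can be sketched in plain Python under an assumption about how the stages are defined. Here, each stage’s target is the marginal of the full distribution over its m most significant qubits, so early stages see only the broad shape and later stages add finer detail. The warm start is likewise an illustrative assumption rather than BlueQubit’s exact schedule: each new qubit is appended in the |+> state, so every coarse bin is split evenly. Function names are hypothetical.

```python
import math

def coarse_grain(probs, n, m):
    """Marginal distribution over the m most significant of n qubits."""
    if len(probs) != 2 ** n or not 1 <= m <= n:
        raise ValueError("need 2**n probabilities and 1 <= m <= n")
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probs must sum to 1")
    coarse = [0.0] * (2 ** m)
    for x, p in enumerate(probs):
        coarse[x >> (n - m)] += p  # drop the n - m least significant bits
    return coarse

def split_evenly(coarse):
    """Distribution after appending one qubit in |+>: bin x splits into bins 2x and 2x + 1."""
    fine = []
    for p in coarse:
        fine.extend([p / 2, p / 2])
    return fine

def stage_targets(probs, n, start=1, step=1):
    """Targets for a hypothetical schedule that grows the register from `start` qubits up to n."""
    sizes = list(range(start, n, step)) + [n]
    return [(m, coarse_grain(probs, n, m)) for m in sizes]

# A smooth, single-peaked 3-qubit distribution.
probs = [0.05, 0.10, 0.15, 0.20, 0.20, 0.15, 0.10, 0.05]
targets = stage_targets(probs, 3)
for m, target in targets:
    print(m, [round(t, 3) for t in target])
```

Because each stage target is an exact marginal of the next, the evenly split starting point for stage m + 1 already has the correct coarse shape, and training only has to add the new detail.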

The Impact of Hardware Noise and Error Suppression

In the quantum domain, hardware noise and error present additional challenges. Quantum states are extremely fragile: noise and gate errors cause them to decohere over the course of a circuit, and the deeper the circuit, the harder it is to complete the data-loading process before the state is corrupted. Fire Opal’s AI-driven error suppression helps mitigate these issues, allowing for more accurate and efficient data loading.
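The effect of noise on a loaded distribution can be illustrated with a deliberately crude model. This is an assumption for illustration and not how Fire Opal works. It treats accumulated error as global depolarizing noise, so the measured distribution becomes (1 - ε)p + εu, where u is the uniform distribution. It then lets ε grow with the number of gates G as 1 - (1 - e)^G for a per-gate error rate e. In this model the distance from the target grows in proportion to ε, so larger circuits drift further from it. All names and numbers are hypothetical.

```python
def depolarize(probs, eps):
    """Mix the distribution with the uniform distribution: (1 - eps) * p + eps * u."""
    u = 1 / len(probs)
    return [(1 - eps) * p + eps * u for p in probs]

def effective_error(per_gate_error, n_gates):
    """Probability that at least one of n_gates independent faults occurs (crude model)."""
    return 1 - (1 - per_gate_error) ** n_gates

def tvd(p, q):
    """Total variation distance: half the L1 distance between two distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

# A 2-qubit target with all its weight on |00> and |11>.
target = [0.5, 0.0, 0.0, 0.5]
for n_gates in (10, 100):
    eps = effective_error(0.01, n_gates)
    print(n_gates, round(eps, 3), round(tvd(target, depolarize(target, eps)), 3))
```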

Fire Opal’s Performance on Real Hardware

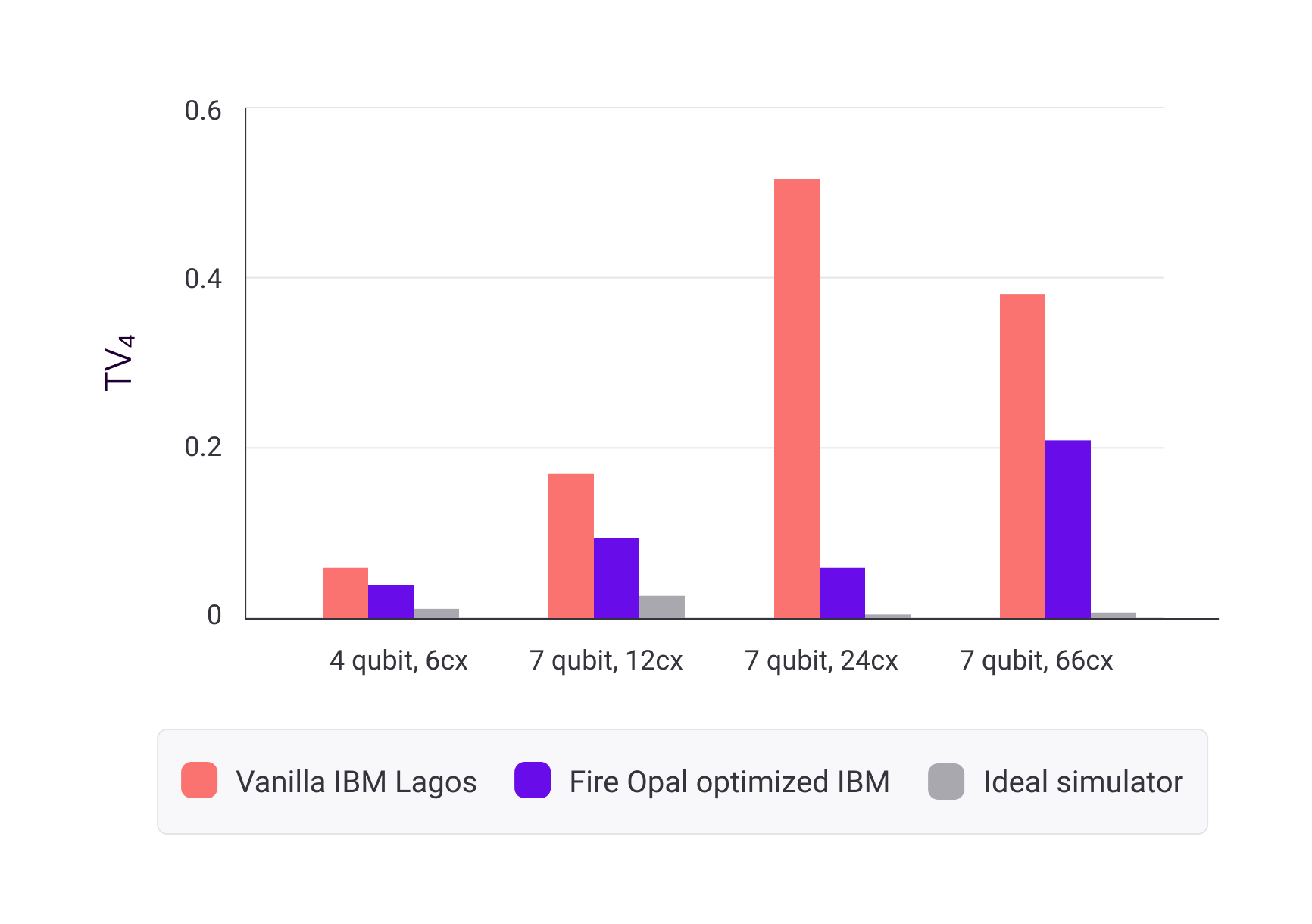

BlueQubit tested its hierarchical learning method on two different distributions using three different IBM Quantum device configurations. The results were compared using the total variation distance (TVD), which measures the dissimilarity between two probability distributions; a lower TVD means the loaded distribution is closer to the target. The tests showed that the Fire Opal-optimized circuits consistently produced better data-loading outcomes than the standard hardware runs. In one test, Fire Opal’s improvement reached 8x.
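The total variation distance between distributions p and q over the same outcomes is TVD(p, q) = ½ Σₓ |p(x) - q(x)|. It ranges from 0 (identical distributions) to 1 (disjoint supports). The sketch below shows how a hardware run might be scored against a target. It assumes shot results arrive as a dict mapping bitstrings (most significant bit first) to counts; that format, the helper names and the counts shown are hypothetical, not BlueQubit’s data.

```python
def counts_to_distribution(counts, n):
    """Convert {bitstring: shots} into a list of 2**n empirical probabilities."""
    shots = sum(counts.values())
    probs = [0.0] * (2 ** n)
    for bitstring, c in counts.items():
        probs[int(bitstring, 2)] += c / shots  # bitstring read most significant bit first
    return probs

def total_variation_distance(p, q):
    """TVD(p, q) = 0.5 * sum_x |p(x) - q(x)|, between 0 and 1."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same number of outcomes")
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

target = [0.5, 0.0, 0.0, 0.5]  # ideal 2-qubit distribution
counts = {"00": 480, "01": 30, "10": 20, "11": 470}  # hypothetical 1000-shot run
measured = counts_to_distribution(counts, 2)
print(round(total_variation_distance(target, measured), 4))  # prints 0.05
```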

Scaling to New Heights: Data Loading for 20 Qubits

BlueQubit also demonstrated data loading on up to 20 qubits, a significant challenge given the larger circuits and the complexity of the chosen distribution, a bimodal Gaussian mixture. Despite these challenges, the Fire Opal-optimized runs accurately reproduced the target distribution, capturing both of its peaks and its finer features. As the circuits grew to 20 qubits, where noise is more likely to degrade data loading, Fire Opal continued to improve performance.
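A bimodal Gaussian mixture target like the one described can be generated by evaluating the mixture density on the integer grid 0, ..., 2^n - 1 and normalizing. The sketch below does this in plain Python. The weights, means and widths are hypothetical, since the article does not give BlueQubit’s parameters. It uses n = 10 to stay fast; n = 20 (1,048,576 bins) works the same way but takes noticeably longer in pure Python.

```python
import math

def gaussian_mixture_target(n, components):
    """Normalized mixture of Gaussians on the grid 0..2**n - 1.

    components: list of (weight, mean, std), with mean and std in grid units.
    The shared 1/sqrt(2*pi) factor is dropped because normalization cancels it.
    """
    size = 2 ** n
    density = [
        sum(w * math.exp(-0.5 * ((x - mu) / sd) ** 2) / sd for w, mu, sd in components)
        for x in range(size)
    ]
    total = sum(density)
    return [d / total for d in density]

n = 10
last = 2 ** n - 1
# Hypothetical parameters: two equal-weight peaks at 30% and 70% of the range.
components = [(0.5, 0.3 * last, 0.08 * last), (0.5, 0.7 * last, 0.08 * last)]
target = gaussian_mixture_target(n, components)

# Bimodality check: the midpoint between the peaks sits well below both of them.
peak_low, peak_high, middle = round(0.3 * last), round(0.7 * last), last // 2
print(target[middle] < min(target[peak_low], target[peak_high]))
```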

Conclusion: The Potential of Fire Opal in Quantum Machine Learning

With the help of Fire Opal, BlueQubit was able to validate its new hierarchical learning approach and demonstrate that it improves data loading while mitigating the noise and error challenges that typically grow with scale. These tests confirm that by reducing circuit depth and suppressing noise, Fire Opal can address the constraints posed by current hardware and enable complex data to be loaded for new applications in quantum machine learning.
