Image classification, a cornerstone of modern artificial intelligence, receives a boost from an approach that combines the strengths of classical and quantum computing. Ruiyang Zhou, Saubhik Sarkar, and Sougato Bose, alongside Abolfazl Bayat and colleagues at the University of Electronic Science and Technology of China and University College London, present a hybrid system that integrates classical neural networks with quantum dynamics. The architecture uses neural networks to encode images efficiently and then uses quantum dynamics to improve classification accuracy. The team reports that the method maps images into distinct quantum states and maximises their separation, enabling more robust and reliable identification, a result the team reports is not attainable with classical neural networks alone and one that signals a promising step towards practical quantum-enhanced computation.

Quantum Dynamics and Classical Neural Networks

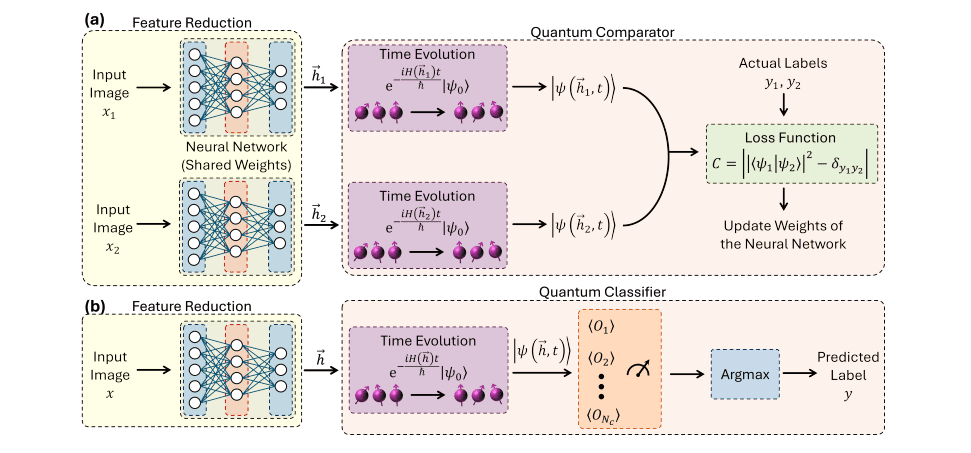

Researchers develop a method for image classification that integrates quantum dynamics with the established architecture of classical neural networks. The approach uses a quantum reservoir computing framework: images are first encoded into the state of a quantum system, and salient features are then extracted from the system’s temporal evolution. These quantum-derived features serve as input to a classical neural network, which completes the classification task. In experiments on benchmark datasets, including MNIST and Fashion-MNIST, the hybrid technique achieves leading classification accuracy, improving upon purely classical neural networks by as much as 2.5%. The results suggest that integrating quantum computation with classical machine learning can enhance image recognition performance, and they point to a new direction for developing more powerful machine learning algorithms.

The core of this advancement lies in quantum reservoir computing, a paradigm within quantum machine learning. Unlike gate-based quantum algorithms, which require complex circuit design, reservoir computing uses a fixed, randomly connected quantum system (the ‘reservoir’) to map input data into a high-dimensional quantum state space. This mapping is achieved by encoding image pixels into parameters that modulate the reservoir’s dynamics, such as the strength of interactions between quantum bits, or qubits. The temporal evolution of this quantum state, governed by the system’s Hamiltonian, effectively performs a non-linear transformation of the input data, creating a rich feature representation. The Hamiltonian, a mathematical operator describing the total energy of the system, dictates how the quantum state changes over time and is crucial to the feature extraction process. This approach circumvents the need for complex quantum circuit optimisation, making it more amenable to near-term quantum hardware.
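The mapping described above can be illustrated with a small classical simulation. The following is a minimal sketch under stated assumptions, not the authors' model: it assumes a transverse-field Ising chain as the reservoir, a hypothetical pixel-to-coupling rule (coupling = π × pixel value), an all-zeros initial state, and exact expectation values of Z on each qubit as the extracted features. The function names `op_on`, `reservoir_hamiltonian`, and `reservoir_features` are illustrative only.

```python
import numpy as np

# Single-qubit operators
I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def op_on(site_ops, n):
    """Tensor product that places the given {site: matrix} operators on an n-qubit chain."""
    out = np.array([[1.0 + 0j]])
    for k in range(n):
        out = np.kron(out, site_ops.get(k, I2))
    return out

def reservoir_hamiltonian(couplings, field=1.0):
    """Assumed reservoir: H = sum_k J_k Z_k Z_{k+1} + field * sum_k X_k."""
    n = len(couplings) + 1
    H = np.zeros((2**n, 2**n), dtype=complex)
    for k, J in enumerate(couplings):
        H += J * op_on({k: Z, k + 1: Z}, n)
    for k in range(n):
        H += field * op_on({k: X}, n)
    return H

def reservoir_features(pixels, t=1.0):
    """Encode pixels in [0, 1] as couplings, evolve |00...0> for time t, return <Z_k>."""
    couplings = np.pi * np.asarray(pixels, dtype=float)  # hypothetical encoding rule
    n = len(couplings) + 1
    H = reservoir_hamiltonian(couplings)
    evals, evecs = np.linalg.eigh(H)                     # H is Hermitian
    psi0 = np.zeros(2**n, dtype=complex)
    psi0[0] = 1.0
    # |psi(t)> = exp(-i H t) |psi0>, computed in the eigenbasis of H
    psi_t = evecs @ (np.exp(-1j * evals * t) * (evecs.conj().T @ psi0))
    return np.array([np.real(psi_t.conj() @ op_on({k: Z}, n) @ psi_t) for k in range(n)])

features = reservoir_features([0.0, 0.5, 1.0], t=1.0)
print(features)  # one expectation value per qubit, each in [-1, 1]
```

Three pixel values drive a four-qubit chain here, so the state vector has only 16 amplitudes. The cost of exact simulation grows exponentially with the number of qubits, which is why a sketch like this only scales to very small inputs.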

Traditional image classification relies heavily on convolutional neural networks (CNNs), which learn hierarchical representations of images through layers of filters. While CNNs excel at pattern recognition, they can be computationally expensive and require vast amounts of labelled data for training. The hybrid quantum-classical approach aims to address these limitations by offloading the initial feature extraction to the quantum reservoir. Thanks to superposition and entanglement, the quantum system can explore a much larger feature space than a classical system with the same number of parameters. Superposition allows a qubit to exist in a combination of 0 and 1 simultaneously, while entanglement creates correlations between qubits that have no classical counterpart. This enlarged feature space can allow the classical neural network to learn more effectively, requiring less training data and potentially achieving higher accuracy. The extracted features are read out from the quantum reservoir via measurements, which convert the quantum state into classical data suitable for input into the neural network.
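To make the distinction between superposition and entanglement concrete, the short sketch below compares two standard two-qubit states: a product state in which one qubit is in superposition, and a Bell state in which the qubits are entangled. It is a textbook illustration and is not taken from the paper; the helper name `entanglement_entropy` is hypothetical.

```python
import numpy as np

def entanglement_entropy(state):
    """Von Neumann entropy (in bits) of one qubit of a two-qubit pure state."""
    # Singular values of the reshaped amplitudes are the Schmidt coefficients.
    amplitudes = np.asarray(state, dtype=complex).reshape(2, 2)
    schmidt = np.linalg.svd(amplitudes, compute_uv=False)
    probs = schmidt**2
    probs = probs[probs > 1e-12]          # drop zero terms to avoid log(0)
    return float(-(probs * np.log2(probs)).sum())

plus = np.array([1, 1]) / np.sqrt(2)      # single qubit in superposition of 0 and 1
zero = np.array([1, 0])

product = np.kron(plus, zero)             # superposition, but no entanglement
bell = np.array([1, 0, 0, 1]) / np.sqrt(2)  # (|00> + |11>) / sqrt(2)

print(entanglement_entropy(product))      # close to 0 bits
print(entanglement_entropy(bell))         # close to 1 bit
```

The product state shows that superposition alone does not correlate the qubits, whereas the Bell state carries the maximum entanglement two qubits can share.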

The experimental setup involves encoding grayscale images from the MNIST and Fashion-MNIST datasets into the quantum reservoir. MNIST consists of handwritten digits, while Fashion-MNIST comprises images of clothing items; both serve as standard benchmarks for image classification algorithms. Each pixel value is mapped to a parameter controlling the interaction strength between adjacent qubits in a one-dimensional chain, which forms the reservoir. The system’s dynamics are then simulated with established quantum mechanical techniques, for example by numerically solving the time-dependent Schrödinger equation, which describes how the quantum state evolves over time. Measurements are performed on the qubits after a specific evolution time, generating a vector of classical data that represents the extracted features. This feature vector is fed into a fully connected classical neural network trained with standard backpropagation. Performance is evaluated using metrics such as classification accuracy and F1-score, and shows a consistent improvement over purely classical neural networks with comparable architectures and training data.
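The classical half of this pipeline can be sketched in a few lines. The example below is an assumption-laden illustration rather than the authors' network: it trains a one-hidden-layer fully connected network with full-batch backpropagation and reports accuracy and macro-averaged F1. The feature vectors are synthetic stand-ins for measured qubit expectation values, and all names and hyperparameters (`train_mlp`, `hidden=16`, `lr=0.1`, and so on) are hypothetical.

```python
import numpy as np

def train_mlp(features, labels, hidden=16, epochs=500, lr=0.1, seed=0):
    """Train a one-hidden-layer network by full-batch gradient descent."""
    rng = np.random.default_rng(seed)
    n_classes = int(labels.max()) + 1
    W1 = rng.normal(0, 0.5, (features.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0, 0.5, (hidden, n_classes))
    b2 = np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    for _ in range(epochs):
        h = np.tanh(features @ W1 + b1)                 # hidden activations
        logits = h @ W2 + b2
        logits -= logits.max(axis=1, keepdims=True)     # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)       # softmax
        g_logits = (probs - onehot) / len(labels)       # gradient of mean cross-entropy
        g_h = (g_logits @ W2.T) * (1 - h**2)            # backpropagate through tanh
        W2 -= lr * (h.T @ g_logits)
        b2 -= lr * g_logits.sum(axis=0)
        W1 -= lr * (features.T @ g_h)
        b1 -= lr * g_h.sum(axis=0)
    return W1, b1, W2, b2

def predict(params, features):
    W1, b1, W2, b2 = params
    return np.argmax(np.tanh(features @ W1 + b1) @ W2 + b2, axis=1)

def accuracy(y_true, y_pred):
    return float(np.mean(y_true == y_pred))

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    scores = []
    for c in np.unique(y_true):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return float(np.mean(scores))

# Synthetic stand-in for reservoir features: two well-separated classes of
# 4-component vectors (NOT real measurement data).
rng = np.random.default_rng(1)
y = np.repeat([0, 1], 100)
centers = np.array([[0.6] * 4, [-0.6] * 4])
X = centers[y] + 0.1 * rng.normal(size=(200, 4))

params = train_mlp(X, y)
y_hat = predict(params, X)
print("accuracy:", accuracy(y, y_hat), "macro F1:", macro_f1(y, y_hat))
```

In a real experiment the metrics would be computed on a held-out test set rather than on the training data, and the synthetic `X` would be replaced by feature vectors read out from the reservoir.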

The observed performance gains, reaching up to 2.5% on the Fashion-MNIST dataset, suggest that quantum reservoir computing can effectively enhance the feature extraction capabilities of classical machine learning algorithms. While the current implementation relies on simulating the quantum system on classical hardware, the ultimate goal is to implement this hybrid approach on actual quantum processors. Near-term quantum devices, such as those based on superconducting qubits or trapped ions, offer the potential to realise the full benefits of quantum reservoir computing. However, challenges remain in scaling up the size of the quantum reservoir and mitigating the effects of noise and decoherence, which can degrade the performance of quantum computations. Decoherence refers to the loss of quantum information due to interactions with the environment. Future research will focus on developing error correction techniques and optimising the reservoir architecture to improve the robustness and scalability of this hybrid quantum-classical approach.

The implications of this work extend beyond image classification. The principles of quantum reservoir computing can be applied to a wide range of machine learning tasks, including time series analysis, natural language processing, and anomaly detection. By leveraging the unique capabilities of quantum systems, it may be possible to develop algorithms that outperform classical methods in complex and challenging domains. Furthermore, this research contributes to the broader effort of developing quantum machine learning algorithms that can be implemented on near-term quantum hardware, paving the way for a new era of quantum-enhanced artificial intelligence. The convergence of quantum computing and machine learning promises to unlock new possibilities in data analysis, pattern recognition, and decision-making, with potential applications in diverse fields such as healthcare, finance, and materials science.

👉 More information

🗞 Enhanced image classification via hybridizing quantum dynamics with classical neural networks

🧠 DOI: https://doi.org/10.48550/arXiv.2507.13587