Quantum computing researchers are exploring early fault-tolerant quantum computing (EFTQC), a regime that covers the transition between near-term, noisy intermediate-scale quantum (NISQ) computing and future fault-tolerant quantum computing (FTQC). EFTQC aims to address the diminishing returns in quantum error correction (QEC) that characterize this transition period. By modeling these diminishing returns and designing suitable algorithms, EFTQC could potentially extend the capabilities of quantum computers. For instance, researchers have shown that an early fault-tolerant algorithm for phase estimation can extend the reach of a quantum computer by using fewer operations per circuit in exchange for more circuit repetitions.

What is Early Fault-Tolerant Quantum Computing (EFTQC)?

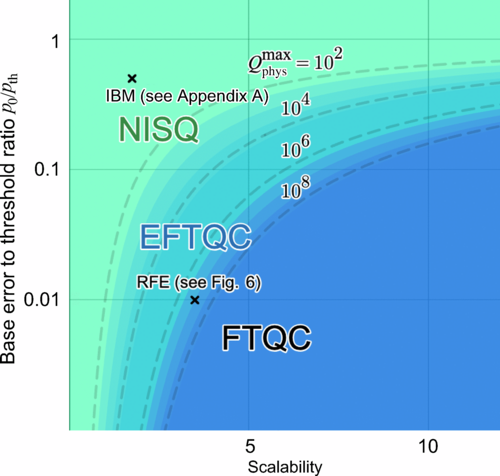

Quantum computing research has primarily focused on two approaches: near-term, noisy intermediate-scale quantum (NISQ) computing and future fault-tolerant quantum computing (FTQC). A growing body of research is exploring early fault-tolerant quantum computing (EFTQC), which aims to utilize quantum computers during the transition between the NISQ and FTQC eras. However, without agreed-upon characterizations of this transition, it is unclear how best to utilize EFTQC architectures.

The transition period is expected to be characterized by a law of diminishing returns in quantum error correction (QEC), where the architecture’s ability to maintain high-quality operations at scale determines the point at which returns diminish. Two challenges emerge from this perspective: how to model the diminishing returns of QEC as device performance continually improves, and how to design algorithms that make the best use of these devices.

To address these challenges, researchers have presented models for the performance of EFTQC architectures, capturing the diminishing returns of QEC. These models are then used to elucidate the regimes in which algorithms suited to such architectures are advantageous.
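The idea of diminishing returns can be illustrated with a toy calculation. The sketch below is not the model from the paper. It assumes a widely used surface-code heuristic for the logical error rate, roughly 2d² physical qubits per logical qubit at code distance d, and a hypothetical scalability rule in which the physical error rate grows linearly with device size. All function names and parameter values are illustrative assumptions.

```python
def physical_error_rate(n_physical, base_rate=1e-3, scale_qubits=1e5):
    """Hypothetical scalability rule: operations get noisier as the device grows."""
    return base_rate * (1.0 + n_physical / scale_qubits)


def logical_error_rate(distance, p_physical, threshold=1e-2, prefactor=0.1):
    """Surface-code heuristic: p_L ~ A * (p / p_th) ** ((d + 1) / 2)."""
    return prefactor * (p_physical / threshold) ** ((distance + 1) / 2)


def sweep_code_distance(n_logical=100, distances=range(3, 62, 2)):
    """Return (distance, physical qubits, logical error rate) for each odd distance."""
    rows = []
    for d in distances:
        n_physical = n_logical * 2 * d * d  # assumed ~2 d^2 physical qubits per logical qubit
        p_physical = physical_error_rate(n_physical)
        rows.append((d, n_physical, logical_error_rate(d, p_physical)))
    return rows


rows = sweep_code_distance()
best = min(rows, key=lambda row: row[2])

# Print every fourth row to keep the output short.
for d, n_physical, p_logical in rows[::4]:
    print(f"d={d:2d}  physical qubits={n_physical:8d}  logical error={p_logical:.2e}")
print(f"lowest logical error in this toy model at d={best[0]}")
```

In this toy setting, increasing the code distance helps at first, but each step adds physical qubits, which pushes the physical error rate toward the threshold. Beyond some distance the logical error rate stops improving and begins to rise, which is the kind of behavior a diminishing-returns model is meant to capture.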

How Can EFTQC Extend the Reach of Quantum Computers?

As a concrete example of the potential of EFTQC, researchers have shown that for the canonical task of phase estimation in a regime of moderate scalability, and using just over one million physical qubits, the reach of the quantum computer can be extended compared to the standard approach. This is achieved by using a simple early fault-tolerant quantum algorithm, which reduces the number of operations per circuit by a factor of 100 and increases the number of circuit repetitions by a factor of 10,000.

This example clarifies the role that such algorithms might play in the era of limited-scalability quantum computing. It demonstrates that EFTQC can potentially extend the capabilities of quantum computers beyond what is currently possible with standard approaches.
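The trade-off quoted above can be made concrete with some back-of-the-envelope arithmetic. The sketch below takes the two factors from the text (100 times fewer operations per circuit, 10,000 times more repetitions) and combines them under a simplifying assumption that errors strike each operation independently. The baseline of 10^9 operations per circuit and the 10% acceptable failure probability per circuit are hypothetical values chosen only for illustration, and this is not the analysis performed in the paper.

```python
import math

OPS_REDUCTION = 100           # fewer operations per circuit (factor from the text)
REPETITION_INCREASE = 10_000  # more circuit repetitions (factor from the text)


def tolerable_error_per_operation(ops_per_circuit, max_circuit_failure=0.1):
    """Largest per-operation error rate p such that 1 - (1 - p)**ops <= max_circuit_failure.

    Assumes independent errors; expm1/log1p keep the tiny result numerically accurate.
    """
    return -math.expm1(math.log1p(-max_circuit_failure) / ops_per_circuit)


standard_ops = 1e9                           # hypothetical baseline circuit size
early_ft_ops = standard_ops / OPS_REDUCTION  # shorter circuits of the early fault-tolerant algorithm

error_budget_gain = (
    tolerable_error_per_operation(early_ft_ops)
    / tolerable_error_per_operation(standard_ops)
)
total_operations_factor = REPETITION_INCREASE / OPS_REDUCTION

print(f"tolerable error per operation grows by about {error_budget_gain:.1f}x")
print(f"total operations across all repetitions grow by {total_operations_factor:.0f}x")
```

Under these assumptions, shortening each circuit relaxes the error rate each operation must achieve by roughly the same factor as the reduction in operations, while the total number of operations across all repetitions grows. The early fault-tolerant approach therefore trades longer overall runtime for less demanding error correction.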

What is the History and Future of Quantum Computing?

Quantum computers were first proposed to efficiently simulate quantum systems. It took about a decade before it was discovered that quantum phenomena such as superposition and entanglement could be leveraged to provide an exponential advantage in performing tasks unrelated to quantum mechanics. This sparked interest in using a quantum computer to perform other tasks beyond simulating quantum systems, the most famous case being Shor’s algorithm.

Around the same time, the groundbreaking discovery of quantum error-correcting codes (QECCs) set the stage for practical quantum computing. It showed that errors due to faulty hardware could be not only identified but also corrected. Two pieces of the puzzle remained: could quantum computation be done in a fault-tolerant manner, and could one rigorously prove the existence of a threshold error rate below which logical errors can be suppressed exponentially in the time and memory overhead?

How Has Quantum Computing Hardware Evolved?

On the hardware side, astonishing progress has been made across various modalities, including superconducting, ion-trap, and photonic platforms, in extending qubit coherence times and improving entangling operations. A watershed moment occurred in 2016 when IBM put the first quantum computer on the cloud, giving the public access to quantum computers. This event spurred widespread interest in finding near-term quantum algorithms that did not need the full machinery of fault tolerance.

Another significant moment occurred when the Google Quantum AI team, along with collaborators, announced their achievement of so-called quantum supremacy. They argued that their hardware accomplished a computational sampling task far faster than possible with available supercomputers.

What is the NISQ Era and How Does EFTQC Fit In?

The NISQ era is characterized by quantum devices that are too large to be simulated classically but also too small to implement quantum error correction. Because these devices still suffer from errors, the field of quantum error mitigation arose to reduce the impact of noise on near-term applications without the overhead of full error correction.

EFTQC fits into this picture by providing a way to utilize quantum computers during the transition from NISQ to FTQC. By modeling the diminishing returns of QEC and designing algorithms that make the best use of these devices, EFTQC can potentially extend the capabilities of quantum computers and play a significant role in the era of limited-scalability quantum computing.

Publication details: “Early Fault-Tolerant Quantum Computing”

Publication Date: 2024-06-17

Authors: Amara Katabarwa, Katerina Gratsea, Athena Caesura, Peter D. Johnson, et al.

Source: PRX Quantum 5, 020101

DOI: https://doi.org/10.1103/PRXQuantum.5.020101