The world of computation is on the verge of a profound transformation. Classical computers have served as the backbone of technological progress for decades, but a new paradigm is emerging: quantum computing, which promises to tackle problems that remain beyond the reach of even the most powerful classical machines.

This shift in how information is processed could unlock breakthroughs in fields such as medicine, materials science, artificial intelligence, and cryptography. This article traces the history of quantum computing, from the early theoretical foundations of quantum mechanics to today’s cutting-edge research and development.

Quantum Revolution

The Seeds of Quantum Theory: From Planck to Entanglement

The story of quantum computing begins in the early 20th century with the birth of quantum mechanics. This revolutionary theory fundamentally altered our understanding of the physical world at the atomic and subatomic levels.

The first groundwork was laid in 1900 by Max Planck, whose work on blackbody radiation led him to propose that energy is not exchanged continuously but in discrete packets called quanta. In 1905, Albert Einstein extended this idea to explain the photoelectric effect, proposing that light itself consists of discrete quanta of energy, later named photons.

In 1913, Niels Bohr proposed a model of the atom in which electrons occupy only specific, discrete energy levels, emitting or absorbing a quantum of light when they jump between them. These early discoveries marked a radical departure from classical physics and paved the way for understanding the peculiar behavior of the quantum realm.
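To make the idea of quantized levels concrete, here is a minimal Python sketch of the Bohr model for hydrogen. It assumes the textbook formula E_n = −13.6 eV / n² and Planck’s relation E = hν (rewritten as λ = hc/E), with rounded constants; the function names are illustrative and do not come from any library.

```python
# Rounded physical constants (assumed values, for illustration only).
RYDBERG_EV = 13.6    # binding energy of hydrogen's ground state, in eV
HC_EV_NM = 1239.84   # Planck constant times speed of light, in eV*nm


def bohr_level(n: int) -> float:
    """Energy of the n-th Bohr level of hydrogen: E_n = -13.6 eV / n^2."""
    return -RYDBERG_EV / n ** 2


def emitted_photon(n_upper: int, n_lower: int) -> tuple[float, float]:
    """Energy (eV) and wavelength (nm) of the photon emitted in a jump n_upper -> n_lower."""
    energy = bohr_level(n_upper) - bohr_level(n_lower)  # one quantum of energy, E = h * nu
    wavelength = HC_EV_NM / energy                       # lambda = c / nu = h * c / E
    return energy, wavelength


lyman_alpha = emitted_photon(2, 1)
balmer_alpha = emitted_photon(3, 2)
print(f"n=2 -> n=1: {lyman_alpha[0]:.2f} eV, {lyman_alpha[1]:.1f} nm")
print(f"n=3 -> n=2: {balmer_alpha[0]:.2f} eV, {balmer_alpha[1]:.1f} nm")
```

With these rounded constants, the drop from n = 2 to n = 1 releases about 10.2 eV (roughly 121.6 nm, in the ultraviolet), and the drop from n = 3 to n = 2 gives roughly 656 nm, the red Balmer-alpha line of hydrogen.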

One of the principles of quantum mechanics that most directly underpins quantum computing is superposition. A classical system is always in one definite state, whereas a quantum system, such as an electron or a photon, can exist in a combination of several states at once. Consider a coin flip: in the classical world, the coin lands either heads or tails.

In the quantum world, by contrast, a qubit can be in a superposition of “0” and “1” until it is measured. Mathematically, this is written as a linear combination of the basis states, |ψ⟩ = α|0⟩ + β|1⟩, where the complex amplitudes α and β satisfy |α|² + |β|² = 1; a measurement yields 0 with probability |α|² and 1 with probability |β|². This ability to hold a weighted combination of possibilities is a key attribute of quantum computing systems.

Describing the state of n qubits requires 2^n amplitudes, which lets quantum computers approach problems in fundamentally different ways, although measuring n qubits still yields at most n classical bits. Superposition is maintained only while the quantum system remains unobserved and isolated from its environment; once a measurement is made, the state “collapses” into one of the definite basis states. This behavior is deeply non-intuitive: a quantum object can seemingly be in multiple locations or have multiple properties at once, which challenges our everyday experience.
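Superposition and collapse can be sketched as a tiny state-vector simulation in Python. This is a minimal illustration, assuming a single qubit stored as two complex amplitudes; the helper names (`normalize`, `probabilities`, `measure`) are hypothetical and do not come from any quantum SDK.

```python
import math
import random

# One qubit |psi> = alpha|0> + beta|1>, stored as two complex amplitudes.


def normalize(alpha: complex, beta: complex) -> tuple[complex, complex]:
    """Rescale so that |alpha|^2 + |beta|^2 = 1."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    return alpha / norm, beta / norm


def probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Born rule: P(0) = |alpha|^2 and P(1) = |beta|^2."""
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(alpha: complex, beta: complex, rng: random.Random) -> tuple[int, tuple[complex, complex]]:
    """Sample an outcome and return it together with the collapsed post-measurement state."""
    p0, _ = probabilities(alpha, beta)
    if rng.random() < p0:
        return 0, (1 + 0j, 0j)  # collapsed onto |0>
    return 1, (0j, 1 + 0j)      # collapsed onto |1>


rng = random.Random(7)
alpha, beta = normalize(1 + 0j, 1 + 0j)  # equal superposition (|0> + |1>)/sqrt(2)
p0, p1 = probabilities(alpha, beta)

# Measure 10,000 freshly prepared copies and count how often the result is 1.
ones = sum(measure(alpha, beta, rng)[0] for _ in range(10_000))

outcome, collapsed = measure(alpha, beta, rng)
repeat, _ = measure(*collapsed, rng)  # re-measuring the collapsed state
print(f"P(0)={p0:.3f}, P(1)={p1:.3f}, ones={ones}, outcome={outcome}, repeat={repeat}")
```

Sampling the equal superposition many times gives 0 and 1 about equally often, while re-measuring a collapsed state always repeats the first result.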

Another strange yet crucial quantum phenomenon is entanglement, a connection between two or more quantum particles that persists regardless of the distance separating them. Entangled particles share a single, unified quantum state, so the results of measurements on them are correlated: measuring one particle immediately tells you what a corresponding measurement on the other will show, even if they are light-years apart. These correlations cannot, however, be used to send information faster than light, because each individual outcome is random. Albert Einstein famously referred to this as “spooky action at a distance” because of its seemingly paradoxical nature.
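A Bell state shows these correlations in miniature. The following Python sketch, assuming a plain four-amplitude state vector for two qubits and hypothetical helper names, prepares (|00⟩ + |11⟩)/√2 with a Hadamard gate followed by a CNOT gate and then samples joint measurements.

```python
import math
import random

SQRT1_2 = 1 / math.sqrt(2)

# Two-qubit state as [a00, a01, a10, a11]; the first qubit is the left bit of each label.


def hadamard_first(state: list[float]) -> list[float]:
    """Apply a Hadamard gate to the first qubit."""
    a00, a01, a10, a11 = state
    return [SQRT1_2 * (a00 + a10), SQRT1_2 * (a01 + a11),
            SQRT1_2 * (a00 - a10), SQRT1_2 * (a01 - a11)]


def cnot(state: list[float]) -> list[float]:
    """CNOT with the first qubit as control: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def measure_both(state: list[float], rng: random.Random) -> tuple[int, int]:
    """Sample a basis state |q0 q1> with probability |amplitude|^2."""
    probs = [abs(a) ** 2 for a in state]
    index = rng.choices(range(4), weights=probs)[0]
    return index >> 1, index & 1


bell = cnot(hadamard_first([1.0, 0.0, 0.0, 0.0]))  # (|00> + |11>)/sqrt(2)
rng = random.Random(1)
samples = [measure_both(bell, rng) for _ in range(1000)]
print(samples[:10])
```

Every sampled pair agrees, yet each qubit on its own reads 0 or 1 at random, which is why the correlation cannot carry a message.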

Entanglement is not merely a theoretical curiosity; it is a fundamental resource in quantum information theory, and most researchers believe it is necessary for realizing the full potential of quantum computing. Superposition lets individual qubits occupy multiple states at once, while entanglement links qubits together so that their joint state cannot be described qubit by qubit, giving rise to a rich computational space. The development of quantum mechanics was a gradual process involving many scientists over several decades, and it provided the essential theoretical groundwork for quantum computing.

Conceptualizing the Quantum Computer: Early Ideas and Models

Over time, researchers realized that classical computers might struggle to simulate quantum systems, because the computational resources required grow exponentially with the size of the system being simulated. This realization motivated the search for different computational paradigms. In a pivotal 1981 lecture, physicist Richard Feynman proposed a revolutionary idea: building computers that harness the principles of quantum physics to simulate quantum phenomena.

He argued that nature itself operates according to quantum mechanical laws, so a faithful simulation of nature would require a quantum approach. Feynman envisioned computers in which individual atoms or particles serve as the fundamental units of computation. This vision was crucial: it shifted the focus from simply understanding the intricacies of quantum mechanics to actively exploring its potential for computation.

Working along similar lines, Paul Benioff had already published, in 1980, the first concrete theoretical model of a quantum computer: a quantum mechanical model of a Turing machine, the theoretical construct that forms the basis of classical computer science. By providing a Schrödinger equation description of a Turing machine, Benioff showed that a computer could, in principle, operate under the laws of quantum mechanics.

He also explored reversible quantum computing, showing that computations could, in principle, be performed without dissipating energy. Benioff’s work provided an essential theoretical foundation, demonstrating that quantum computing was not a fanciful notion but a genuine possibility grounded in the fundamental laws of physics. Around the same time, Yuri Manin also briefly motivated the idea of quantum computation.

Expanding on these initial concepts, David Deutsch introduced the idea of a universal quantum computer in 1985. He proposed that quantum mechanics could be leveraged to solve certain computational problems significantly faster than any classical computer. Deutsch’s work went beyond simulating quantum systems and began to explore whether quantum algorithms could outperform their classical counterparts.

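In 1992, together with Richard Jozsa, he proposed the Deutsch-Jozsa algorithm, an early example of a problem that a quantum computer can solve efficiently but that no deterministic classical algorithm can solve without exponentially many queries in the worst case. Given a function promised to be either constant or balanced (returning 0 for exactly half of its inputs), the quantum algorithm decides which with a single query, whereas a deterministic classical algorithm may need 2^(n−1) + 1 queries for n-bit inputs. This marked an important step in understanding the computational complexity of quantum computers, showing that they could offer a genuine speedup for specific types of calculations, even though a randomized classical algorithm can solve this particular problem with high probability using only a few queries.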
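The Deutsch-Jozsa problem asks whether a function f on n-bit inputs, promised to be either constant or balanced (0 on exactly half of the inputs), is constant. It can be simulated classically for small n. This sketch assumes the common phase-oracle formulation, in which the oracle multiplies the amplitude of each input x by (−1)^f(x); the simulation necessarily evaluates f on every input, whereas a quantum device would apply the oracle once to the whole superposition. Function names are illustrative.

```python
import math
from typing import Callable


def hadamard_all(state: list[float]) -> list[float]:
    """Apply a Hadamard gate to every qubit (a normalized fast Walsh-Hadamard transform)."""
    state = list(state)
    step = 1
    while step < len(state):
        for start in range(0, len(state), 2 * step):
            for i in range(start, start + step):
                a, b = state[i], state[i + step]
                state[i] = (a + b) / math.sqrt(2)
                state[i + step] = (a - b) / math.sqrt(2)
        step *= 2
    return state


def deutsch_jozsa(f: Callable[[int], int], n: int) -> str:
    """Classify f: {0, ..., 2^n - 1} -> {0, 1}, promised to be constant or balanced."""
    state = [0.0] * 2 ** n
    state[0] = 1.0                                  # start in |00...0>
    state = hadamard_all(state)                     # uniform superposition over all inputs x
    # Phase oracle: multiply each amplitude by (-1)^f(x). A quantum device does this
    # with one oracle call; this classical simulation has to evaluate f for every x.
    state = [amp * (-1) ** f(x) for x, amp in enumerate(state)]
    state = hadamard_all(state)                     # interfere the branches
    p_all_zero = state[0] ** 2                      # probability of reading |00...0>
    return "constant" if p_all_zero > 0.5 else "balanced"


print(deutsch_jozsa(lambda x: 1, 4))      # constant function
print(deutsch_jozsa(lambda x: x & 1, 4))  # balanced: depends on the lowest bit
```

For a constant function all of the amplitude interferes back into |0…0⟩, while for a balanced one it cancels there exactly, so a single measurement distinguishes the two cases.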

The Algorithmic Breakthrough: Shor’s and Beyond

A pivotal moment in the history of quantum computing arrived in 1994 with Peter Shor’s algorithm, which showed that a quantum computer could factor large numbers exponentially faster than the best-known classical algorithms (strictly, superpolynomially faster, since the best classical methods run in sub-exponential time). The implications for modern cryptography were profound. Many widely used public-key encryption systems, such as RSA, rely on the computational difficulty of factoring large numbers, so a sufficiently powerful quantum computer running Shor’s algorithm could break them. This discovery sparked immense interest and investment in the field.
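Why factoring matters for RSA can be seen in a toy example. This Python sketch uses textbook RSA with tiny, insecure primes and no padding, purely for illustration; real keys use moduli of 2048 bits or more, and trial division here stands in for whatever factoring method an attacker might have. The function name `private_key_from_factoring` is hypothetical.

```python
from math import gcd

# Toy textbook RSA with tiny primes: insecure, for illustration only.
# Real deployments use moduli of 2048 bits or more, plus padding schemes.
p, q = 61, 53
n = p * q                    # public modulus
phi = (p - 1) * (q - 1)      # easy to compute only if you know p and q
e = 17                       # public exponent, coprime to phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)          # private exponent: modular inverse of e (Python 3.8+)

message = 65
ciphertext = pow(message, e, n)    # encrypt with the public key (n, e)
decrypted = pow(ciphertext, d, n)  # decrypt with the private key d


def private_key_from_factoring(n: int, e: int) -> int:
    """An attacker who can factor n can recompute d from the public key alone."""
    # Trial division stands in for a factoring algorithm; it is hopeless at real key sizes.
    p = next(k for k in range(2, n) if n % k == 0)
    q = n // p
    return pow(e, -1, (p - 1) * (q - 1))


stolen_d = private_key_from_factoring(n, e)
print(decrypted, pow(ciphertext, stolen_d, n))
```

Knowing the two prime factors is all it takes to rebuild the private key, which is exactly the step Shor’s algorithm would make fast.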

The algorithm reduces factoring to period finding. For the number N to be factored and a randomly chosen integer a that shares no factor with N, the function f(x) = a^x mod N is periodic, and its period r (the order of a modulo N) usually reveals a factor of N. The quantum part of the algorithm prepares a superposition over many values of x and uses modular exponentiation to compute f(x) for all of them in superposition.

Applying the Quantum Fourier Transform then reveals the periodic structure hidden in this state: measurement yields a value from which r can be extracted by classical post-processing. If r is even and a^(r/2) is not congruent to −1 modulo N, then gcd(a^(r/2) − 1, N) and gcd(a^(r/2) + 1, N) are nontrivial factors of N. Shor’s algorithm was a powerful proof of concept, demonstrating that quantum computers could in principle offer a significant computational advantage on a real-world problem believed to be intractable for classical computers.
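The classical reduction at the heart of Shor’s algorithm can be sketched in Python. In this minimal illustration, the period-finding step, which is the part a quantum computer performs with the Quantum Fourier Transform, is replaced by brute force, so it is only practical for tiny numbers. It assumes n is odd, composite, and not a prime power; the function names are hypothetical.

```python
import random
from math import gcd


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a^r = 1 (mod n), assuming gcd(a, n) = 1.

    This is the step Shor's algorithm performs with the quantum Fourier transform;
    here it is brute-forced classically.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_sketch(n: int, rng: random.Random) -> tuple[int, int]:
    """Return a nontrivial factor pair of n via order finding."""
    while True:
        a = rng.randrange(2, n)
        g = gcd(a, n)
        if g > 1:
            return g, n // g          # the random guess already shares a factor with n
        r = find_order(a, n)
        if r % 2 == 1:
            continue                  # need an even order; retry with a new a
        y = pow(a, r // 2, n)         # y^2 = 1 (mod n) and y != 1 because r is minimal
        if y == n - 1:
            continue                  # y = -1 (mod n) only yields trivial factors
        p = gcd(y - 1, n)
        return p, n // p


print(find_order(7, 15))                           # period of 7^x mod 15
print(shor_classical_sketch(15, random.Random(0)))
```

For n = 15 and a = 7, the order is r = 4, 7² mod 15 = 4, and gcd(4 − 1, 15) = 3 recovers the factor pair 3 × 5.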

Two years later, in 1996, Lov Grover developed another significant quantum algorithm, now known as Grover’s algorithm, for searching unstructured data. To find a marked item among N possibilities, a classical search needs on the order of N checks, whereas Grover’s algorithm needs only about √N queries. This quadratic speedup is smaller than the exponential speedup offered by Shor’s algorithm, but it still represents a substantial advantage for many search and optimization problems that arise across industries. Grover’s algorithm demonstrated that the potential of quantum computers extended beyond number theory and cryptography.
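Grover’s amplitude amplification can be simulated directly on a list of amplitudes. This sketch assumes a single marked item and the standard choice of about (π/4)√N iterations, each consisting of an oracle sign flip followed by an inversion about the mean; `grover_search` is an illustrative name, not an optimized simulator or a library API.

```python
import math


def grover_search(n_qubits: int, marked: int) -> tuple[int, float]:
    """Simulate Grover's algorithm over N = 2^n_qubits items with one marked index.

    Returns the number of oracle calls used and the final probability of measuring `marked`.
    """
    size = 2 ** n_qubits
    amps = [1 / math.sqrt(size)] * size                      # uniform superposition over all items
    iterations = math.floor(math.pi / 4 * math.sqrt(size))   # about (pi/4) * sqrt(N) iterations
    for _ in range(iterations):
        amps[marked] = -amps[marked]                         # oracle: flip the marked item's sign
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]                  # diffusion: inversion about the mean
    return iterations, amps[marked] ** 2


calls, p_success = grover_search(10, marked=123)
print(f"{calls} oracle calls, success probability {p_success:.4f}")
```

For N = 1024 items, 25 iterations raise the probability of measuring the marked item above 99%, whereas a classical search would inspect about 512 items on average.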

These algorithmic breakthroughs, together with earlier results such as the Deutsch-Jozsa and Bernstein-Vazirani algorithms, laid the crucial groundwork for the study of quantum computation. They provided concrete examples of how the unique properties of quantum mechanics could be harnessed to solve computational problems more efficiently than classical methods, fueling the burgeoning field and inspiring researchers to develop more complex quantum algorithms.

Building the First Qubits: Early Hardware Implementations

While the theoretical foundations and the promise of quantum algorithms were being established, physically realizing qubits, the fundamental building blocks of quantum computers, remained a significant challenge. Qubits are inherently fragile and highly susceptible to disturbances from their environment, which cause them to lose their quantum properties. This phenomenon, known as decoherence, leads to errors in computation. Despite these challenges, researchers began to make progress in implementing physical systems that could behave as qubits.

One of the first successful platforms for building qubits used trapped ions. Ions, or electrically charged atoms, can be confined and suspended in free space using electromagnetic fields. Quantum information is typically stored in stable electronic states of each ion, and lasers or microwaves are used to perform quantum operations.

A significant milestone in this area was the first experimental demonstration of a quantum logic gate: in 1995, Christopher Monroe and David J. Wineland at the National Institute of Standards and Technology (NIST) realized a controlled-NOT (CNOT) gate using trapped ions. This achievement proved that individual qubits could be controlled and manipulated, a crucial step towards building functional quantum computers.

Trapped ions are known for their long coherence times and high-fidelity operations, making them a promising platform for quantum computing. The NIST experiment followed a proposal made earlier the same year by Ignacio Cirac and Peter Zoller, who described the first scheme for implementing a CNOT gate specifically with trapped ions.

Another leading platform for qubit development emerged with the use of superconducting circuits. These circuits are made from superconducting materials that exhibit zero electrical resistance when cooled to extremely low temperatures.

Quantum behavior in these circuits is achieved using Josephson junctions, which provide a non-linear inductance. This non-linearity makes the circuit’s energy levels unevenly spaced, so that its two lowest levels can be addressed on their own as a qubit. The first experimental realization of a superconducting qubit, known as the Cooper pair box, was reported in 1999. Superconducting qubits offer the potential for scalability because they can leverage fabrication techniques developed for the semiconductor industry, which makes them attractive for building more complex quantum processors. Major technology companies have invested heavily in this approach, further accelerating its development.

While trapped ions and superconducting circuits became the dominant platforms, other early qubit implementations were also explored. Nuclear Magnetic Resonance (NMR) utilized the magnetic properties of atomic nuclei to create and manipulate qubits. In 2000, a significant milestone was reached when the first working 5-qubit NMR computer was demonstrated.

In 2001, Shor’s algorithm was executed experimentally for the first time, factoring the number 15 on an NMR-based quantum computer. Although NMR played a significant role in early demonstrations of quantum algorithms, it proved difficult to scale, and trapped ions and superconducting circuits have since shown greater promise for reaching the larger numbers of qubits needed for more complex computations.

Taming the Noise: The Emergence of Quantum Error Correction

High error rates, caused by noise and decoherence, are a critical hurdle on the path towards practical and reliable quantum computers. Errors are extremely rare in classical computers, but quantum computers are far more susceptible to them because quantum states are delicate and difficult to isolate from environmental interference.

Errors in quantum computers can take the form of bit flips, in which |0⟩ becomes |1⟩ or vice versa, and phase flips, which reverse the sign of the relative phase between the |0⟩ and |1⟩ components of a qubit’s state and have no classical counterpart. To overcome this fundamental limitation, quantum error correction (QEC) techniques became essential.

The first theoretical proposals for quantum error correction emerged in the mid-1990s. Peter Shor is credited with pioneering work in this area, publishing his groundbreaking error-correcting codes in 1995 and 1996, and Andrew Steane made significant contributions around the same time with the Steane code. The fundamental idea behind quantum error correction is to encode the information of one logical qubit in a highly entangled state of multiple physical qubits.

This redundancy allows errors in individual physical qubits to be detected and corrected. The no-cloning theorem forbids making identical copies of an unknown quantum state, so QEC cannot simply duplicate a qubit; instead, it spreads the quantum information across several qubits and measures only collective properties (parity checks, or syndromes) that reveal which error occurred without revealing the encoded state itself. Quantum error correction thus provided a theoretical framework for addressing the inherent fragility of qubits, which was crucial for the field’s long-term viability: without effective error correction, accumulating errors would render complex quantum computations impossible.
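The simplest illustration of this idea is the three-qubit bit-flip code, one ingredient of Shor’s nine-qubit code. The Python sketch below assumes an 8-amplitude state vector and at most one bit-flip error; it reads the two parity checks directly from the state, whereas real hardware measures them with extra ancilla qubits. The function names are hypothetical.

```python
def encode(alpha: complex, beta: complex) -> list[complex]:
    """Encode alpha|0> + beta|1> as alpha|000> + beta|111> (index bits q0 q1 q2, q0 leftmost)."""
    state = [0j] * 8
    state[0b000], state[0b111] = alpha, beta
    return state


def bit_flip(state: list[complex], qubit: int) -> list[complex]:
    """Apply a Pauli-X (bit-flip) error to physical qubit 0, 1, or 2."""
    mask = 1 << (2 - qubit)
    return [state[i ^ mask] for i in range(8)]


def syndrome(state: list[complex]) -> tuple[int, int]:
    """Parities (q0 xor q1, q1 xor q2), read from any basis state with nonzero amplitude.

    Under at most one bit flip, every such basis state gives the same parities, so this
    locates the error without revealing alpha or beta. Hardware would measure these
    parities using ancilla qubits instead of inspecting the state.
    """
    i = next(i for i, amp in enumerate(state) if abs(amp) > 1e-12)
    q0, q1, q2 = (i >> 2) & 1, (i >> 1) & 1, i & 1
    return q0 ^ q1, q1 ^ q2


# Which qubit to flip back for each syndrome (None means no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(state: list[complex]) -> list[complex]:
    """Undo a single bit flip identified by the syndrome."""
    qubit = CORRECTION[syndrome(state)]
    return state if qubit is None else bit_flip(state, qubit)


logical = encode(0.6, 0.8)
recovered = [correct(bit_flip(logical, q)) for q in range(3)]
print([syndrome(bit_flip(logical, q)) for q in range(3)])
```

Because both branches α|000⟩ and β|111⟩ give the same parities, the syndrome identifies the flipped qubit without revealing α or β. A phase flip would go unnoticed by this code, which is why Shor combined it with a phase-flip code.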

Later research led to more advanced error-correcting codes, such as surface codes and other topological codes, which are considered particularly promising for fault-tolerant quantum computing, that is, for quantum computers that can carry out computations reliably even in the presence of noise. Recent years have seen significant progress in experimentally demonstrating quantum error correction and extending the lifetime of qubits. For example, Google’s researchers showed that increasing the number of physical qubits used to form a single logical qubit reduced that logical qubit’s error rate.
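Why adding physical qubits can lower the logical error rate is easiest to see with a classical repetition code. This sketch assumes independent bit flips with probability p on each of d copies and majority-vote decoding; it is a simplified stand-in, not a model of the surface codes used in recent experiments, and `logical_error_rate` is an illustrative name.

```python
from math import comb


def logical_error_rate(p: float, d: int) -> float:
    """Failure probability of a distance-d repetition code with majority-vote decoding.

    Each of the d (odd) physical copies flips independently with probability p;
    decoding fails when more than half of them flip.
    """
    return sum(comb(d, k) * p ** k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))


below = [logical_error_rate(0.01, d) for d in (1, 3, 5, 7)]  # physical error rate below threshold
above = [logical_error_rate(0.6, d) for d in (1, 3, 5, 7)]   # physical error rate above threshold
print(below)
print(above)
```

With p = 0.01 the logical error rate drops from 1% at d = 1 to about 0.03% at d = 3 and keeps falling, but with p above 1/2 adding copies makes things worse. Practical quantum codes show the same qualitative threshold behavior, with a much lower threshold.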

In 2024, IBM announced a new error-correcting code that it reports is about 10 times more efficient than previous methods, and Microsoft and Quantinuum reported experimental results showing logical qubits created with fewer physical qubits. In February 2025, Photonic announced its SHYPS error correction codes, which use materially fewer physical qubits per logical qubit than traditional surface codes. These advances are crucial steps towards large-scale quantum computers capable of performing complex computations reliably.

The Rise of Quantum Computing Companies and Research

Quantum computing’s potential to revolutionize many fields has attracted growing interest and significant investment from both academic institutions and private companies. Many key companies are now actively developing quantum computing hardware across different qubit modalities.

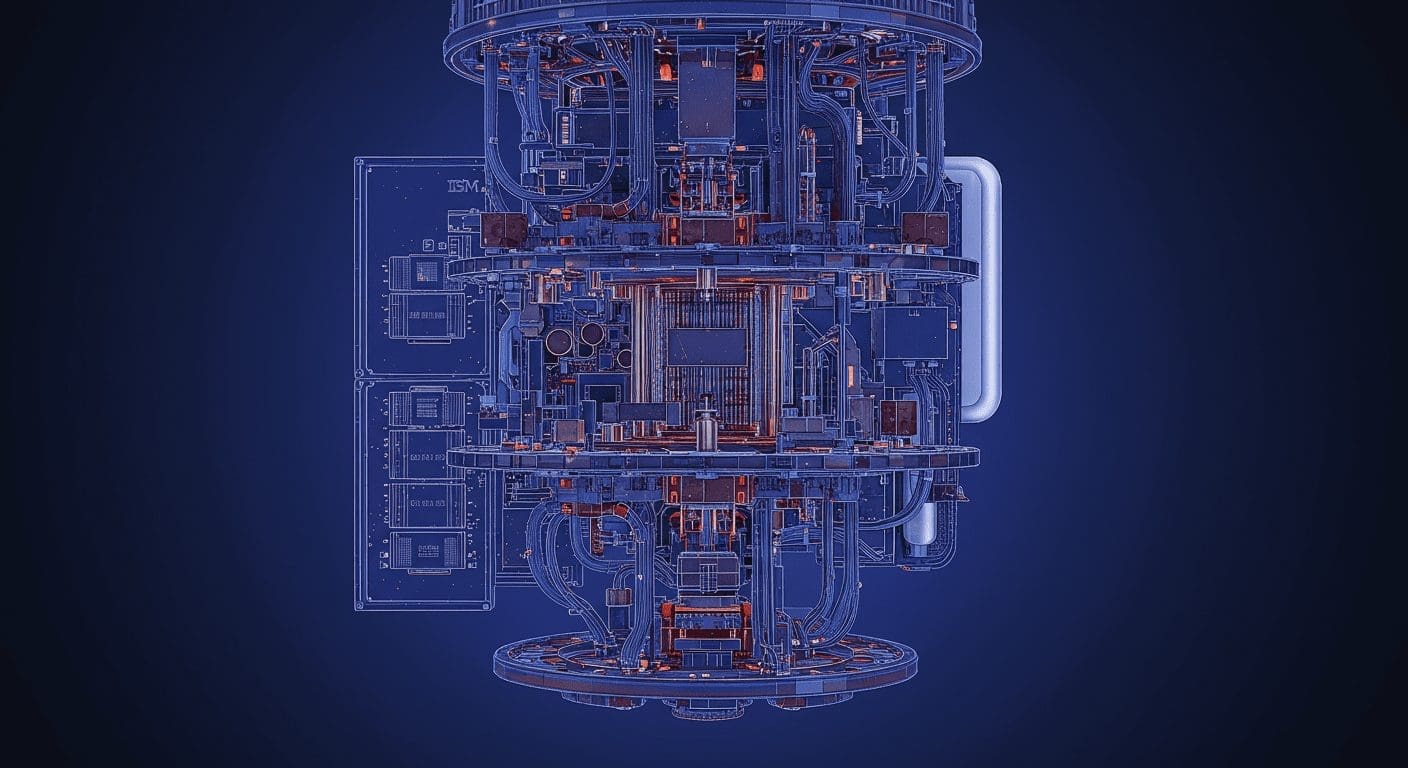

IBM, with its long history of technological innovation, has emerged as a major player, focusing on superconducting quantum processors and aiming to build increasingly powerful and scalable quantum systems. Google’s Quantum AI division is also at the forefront, pushing the boundaries of quantum computing capabilities; in 2019 it claimed to have achieved quantum supremacy.

Microsoft, through its Azure Quantum platform, offers a comprehensive ecosystem for quantum computing, encompassing software, hardware, and solutions.

Amazon Web Services (AWS) gives users access to various quantum computing resources. Its Amazon Braket service enables them to experiment with different quantum hardware.

Beyond these corporate giants, several other companies are making significant contributions. Rigetti Computing is focused on building quantum computing components, from chips to processors, based on superconducting qubits. IonQ specializes in trapped-ion quantum computers, leveraging the high fidelity and long coherence times of this technology.

IonQ’s Tempo system is expected in 2025 with a target of 64 algorithmic qubits. D-Wave Systems is known for its quantum annealing technology, which is particularly suited to optimization problems. Xanadu is pioneering photonic quantum computing, which uses the properties of light for computation.

Oxford Quantum Circuits (OQC) is another notable company with a self-contained operational quantum computer. Alice & Bob, a French firm, is pursuing error-corrected, fault-tolerant quantum computers. Riverlane focuses on quantum error correction solutions. Quantum Computing Inc. (QCI) is dedicated to advancing quantum algorithms and hardware, specializing in quantum annealing technology.

The active involvement of these companies signals a crucial shift from primarily academic research towards engineering practical quantum computing technologies and deploying them as services. Increased investment and competition are driving rapid advancements in both the hardware and software aspects of quantum computing.

Research institutions and universities around the world continue to play a vital role in advancing the field. Institutions such as the Massachusetts Institute of Technology (MIT), Harvard University, and Stanford University lead fundamental research efforts.

They explore new types of qubits, develop novel quantum algorithms, and train the next generation of quantum scientists and engineers. The University of Maryland, College Park, the University of Chicago, the University of California, Berkeley, and the University of Oxford are also leading centers for quantum research.

Delft University of Technology and the University of Tokyo are prominent as well. These institutions pursue a diverse range of research areas, from quantum materials and devices to quantum information theory and quantum-safe cryptography. Their ongoing research lays the groundwork for future breakthroughs and pushes the boundaries of what is possible with quantum computation.

Quantum Supremacy and the Path to Quantum Advantage

Quantum supremacy, sometimes also referred to as quantum advantage, describes the point at which a quantum computer solves a computational problem that no classical computer could solve within a reasonable amount of time. Many researchers distinguish the two terms: quantum supremacy can be demonstrated on any problem, regardless of its practical utility, whereas quantum advantage refers to solving a useful, real-world problem faster, cheaper, or more accurately than classical computers.

In 2019, Google claimed to have achieved quantum supremacy with its Sycamore processor, which reportedly performed a specific sampling task in about 200 seconds that Google estimated would take the most powerful classical supercomputers approximately 10,000 years.

This announcement sparked considerable debate, and IBM subsequently argued that a classical supercomputer could perform the same task in a significantly shorter time. Nevertheless, Google’s claim marked a significant milestone, suggesting that quantum computers were reaching the point where they could outperform even the most powerful classical machines on certain specific tasks.

In March 2025, D-Wave Quantum claimed to have achieved “quantum supremacy” by outperforming a classical supercomputer on complex magnetic materials simulation problems. Other claims of quantum advantage have emerged using different quantum computing approaches, such as photonic systems developed by Xanadu.

Much of the potential speedup offered by quantum computers is often attributed to quantum parallelism, which follows from superposition. Because each qubit can be in a superposition of 0 and 1, the state of n qubits is described by 2^n amplitudes, and a single operation acts on all of them at once. In this sense a quantum computer explores many possibilities concurrently, whereas a classical computer must evaluate them one at a time or across a limited number of parallel processors. A measurement, however, returns only one outcome, so useful algorithms such as Shor’s and Grover’s rely on interference to amplify the amplitudes of correct answers; this is why large speedups are expected only for certain types of problems.
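The exponential growth of the state can be quantified with a short Python sketch. It assumes a dense state vector stored as complex128 values (16 bytes per amplitude), a layout many classical simulators use, and samples a single outcome from a small uniform superposition; the function names are illustrative.

```python
import math
import random


def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a dense n-qubit state vector: 2^n complex amplitudes."""
    return bytes_per_amplitude * 2 ** n_qubits


def uniform_superposition(n_qubits: int) -> list[float]:
    """Amplitudes after a Hadamard gate on each qubit of |0...0>: all equal to 1/sqrt(2^n)."""
    size = 2 ** n_qubits
    return [1 / math.sqrt(size)] * size


def measure(amplitudes: list[float], rng: random.Random) -> int:
    """A measurement yields a single basis-state index, sampled with probability |amplitude|^2."""
    weights = [abs(a) ** 2 for a in amplitudes]
    return rng.choices(range(len(amplitudes)), weights=weights)[0]


for n in (10, 30, 50):
    print(f"{n} qubits: {2 ** n:,} amplitudes, {statevector_bytes(n):,} bytes")

state = uniform_superposition(3)
outcome = measure(state, random.Random(42))  # one 3-bit result out of 8 possibilities
print(f"measured |{outcome:03b}>")
```

Thirty qubits already require 16 GiB of amplitudes and fifty require 16 PiB, yet measuring the register still returns only one n-bit string.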

The demonstration of quantum supremacy is a significant scientific achievement, but the focus is now shifting towards quantum advantage for practical, real-world applications. Researchers and companies are actively exploring how quantum computers can help solve complex problems in fields such as drug discovery, materials science, financial modeling, and optimization, aiming to show a tangible benefit over classical approaches. A related concept, quantum utility, is also emerging: it refers to the point at which quantum computers can perform reliable computations at a scale beyond brute-force classical methods that compute exact solutions.

Challenges and Future Horizons in Quantum Computing

Decoherence and the resulting high error rates in quantum computations continue to be major obstacles. Scaling the number of qubits in quantum processors while maintaining their quality and coherence is another significant hurdle. Developing new, efficient quantum algorithms that bring the power of quantum computers to a wider range of practical problems also remains an active area of research.

Ongoing research focuses on developing more stable qubits with longer coherence times across various qubit modalities. Advances in quantum error correction are crucial for achieving fault-tolerant quantum computers that can reliably perform complex computations. Researchers are also exploring new architectures and fabrication techniques to scale up the number of qubits in quantum processors.

Key Milestones in Quantum Computing

The history of quantum computing is a rich tapestry of theoretical breakthroughs, experimental achievements, and growing commercial interest. Here is a timeline highlighting some of the key milestones in this ongoing journey:

- Early 20th Century: Max Planck, Albert Einstein, Niels Bohr, and others develop quantum mechanics, laying its foundational principles.

- 1935: Albert Einstein, Boris Podolsky, and Nathan Rosen introduce the EPR paradox, highlighting the concept of quantum entanglement.

- 1970: James Park shows that it is impossible to create an identical copy of an arbitrary unknown quantum state, an idea later formalized as the no-cloning theorem.

- 1973: Alexander Holevo publishes Holevo’s theorem, showing the limit of accessible classical information from qubits.

- 1980: Paul Benioff describes the first quantum mechanical model of a computer, the quantum Turing machine. Yuri Manin also briefly motivates the idea of quantum computing.

- 1981: Richard Feynman proposes the idea of quantum computers for simulating physics. He recognizes the limitations of classical computers for this task.

- 1982: Paul Benioff further develops his quantum mechanical Turing machine model. William Wootters and Wojciech H. Zurek, and independently Dennis Dieks, rediscover the no-cloning theorem. Richard Feynman lectures on the potential advantages of computing with quantum systems.

- 1985: David Deutsch conceptualizes the universal quantum computer, suggesting that quantum computers could offer a computational advantage over classical ones. Feynman publishes his paper “Quantum Mechanical Computers”.

- 1992: David Deutsch and Richard Jozsa propose a computational problem solvable efficiently by a quantum computer but not classically. Ethan Bernstein and Umesh Vazirani propose the Bernstein-Vazirani algorithm.

- 1994: Peter Shor develops his quantum algorithm for factoring large numbers. It works exponentially faster than classical algorithms and has significant implications for cryptography.

- 1995: Christopher Monroe and David J. Wineland experimentally demonstrate the first quantum logic gate, a controlled-NOT (CNOT) gate using trapped ions at NIST. Peter Shor proposes the first schemes for quantum error correction. Ignacio Cirac and Peter Zoller propose a scheme for a CNOT gate with trapped ions.

- 1996: Lov Grover develops his quantum search algorithm, providing a quadratic speedup for searching unsorted databases. Seth Lloyd proposes a quantum algorithm for simulating quantum-mechanical systems.

- 1998: Researchers at UCLA report a working quantum logic gate.

- 1999: The first experimental demonstration of superconducting qubits is achieved with the Cooper pair box. D-Wave Systems is founded.

- 2000: The first working 5-qubit NMR computer is demonstrated at the Technical University of Munich, Germany. The first execution of order finding (part of Shor’s algorithm) is demonstrated by IBM and Stanford University.

- 2001: IBM and Stanford University demonstrate the first execution of Shor’s algorithm, factoring the number 15 on a 7-qubit NMR quantum computer. The first experimental implementation of linear optical quantum computing is achieved.

- 2011: D-Wave Systems releases the D-Wave One, the first commercially available quantum annealer.

- 2012: John Preskill coins the term “quantum supremacy”.

- 2016: IBM makes quantum computing accessible to the public through the IBM Cloud, launching the IBM Quantum Experience.

- 2019: Google claims to have achieved quantum supremacy with its Sycamore processor.

- 2024: IBM announces a new, more efficient quantum error correction code. Microsoft and Quantinuum announce experimental results showing logical qubits created with fewer physical qubits.

  - IonQ retires its Harmony quantum system. Rigetti’s Ankaa-3 system achieves high gate fidelity.

  - LHC experiments at CERN observe quantum entanglement at the highest energy yet.

- 2025: The UN designates 2025 as the International Year of Quantum Science and Technology. Predictions suggest increased use of diamond technology in quantum systems and a move of quantum computers out of labs into real-world deployments.

  - IonQ targets #AQ 64 with its Tempo system.

  - Photonic announces SHYPS error correction codes.

  - Oxford Instruments, OQC, and Quantum Machines plan to reveal an open quantum computing platform.

  - SEEQC installs a cross-qubit scaling platform at the UK’s National Quantum Computing Centre. Singapore invests in a quantum and supercomputing integration initiative.

  - NVIDIA’s inaugural Quantum Day is scheduled. D-Wave claims “quantum supremacy” in quantum simulation.

  - IBM and the Basque Government announce plans to install Europe’s first IBM Quantum System Two.

- Future Projections: Companies such as IBM have published roadmaps for quantum systems with increasing numbers of logical qubits, aiming to reach utility-scale quantum computing in the coming years.

Conclusion

The history of quantum computing is a testament to human curiosity and the relentless pursuit of knowledge. The field has come a long way, from the groundbreaking theories of quantum mechanics in the early 20th century to today’s ambitious efforts to build powerful and reliable quantum computers, and the journey has been marked by profound theoretical insights, ingenious algorithmic breakthroughs, and remarkable experimental achievements.

Significant challenges remain, but the pace of innovation in quantum computing is remarkable and global interest in the technology continues to grow. This transformative technology has the potential to reshape our world and to unlock solutions to some of humanity’s most complex problems. The quantum revolution is well underway, and its future promises to be nothing short of extraordinary.