Convolutional neural networks (CNNs) continue to drive breakthroughs in areas such as image recognition, but their growing complexity makes them difficult to deploy on resource-constrained devices. Ahmed Sadaqa and Di Liu, from the Norwegian University of Science and Technology, address this challenge with a model compression approach that shrinks networks without sacrificing performance. Their work introduces a hybrid pruning framework that removes both channels and layers, scaling down network complexity in a way inspired by the scaling principles of the EfficientNet architecture. The authors show that the method substantially reduces model complexity and speeds up inference on embedded AI devices such as the JETSON TX2, while maintaining high accuracy.

The study systematically compares pruning criteria for identifying and removing less important filters, with the goal of compressing the network without significant accuracy loss. The authors evaluated performance in terms of accuracy, number of parameters, computational complexity, and inference speed. They also explore two orderings: one applies layer pruning before channel pruning, and the other reverses the order.
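
As a minimal sketch of what a filter-level pruning criterion does, the Python below ranks the filters of one convolutional layer by their L1 norm, a widely used magnitude-based criterion, and returns the lowest-ranked indices for removal. This is an illustration under stated assumptions, not the authors' code: the nested-list weight layout, the function names, and the `prune_ratio` parameter are hypothetical, and the paper may rank filters differently.

```python
"""Rank convolutional filters by L1 norm (a common magnitude-based criterion).

Illustrative sketch only: weights are nested lists shaped
[out_channels][in_channels][k][k]; names are hypothetical, not from the paper.
"""


def filter_l1_norms(weights):
    """Return the L1 norm (sum of absolute weights) of each output filter."""
    norms = []
    for filt in weights:  # one filter per output channel
        total = 0.0
        for in_ch in filt:
            for row in in_ch:
                total += sum(abs(v) for v in row)
        norms.append(total)
    return norms


def filters_to_prune(weights, prune_ratio):
    """Indices of the lowest-norm filters; removes int(prune_ratio * n) of them."""
    norms = filter_l1_norms(weights)
    n_prune = int(prune_ratio * len(norms))
    order = sorted(range(len(norms)), key=lambda i: norms[i])  # smallest norm first
    return sorted(order[:n_prune])


if __name__ == "__main__":
    # Toy layer: 4 filters, 1 input channel, 2x2 kernels.
    w = [
        [[[0.5, -0.5], [0.5, 0.5]]],    # L1 = 2.0
        [[[0.01, 0.0], [0.0, -0.02]]],  # L1 = 0.03
        [[[1.0, 1.0], [-1.0, 1.0]]],    # L1 = 4.0
        [[[0.1, 0.1], [0.1, -0.1]]],    # L1 = 0.4
    ]
    print(filters_to_prune(w, 0.5))  # -> [1, 3]
```

Removing a filter also removes the matching input channel of the next layer, which is why filter pruning reduces both parameters and computation.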

The results show a trade-off between compression ratio and accuracy: higher compression generally leads to lower accuracy. The batch normalization (BN) scale criterion appears to strike a good balance between compression and accuracy, particularly when applied after layer pruning, and the order in which layer and channel pruning are applied significantly affects performance. The small accuracy drops observed suggest that CIFAR-100, the dataset used, is comparatively easy to compress, and that more challenging datasets might reveal larger differences between methods. Future work should extend the evaluation to ImageNet and more complex scenes, tune hyperparameters thoroughly for each pruning method, and apply statistical tests to determine whether the observed differences are significant.
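
The BN scale criterion can be sketched in the same spirit. The version below follows the network-slimming idea (Liu et al., 2017, which also adds an L1 penalty on the BN scale factors during training): each channel's importance is the magnitude of its BN scaling factor |γ|, and channels below one global threshold are pruned. The dictionary layout, the global rather than per-layer threshold, and the `min_keep` safeguard are assumptions for illustration, not details taken from the paper.

```python
def bn_scale_masks(bn_gammas, prune_ratio, min_keep=1):
    """Channel keep-masks from BN scale factors using one global threshold.

    bn_gammas: dict layer_name -> list of BN gamma values (one per channel).
    Roughly prune_ratio of all channels are marked False (fewer if there are
    ties at the threshold or a layer would otherwise be emptied).
    """
    all_abs = sorted(abs(g) for gammas in bn_gammas.values() for g in gammas)
    n_prune = int(prune_ratio * len(all_abs))
    # Channels with |gamma| strictly below the threshold are pruned.
    threshold = all_abs[n_prune] if n_prune < len(all_abs) else float("inf")
    masks = {}
    for name, gammas in bn_gammas.items():
        mask = [abs(g) >= threshold for g in gammas]
        if sum(mask) < min_keep:
            # Never empty a layer: keep its min_keep largest-|gamma| channels instead.
            top = sorted(range(len(gammas)), key=lambda i: abs(gammas[i]), reverse=True)
            keep = set(top[:min_keep])
            mask = [i in keep for i in range(len(gammas))]
        masks[name] = mask
    return masks


if __name__ == "__main__":
    gammas = {"conv1": [0.9, 0.05, 0.4, 0.01], "conv2": [0.02, 0.03]}
    print(bn_scale_masks(gammas, prune_ratio=0.5))
    # -> {'conv1': [True, True, True, False], 'conv2': [False, True]}
```

A global threshold lets the criterion prune some layers much more heavily than others, which is one reason BN-based pruning can reach high compression ratios; the `min_keep` rule prevents it from cutting a layer entirely.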

Comparing the results with other state-of-the-art compression techniques, such as knowledge distillation and quantization, would also strengthen the findings. Adaptive pruning that adjusts the pruning rate dynamically according to filter importance, and combinations of different pruning criteria, are further promising research directions. Overall, the research provides a valuable comparison of pruning criteria for CNN compression; despite some limitations, it offers useful insight into the trade-offs between accuracy, compression ratio, and latency.

The work combines established channel pruning techniques with a newly proposed layer pruning algorithm, systematically reducing both the width and the depth of the network. Experiments show that this approach effectively scales down network size by removing redundant channels and layers, following the scaling principles of the EfficientNet architecture but in reverse. The methodology centers on a two-phase process: channel pruning first reduces network width, and the new layer pruning algorithm then reduces network depth.
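
A small worked example shows why reducing width and depth together compresses a network so effectively. The sketch below counts weights and multiply-accumulate operations (MACs) for a plain stack of stride-1, same-padded 3x3 convolutions, ignoring biases and BN parameters; the layer widths and the 32x32 input are hypothetical values, not the paper's configuration.

```python
def conv_params(c_in, c_out, k=3):
    """Weights in a k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def stack_cost(in_channels, widths, spatial, k=3):
    """Return (params, MACs) for a plain stack of stride-1, same-padded convs.

    widths lists the output channels of each layer; spatial is the (square)
    feature-map side length, which stays constant because the stride is 1.
    """
    params = macs = 0
    c_in = in_channels
    for c_out in widths:
        p = conv_params(c_in, c_out, k)
        params += p
        macs += p * spatial * spatial  # every weight is applied once per output pixel
        c_in = c_out
    return params, macs


if __name__ == "__main__":
    base = stack_cost(3, [64, 64, 128, 128], 32)
    narrower = stack_cost(3, [32, 32, 64, 64], 32)  # channel pruning: 50% width
    shallower = stack_cost(3, [32, 64, 64], 32)     # then drop the 32->32 layer
    print(base[0], narrower[0], shallower[0])       # -> 259776 65376 56160
    print(f"compression: {base[0] / shallower[0]:.2f}x")  # -> compression: 4.63x
```

Halving the width cuts an interior layer's weights by about 4x because both its input and output channels shrink, while removing a layer eliminates its cost outright; the two reductions compound.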

Crucially, both phases use the same criteria to identify components for removal, which keeps the approach to complexity reduction consistent. The layer pruning algorithm evaluates entire blocks of layers and removes them in a single step, based on an importance score generated for each block. This one-shot approach distinguishes it from iterative layer pruning methods and contributes to the framework's efficiency. The results show that the framework reduces network complexity while keeping performance loss small. The authors empirically compared various pruning criteria and selected the most effective one for identifying unimportant network components. Applying this hybrid approach significantly reduced both the width and the depth of the CNNs, paving the way for more efficient deployment on edge devices such as the JETSON TX2 and, more broadly, in applications where computational resources are limited.
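
The one-shot idea can be illustrated with a short sketch. Here each block's score is assumed to be the mean of the per-channel criterion values of its layers (so the same criterion drives both phases), and the n lowest-scoring removable blocks are dropped together in a single pass, with no re-scoring between removals. The mean aggregation, the block names, and the option to protect blocks (for example, the first block of each stage, which typically changes resolution or width in ResNet-style networks) are assumptions, not the paper's specification.

```python
def block_importance(channel_scores_per_layer):
    """Mean of all per-channel criterion scores (e.g. |gamma|) inside one block."""
    values = [s for layer in channel_scores_per_layer for s in layer]
    return sum(values) / len(values)


def one_shot_block_prune(blocks, n_remove, protected=frozenset()):
    """Score every block once, then remove the n_remove lowest-scoring ones together.

    blocks: dict block_name -> list of per-layer channel-score lists (network order).
    Returns (kept, removed), both in original network order.
    """
    scores = {name: block_importance(layers) for name, layers in blocks.items()}
    candidates = [name for name in blocks if name not in protected]
    ranked = sorted(candidates, key=scores.get)  # least important first
    to_remove = set(ranked[:n_remove])
    kept = [name for name in blocks if name not in to_remove]
    removed = [name for name in blocks if name in to_remove]
    return kept, removed


if __name__ == "__main__":
    blocks = {
        "stage1.block0": [[0.8, 0.6], [0.7, 0.9]],
        "stage1.block1": [[0.1, 0.05], [0.2, 0.05]],
        "stage2.block0": [[0.5, 0.4], [0.6, 0.3]],
        "stage2.block1": [[0.02, 0.1], [0.08, 0.04]],
    }
    kept, removed = one_shot_block_prune(
        blocks, n_remove=1, protected={"stage1.block0", "stage2.block0"}
    )
    print(kept)     # -> ['stage1.block0', 'stage1.block1', 'stage2.block0']
    print(removed)  # -> ['stage2.block1']
```

Because scoring happens once, the cost is a single pass over the network rather than one pass (and often one fine-tuning round) per removed block, as in iterative layer pruning.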

Hybrid Pruning Reduces Network Width and Depth

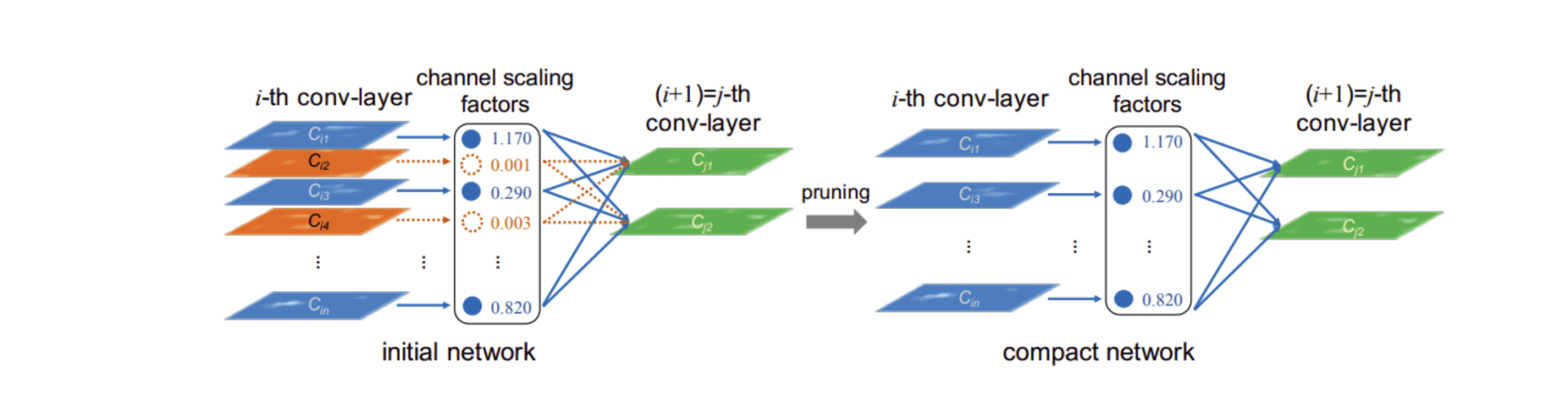

This study proposes a hybrid pruning framework for compressing convolutional neural networks, addressing the challenge of deploying complex models on resource-constrained devices. The framework systematically reduces both the width and the depth of networks, mirroring the scaling principles of EfficientNet in reverse: instead of expanding the network, it prunes redundant components. The methodology has two phases. Established channel pruning algorithms first reduce network width, and a novel layer pruning algorithm then reduces network depth. At the core of the layer pruning algorithm is its ability to assess the importance of entire blocks of layers in a single step.
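
EfficientNet's compound scaling multiplies depth by d = α^φ and width by w = β^φ (alongside a resolution multiplier γ^φ), with α = 1.2, β = 1.1, and γ = 1.15. One way to picture the reverse direction is a negative φ applied to depth and width while input resolution stays fixed, as it does under pruning. The sketch below does exactly that. It illustrates only the scaling rule the framework draws inspiration from, not how the authors decide what to prune, and the ResNet-56-style configuration (3 stages of 9 blocks, widths 16/32/64) is a hypothetical example.

```python
import math

# EfficientNet compound-scaling coefficients for depth and width (Tan & Le, 2019).
ALPHA, BETA = 1.2, 1.1


def scale_down(blocks_per_stage, widths, phi):
    """Compound-scale depth and width by phi (phi < 0 shrinks); resolution unchanged.

    Returns (new_blocks, new_widths, approx_flop_factor).
    """
    d = ALPHA ** phi
    w = BETA ** phi
    new_blocks = [max(1, math.ceil(n * d)) for n in blocks_per_stage]
    new_widths = [max(1, round(c * w)) for c in widths]
    # Conv FLOPs grow roughly linearly with depth and quadratically with width.
    return new_blocks, new_widths, d * w ** 2


if __name__ == "__main__":
    blocks, widths, flops = scale_down([9, 9, 9], [16, 32, 64], phi=-2)
    print(blocks, widths, f"{flops:.2f}")  # -> [7, 7, 7] [13, 26, 53] 0.47
```

Shrinking width pays off quadratically, which is why a modest width reduction combined with removing a few blocks can roughly halve the computation here.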

The algorithm generates an importance score for each layer block, enabling one-shot removal of the least critical blocks. This contrasts with iterative pruning methods and offers a more efficient route to depth reduction. The team evaluated the impact of each pruned block on overall network performance to keep accuracy loss small, and measured the network's performance before any pruning to establish a baseline. The same pruning criteria were used in both the channel and layer pruning phases, targeting components with minimal impact on the network's output.
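
One simple way to measure the impact of each block against the unpruned baseline is a skip-one ablation: evaluate the full network once, then re-evaluate with each block bypassed through its identity shortcut and record the accuracy drop. This is a swapped-in illustration, not the authors' scoring rule; `evaluate` is a hypothetical callback (in practice it would run validation with the given blocks skipped), and the toy evaluator in the usage code simply subtracts hypothetical per-block penalties.

```python
from typing import Callable, Dict, FrozenSet, Sequence


def ablation_block_scores(
    blocks: Sequence[str],
    evaluate: Callable[[FrozenSet[str]], float],
) -> Dict[str, float]:
    """Importance of each block = baseline accuracy - accuracy with that block skipped.

    evaluate(skipped) is a hypothetical callback returning validation accuracy
    when the blocks in `skipped` are bypassed.
    """
    baseline = evaluate(frozenset())  # unpruned reference, measured before any pruning
    return {b: baseline - evaluate(frozenset({b})) for b in blocks}


if __name__ == "__main__":
    # Toy stand-in for a real validation run: hypothetical accuracy penalties.
    penalty = {"block0": 0.10, "block1": 0.002, "block2": 0.03}

    def toy_evaluate(skipped: FrozenSet[str]) -> float:
        return 0.72 - sum(penalty[b] for b in skipped)

    scores = ablation_block_scores(list(penalty), toy_evaluate)
    least_important = min(scores, key=scores.get)
    print(least_important)  # -> block1
```

The trade-off is cost: this needs one validation pass per block, whereas a criterion-based score such as BN scale or Taylor importance can be computed from a single pass.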

This involved assessing how sensitive the loss function is to changes in the parameters, drawing on techniques such as Taylor expansion. The pruned models were then deployed on a JETSON TX2 embedded AI device to quantify latency reductions and demonstrate the practical benefits of this aggressive compression strategy. By adapting principles from network scaling, the team scaled networks down both depthwise and widthwise, significantly reducing the number of parameters and computational operations. Experiments show that the hybrid pruning framework maintains predictive accuracy while substantially decreasing model complexity and latency. The study evaluated the framework on three key metrics (accuracy, complexity, and latency) across various pruning criteria.
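
The Taylor-expansion idea can be made concrete with the first-order criterion popularized by Molchanov et al. (2017): zeroing a channel's activation a changes the loss by approximately -(∂L/∂a)·a, so a channel's importance is the magnitude of the activation-gradient product averaged over positions. The sketch below assumes activations and gradients have already been collected per channel as flat lists (for example, from a forward and backward pass over a few batches); whether the paper uses exactly this form, or any per-layer normalization, is an open assumption.

```python
def taylor_channel_scores(activations, gradients):
    """First-order Taylor importance per channel.

    activations[c] and gradients[c] hold one channel's feature-map values and the
    matching dL/da values, flattened over batch and spatial positions.
    Score = |mean(a * dL/da)|, the estimated loss change if the channel is zeroed.
    """
    scores = []
    for acts, grads in zip(activations, gradients):
        total = sum(a * g for a, g in zip(acts, grads))
        scores.append(abs(total / len(acts)))
    return scores


if __name__ == "__main__":
    acts = [[1.0, 2.0, 0.0, 1.0], [0.5, 0.5, 0.5, 0.5]]
    grads = [[0.1, -0.05, 0.3, 0.2], [0.01, 0.0, -0.01, 0.02]]
    print(taylor_channel_scores(acts, grads))  # channel 1 scores far lower than channel 0
```

Unlike pure weight-magnitude criteria, this score depends on data and on the loss, so a channel with large weights can still rank low if its output barely affects the loss.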

The results indicate that substantial compression is achievable without significant performance degradation, which makes the approach particularly valuable for resource-constrained devices such as smartphones, IoT devices, and embedded systems. The work addresses a critical challenge for real-world AI applications, where limited computational power and memory often hinder effective deployment, and offers a way to streamline models for efficient operation on limited hardware. The authors note that further research could apply the framework to a wider range of network architectures and datasets.

👉 More information

🗞 Compressing CNN models for resource-constrained systems by channel and layer pruning

🧠 arXiv: https://arxiv.org/abs/2509.08714