The energy appetite of artificial intelligence (AI) is a growing concern, driven by the massive computing resources required to train complex neural networks. Researchers at the Technical University of Munich (TUM) have now unveiled a method that promises to drastically reduce this energy burden: it trains networks 100 times faster while achieving accuracy comparable to that of existing techniques.

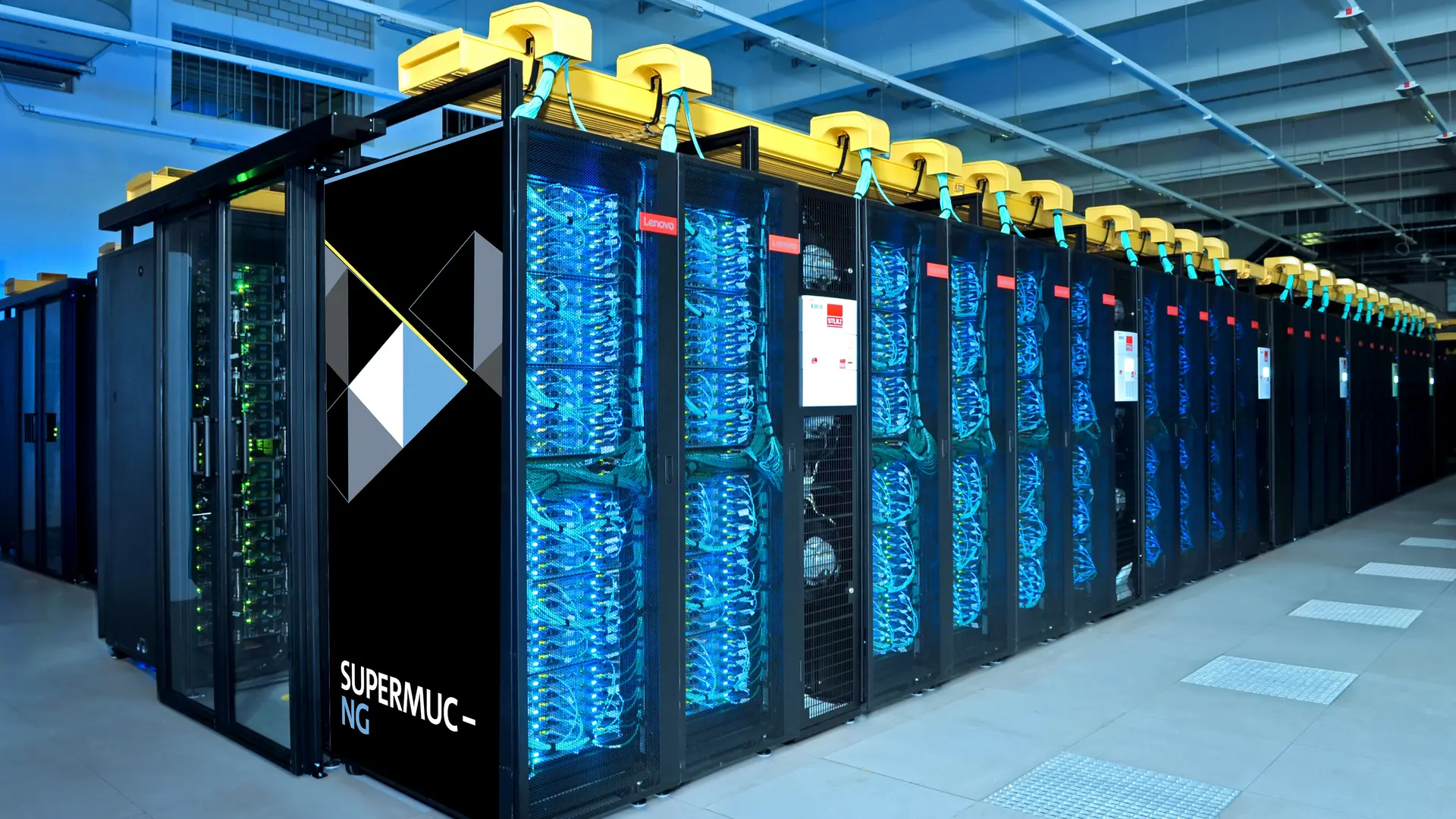

The escalating use of AI applications, such as large language models (LLMs), has led to a surge in demand for data center capacity, resulting in significant energy consumption. In Germany alone, data centers consumed approximately 16 billion kWh in 2020, a figure projected to rise to 22 billion kWh by 2025. As AI applications become more sophisticated, the energy demands for training neural networks are expected to skyrocket.

To address this challenge, Professor Felix Dietrich and his team at TUM have developed a novel approach that departs from traditional iterative training methods. Instead of incrementally adjusting parameters, their method directly computes them based on probabilities. This probabilistic approach strategically utilizes values at critical points in the training data, where rapid changes occur.
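The article does not spell out the construction, so the following is only a minimal sketch of one way such a sampling scheme could look, not the team's implementation. It assumes one-dimensional inputs, a tanh hidden layer, and a toy target `tanh(10 * x)`; the function names, the ridge term, and the constants are all hypothetical. Hidden-layer parameters are drawn from pairs of training points, with pairs favored where the target changes quickly, and only the output layer is fitted, in a single least-squares solve rather than an iterative loop.

```python
import math
import random

def sample_hidden_layer(xs, ys, n_hidden, rng):
    """Sample (weight, bias) pairs from pairs of training points (1-D inputs)."""
    s2 = math.log(3.0) / 2.0  # chosen so tanh is -0.5 at the first point, +0.5 at the second
    s1 = 2.0 * s2
    # Candidate pairs of distinct points (assumption: a random subset is enough).
    pairs = [(rng.randrange(len(xs)), rng.randrange(len(xs))) for _ in range(20 * n_hidden)]
    pairs = [(i, j) for i, j in pairs if xs[i] != xs[j]]
    # Favor pairs between which the target changes rapidly.
    steepness = [abs(ys[j] - ys[i]) / abs(xs[j] - xs[i]) for i, j in pairs]
    chosen = rng.choices(pairs, weights=steepness, k=n_hidden)
    layer = []
    for i, j in chosen:
        w = s1 / (xs[j] - xs[i])
        b = -w * xs[i] - s2
        layer.append((w, b))
    return layer

def features(layer, x):
    """Hidden activations for input x, plus a constant column for the output bias."""
    return [math.tanh(w * x + b) for w, b in layer] + [1.0]

def solve_least_squares(rows, targets, ridge=1e-6):
    """Solve the ridge-regularized normal equations by Gaussian elimination."""
    n = len(rows[0])
    m = [[sum(r[p] * r[q] for r in rows) + (ridge if p == q else 0.0) for q in range(n)]
         + [sum(r[p] * t for r, t in zip(rows, targets))] for p in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    coeffs = [0.0] * n
    for r in reversed(range(n)):
        coeffs[r] = (m[r][n] - sum(m[r][k] * coeffs[k] for k in range(r + 1, n))) / m[r][r]
    return coeffs

rng = random.Random(0)
xs = [-1.0 + 2.0 * k / 199 for k in range(200)]
ys = [math.tanh(10.0 * x) for x in xs]  # toy target with a rapid change near x = 0

layer = sample_hidden_layer(xs, ys, n_hidden=20, rng=rng)
rows = [features(layer, x) for x in xs]
coeffs = solve_least_squares(rows, ys)  # one direct solve, no training loop

preds = [sum(c * f for c, f in zip(coeffs, row)) for row in rows]
mse = sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(xs)
mean_y = sum(ys) / len(ys)
baseline_mse = sum((y - mean_y) ** 2 for y in ys) / len(ys)
print(f"sampled-network MSE: {mse:.2e}  (constant predictor: {baseline_mse:.2e})")
```

In this sketch, no parameter is ever revisited after it has been drawn: the hidden layer is fixed by sampling and the output weights come from a single linear solve.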

“Our method makes it possible to determine the required parameters with minimal computing power,” explains Professor Dietrich. “This can make the training of neural networks much faster and, as a result, more energy efficient.”

Neural networks, which are inspired by the human brain, consist of interconnected nodes in which input signals are weighted and summed. Conventional training methods start from random parameter values and then adjust them iteratively, a process that is both computationally intensive and energy-consuming.
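For contrast, the conventional iterative procedure described above can be illustrated with a single node. This is a toy sketch under stated assumptions: the data, the target rule `y = 2*x1 - 3*x2 + 0.5`, the learning rate, and the step count are all made up for illustration and do not come from the researchers' work.

```python
import random

def neuron(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias (no activation, to keep it simple)."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

def mean_squared_error(weights, bias, data):
    return sum((neuron(weights, bias, x) - y) ** 2 for x, y in data) / len(data)

rng = random.Random(0)
# Toy data from a hypothetical rule: y = 2*x1 - 3*x2 + 0.5
points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(50)]
data = [((x1, x2), 2.0 * x1 - 3.0 * x2 + 0.5) for x1, x2 in points]

# Step 1: randomize the initial parameter values.
weights = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
bias = rng.uniform(-1, 1)
initial_error = mean_squared_error(weights, bias, data)

# Step 2: adjust the parameters in many small steps (plain gradient descent).
learning_rate = 0.1
for step in range(500):
    grad_w = [0.0, 0.0]
    grad_b = 0.0
    for x, y in data:
        err = neuron(weights, bias, x) - y
        grad_w = [g + 2 * err * xi / len(data) for g, xi in zip(grad_w, x)]
        grad_b += 2 * err / len(data)
    weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
    bias -= learning_rate * grad_b

final_error = mean_squared_error(weights, bias, data)
print(f"error before: {initial_error:.3f}, after 500 steps: {final_error:.2e}")
```

Even this three-parameter example passes over the full data set 500 times; in a large network the same loop runs over vastly more parameters and far more data, which is where the computing cost comes from.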

The team’s approach eliminates this iterative process, which gives it a significant speed advantage. “In addition, we have seen that the accuracy of the new method is comparable to that of iteratively trained networks,” Dietrich says.

The researchers are currently applying the method to learn energy-conserving dynamical systems from data. Such systems, which evolve over time according to specific rules, are common in areas such as climate modeling and financial markets.

This breakthrough, detailed in publications such as “Training Hamiltonian Neural Networks without Backpropagation” and “Sampling Weights of Deep Neural Networks,” holds the potential to revolutionize AI training, paving the way for more sustainable and efficient AI development. By significantly reducing energy consumption, this new method offers a crucial step towards mitigating the environmental impact of the burgeoning AI industry.
