Researchers at the University of Edinburgh’s Quantum Software Lab, led by Associate Professor Dr Petros Wallden, have made significant progress on quantum machine learning (QML) algorithms. Using NVIDIA’s CUDA-Q simulation toolkits and GPU hardware, they overcame scalability issues and simulated complex QML clustering methods on problem sizes of up to 25 qubits. The result is an important step in the development of quantum-accelerated supercomputing applications.

The team used a variational quantum algorithm (VQA) framework to express three clustering techniques in a form that can run on a quantum processing unit (QPU). For each method they derived a weighted qubit Hamiltonian that encodes its cost function, so that minimizing the cost amounts to finding a low-energy state of that Hamiltonian. CUDA-Q’s GPU acceleration and out-of-the-box primitives made comprehensive simulations feasible, allowing the team to benchmark their algorithms against classical methods.
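To make the idea of a cost function encoded as a weighted qubit Hamiltonian more concrete, here is a minimal sketch for splitting a handful of points into two clusters. It assumes a MaxCut-style Ising form, H = Σ w_ij Z_i Z_j, with pairwise Euclidean distances as the weights; the article does not give the authors' actual Hamiltonians, so the weights, the function names, and the sample points are all illustrative assumptions. The ground state is found by brute force rather than by a variational circuit, which only works for a few qubits.

```python
from itertools import product
from math import dist


def pair_weights(points):
    """Weight each pair of points by Euclidean distance (an assumed choice)."""
    n = len(points)
    return {
        (i, j): dist(points[i], points[j])
        for i in range(n)
        for j in range(i + 1, n)
    }


def ising_energy(bits, weights):
    """Energy of H = sum_{i<j} w_ij Z_i Z_j on a computational basis state.

    Z_i has eigenvalue +1 for bit 0 and -1 for bit 1, so a pair in the same
    cluster contributes +w_ij and a pair split across clusters contributes
    -w_ij. Low energy therefore means distant points are separated.
    """
    z = [1 - 2 * b for b in bits]
    return sum(w * z[i] * z[j] for (i, j), w in weights.items())


def brute_force_ground_state(points):
    """Exhaustively search all 2**n bitstrings for the lowest energy."""
    weights = pair_weights(points)
    return min(
        product((0, 1), repeat=len(points)),
        key=lambda bits: ising_energy(bits, weights),
    )


# Hypothetical data: two tight pairs of points that are far from each other.
points = [(0.0, 0.0), (0.1, 0.2), (5.0, 5.0), (5.2, 4.9)]
labels = brute_force_ground_state(points)
print(labels)
```

Each bit is one qubit and one data point, and the bit value is the cluster label. In a VQA the exhaustive search would be replaced by a parameterized circuit whose expected energy is minimized by a classical optimizer.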

Key individuals involved in this work include Dr Petros Wallden and Boniface Yogendran, the lead developer on the research. The organizations involved are the University of Edinburgh, where the research was conducted, and NVIDIA, which provided the CUDA-Q simulation toolkits and GPU hardware.

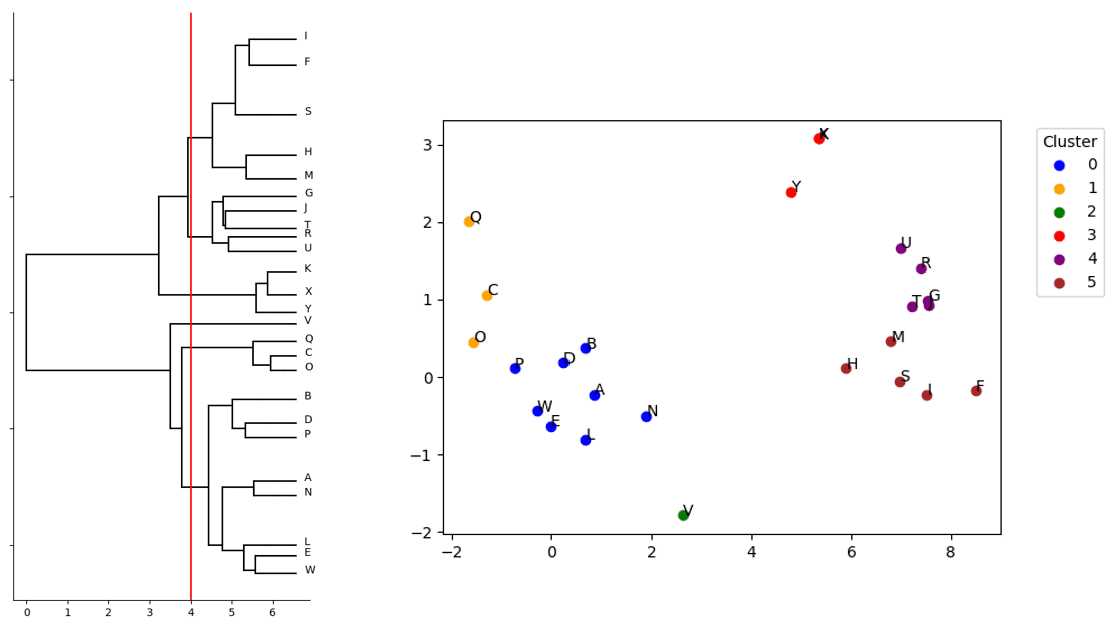

The three clustering techniques explored (k-means, Gaussian mixture model (GMM), and divisive clustering) are all well-established methods in classical machine learning. By expressing them within a VQA framework, the authors have made it possible to implement them on a QPU. This matters because it opens the way to using quantum hardware, rather than only classical machines, for the clustering process.

CUDA-Q, a simulation toolkit developed by NVIDIA, has been instrumental in overcoming scalability issues. By providing easy access to GPU hardware and out-of-the-box primitives, it enabled the authors to simulate large problem sizes (up to 25 qubits) that would have been impractical on CPU hardware alone. The ability to pool memory across multiple GPUs also allowed the simulations to scale further.
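A rough back-of-the-envelope calculation shows why pooled GPU memory matters for scaling. The sketch below assumes a full state-vector simulation that stores one complex amplitude per basis state, which is a common approach but not something the article specifies. The helper names and the 80 GiB device size are hypothetical, and the estimate ignores simulator workspace overhead.

```python
def statevector_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory for a full state vector: 2**n complex amplitudes.

    16 bytes per amplitude corresponds to double precision (complex128);
    pass 8 for single precision (complex64).
    """
    return (2 ** num_qubits) * bytes_per_amplitude


def max_qubits(memory_bytes, bytes_per_amplitude=16):
    """Largest qubit count whose state vector fits in the given memory."""
    n = 0
    while statevector_bytes(n + 1, bytes_per_amplitude) <= memory_bytes:
        n += 1
    return n


GIB = 2 ** 30
DEVICE_MEMORY = 80 * GIB  # hypothetical single-device memory

for n in (25, 30, 35):
    print(n, "qubits:", statevector_bytes(n) / GIB, "GiB")

print("one device:", max_qubits(DEVICE_MEMORY), "qubits")
print("four pooled devices:", max_qubits(4 * DEVICE_MEMORY), "qubits")
```

Under these assumptions the state vector takes 0.5 GiB at 25 qubits and doubles with every added qubit, so each extra qubit eventually requires doubling the available memory. A variational workload also evaluates the circuit many times per optimization run, which this memory estimate does not capture.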

The results of the experiments are promising, with the QML algorithms performing well in certain scenarios. For instance, the GMM (K=2) and divisive clustering approaches performed comparably to, or even outperformed, classical heuristic methods such as Lloyd’s algorithm.
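For readers unfamiliar with the classical baseline, Lloyd’s algorithm is the standard k-means heuristic that alternates between assigning points to their nearest centroid and recomputing each centroid as the mean of its members. The following is a minimal pure-Python sketch of that procedure, not the benchmark code used in the research; the function name, the random initialization, and the sample points are illustrative assumptions.

```python
import random
from math import dist


def lloyd_kmeans(points, k, iterations=100, seed=0):
    """Lloyd's heuristic for k-means on a list of coordinate tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize from k distinct data points
    labels = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                mean = tuple(sum(axis) / len(members) for axis in zip(*members))
                new_centroids.append(mean)
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: centroids did not move
        centroids = new_centroids
    return labels, centroids


# Hypothetical data: two tight pairs of points that are far from each other.
points = [(0.0, 0.0), (0.1, 0.2), (5.0, 5.0), (5.2, 4.9)]
labels, centroids = lloyd_kmeans(points, k=2)
print(labels, centroids)
```

Lloyd’s algorithm converges to a local optimum that depends on the initial centroids, which is why it is described as a heuristic and why it makes a natural point of comparison for other approximate methods.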

What I find particularly exciting is the potential for further development and scaling of these QML clustering techniques using CUDA-Q. The fact that the code can be easily ported to physical QPUs makes it an attractive solution for real-world applications.

In conclusion, this work represents a significant step forward in the development of quantum-accelerated supercomputing applications. As we continue to push the boundaries of what is possible with QML, I have no doubt that we will see even more innovative solutions emerge in the years to come.

For those interested in exploring CUDA-Q further, I recommend checking out the Divisive clustering Jupyter notebook and the CUDA-Q Tutorials page. These resources offer a good starting point for developers who are new to QML clustering techniques.
