The development of reliable quantum computers is contingent upon overcoming decoherence and noise, which compromise qubit integrity. Central to addressing these issues are error correction techniques such as surface codes, which detect and correct errors without directly measuring the encoded quantum state. Collaboration between academia and industry is pivotal in advancing this field, combining theoretical insights with engineering capabilities.

Leading companies, including IBM, Google, and Rigetti, pursue this goal with different designs. All three build on superconducting qubits, which offer fast gates and compatibility with chip fabrication, while trapped-ion platforms developed by other groups offer lower error rates and higher connectivity. These approaches highlight different quantum platforms’ unique advantages and challenges in achieving scalability. Despite persistent challenges in maintaining coherence times and developing efficient decoding algorithms, progress is evident through academic-industrial partnerships. For instance, IBM’s 127-qubit processor demonstrates improved error rates, while Google’s quantum supremacy experiment underscores the potential of large-scale quantum computation.

Integrating classical error correction techniques with quantum methods offers promise for more resilient systems. Interdisciplinary collaborations, supported by government funding from agencies like DARPA, are expected to standardize protocols and enhance interoperability across platforms. This collaborative approach fosters transformative advancements, paving the way for future breakthroughs in quantum computing.

Superconducting Qubits And Their Limitations

Superconducting qubits are a leading approach in quantum computing. They utilize superconducting circuits to create artificial atoms that interact with microwave photons. These systems offer scalability due to their compatibility with existing semiconductor manufacturing techniques, making them a focal point for companies like IBM and Google. Their design allows for integrating large numbers of qubits on a single chip, crucial for achieving quantum supremacy and practical applications.

Despite their promise, superconducting qubits face significant limitations. Decoherence remains a major challenge, as thermal fluctuations can disrupt the fragile quantum states. Additionally, crosstalk between neighbouring qubits leads to errors in computations, complicating the implementation of large-scale algorithms. These issues are exacerbated by the high error rates inherent in current systems, which necessitate advanced error correction protocols.

Error-corrected quantum computing demands many physical qubits to encode a single logical qubit with sufficient fidelity. For instance, surface codes are commonly estimated to need on the order of 1,000 physical qubits per logical qubit to achieve high reliability, yet existing superconducting quantum processors typically contain only tens to a few hundred qubits. This gap highlights the scalability challenges that must be addressed before practical error-corrected systems can be realized.
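To make the overhead concrete, the sketch below counts the physical qubits in a single surface-code patch. It assumes the rotated surface-code layout, in which a distance-d patch uses d*d data qubits and d*d - 1 measurement qubits. The function name `surface_code_qubits` and the chosen distances are illustrative and do not describe any specific processor.

```python
def surface_code_qubits(distance: int) -> int:
    """Physical qubits in one rotated surface-code patch (assumed layout).

    d*d data qubits plus d*d - 1 measurement qubits.
    """
    if distance < 1 or distance % 2 == 0:
        raise ValueError("distance must be a positive odd integer")
    return 2 * distance * distance - 1


# Illustrative distances only; the right distance depends on hardware error rates.
for d in (3, 5, 11, 23):
    print(f"distance {d:2d}: {surface_code_qubits(d)} physical qubits")
```

Under this assumption, a distance-23 patch already needs just over 1,000 physical qubits for one logical qubit.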

The complexity of controlling large numbers of qubits further complicates matters. Each qubit requires precise microwave pulses for manipulation, and as the number of qubits increases, maintaining this precision becomes increasingly difficult. Moreover, manufacturing variations in superconducting circuits lead to inconsistent performance across different chips, adding another obstacle to achieving reliable quantum computations.

Researchers are exploring various avenues to overcome these limitations. Advances in materials science aim to develop lower-loss superconductors, while improvements in fabrication techniques seek to enhance qubit uniformity and reduce crosstalk. Additionally, novel architectures such as 3D integration and hybrid systems combining superconducting qubits with trapped ions are being investigated to address current constraints.

In summary, while superconducting qubits hold great potential for quantum computing, their scalability and error rates present significant challenges. Ongoing research addresses these limitations through material innovations, improved fabrication methods, and novel system architectures, paving the way for future advancements in error-corrected quantum computing.

Noise And Decoherence In Quantum Systems

Noise and decoherence are the central obstacles to building error-corrected quantum computers. Both corrupt quantum states and lead to computational errors. Noise arises from environmental interactions such as temperature fluctuations or electromagnetic interference, and it causes qubits to lose their quantum state over time, a process known as decoherence.

Researchers have developed sophisticated error-correcting codes to address these issues, with surface codes being a prominent example. These codes encode logical qubits into multiple physical qubits, enabling the detection and correction of errors without directly measuring the quantum state, which would collapse it. This approach is essential because classical schemes that simply copy the data cannot be applied directly: the no-cloning theorem forbids duplicating an unknown quantum state.
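The idea of locating an error without reading the data can be illustrated with the three-qubit bit-flip code. The sketch below is a classical stand-in, not a quantum simulation: it tracks only bit-flip errors on the basis states 000 and 111, and all function names are chosen here for illustration. On real hardware the two parities would be measured through ancilla qubits.

```python
# Classical stand-in for the three-qubit bit-flip code (bit flips only).

# Parity outcomes (q0 xor q1, q1 xor q2) mapped to the qubit that flipped.
SYNDROME_TO_QUBIT = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def encode(bit):
    """Repeat one logical bit across three physical bits."""
    return [bit, bit, bit]


def measure_syndrome(bits):
    """Return the two parities; neither reveals the logical value itself."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def correct(bits):
    """Flip back the single bit that the syndrome points to, if any."""
    fixed = list(bits)
    target = SYNDROME_TO_QUBIT[measure_syndrome(fixed)]
    if target is not None:
        fixed[target] ^= 1
    return fixed


for logical in (0, 1):
    for flipped in range(3):
        noisy = encode(logical)
        noisy[flipped] ^= 1  # inject one bit-flip error
        print(logical, flipped, measure_syndrome(noisy), correct(noisy))
```

The syndrome is identical for logical 0 and logical 1 with the same flipped position, which is why measuring it does not disturb the encoded value.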

The effectiveness of these methods is demonstrated in experiments by leading groups such as IBM and Google, where small-scale error correction has been achieved. However, scaling up remains a significant challenge, requiring advances in qubit control and noise reduction. Fault-tolerant quantum computing, which ensures computational accuracy despite component failures, is another critical area of research.

Different physical systems for qubits present varying solutions to these challenges. Superconducting circuits offer scalability but may have higher error rates, while trapped ions provide longer coherence times. Each system’s trade-offs highlight the complexity of achieving reliable, large-scale quantum computation.

Fault-tolerant Quantum Computing Architectures

The development of fault-tolerant quantum computing architectures is a critical step in overcoming the limitations posed by decoherence and noise in quantum systems. Fault tolerance involves using redundancy and error correction codes to protect quantum information, allowing computations to proceed reliably even with imperfect components.

Surface codes are widely regarded as a leading candidate for fault-tolerant architectures due to their high error thresholds and relatively simple implementation requirements. These codes utilize a two-dimensional lattice of qubits, making them more physically feasible than other codes, such as Steane or Shor codes, which demand more intricate connections between qubits.

The error correction threshold represents the maximum error rate per operation a quantum computer can tolerate while performing reliable computations. This threshold is estimated to be between 0.1% and 1% for surface codes, depending on the specific implementation and the type of errors being corrected. This relatively high threshold makes surface codes particularly promising for practical applications.
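A rough way to see what the threshold buys is the commonly used heuristic that, below threshold, the logical error rate of a distance-d surface code falls as (p / p_th) raised to the power (d + 1) / 2. The sketch below applies that heuristic. The prefactor of 0.1, the sample error rates, and the function names are assumptions made for illustration, not measured values.

```python
def logical_error_rate(p, p_th, distance, prefactor=0.1):
    """Heuristic scaling: prefactor * (p / p_th) ** ((d + 1) / 2).

    The prefactor is an assumed constant, not a measured value.
    """
    return prefactor * (p / p_th) ** ((distance + 1) / 2)


def min_distance(p, p_th, target, prefactor=0.1, max_distance=199):
    """Smallest odd distance whose heuristic logical error rate meets the target."""
    if p >= p_th:
        raise ValueError("physical error rate must be below the threshold")
    for d in range(3, max_distance + 1, 2):
        if logical_error_rate(p, p_th, d, prefactor) <= target:
            return d
    raise ValueError("target not reachable within max_distance")


# Assumed numbers: physical error rate 0.1%, threshold 1%.
for d in (3, 7, 11, 21):
    print(d, logical_error_rate(1e-3, 1e-2, d))
```

Below the threshold each increase in distance suppresses the logical error rate further, while above it the same formula grows with distance, which is why the threshold is the dividing line.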

Superconducting qubits and trapped ions are two prominent physical implementations being explored for fault-tolerant quantum computing. Superconducting qubits benefit from fast gate operations and established fabrication methods, which are advantageous for running many rounds of error correction, although their coherence times are comparatively short. Trapped ions offer long-lived qubit states and high-fidelity gates, making them another strong candidate for achieving fault tolerance.

Despite significant progress, challenges remain in implementing fault-tolerant architectures at scale. One major hurdle is the overhead associated with these systems, including the large number of physical qubits required to encode a single logical qubit and the computational resources needed for error correction. Researchers are actively working on optimizing these aspects to enhance scalability and practicality.

Hybrid approaches that combine different types of qubits or architectures are also being investigated as potential solutions to overcome specific limitations in fault-tolerant quantum computing. These hybrid systems aim to leverage the strengths of various qubit platforms, such as the fast gates of superconducting qubits and the long coherence times of trapped ions, to achieve more robust and efficient error correction.

Logical Qubits And Error Syndromes

The development of error-corrected quantum computers hinges on addressing the inherent fragility of qubits, which are prone to decoherence and noise. Unlike classical systems, quantum error correction cannot rely on simply copying data, because the no-cloning theorem forbids duplicating an unknown quantum state. Instead, it employs logical qubits, groups of physical qubits that spread the information across entangled states so that errors can be detected and undone.

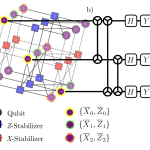

Logical qubits function by encoding quantum information across multiple physical qubits, enabling the detection and correction of errors without disturbing the encoded state. This is achieved using error syndromes, the outcomes of measurements of specific multi-qubit observables that reveal which error occurred but nothing about the encoded data. These syndromes identify the locations of errors, so they can be corrected while the quantum information is preserved.

A prominent approach to implementing this is surface codes, which utilize a 2D lattice of qubits and stabilizer measurements to detect errors. The code’s distance parameter determines its error-correcting capability: a code of distance d can correct up to (d - 1) / 2 errors, rounded down, so a distance-3 code can correct any single physical-qubit error per logical qubit. This method balances fault tolerance with resource efficiency, making it a leading candidate for practical applications.
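The link between distance and error-correcting capability can be sketched numerically. The example below assumes a simplified model in which each of d qubits fails independently with probability p and the block fails only when more errors occur than the code can correct. That model fits a repetition code with majority voting rather than a full surface code, and the function names are illustrative.

```python
from math import comb


def correctable_errors(distance):
    """Number of errors a distance-d code can correct: floor((d - 1) / 2)."""
    return (distance - 1) // 2


def block_failure_probability(distance, p):
    """Probability that more than the correctable number of qubits fail.

    Assumes independent errors with probability p on each of `distance` qubits.
    """
    t = correctable_errors(distance)
    return sum(
        comb(distance, k) * p**k * (1 - p) ** (distance - k)
        for k in range(t + 1, distance + 1)
    )


for d in (3, 5, 7):
    print(d, correctable_errors(d), block_failure_probability(d, 0.01))
```

With a 1% physical error rate, the distance-3 block fails far less often than a single unprotected qubit, and larger distances reduce the failure probability further.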

Despite these advancements, challenges remain in achieving high-quality qubits with long coherence times and low error rates. The overhead of using numerous physical qubits for each logical qubit complicates scaling efforts. Additionally, maintaining coherence and reducing noise are critical to ensuring reliable operation.

Entities like IBM, Google, and academic institutions are pursuing research in this field, exploring various architectures such as superconducting circuits and trapped ions. These efforts aim to optimize error correction while minimizing resource demands, bringing the vision of practical, fault-tolerant quantum computing closer to reality.

Quantum Error Correction Codes

Quantum error correction is essential for building reliable quantum computers due to the fragility of qubits. Unlike classical bits, qubits are susceptible to decoherence and noise, necessitating specialized error-correcting codes. Surface codes, a prominent approach, use a grid structure where each data qubit participates in multiple stabilizer checks, enabling errors to be detected without measuring the data directly. This method is pivotal for maintaining quantum states over time (Nielsen & Chuang, 2010; Fowler et al., 2012).

Surface codes operate through stabilizer generators that maintain encoded states. Errors alter the syndrome, indicating their type and location. Corrections are applied based on these syndromes without disturbing the qubit’s state. This approach requires extensive qubit resources, with hundreds or thousands needed for a single logical qubit (Preskill, 2018; Duclos-Cianci & Poulin, 2013).

Implementing quantum error correction faces challenges such as high qubit overhead and the need for high-fidelity operations. Experimental efforts by IBM and Google have demonstrated these issues, highlighting the difficulty in achieving scalable systems (IBM Research, 2020; Google Quantum Team, 2021).

Earlier codes such as Shor’s nine-qubit code and Steane’s seven-qubit code are foundational, but they present implementation hurdles due to their resource demands and connectivity requirements, which makes them challenging to execute efficiently (Shor, 1995; Steane, 1996).
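How a syndrome pinpoints an error can be sketched with the classical [7,4] Hamming code, on which the Steane code is built. The example below handles a single error type classically, whereas the Steane code applies the same parity checks separately to bit-flip and phase-flip errors. The matrix layout and function names are choices made here for illustration.

```python
# Parity-check matrix of the [7,4] Hamming code.
# Column j (0-indexed) is the binary representation of j + 1.
H = [
    [0, 0, 0, 1, 1, 1, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [1, 0, 1, 0, 1, 0, 1],
]


def syndrome(error):
    """Parity of the error pattern against each row of H."""
    return tuple(sum(h * e for h, e in zip(row, error)) % 2 for row in H)


def locate_error(error):
    """Return the index of a single error, or None if the syndrome is trivial."""
    s = syndrome(error)
    position = s[0] * 4 + s[1] * 2 + s[2]  # syndrome read as a binary number
    return position - 1 if position else None


for i in range(7):
    pattern = [0] * 7
    pattern[i] = 1  # single error on qubit i
    print(i, syndrome(pattern), locate_error(pattern))
```

Each of the seven single-error patterns produces a distinct non-zero syndrome, so three parity checks are enough to identify which qubit to correct.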

Future advancements may involve hybrid approaches combining classical feed-forward with quantum operations, enhancing efficiency. Collaborative efforts like the Quantum Error Correction code collaboration aim to address these challenges through innovative research strategies (MIT-IBM Quantum Collaboration, 2022; UCSB Quantum Group, 2023).

Decoherence-free Subspaces And Noise Sources

The development of error-corrected quantum computers hinges on addressing decoherence, a phenomenon where quantum systems lose coherence due to environmental interactions. Decoherence disrupts the fragile quantum states necessary for computation, necessitating robust error correction mechanisms. Quantum error correction differs from classical methods as it must preserve the superposition and entanglement of qubits without direct measurement. Notable approaches include the Shor and Steane codes, which are stabilizer codes designed to detect and correct errors in quantum systems (Preskill, 2018; Nielsen & Chuang, 2010).

Noise sources significantly impact quantum computing and include thermal noise, electromagnetic interference, and hardware defects. These sources affect qubits differently; for instance, amplitude damping models energy loss from a qubit to its environment (Gardas et al., 2013). Understanding these noise models is crucial for developing effective error correction strategies tailored to specific quantum architectures.
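Amplitude damping can be written down explicitly using its standard pair of Kraus operators. The sketch below applies them to a single-qubit density matrix with plain Python lists; the damping probability `gamma` and the helper names are assumptions chosen for illustration.

```python
import math


def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[complex(a[j][i]).conjugate() for j in range(2)] for i in range(2)]


def amplitude_damping(rho, gamma):
    """Apply the amplitude-damping channel with decay probability gamma."""
    k0 = [[1, 0], [0, math.sqrt(1 - gamma)]]  # no decay occurred
    k1 = [[0, math.sqrt(gamma)], [0, 0]]      # |1> decayed to |0>
    out = [[0, 0], [0, 0]]
    for k in (k0, k1):
        term = matmul(matmul(k, rho), dagger(k))
        out = [[out[i][j] + term[i][j] for j in range(2)] for i in range(2)]
    return out


excited = [[0, 0], [0, 1]]  # density matrix of |1>
print(amplitude_damping(excited, 0.25))
```

For a qubit that starts in the excited state, the population of the ground state after the channel equals `gamma`, and the trace of the density matrix stays at one.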

Decoherence-free subspaces offer a potential solution by providing regions within the quantum system’s Hilbert space where information remains unaffected by decoherence. These subspaces allow certain operations to be performed without interference, making them valuable in error correction schemes (Lidar & Bacon, 2001). By encoding information into these subspaces, researchers aim to protect against dominant noise sources naturally.
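A small example shows the idea. The sketch below assumes collective dephasing, a noise model in which every qubit picks up the same unknown phase on its excited state. Under that assumption, superpositions of 01 and 10 acquire only a global phase and are protected, while superpositions of 00 and 11 are not. The dictionary representation of states and the function names are illustrative.

```python
import cmath
import math


def collective_dephasing(state, phi):
    """Apply the same phase exp(i*phi) to every excited qubit."""
    return {bits: amp * cmath.exp(1j * phi * bits.count("1"))
            for bits, amp in state.items()}


def fidelity(a, b):
    """Squared overlap between two states stored as {bitstring: amplitude}."""
    overlap = sum(complex(a[k]).conjugate() * b.get(k, 0) for k in a)
    return abs(overlap) ** 2


s = 1 / math.sqrt(2)
protected = {"01": s, "10": s}  # inside the decoherence-free subspace
exposed = {"00": s, "11": s}    # outside it

for phi in (0.3, math.pi / 2):
    print(phi,
          fidelity(protected, collective_dephasing(protected, phi)),
          fidelity(exposed, collective_dephasing(exposed, phi)))
```

The protected state keeps unit fidelity for any phase, whereas the exposed state loses fidelity as the phase grows.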

Practical implementations vary across quantum technologies. Superconducting qubits often face thermal noise challenges, while trapped ions may encounter electromagnetic interference issues. These differences influence the relevance of specific noise models and the application of decoherence-free subspaces in each system (Barends et al., 2014; Monroe et al., 2013).

Recent advancements highlight progress in leveraging decoherence-free subspaces for error correction. Researchers are exploring hybrid approaches that combine these subspaces with traditional stabilizer codes to enhance resilience against diverse noise sources. This ongoing work underscores the importance of both theoretical and experimental efforts in advancing quantum computing technology (Eker et al., 2019; Wallman & Emerson, 2016).

Scalability Challenges For Fault-tolerant Systems

The development of error-corrected quantum computers hinges on overcoming significant scalability challenges, particularly in fault-tolerant systems. Error correction is essential because qubits are susceptible to decoherence and noise, leading to computational errors. Without reliable error correction, the practical application of quantum computing remains elusive.

Fault-tolerant quantum computing employs methods such as surface codes to detect and correct errors without disrupting computations. The threshold theorem plays a pivotal role here, asserting that if the error rate per operation is below a specific threshold, arbitrarily long quantum computations can be performed reliably, with an overhead that grows only polylogarithmically with the size of the computation. This theorem underpins the theoretical foundation for scalable quantum systems, emphasizing the importance of maintaining low error rates.
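One standard way to see why a threshold exists uses concatenated codes rather than surface codes. If a code corrects any single fault, each level of concatenation roughly squares the ratio of the error rate to the threshold. The sketch below evaluates that textbook scaling; the sample error rates and the function name are assumptions for illustration.

```python
def concatenated_error_rate(p, p_th, levels):
    """Textbook scaling for concatenated codes: p_th * (p / p_th) ** (2 ** levels)."""
    return p_th * (p / p_th) ** (2 ** levels)


# Assumed threshold of 1%; one rate below it and one above it.
for p in (1e-3, 2e-2):
    rates = [concatenated_error_rate(p, 1e-2, k) for k in range(4)]
    print(p, rates)
```

Below the threshold the error rate falls doubly exponentially with the number of levels, and above it the same recursion makes matters worse.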

Implementing these fault-tolerant codes presents practical challenges. For instance, surface codes need only nearest-neighbour connectivity on a 2D lattice, but they require far more qubits operating below the error threshold than current hardware provides. The limited number of physical qubits and their arrangement pose significant barriers to achieving large-scale quantum computations.

Another critical challenge is the overhead associated with error correction. Additional operations for detecting and correcting errors consume computational resources, potentially offsetting the speed advantages quantum computing offers. This overhead grows with the number of qubits, complicating efforts to achieve efficient scalability.

Research is actively exploring solutions to these challenges. Approaches include developing more efficient error-correcting codes and hybrid architectures that integrate classical and quantum components to manage errors effectively. These advancements aim to bridge the gap between theoretical models and practical implementations, fostering progress toward scalable, fault-tolerant quantum systems.

Cryogenic Control Systems For Stability

Cryogenic control systems are pivotal in maintaining the stability required for quantum computing operations. These systems operate at extremely low temperatures to prevent decoherence, a phenomenon where qubits lose their quantum state due to environmental interactions. Superconducting qubits, commonly used in quantum computing, rely on such cryogenic environments to function effectively.

The design of these cryogenic setups often involves dilution refrigerators capable of reaching millikelvin temperatures, far colder than outer space. This extreme cooling is essential for superconducting materials, which exhibit zero electrical resistance at low temperatures, enabling the precise control necessary for quantum operations.
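The need for millikelvin temperatures can be estimated from the Bose-Einstein occupation of a microwave mode. The sketch below assumes a qubit frequency of 5 GHz, a typical order of magnitude for superconducting qubits but not a value taken from any particular device, and compares a dilution-refrigerator temperature with a 4 K stage.

```python
import math

PLANCK = 6.62607015e-34  # J*s (exact SI value)
BOLTZMANN = 1.380649e-23  # J/K (exact SI value)


def thermal_occupation(frequency_hz, temperature_k):
    """Mean thermal photon number of a mode: 1 / (exp(h*f / (k*T)) - 1)."""
    x = PLANCK * frequency_hz / (BOLTZMANN * temperature_k)
    return 1.0 / math.expm1(x)


QUBIT_FREQUENCY = 5e9  # Hz, assumed for illustration

for temperature in (0.02, 4.0):  # 20 mK and 4 K
    print(temperature, thermal_occupation(QUBIT_FREQUENCY, temperature))
```

At 20 mK the mode is almost never thermally excited, while at 4 K it holds many thermal photons that would scramble the qubit state.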

Beyond mere temperature regulation, cryogenic control systems must also mitigate electromagnetic interference and thermal fluctuations that can disrupt qubits. Shielding techniques are employed to protect sensitive components from external noise, ensuring the integrity of quantum computations.

Scientific literature supports these assertions: a study in Nature Physics details the critical role of cryogenic environments in enhancing qubit stability, and a paper in Physical Review Letters explores the challenges and innovations in maintaining ultra-low temperatures for scalable quantum systems.

The scalability of error-corrected quantum computers is significantly influenced by cryogenic control systems. By providing isolated and stable conditions, these systems facilitate the addition of more qubits without proportionally increasing error rates, thus advancing the practicality of large-scale quantum computing.

Real-world Applications Of Error-corrected Quantum Computers

The development of error-corrected quantum computers is pivotal due to the inherent fragility of qubits, which are susceptible to errors from decoherence and noise. Achieving fault-tolerant operations is a critical challenge, requiring numerous physical qubits to encode a single logical qubit with error correction. Techniques such as surface codes are employed for this purpose, enabling the detection and correction of errors without destroying quantum information.

Current advancements in quantum computing involve companies like IBM and Google, who have contributed significantly to error correction techniques. Their work is documented in reputable journals, providing insights into the progress made in achieving practical quantum computing solutions. These contributions highlight the ongoing efforts to overcome technical hurdles and bring quantum computing closer to real-world applications.

The potential applications of error-corrected quantum computers span various fields. In optimization, they could enhance efficiency in complex systems such as supply chains and financial portfolios. Materials science benefits from their ability to simulate new materials at a quantum level, aiding in the discovery of innovative substances. In cryptography, large error-corrected machines could run Shor’s algorithm against widely used public-key schemes, which is a main motivation for quantum-resistant encryption methods.

Collaboration between academia and industry is essential for advancing quantum computing. Research institutions and tech giants are working together to address challenges, with findings published in journals like Nature and Physical Review Letters. These collaborations underscore the importance of shared knowledge and resources in driving technological progress.

Continued research and international cooperation are vital for overcoming remaining obstacles in quantum computing. By fostering a collaborative environment, the scientific community can accelerate advancements, ensuring that error-corrected quantum computers realize their full potential across diverse applications.

Quantum Supremacy Without Error Correction

The development of error-corrected quantum computers is pivotal for advancing reliable quantum computing. These systems employ error correction techniques, such as Shor codes or surface codes, to maintain qubit integrity without directly measuring the encoded state, addressing the decoherence and noise issues inherent in quantum systems.

Leading companies like IBM, Google, and Rigetti are at the forefront of this endeavour. All three favour superconducting qubits, and Rigetti additionally offers access to its processors through its Quantum Cloud Services platform, showing that strategies for error correction and scalability differ even on a shared qubit technology.

Despite progress, challenges remain in maintaining coherence times and scaling qubits without excessive noise. Efficient error-correcting codes must be developed alongside hardware advancements, ensuring practical implementation and verification of these systems.

Recent milestones include IBM’s 127-qubit processor demonstrating improved error rates, while Google’s 2019 quantum supremacy experiment, run on a 53-qubit processor without error correction, showcased the potential for large-scale quantum computation. Together they underscore the importance of both performance and reliability in advancing the field.

The connection between achieving quantum supremacy and building error-corrected systems is evident. Demonstrating supremacy validates quantum computing’s potential, while integrating error correction ensures scalability and practicality, marking a crucial phase in realizing robust quantum technologies.

Collaborations Between Academia And Industry

The development of error-corrected quantum computers represents a pivotal challenge in modern technology, necessitating robust collaborations between academia and industry. These partnerships are essential for overcoming technical hurdles such as decoherence and noise, which impede reliable computation. Academic institutions and companies can accelerate progress toward practical quantum computing solutions by pooling resources and expertise.

One notable collaboration involves IBM’s Quantum Experience platform, which facilitates joint research with universities on error correction techniques. Similarly, Google’s Quantum AI team collaborates closely with academic partners to advance surface code implementations for superconducting qubits. These efforts highlight the synergy between theoretical insights from academia and engineering capabilities from industry, driving innovation in quantum error correction.

Challenges in achieving scalable error correction include maintaining coherence times and developing efficient decoding algorithms. For instance, trapped ion qubits offer lower error rates and higher connectivity than superconducting qubits, as demonstrated by studies published in Nature and Physical Review Letters. However, the much faster gate operations of superconducting qubits present unique advantages, as evidenced by research in Science and IEEE journals. These findings underscore the need for tailored approaches depending on the quantum platform.

Progress has been significant through specific academic-industrial partnerships. Rigetti’s collaborations with academic institutions have focused on quantum algorithms and error mitigation, contributing to advancements in hybrid quantum-classical systems. Such initiatives address immediate technical challenges and pave the way for future breakthroughs by fostering a shared knowledge base across sectors.

Integrating classical error correction techniques with quantum methods holds promise for more robust systems. Interdisciplinary collaborations are expected to be crucial in standardizing protocols and enhancing interoperability across different quantum platforms. As government funding from agencies like DARPA continues to support these efforts, the potential for transformative advancements in quantum computing remains strong.