Gaussian boson sampling holds considerable promise as a pathway to demonstrating quantum advantage, yet practical implementations inevitably suffer from noise. Byeongseon Go, Changhun Oh, and Hyunseok Jeong, from NextQuantum Innovation Research Center and Seoul National University, now establish the conditions under which noisy boson sampling remains computationally challenging for classical computers. Their work rigorously defines a threshold for photon loss, a common imperfection in current devices, below which the problem retains the same complexity as its ideal, noise-free counterpart, even when the number of lost photons grows logarithmically with the number of input photons. By directly quantifying the difference between ideal and lossy boson sampling, the team delivers the first definitive characterisation of classically intractable regimes, representing a crucial advance towards realising quantum advantage with near-term technology.

Lossy Gaussian Boson Sampling Hardness Conditions

Gaussian boson sampling (GBS) is a promising approach for demonstrating quantum advantage, but current implementations are limited by imperfect hardware. This work investigates the conditions under which lossy GBS, where photons are lost during the process, remains computationally difficult for classical computers to simulate. Researchers establish a connection between the complexity of simulating lossy GBS and the difficulty of computing the permanent of a complex matrix. The team demonstrates that simulating lossy GBS with a given number of photons and modes is hard if the probability of photon loss is below a certain threshold and the interferometer matrix has a sufficiently large minimum singular value.

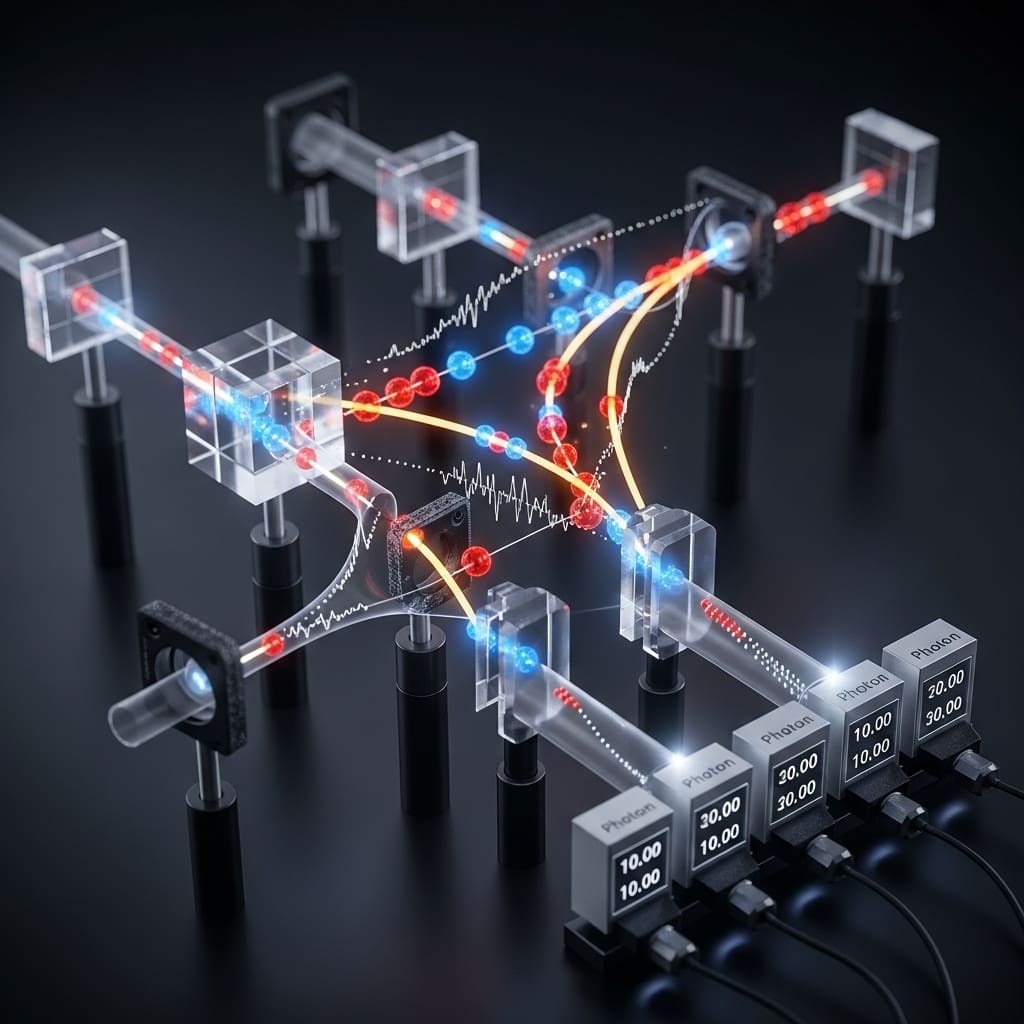

The researchers prove that if the interferometer matrix possesses a minimum singular value above a specific level, then simulating the output distribution of lossy GBS requires computational time that increases exponentially with the number of photons. This result expands upon previous analyses of GBS, which often assumed perfect sampling or restricted the interferometer’s structure. The approach maps the problem of calculating probabilities in lossy GBS to the problem of estimating the hafnian of a complex matrix, a generalisation of the permanent, allowing researchers to leverage existing tools from computational complexity theory. This mapping circumvents the difficulties of directly analysing the complex interference patterns in GBS, enhancing understanding of GBS’s computational power and providing guidance for designing experiments likely to demonstrate quantum advantage.
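To make the matrix functions behind these hardness arguments concrete, the following is a minimal brute-force sketch, not the method used in the paper. It relies on the standard fact that GBS output probabilities are governed by hafnians, and that the permanent of a matrix B equals the hafnian of the symmetric block matrix [[0, B], [Bᵀ, 0]]. The function names `permanent`, `hafnian`, and `bipartite_embedding` are illustrative choices, and both routines take time that grows faster than exponentially, so they are only suitable for very small matrices.

```python
from itertools import permutations


def permanent(B):
    """Permanent of a square matrix: sum over permutations of entry products."""
    n = len(B)
    total = 0
    for perm in permutations(range(n)):
        term = 1
        for i, j in enumerate(perm):
            term *= B[i][j]
        total += term
    return total


def hafnian(A):
    """Hafnian of a symmetric matrix: sum over perfect matchings of the indices."""
    n = len(A)
    if n % 2 == 1:
        return 0  # an odd number of indices has no perfect matching

    def rec(idx):
        if not idx:
            return 1
        first, rest = idx[0], idx[1:]
        total = 0
        # Pair the first index with each remaining index, then recurse.
        for k, partner in enumerate(rest):
            total += A[first][partner] * rec(rest[:k] + rest[k + 1:])
        return total

    return rec(tuple(range(n)))


def bipartite_embedding(B):
    """Build the symmetric 2n x 2n block matrix [[0, B], [B^T, 0]]."""
    n = len(B)
    M = [[0] * (2 * n) for _ in range(2 * n)]
    for i in range(n):
        for j in range(n):
            M[i][n + j] = B[i][j]
            M[n + j][i] = B[i][j]
    return M


if __name__ == "__main__":
    B = [[1 + 1j, 2], [0.5j, 3 - 1j]]
    print(permanent(B), hafnian(bipartite_embedding(B)))
```

The two printed values agree, which illustrates why hardness results for permanents carry over to hafnian-based sampling problems.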

Loss Tolerance in Gaussian Boson Sampling

This research rigorously analyses the impact of photon loss on the computational hardness of Gaussian boson sampling (GBS). GBS is a promising candidate for demonstrating quantum advantage, but real-world quantum devices are noisy, and photon loss is a significant source of error. This work aims to quantify the impact of loss, establish rigorous bounds on the difference between ideal and lossy GBS, and determine the conditions under which GBS remains computationally hard. The analysis focuses on the standard GBS setup, in which squeezed vacuum states are passed through a random interferometer and the resulting photon numbers are measured.

Squeezed vacuum states exhibit reduced noise in one quadrature at the expense of increased noise in the conjugate quadrature, and the randomness of the interferometer is key to the computational hardness of GBS. Photon loss is modelled as a loss channel, which introduces errors in the output photon number distribution. Researchers use covariance matrices to describe Gaussian states, and employ quantum fidelity and total variation distance to measure how close two states or output distributions are. The research demonstrates that the total variation distance between ideal and lossy GBS can be bounded in terms of the loss parameter and the squeezing parameter.
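As a rough illustration of the covariance-matrix picture, the sketch below applies a pure-loss channel to a single-mode squeezed vacuum state. It is a toy example under stated assumptions, not the paper's construction: the convention (ħ = 1, vacuum variance 1/2), the transmissivity symbol `eta`, and all function names are choices made here for illustration. In this convention the loss channel maps a covariance matrix σ to ησ + (1 − η)I/2, and the mean photon number falls from sinh²(r) to η·sinh²(r).

```python
import math


def squeezed_vacuum_cov(r):
    """Covariance matrix of a single-mode squeezed vacuum (vacuum variance 1/2).

    The x quadrature is squeezed by exp(-2r); the p quadrature is stretched by exp(2r).
    """
    return [[0.5 * math.exp(-2 * r), 0.0],
            [0.0, 0.5 * math.exp(2 * r)]]


def apply_loss(cov, eta):
    """Pure-loss channel with transmissivity eta: cov -> eta*cov + (1 - eta)*I/2."""
    n = len(cov)
    return [[eta * cov[i][j] + (0.5 * (1 - eta) if i == j else 0.0)
             for j in range(n)]
            for i in range(n)]


def mean_photon_number(cov):
    """Mean photon number of a zero-mean single-mode Gaussian state."""
    return 0.5 * (cov[0][0] + cov[1][1]) - 0.5


if __name__ == "__main__":
    r, eta = 1.0, 0.8  # illustrative squeezing and transmissivity values
    ideal = squeezed_vacuum_cov(r)
    lossy = apply_loss(ideal, eta)
    print(mean_photon_number(ideal), mean_photon_number(lossy))
```

Setting `eta = 0` returns the vacuum covariance matrix, and `eta = 1` leaves the state unchanged, which are the two limiting cases of the loss parameter.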

This bound is crucial for understanding how loss affects the output distribution. The team establishes conditions on the loss parameter that ensure GBS remains computationally hard, expressed in terms of the squeezing parameter and the number of photons. The total variation distance serves as a metric to quantify the difference between ideal and lossy GBS, providing a measure of how distinguishable the two output distributions are. The squeezing parameter plays a crucial role, with higher squeezing levels potentially mitigating the effects of loss, but requiring more precise experimental control. The number of photons also affects the hardness of GBS, with larger numbers generally making the problem more difficult to solve classically, but also increasing sensitivity to loss.
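The role of total variation distance can be illustrated with a single-mode toy calculation. The sketch below is an assumption-laden example rather than the paper's multi-mode bound: it uses the known photon number distribution of a squeezed vacuum state, models loss as binomial thinning of that distribution with transmissivity `eta`, and truncates at a cutoff `n_max`. All names and parameter values are illustrative.

```python
import math


def squeezed_vacuum_pmf(r, n_max):
    """Photon number distribution of a single-mode squeezed vacuum, truncated at n_max.

    Only even photon numbers occur: P(2k) = C(2k, k) * (tanh(r)/2)^(2k) / cosh(r).
    """
    t = math.tanh(r)
    pmf = [0.0] * (n_max + 1)
    for k in range(n_max // 2 + 1):
        pmf[2 * k] = math.comb(2 * k, k) * (t / 2) ** (2 * k) / math.cosh(r)
    return pmf


def apply_loss(pmf, eta):
    """Binomial thinning: each photon survives independently with probability eta."""
    out = [0.0] * len(pmf)
    for n, p in enumerate(pmf):
        for m in range(n + 1):
            out[m] += p * math.comb(n, m) * eta ** m * (1 - eta) ** (n - m)
    return out


def total_variation_distance(p, q):
    """Half the L1 distance between two distributions on the same outcomes."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


if __name__ == "__main__":
    ideal = squeezed_vacuum_pmf(r=0.5, n_max=40)
    for eta in (1.0, 0.9, 0.5):
        lossy = apply_loss(ideal, eta)
        print(eta, total_variation_distance(ideal, lossy))
```

In this toy model the distance is zero without loss and grows as the transmissivity drops, partly because loss populates odd photon numbers that never occur in the ideal distribution.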

This work provides a rigorous mathematical analysis of the impact of loss on GBS, with practical implications for designing experiments and developing error mitigation techniques. The results provide a deeper theoretical understanding of the computational hardness of GBS and establish a clear threshold for the amount of loss that can be tolerated before GBS becomes classically simulable. The findings can be used to benchmark the performance of different GBS implementations.

Loss Tolerant Boson Sampling Complexity Confirmed

This research establishes a firm theoretical foundation for understanding the classical difficulty of simulating Gaussian boson sampling (GBS) even when photon loss occurs, a common imperfection in current experimental setups. Researchers demonstrate that GBS remains computationally challenging for classical computers as long as the number of lost photons scales logarithmically with the number of input photons. This finding improves upon previous results for similar sampling problems and provides a crucial step towards demonstrating a practical quantum advantage with near-term devices. The team rigorously characterised how the probability of obtaining a specific output in a lossy GBS experiment relates to the ideal, lossless case.

By establishing a method to estimate ideal probabilities from lossy data, they proved that the computational hardness of GBS is maintained under this realistic noise model. This analysis highlights a key difference between lossy GBS and lossy Fock state boson sampling: the simple post-selection technique available for the latter is not applicable to GBS, which necessitates the new approach presented in this research. While this work provides a significant advance, the authors acknowledge that the current bounds on photon loss are not necessarily the tightest possible. Future research will focus on achieving even stronger hardness results, extending beyond the logarithmic loss regime, and addressing the impact of other sources of experimental imperfection, such as partial distinguishability of photons. These advancements are essential for ultimately confirming the classical intractability of simulating realistic near-term GBS experiments and solidifying the path towards practical quantum computation.

👉 More information

🗞 Sufficient conditions for hardness of lossy Gaussian boson sampling

🧠 ArXiv: https://arxiv.org/abs/2511.07853