The pursuit of a functional quantum computer, capable of solving problems intractable for classical machines, necessitates a clear pathway beyond simply increasing qubit counts. Researchers now present a performance-centric roadmap, linking algorithmic success directly to required gate fidelity and system size, thereby establishing quantifiable development targets. This approach prioritises demonstrable performance gains as systems scale, charting a course through the current era of noisy intermediate-scale quantum (NISQ) computing towards fault-tolerant, large-scale quantum computation. R. Barends and F.K. Wilhelm, alongside colleagues at the Institute for Functional Quantum Systems and the Institute for Quantum Computing Analytics at Forschungszentrum Jülich, detail their analysis in the paper, “Performance-centric roadmap for building a superconducting quantum computer”. Their work proposes a phased development strategy designed to maximise algorithmic capability and ultimately achieve quantum advantage.

Quantum computation is currently limited by qubit coherence times, which has prompted substantial research into mitigating decoherence and improving qubit performance. Coherence time, the duration for which a qubit maintains its quantum state, bounds how many operations can run before errors accumulate. It therefore limits the complexity of the computations a quantum processor can perform. Scientists are investigating materials science and fabrication techniques to extend coherence and raise fidelity, laying a crucial foundation for scalable quantum systems. The roadmap makes these goals quantitative. It links gate fidelity, a measure of how accurately a quantum operation is performed, to system-size targets, and it prioritises performance improvements as systems scale.
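To see how coherence caps gate fidelity, consider a standard single-qubit result. Suppose the only errors during a gate of duration t are energy relaxation (time constant T1) and dephasing (time constant T2). Then the average gate fidelity is F = 1/2 + e^(-t/T1)/6 + e^(-t/T2)/3. The sketch below evaluates this expression. The 25 ns gate time and the coherence values are illustrative assumptions, not figures from the paper.

```python
import math


def coherence_limited_fidelity(t_gate: float, t1: float, t2: float) -> float:
    """Average single-qubit gate fidelity if the only error source is T1/T2
    decoherence acting for the gate duration (all times in the same unit)."""
    # Populations relax as exp(-t/T1); coherences decay as exp(-t/T2).
    return 0.5 + math.exp(-t_gate / t1) / 6 + math.exp(-t_gate / t2) / 3


def coherence_limited_error(t_gate: float, t1: float, t2: float) -> float:
    """Gate error 1 - F; for t_gate << T1, T2 this is about t/(6*T1) + t/(3*T2)."""
    return 1.0 - coherence_limited_fidelity(t_gate, t1, t2)


# Illustrative numbers (assumed, not from the paper): 25 ns gate, times in microseconds.
for t_coh in (50.0, 100.0, 200.0):
    err = coherence_limited_error(0.025, t1=t_coh, t2=t_coh)
    print(f"T1 = T2 = {t_coh:>5.0f} us -> coherence-limited error ~ {err:.2e}")
```

Because the error is roughly linear in t/T for short gates, each doubling of coherence time approximately halves the coherence-limited gate error.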

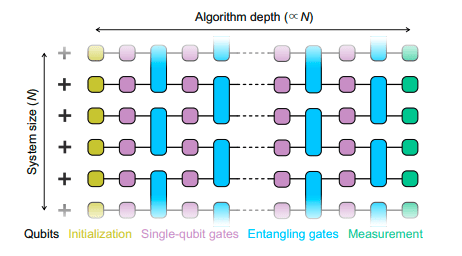

The paper delineates four distinct phases of development. They progress from initial hardware refinement, through computational advantage with NISQ devices, to a large-scale quantum computer that uses quantum error correction (QEC). QEC encodes quantum information redundantly across many physical qubits so that errors can be detected and corrected, which is essential for reliable computation at scale. The central premise is to optimise the algorithmic radius, a measure of the size of the algorithms a quantum computer can run, and to use it as the key metric of progress.
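The paper's exact definition of algorithmic radius is not reproduced here. Instead, the sketch below is a hypothetical illustration of why fidelity and qubit count must grow together. It assumes a "square" circuit of n qubits and depth n, containing about n² gates, each failing independently with error ε. It then asks for the largest n whose overall success probability stays above 1/e. Under this toy model the fidelity-limited size scales roughly as 1/√ε, so adding qubits beyond that point does not enlarge the runnable circuit. The function name `toy_algorithmic_radius` and the 1/e threshold are assumptions made for illustration.

```python
import math


def toy_algorithmic_radius(eps: float, n_qubits: int, min_success: float = 1 / math.e) -> int:
    """Largest n <= n_qubits such that an n-qubit, depth-n circuit succeeds with
    probability >= min_success. Hypothetical model: about n*n gates, each failing
    independently with probability eps (0 < eps < 1)."""
    # (1 - eps)**(n*n) >= min_success  <=>  n*n <= ln(min_success) / ln(1 - eps)
    max_gates = math.log(min_success) / math.log1p(-eps)
    fidelity_limited = math.isqrt(math.floor(max_gates))
    # Whichever runs out first, qubits or fidelity, sets the usable circuit size.
    return min(n_qubits, fidelity_limited)


for eps in (1e-2, 1e-3, 1e-4):
    for n_qubits in (100, 1000):
        radius = toy_algorithmic_radius(eps, n_qubits)
        print(f"gate error {eps:.0e}, {n_qubits} qubits -> toy radius {radius}")
```

In this model, going from 100 to 1000 qubits changes nothing unless the gate error also falls, which is the intuition behind a performance-centric rather than qubit-count-centric roadmap.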

Much of this work targets the physical sources of decoherence, which limit both coherence times and the complexity of solvable problems. Researchers are studying materials and fabrication techniques that improve coherence and gate fidelity. Particular attention goes to planar superconducting resonators, which are crucial components for qubit readout and control. Another focus is suppressing quasiparticle generation, a source of decoherence in superconducting circuits. Quasiparticles are excitations created when Cooper pairs in the superconductor are broken; when they tunnel across a qubit's Josephson junction they can cause energy relaxation. Tackling these loss mechanisms one by one removes specific sources of error and raises the overall performance of quantum systems.
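Losses in resonators and qubits are often summarised by a quality factor Q, which relates to the energy relaxation time by T1 = Q / (2πf). The sketch below assumes a commonly used participation-ratio loss model, 1/Q = Σ pᵢ·tan δᵢ. In that model pᵢ is the fraction of electric-field energy stored in material region i and tan δᵢ is that region's loss tangent. The specific participation ratios, loss tangents, and 5 GHz frequency are hypothetical values chosen for illustration, not results from the paper.

```python
import math


def t1_limit(freq_hz: float, participations: list[float], loss_tangents: list[float]) -> float:
    """T1 limit (seconds) from a participation-ratio loss model:
    1/Q = sum_i p_i * tan(delta_i), and T1 = Q / (2*pi*f)."""
    if len(participations) != len(loss_tangents):
        raise ValueError("need one loss tangent per participation ratio")
    inv_q = sum(p * tan_d for p, tan_d in zip(participations, loss_tangents))
    return 1.0 / (inv_q * 2 * math.pi * freq_hz)


# Hypothetical 5 GHz qubit: a thin lossy surface layer plus the bulk substrate.
participations = [1e-3, 0.9]
before = t1_limit(5e9, participations, [1e-3, 1e-7])
after = t1_limit(5e9, participations, [2.5e-4, 1e-7])  # cleaner surfaces
print(f"T1 limit: {before * 1e6:.0f} us -> {after * 1e6:.0f} us")
```

Although the surface layer holds only a small fraction of the field energy here, its high loss tangent dominates 1/Q. This is why materials and fabrication improvements can pay off directly in coherence.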

Work on qubit connectivity and control schemes aims to build larger, more complex systems without sacrificing fidelity, one of the central difficulties in scaling quantum processors. Early demonstrations of quantum error correction concepts, such as repetitive error detection, lay the foundation for fault-tolerant and scalable quantum computers. Measurement-based interleaved randomised benchmarking is a tool for characterising and improving qubit performance as systems scale. Interleaved randomised benchmarking estimates the error of a specific gate by inserting it between randomly chosen Clifford gates. It then compares how quickly the sequence fidelity decays against a reference sequence of random Clifford gates alone.
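The interleaving comparison reduces to a short calculation. The sketch below follows the standard interleaved-RB analysis; it does not implement the measurement-based variant named in the text. Both the reference and interleaved survival probabilities are modelled as A·p^m + B over sequence length m. The gate error is then estimated as r = (d − 1)/d · (1 − p_int/p_ref), with d = 2^n for n qubits. For simplicity the offset B is assumed known (1/2, as for ideal single-qubit depolarising decay), whereas real analyses fit A and B together. The decay values are synthetic, not measured data.

```python
import math


def fit_decay_rate(lengths, survival, offset):
    """Fit survival(m) = A * p**m + offset for p, assuming the offset is known,
    via least squares on log(survival - offset) versus sequence length m."""
    xs = list(lengths)
    ys = [math.log(s - offset) for s in survival]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return math.exp(slope)  # slope = ln(p)


def interleaved_gate_error(p_ref: float, p_int: float, n_qubits: int = 1) -> float:
    """Interleaved-RB estimate r = (d - 1)/d * (1 - p_int / p_ref), with d = 2**n."""
    d = 2 ** n_qubits
    return (d - 1) / d * (1 - p_int / p_ref)


# Synthetic, noise-free single-qubit data (illustrative values, not measurements).
lengths = [1, 10, 50, 100, 200]
reference = [0.5 * 0.998 ** m + 0.5 for m in lengths]    # random Cliffords only
interleaved = [0.5 * 0.997 ** m + 0.5 for m in lengths]  # target gate after each Clifford

p_ref = fit_decay_rate(lengths, reference, offset=0.5)
p_int = fit_decay_rate(lengths, interleaved, offset=0.5)
print(f"estimated gate error: {interleaved_gate_error(p_ref, p_int):.2e}")
```

Taking the ratio of the two decay rates cancels most of the error contributed by the random Clifford gates themselves. That is why interleaving isolates the target gate more robustly than a single decay curve would.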

A notable pattern emerges in the institutional origins of the research the roadmap builds on. A significant proportion comes from IBM and from a collaborative group formerly at the University of California, Santa Barbara, and now at Google. This concentration suggests that these organisations are at the forefront of superconducting quantum technologies, driving advances in both hardware and software. Their sustained work on the field's technical hurdles makes them key players.

The publication dates of this research trace a clear evolution in the field. Earlier studies concentrated on fundamental materials properties and fabrication processes, laying the groundwork for later work. Later studies increasingly tackle error correction and scaling, a sign of a maturing field. This progression from fundamentals to integrated systems mirrors the phased structure of the roadmap itself.

Government funding also plays a crucial role. Initiatives such as the German funding guideline for quantum projects help accelerate research and foster innovation. The practical realities of building these systems receive attention too, with component suppliers stressing the need for readily available hardware. Such hardware secures a sustainable supply chain and enables rapid prototyping. Taken together, materials science, error correction, and practical engineering are shaping the path of quantum computing towards a demonstrable computational advantage.

👉 More information

🗞 Performance-centric roadmap for building a superconducting quantum computer

🧠 DOI: https://doi.org/10.48550/arXiv.2506.23178