Jensen Huang’s CES 2026 keynote delivered a sweeping tour of AI’s transformation, from reasoning models and agentic systems to the dawn of physical AI and a new generation of supercomputing hardware.

Setting the Stage for a New Computing Paradigm

The Mandalay Bay Convention Center in Las Vegas played host to one of the most anticipated presentations of CES 2026 as NVIDIA CEO Jensen Huang took the stage on January 5th for what he promised would be a densely packed exploration of the company’s vision. True to form, Huang delivered a keynote spanning the full breadth of modern AI development, from the philosophical underpinnings of a transformed computing industry to the unveiling of NVIDIA’s next-generation Vera Rubin supercomputing platform.

Huang wasted no time establishing the magnitude of the shift underway. The entire five-layer stack of the computer industry, he argued, is being fundamentally reinvented. Software is no longer programmed in the traditional sense—it is trained. Computation has migrated from CPUs to GPUs. Applications that once ran as pre-compiled code on local devices now understand context and generate outputs from scratch with every interaction. This is not incremental change; it is architectural transformation.

The financial dimensions of this transformation are staggering. Huang pointed to some ten trillion dollars of computing infrastructure from the past decade now undergoing modernisation, with hundreds of billions in venture capital funding flowing into AI annually. The source of this capital, he explained, is not mysterious: it represents a fundamental reallocation of research and development budgets from classical computing methods to artificial intelligence approaches across industries representing a hundred trillion dollars of economic activity.

“The ChatGPT moment for physical AI is nearly here.”

Jensen Huang, CEO of NVIDIA

The Convergence of Scaling Laws, Reasoning, and Agentic Systems

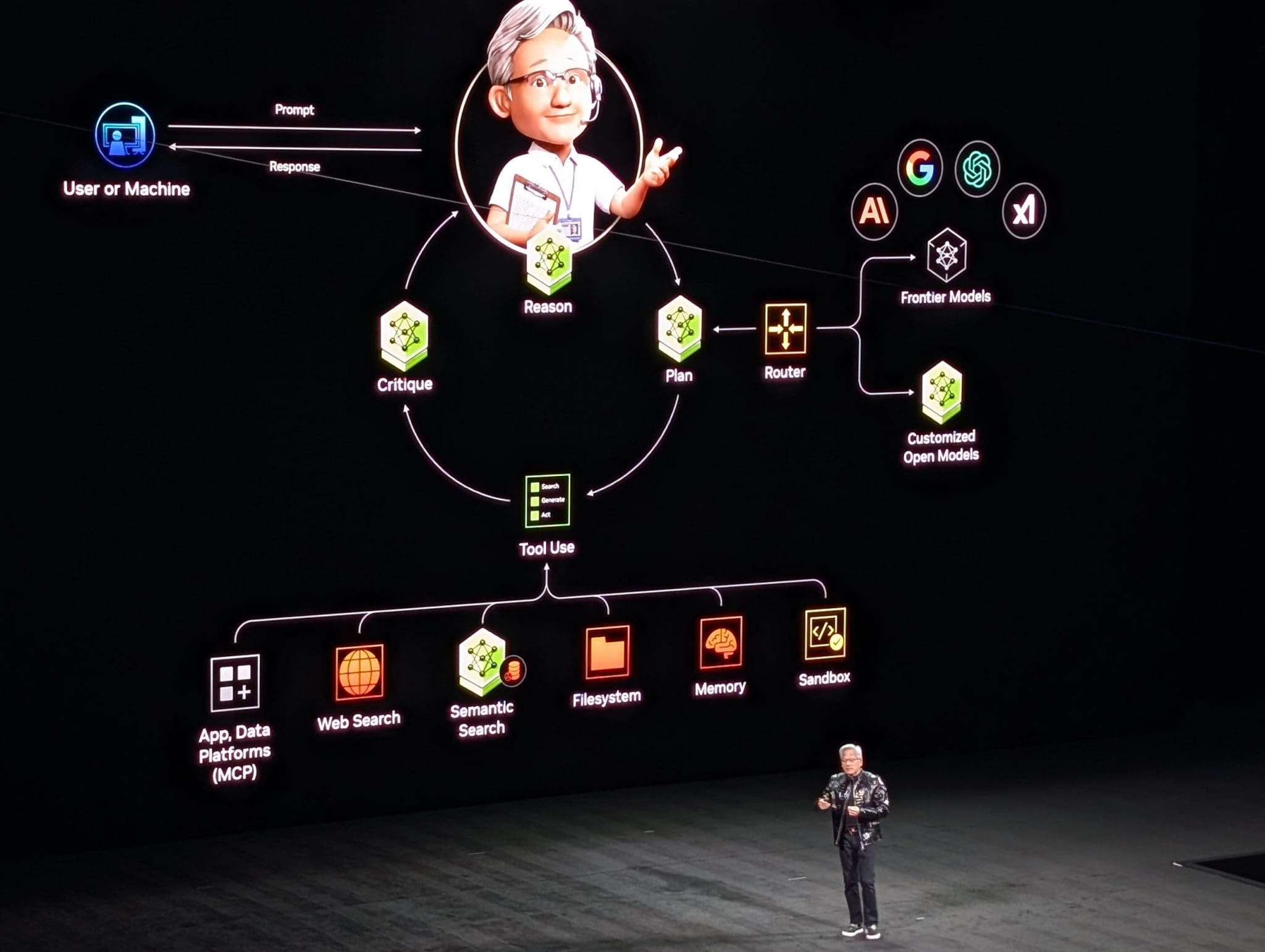

Much of Huang’s presentation traced the technical lineage that brought us to this moment. He cited the 2017 introduction of Transformers as foundational, followed by the watershed ChatGPT moment of 2022 that awakened the world to AI’s possibilities. But it was the emergence of reasoning models, beginning with OpenAI’s o1, that Huang identified as truly revolutionary.

The concept of test-time scaling, which Huang described in refreshingly plain terms as simply “thinking,” represents a fundamental expansion of how AI systems operate. Beyond pre-training to acquire knowledge and post-training with reinforcement learning to develop skills, AI models can now engage in real-time reasoning, extending computation at inference time to produce more sophisticated outputs. Each of these phases demands enormous computational resources, and Huang made clear that the scaling laws continue to hold: larger models trained on more data continue to improve in capability.

Agentic systems emerged as a central theme of the presentation. These models possess the ability to reason, conduct research, utilise tools, plan future actions, and simulate outcomes. Huang singled out Cursor, an AI-powered coding assistant, as having revolutionised software development practices within NVIDIA itself. The agentic architecture—featuring multiple specialised models coordinated by intelligent routing, capable of accessing external tools and information—represents what Huang characterised as the fundamental framework for future applications.
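The routing idea described above can be illustrated with a toy sketch. Everything in it is hypothetical: the three "models" are plain functions standing in for real model calls, and the keyword table is a stand-in for whatever routing logic a production system would use. It is not NVIDIA's or Cursor's implementation.

```python
from typing import Callable, Dict

# Hypothetical specialist "models": plain functions standing in for real model calls.
def code_model(prompt: str) -> str:
    return f"[code-model] {prompt}"

def research_model(prompt: str) -> str:
    return f"[research-model] {prompt}"

def general_model(prompt: str) -> str:
    return f"[general-model] {prompt}"

# Assumed routing table: keyword -> specialist. A real router would more
# likely be a learned classifier than a substring lookup.
ROUTES: Dict[str, Callable[[str], str]] = {
    "bug": code_model,
    "function": code_model,
    "search": research_model,
    "cite": research_model,
}

def route(prompt: str) -> Callable[[str], str]:
    """Return the first specialist whose keyword occurs in the prompt (substring match)."""
    lowered = prompt.lower()
    for keyword, model in ROUTES.items():
        if keyword in lowered:
            return model
    return general_model  # fallback when no specialist matches

def answer(prompt: str) -> str:
    """Dispatch the prompt to whichever model the router selects."""
    return route(prompt)(prompt)
```

Calling `answer("Please fix this bug")` dispatches to the coding specialist, while a prompt that matches no keyword falls through to the general model.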

The open model ecosystem received particular emphasis. Huang highlighted the emergence of DeepSeek R1 as the first open reasoning model, describing how it activated a global movement in open AI development. While acknowledging that open models remain approximately six months behind frontier proprietary models, Huang celebrated the explosive growth in downloads and the democratisation this enables for startups, researchers, students, and nations worldwide.

NVIDIA’s Open Model Contributions

Huang devoted considerable attention to NVIDIA’s own contributions to the open model ecosystem, noting that the company now operates billions of dollars’ worth of DGX supercomputers to develop its open models. The breadth of this work spans multiple domains: La-Proteina and OpenFold 3 for protein synthesis and structure understanding, Evo 2 for multi-protein generation, Earth-2 for physics-based weather prediction, and Nemotron 3 as a groundbreaking hybrid Transformer-SSM architecture optimised for extended reasoning.

The presentation introduced Cosmos, NVIDIA’s open world foundation model designed to understand how physical environments operate. Unlike language models trained on text, Cosmos learns from internet-scale video, real driving data, robotics information, and 3D simulation to develop what Huang described as a unified representation of the world—one that can align language, images, 3D environments, and actions.

NVIDIA’s approach to openness extends beyond model weights to include training data and comprehensive library systems. The NeMo libraries provide lifecycle management for AI systems, handling everything from data processing and generation through training, evaluation, guardrailing, and deployment. Huang positioned this infrastructure as essential for enabling every company, industry, and country to participate in the AI revolution.

Physical AI: Intelligence Meets the Real World

Perhaps the most forward-looking segment of Huang’s presentation concerned physical AI: the challenge of creating artificial intelligence that can understand and interact with the physical world. Huang has discussed this domain publicly for several years, and he revealed that NVIDIA has been working on the problem for eight.

The fundamental challenge is teaching machines what comes naturally to human children: object permanence, causality, friction, gravity, inertia. An AI must understand that objects persist when unobserved, that pushing something causes it to move, that a heavy truck requires significant force to stop. These are commonsense physics principles that must be explicitly learned by artificial systems.

Huang outlined a three-computer architecture for physical AI development. The first computer handles AI training. The second runs inference—the robotics computer deployed in vehicles, robots, or edge environments. The third, and perhaps most novel, is dedicated to simulation. Without the ability to simulate how the physical world responds to an AI’s actions, Huang argued, there is no way to evaluate whether the system is performing correctly.
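The division of labour between the three computers can be sketched as a train, simulate, deploy loop. This is a toy illustration under stated assumptions, not NVIDIA's pipeline: the `Policy` class, the braking parameter, and the 20 m/s and 40 m figures are all invented for the example, and the "simulator" is just the constant-deceleration stopping-distance formula v²/(2a).

```python
from dataclasses import dataclass

@dataclass
class Policy:
    brake_decel: float  # commanded deceleration in m/s^2 (hypothetical parameter)

def train(iteration: int) -> Policy:
    """Stand-in for the training computer: each iteration yields a stronger braking policy."""
    return Policy(brake_decel=2.0 + 2.0 * iteration)

def simulate(policy: Policy, speed: float = 20.0, obstacle_at: float = 40.0) -> bool:
    """Stand-in for the simulation computer: does the vehicle stop before the obstacle?

    Uses the constant-deceleration stopping distance v^2 / (2a).
    """
    stopping_distance = speed ** 2 / (2.0 * policy.brake_decel)
    return stopping_distance < obstacle_at

def develop(max_iterations: int = 10) -> Policy:
    """Train, evaluate in simulation, and return only a policy that passes."""
    for i in range(max_iterations):
        policy = train(i)
        if simulate(policy):
            return policy  # the policy that would be handed to the inference computer
    raise RuntimeError("no policy passed simulation")
```

The point of the sketch is the gate in `develop`: nothing reaches the deployed (inference) side until the simulated world confirms the behaviour is acceptable, which mirrors the evaluation role Huang assigned to the third computer.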

The data problem for physical AI differs fundamentally from language models. While text provides abundant training material, physical interaction data capturing the diversity of real-world scenarios remains scarce. NVIDIA’s solution leverages synthetic data generation grounded in physical laws. Cosmos can take output from a traffic simulator—too simplistic for direct AI training—and generate physically plausible surround video that provides the rich training signal AI systems require.

Huang announced Alpamayo as the world’s first “thinking, reasoning autonomous vehicle AI,” trained end-to-end from camera input to actuation output. It enables what Huang described as effectively travelling billions or trillions of miles through simulation, an essential capability for developing autonomous systems without proportional real-world testing.

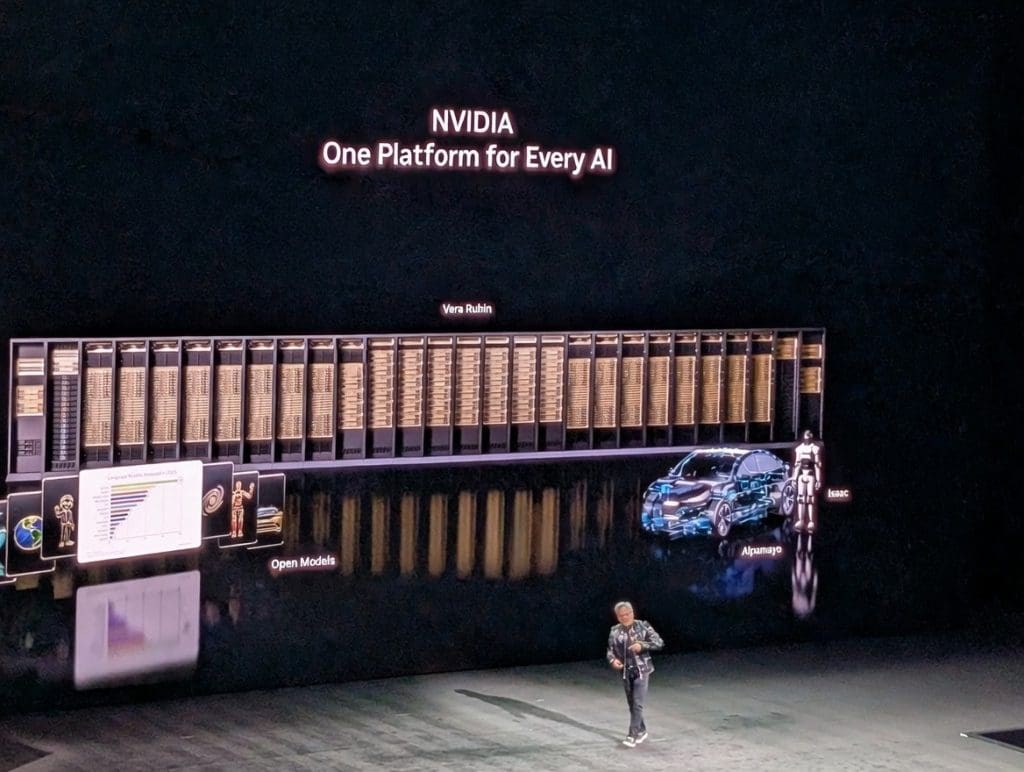

Vera Rubin: The Next Frontier of AI Infrastructure

The hardware centrepiece of the presentation was the unveiling of Vera Rubin, NVIDIA’s next-generation AI supercomputing platform. Named after the astronomer who discovered evidence for dark matter by observing galactic rotation curves, the system represents NVIDIA’s response to what Huang characterised as an existential challenge for the industry.

The computational demands of AI are growing at rates that traditional scaling cannot match. Models are increasing by an order of magnitude annually. Test-time scaling means inference now involves extended reasoning processes rather than single-shot responses, with token generation increasing by approximately five times per year. Meanwhile, competitive pressure drives token costs down by roughly ten times annually as companies race to reach each new frontier.
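To get a feel for how quickly these rates compound, here is a small back-of-the-envelope sketch. The three annual factors are the figures quoted above; multiplying model growth by token growth to get a "combined demand" number is an assumption made for illustration only, not something Huang stated.

```python
# Annual factors as quoted in the keynote summary above.
MODEL_GROWTH_PER_YEAR = 10   # model size: an order of magnitude per year
TOKEN_GROWTH_PER_YEAR = 5    # tokens generated: roughly 5x per year
COST_DECLINE_PER_YEAR = 10   # cost per token: falls roughly 10x per year

def compound(factor: float, years: int) -> float:
    """Total growth after `years` of compounding at `factor` per year."""
    return factor ** years

def relative_token_cost(years: int) -> float:
    """Cost per token relative to today (1.0 = today's cost)."""
    return 1 / compound(COST_DECLINE_PER_YEAR, years)

def combined_demand(years: int) -> float:
    """ASSUMPTION: compute demand scales with model size times tokens generated."""
    return compound(MODEL_GROWTH_PER_YEAR * TOKEN_GROWTH_PER_YEAR, years)
```

Under that multiplicative assumption, two years of compounding gives `combined_demand(2) == 2500` while `relative_token_cost(2)` falls to one hundredth of today's price, which is the squeeze the paragraph describes.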

Vera Rubin’s specifications reflect this reality. The platform delivers 100 petaflops of AI performance, five times its predecessor. The system comprises six breakthrough chips requiring a complete redesign of every component and a full rewrite of the software stack. A single Vera Rubin NVL72 rack incorporates 220 trillion transistors and weighs nearly two tons. The full system, comprising 1,152 GPUs across 16 racks, represents what NVIDIA describes as one giant leap to the next frontier of AI.
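The rack-level figures can be cross-checked with a few lines of arithmetic. The sketch assumes that all 16 racks are identical 72-GPU racks, which matches the quoted totals but is not stated explicitly in the keynote summary; the system-wide transistor count is derived on that assumption and is not a figure from the presentation.

```python
GPUS_PER_RACK = 72                      # GPUs that operate as one unit within a rack
RACKS = 16                              # racks in the full system
TRANSISTORS_PER_RACK = 220 * 10**12     # 220 trillion, as quoted

def total_gpus(racks: int = RACKS) -> int:
    """GPU count, assuming every rack holds the same number of GPUs."""
    return GPUS_PER_RACK * racks

def total_transistors(racks: int = RACKS) -> int:
    """Derived figure (assumption: all racks are identical)."""
    return TRANSISTORS_PER_RACK * racks
```

`total_gpus()` reproduces the 1,152 GPUs cited above, which confirms that the per-rack and full-system numbers are mutually consistent.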

The engineering achievements extend throughout the system. ConnectX-9 delivers 1.6 terabits per second of scale-out bandwidth to each GPU. BlueField-4 DPUs offload storage and security operations to keep compute fully focused on AI workloads. The NVLink switch moves more data than the global internet, connecting compute nodes so that up to 72 Rubin GPUs can operate as a unified system.

Huang emphasised that NVIDIA’s internal rule against changing more than one or two chips per generation had to be abandoned for Vera Rubin. The rate of AI advancement simply cannot be sustained if hardware development proceeds at traditional semiconductor industry pace.

On production status, Huang was unambiguous: Vera Rubin is in full production. GB200 systems began shipping a year and a half ago, GB300 is in full-scale manufacturing, and for Vera Rubin to reach customers this year, production had to begin in advance of the announcement.

Quantum Computing

Jensen Huang’s CES 2026 keynote did not directly address quantum computing or quantum technologies. The presentation focused entirely on classical AI systems and GPU-accelerated computing, and on their applications in language models, physical AI, and autonomous systems.

However, several aspects of the presentation carry indirect relevance for the quantum computing industry. The emphasis on simulation, centred on NVIDIA’s Omniverse platform for physically based digital twins, touches on a domain where quantum computers are expected to offer advantages for certain problem classes. The same holds for protein folding and molecular understanding, where models like La-Proteina and OpenFold 3 operate today and where quantum simulation may eventually provide complementary or superior capabilities.

The broader infrastructure trajectory Huang outlined, with massive data centres consuming gigawatts of power and the pursuit of ever-larger parameter counts and training datasets, highlights both the achievements and the limitations of classical approaches. While NVIDIA’s roadmap projects continued exponential improvement in GPU capabilities, the fundamental scaling challenges Huang acknowledged may ultimately create openings for quantum approaches in specific domains where classical methods reach practical limits.

For quantum computing companies, the presentation underscores both the competitive landscape and potential integration pathways. NVIDIA’s dominance in AI infrastructure creates a context where quantum systems will likely need to demonstrate clear advantages in specific applications rather than general-purpose capability. The emphasis on hybrid architectures and multi-model systems suggests future scenarios where quantum processors might serve as specialised accelerators within broader AI workflows orchestrated by classical systems.

Key Takeaways for Industry Observers

NVIDIA’s roadmap confirms aggressive annual advancement in AI infrastructure, with Vera Rubin representing a ten-times improvement over Blackwell in factory throughput and token generation cost efficiency. The company’s commitment to shipping major platform upgrades every year, rather than adhering to traditional semiconductor development cycles, establishes a pace that will influence the entire AI ecosystem.

The open model strategy positions NVIDIA as an enabler of AI democratisation rather than merely a hardware vendor. By providing frontier-class models, training data, and lifecycle management tools, the company is building an ecosystem that encourages adoption of its computing platforms across the broadest possible range of applications.

Physical AI emerges as NVIDIA’s major frontier bet beyond language models. The three-computer architecture—training, inference, and simulation—provides a framework for understanding how embodied AI systems will be developed, and positions NVIDIA’s Omniverse platform as essential infrastructure for robotics, autonomous vehicles, and industrial automation.

The partnership with Siemens, announced during the presentation, signals that physical AI applications are moving from research into industrial deployment. Integrating NVIDIA’s CUDA-X libraries, AI models, and Omniverse into Siemens’ design, simulation, and digital twin tools offers a pathway for AI to transform manufacturing and industrial operations at scale.

Looking Forward: The Race to Physical Intelligence

Jensen Huang’s CES 2026 presentation painted a picture of an industry in transformation so rapid that traditional development timelines have become obsolete. The simultaneous advancement of foundation models, reasoning capabilities, agentic architectures, and physical AI creates compounding pressure on computational infrastructure—pressure that NVIDIA is racing to address with annual platform generations.

The subtext throughout the presentation was clear: competitive advantage in AI requires not just access to capable models, but access to sufficient computation to reach each new frontier first. Training speed determines time to market. Factory throughput determines revenue potential. Token generation efficiency determines economic viability. In this environment, companies that cannot access state-of-the-art infrastructure risk falling permanently behind.

For industry observers, the implications extend beyond hardware specifications. NVIDIA’s vision positions AI not as a software application layer but as a fundamental transformation of how computing works, how applications are built, and how digital systems interact with the physical world. The company that built its reputation on graphics processing now explicitly frames its mission as enabling the next industrial revolution.

Whether the aggressive timelines Huang outlined prove achievable, and whether classical computing can sustain its current trajectory indefinitely, remain open questions. But the direction of travel is unmistakable: computation, intelligence, and physical world interaction are converging, and the infrastructure to support that convergence is being built at unprecedented scale and pace.