Some are reporting on the end of the NISQ era, an era that set out to accept noisy qubits and work around their flaws. Could the end of this paradigm be near? Alternative paradigms to noisy (NISQ) qubits exist, such as the topological qubits that Microsoft has pursued, although such approaches have struggled to gain traction.

Creating logical qubits from physical qubits is a major challenge. Unless each physical qubit is effectively noiseless, the number of physical qubits is always larger than the number of logical qubits that can be built from them. Headline qubit counts may sound impressive, but they must be scaled back, often by a significant factor, to arrive at the number of usable logical qubits.
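To put rough numbers on this scaling back, the sketch below assumes the rotated surface code, a widely studied error-correcting code in which one logical qubit of code distance d uses d² data qubits plus d² - 1 measurement qubits, or 2d² - 1 physical qubits in total. The 1,000-qubit device is hypothetical, the calculation ignores routing and other overheads, and other codes and hardware platforms have different costs.

```python
def surface_code_qubits_per_logical(distance: int) -> int:
    """Physical qubits for one rotated-surface-code logical qubit.

    Assumption: d*d data qubits plus d*d - 1 measurement qubits,
    ignoring routing, magic-state factories and other overheads.
    """
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance should be an odd integer >= 3")
    return 2 * distance * distance - 1


def logical_qubits_available(physical_qubits: int, distance: int) -> int:
    """How many whole logical qubits fit in a device of the given size."""
    return physical_qubits // surface_code_qubits_per_logical(distance)


if __name__ == "__main__":
    device_size = 1000  # hypothetical headline qubit count
    for d in (3, 5, 7, 11):
        per_logical = surface_code_qubits_per_logical(d)
        print(f"d={d:2d}: {per_logical:4d} physical per logical -> "
              f"{logical_qubits_available(device_size, d)} logical qubits")
```

With a hypothetical 1,000-qubit device, this gives 58 logical qubits at distance 3 but only 4 at distance 11. Higher distances tolerate more errors, but they consume far more of the headline qubit count.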

Researchers have been developing logical qubits, but typically a large number of physical qubits has yielded just a handful of logical qubits. Recently, however, Harvard researchers working with QuEra Computing demonstrated 48 logical qubits, a considerable number of error-corrected qubits.

Logical Qubits

A logical qubit is a concept in quantum computing that is distinguished from a physical qubit by its role in making computation more reliable. It is formed by encoding the information of a single qubit across multiple physical qubits, creating a robust, redundant structure that guards against errors. A physical qubit, by contrast, corresponds directly to a hardware component, such as a trapped ion, a photon, or a superconducting circuit, and is susceptible to various types of quantum noise and errors. Loosely, you can think of logical qubits as the error-corrected qubits. In a classical computer, we don’t usually think of registers as being noisy (there is error correction under the hood); the point is that we take for granted that we can operate on a register with reasonable fidelity.
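The idea of spreading one bit’s worth of information across several physical carriers can be illustrated with its classical ancestor, the three-bit repetition code. The sketch below is a simplified classical analogue, not a real quantum code: it models only bit-flip errors, copies the bit directly (which the no-cloning theorem forbids for unknown quantum states), and decodes by majority vote, whereas quantum codes must also handle phase errors and extract error information through parity measurements. The physical error rate p is an illustrative assumption.

```python
import random


def encode(bit: int) -> list[int]:
    """Repetition encoding: one logical bit -> three physical bits."""
    return [bit, bit, bit]


def apply_bit_flip_noise(bits: list[int], p: float, rng: random.Random) -> list[int]:
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


def decode(bits: list[int]) -> int:
    """Majority vote recovers the logical bit if at most one bit flipped."""
    return 1 if sum(bits) >= 2 else 0


def exact_logical_error_rate(p: float) -> float:
    """Decoding fails when two or all three of the bits flip."""
    return 3 * p**2 * (1 - p) + p**3


def simulated_logical_error_rate(p: float, trials: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate of how often majority vote decodes the wrong bit."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        logical_bit = rng.randint(0, 1)
        received = apply_bit_flip_noise(encode(logical_bit), p, rng)
        if decode(received) != logical_bit:
            failures += 1
    return failures / trials


if __name__ == "__main__":
    p = 0.01  # hypothetical physical error rate
    print(f"physical error rate:          {p}")
    print(f"exact logical error rate:     {exact_logical_error_rate(p):.6f}")
    print(f"simulated logical error rate: {simulated_logical_error_rate(p):.6f}")
```

At p = 0.01 the exact logical error rate is 3p²(1 - p) + p³ ≈ 0.000298, roughly 34 times lower than the physical rate, and the Monte Carlo estimate should land close to it. The benefit depends on p being small: at p = 0.5 the encoding gives no improvement at all.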

What is NISQ?

NISQ, short for “Noisy Intermediate-Scale Quantum,” refers to the current stage in the development of quantum computing technology. The term was coined by John Preskill in 2018 to describe the quantum computers being built and experimented with today. Readers who want more detail can consult his 2018 paper, “Quantum Computing in the NISQ era and beyond.” The key characteristics and implications of NISQ technology are outlined below, letter by letter.

N in NISQ: Noisy

The “N” in NISQ stands for “Noisy,” highlighting a core challenge in current quantum computing technology. Quantum bits, or qubits, in NISQ computers are prone to errors caused by environmental interference and other factors; the resulting loss of quantum information to the environment is known as decoherence. This noise limits the accuracy and reliability of quantum computations and has driven the development of sophisticated error mitigation techniques to manage and reduce its impact. Despite these challenges, noise does not entirely prevent these qubits from performing certain computations that could potentially outperform classical computers.
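Why noise limits NISQ computations can be seen with a deliberately crude model: if each gate fails independently with probability p, and any single failure spoils the result, a circuit of N gates succeeds with probability (1 - p)^N. Real noise is more structured than this, and error mitigation can recover some accuracy, so treat the numbers as illustrative; the per-gate error rates below are hypothetical.

```python
import math


def circuit_success_probability(gate_error: float, num_gates: int) -> float:
    """Probability that no gate fails, assuming independent errors and that
    any single error spoils the result (a deliberately simple model)."""
    return (1.0 - gate_error) ** num_gates


def max_gates_for_target(gate_error: float, target: float = 0.5) -> int:
    """Largest gate count whose success probability stays at or above target."""
    return math.floor(math.log(target) / math.log(1.0 - gate_error))


if __name__ == "__main__":
    for p in (1e-2, 1e-3, 1e-4):  # hypothetical per-gate error rates
        print(f"p={p:g}: 1,000 gates succeed with prob "
              f"{circuit_success_probability(p, 1000):.3f}; "
              f"~{max_gates_for_target(p)} gates before success drops below 50%")
```

Under this model, a 1% gate error leaves room for only about 68 gates before the success probability falls below 50%, and even at 0.01% the budget is roughly 6,900 gates, which is one way to see why deep algorithms are expected to need error correction.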

I in NISQ: Intermediate-Scale

The “I” refers to “Intermediate-Scale,” indicating the size of quantum computers during this phase. NISQ devices typically possess a modest number of qubits, ranging from several dozen to a few hundred. This scale is substantial enough to potentially perform tasks beyond the capabilities of classical computers but not large enough for implementing full-scale quantum error correction. This intermediate scale is a significant step in quantum computing, bridging the gap between early experimental quantum devices and the eventual goal of large-scale, fault-tolerant quantum computers.

S in NISQ: Scale

The “S” in NISQ, which is part of the term “Intermediate-Scale,” emphasizes the current capacity of quantum computers in terms of qubit count. The scale of NISQ devices is a critical factor, as it determines the complexity of problems these computers can tackle. While these quantum computers do not yet have the thousands or millions of qubits required for widespread practical use and robust error correction, the intermediate scale provides a testing ground for various quantum algorithms and helps researchers understand the practical limitations and capabilities of quantum computing at this stage.

Q in NISQ: Quantum

The “Q” stands for “Quantum,” the fundamental aspect of this technology. NISQ devices operate on quantum mechanical principles, leveraging the unique properties of quantum states, such as superposition and entanglement, to perform calculations. This quantum nature allows NISQ computers to explore computational pathways and algorithms in a manner fundamentally different from classical computers. Despite the current limitations in scale and noise, the quantum nature of NISQ machines opens up possibilities for solving certain complex problems more efficiently than classical systems and for exploring new areas of computational theory and practice.
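Superposition and entanglement can be made concrete with a minimal pure-Python state-vector sketch (an illustration only, not how NISQ hardware or any particular SDK works): a Hadamard gate puts qubit 0 into superposition, and a CNOT then entangles it with qubit 1, producing a Bell state whose measurement outcomes are perfectly correlated. The function names are hypothetical helpers written for this example.

```python
import math

# Two-qubit state vector with amplitudes ordered |00>, |01>, |10>, |11>;
# the left bit is qubit 0 and the right bit is qubit 1.
State = list[complex]


def hadamard_qubit0(state: State) -> State:
    """Hadamard on qubit 0: |0x> -> (|0x> + |1x>)/sqrt2, |1x> -> (|0x> - |1x>)/sqrt2."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot_0_to_1(state: State) -> State:
    """CNOT with qubit 0 as control: flips qubit 1 when qubit 0 is 1 (swaps |10> and |11>)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state: State) -> list[float]:
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]


if __name__ == "__main__":
    state: State = [1 + 0j, 0j, 0j, 0j]  # start in |00>
    state = hadamard_qubit0(state)       # superposition: (|00> + |10>)/sqrt2
    state = cnot_0_to_1(state)           # entanglement: (|00> + |11>)/sqrt2
    for label, prob in zip(("00", "01", "10", "11"), probabilities(state)):
        print(f"P({label}) = {prob:.3f}")
```

Running it prints 0.500 for 00 and 11 and 0.000 for 01 and 10: the two qubits always agree when measured. The state vector doubles in length with every added qubit, so this brute-force approach needs 2^n amplitudes for n qubits, which is one reason intermediate-scale devices become hard to simulate classically.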

The ISQ regime?

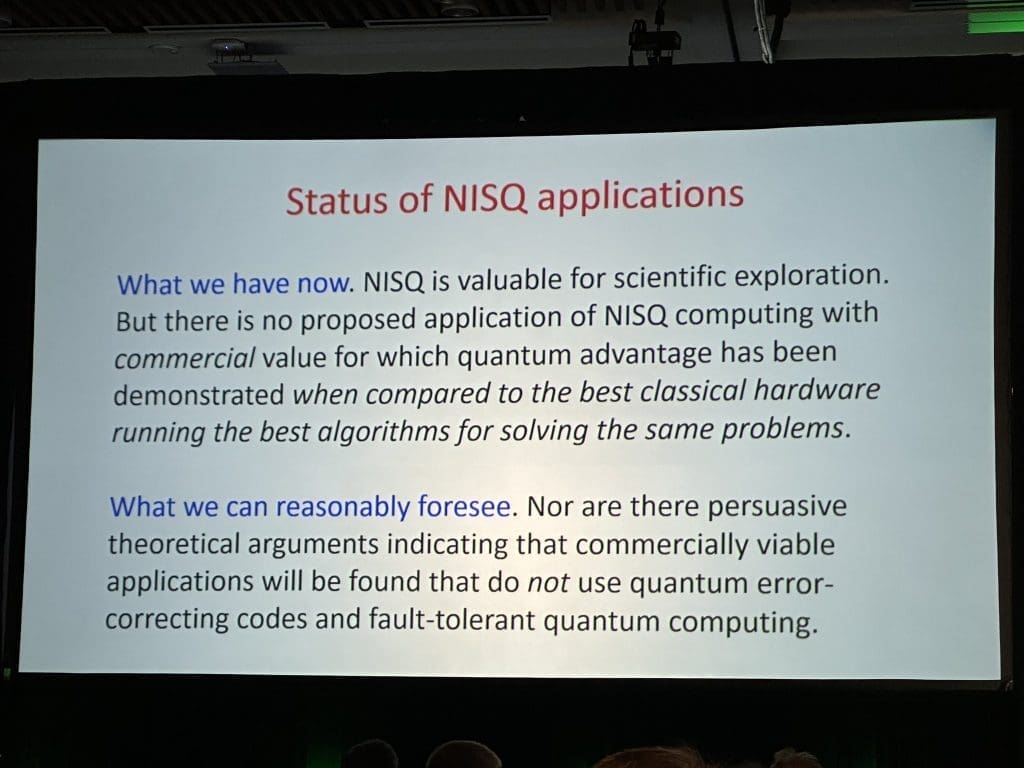

Have we entered a new regime, and could we eventually move beyond the NISQ era? Some seem to think so. At a recent conference, John Preskill, who coined the NISQ acronym, suggested that the NISQ era has limited utility. That may seem a little harsh to those keen to evangelize quantum computing, but we think it is pretty accurate. The content of Preskill’s slides suggests that no current NISQ device offers a real benefit over classical hardware and methods.

What we have now. NISQ is valuable for scientific exploration. But there is no proposed application of NISQ computing with commercial value for which quantum advantage has been demonstrated when compared to the best classical hardware running the best algorithms for solving the same problems.

What we can reasonably foresee. Nor are there persuasive theoretical arguments indicating that commercially viable applications will be found that do not use quantum error-correcting codes and fault-tolerant quantum computing.

John Preskill

NISQ useless without Quantum Error Correction (QEC)

Some in the quantum community argue that without full quantum error correction (QEC), NISQ devices are useless for non-trivial use cases: quantum algorithms and applications that run without proper error correction cannot deliver useful results. This hasn’t stopped the march toward ever-greater numbers of qubits and other developments. Quantum ecosystems are maturing, with IBM having offered cloud access to its quantum hardware for more than half a decade. Companies have explored how quantum computing can be integrated into their workflows, but no real-world use cases have yet emerged.

The Future of Quantum Computation

Creating high-fidelity logical qubits that can be scaled up may well emerge as by far the smarter approach compared with the NISQ regime. It is still early days, and it remains to be seen how these first attempts to build logical qubits evolve.

There are a few headwinds here. QEC alone may not be enough to enable practical quantum computing, and the overhead of performing error correction may place significant constraints on how quantum systems can be developed.

What about fault-tolerant quantum computing?

Adding error correction to NISQ hardware leads toward fault-tolerant quantum computing, which is no longer NISQ. The key result here is the threshold theorem (or quantum fault-tolerance theorem), which states that a quantum computer with a physical error rate below a certain threshold can, through the application of quantum error correction schemes, suppress the logical error rate to arbitrarily low levels.
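For the surface code, the threshold theorem is often summarized with a heuristic scaling law, p_L ≈ A (p / p_th)^((d+1)/2), where p is the physical error rate, p_th the threshold, and d the code distance. The sketch below rests on illustrative assumptions, a threshold of 1% (the order of magnitude often quoted for the surface code) and a prefactor A = 0.1, and does not model any specific device. It caps the estimate at 0.5, since a completely scrambled logical qubit is wrong half the time.

```python
def logical_error_rate(p: float, distance: int,
                       p_threshold: float = 1e-2, prefactor: float = 0.1) -> float:
    """Heuristic surface-code scaling: p_L ~ prefactor * (p / p_threshold) ** ((d + 1) / 2).

    p_threshold and prefactor are illustrative assumptions, not measured values.
    """
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance should be an odd integer >= 3")
    estimate = prefactor * (p / p_threshold) ** ((distance + 1) // 2)
    # Cap at 0.5: a fully scrambled logical qubit is wrong half the time.
    return min(estimate, 0.5)


if __name__ == "__main__":
    for p in (1e-3, 2e-2):  # one physical error rate below threshold, one above
        rates = ", ".join(f"d={d}: {logical_error_rate(p, d):.1e}" for d in (3, 5, 7, 9))
        print(f"p={p:g} -> {rates}")
```

Below threshold (p = 0.001), each step up in distance cuts the logical error rate tenfold, from about 1e-03 at d = 3 to about 1e-06 at d = 9. Above threshold (p = 0.02), adding distance makes things worse until the cap is reached, which is why getting physical error rates below threshold is the precondition for fault tolerance.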