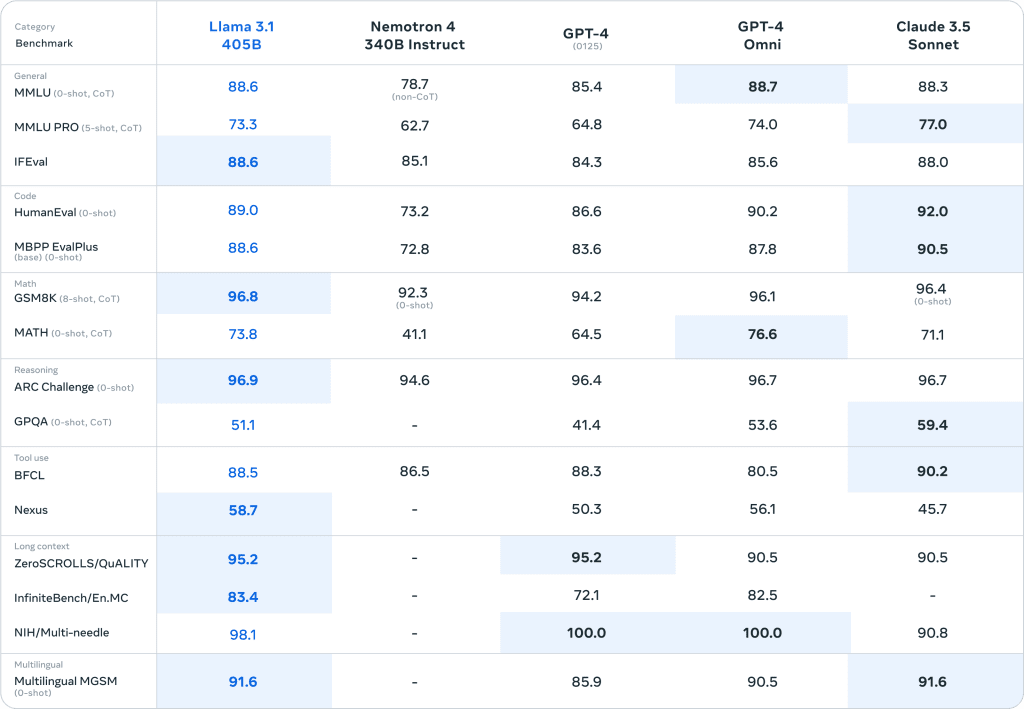

Meta is releasing its largest and most capable language model yet, Llama 3.1 405B, which has 405 billion parameters. On the MMLU benchmark it scores 85.2, compared with 66.7 for the much smaller Llama 3 8B from the previous generation.

Mark Zuckerberg has emphasized the importance of open-source AI in making sure that the benefits of the technology are accessible to people worldwide and not concentrated in the hands of a few. The Llama models were developed to this end, and they offer some of the lowest cost per token in the industry.

The latest model, Llama 3.1 405B, is an incredibly powerful tool, but it requires significant compute resources and expertise to work with. The Llama ecosystem is meant to lower that barrier so developers can start building immediately. Partners such as AWS, NVIDIA, and Databricks have optimized low-latency inference for cloud deployments, while Dell has achieved similar optimizations for on-prem systems. The release of the 405B model is expected to spur innovation in making inference and fine-tuning easier. Key community projects like vLLM, TensorRT, and PyTorch have been building in support from day one. With the Llama Stack and new safety tools, the open-source community can build responsibly and explore new experiences that take advantage of multilingual support and increased context length.

What’s significant about this release is that it’s open-source, meaning developers can fully customize the models for their needs and applications, train on new datasets, and conduct additional fine-tuning. This democratization of AI technology has the potential to accelerate innovation and deployment across various industries.

One of the key benefits of open-source AI models is that they can be deployed in any environment, including on-premises, in the cloud, or even locally on a laptop, without sharing data with Meta. This approach also ensures that the power of AI isn’t concentrated in the hands of a few large companies, but rather is distributed more evenly across society.

Developers have already built innovative applications on Llama models, including an AI study buddy, an LLM tailored to the medical field, and a tool from a healthcare non-profit startup in Brazil that helps organize and communicate patients’ information about their hospitalization.

However, working with large-scale models like the 405B can be challenging, requiring significant compute resources and expertise. To address this, Meta has developed the Llama ecosystem, which provides advanced capabilities such as real-time and batch inference, supervised fine-tuning, evaluation of models for specific applications, and more.
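To give a rough sense of what "significant compute resources" means, the sketch below estimates the memory needed just to hold the model's weights at several numeric precisions. The 405 billion parameter count comes from the article; the bytes-per-parameter values are the standard sizes of each format. The 80 GB accelerator size is an assumption chosen for illustration, and the function names are hypothetical. The estimate ignores activations, the KV cache, and framework overhead, so real deployments need more than this.

```python
import math

PARAMS = 405e9  # parameter count stated in the article

# Standard storage size of one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_accelerators(memory_gb: float, device_memory_gb: float = 80.0) -> int:
    """Lower bound on device count; 80 GB per device is an assumed size."""
    return math.ceil(memory_gb / device_memory_gb)


if __name__ == "__main__":
    for fmt, size in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(PARAMS, size)
        print(f"{fmt}: {gb:.1f} GB of weights, at least {min_accelerators(gb)} devices")
```

Under these assumptions, the 16-bit weights alone come to about 810 GB, more than a typical single server's accelerators can hold, which is one reason quantization and multi-node inference matter for a model this size.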

The company has also worked with key community projects like vLLM, TensorRT, and PyTorch to ensure seamless integration and support from day one. This collaboration is expected to spur innovation across the broader community, making it easier to work with large-scale models and enabling further research in model distillation.

As with any powerful technology, there are potential risks associated with generative AI. Meta has taken steps to identify, evaluate, and mitigate these risks through measures such as pre-deployment risk discovery exercises and safety fine-tuning.

Overall, the release of Llama 3.1 marks a significant milestone in the development of open-source AI models. With its impressive scale and capabilities, it has the potential to unlock new experiences and applications across various industries. As the community begins to explore and build with this technology, I’m excited to see the innovative solutions that will emerge.
