Solving large systems of linear equations underpins many scientific and engineering applications, and researchers continue to seek faster methods for these calculations. David Jin from Massachusetts Institute of Technology, Alexis Montoison from Argonne National Laboratory, and Sungho Shin from Massachusetts Institute of Technology present a new approach to solving block tridiagonal systems efficiently, a common challenge in areas like time-dependent estimation and control. Their work targets modern graphics processing units (GPUs) and applies batched dense linear algebra (BLAS/LAPACK) routines to a sparse problem by exploiting the specific structure of these systems. The resulting method, implemented in their open-source software TBD-GPU, runs significantly faster than existing CPU-based solvers and performs strongly against other GPU implementations, which could speed up simulation, estimation, and control workloads in many fields.

GPU Acceleration of Block Tridiagonal Solvers

Researchers have developed a new algorithm, called Tridiagonal Block Decomposition (TBD), for efficiently solving large block-tridiagonal systems of linear equations. These systems appear in diverse applications, including the solution of partial differential equations, Kalman filtering and smoothing, and model predictive control. The method decomposes the full system into a series of smaller tridiagonal systems that can be processed in parallel on the GPU. Implemented in the Julia programming language, TBD achieves significant speedups over CPU direct solvers and sequential GPU solvers, especially for large problems, and scales well. The algorithm combines established techniques like cyclic reduction with domain decomposition, tuned for the GPU architecture by maximizing parallelism and managing data transfer efficiently. This makes it a useful tool for scientists and engineers tackling computationally intensive problems.
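For reference, a block-tridiagonal system with $N$ block rows can be written as below. The symbols $D_i$, $L_i$, $U_i$, $x_i$, and $b_i$ are notation assumed here for illustration and are not taken from the paper.

$$
\begin{bmatrix}
D_1 & U_1 &        &         &         \\
L_2 & D_2 & U_2    &         &         \\
    & \ddots & \ddots & \ddots &       \\
    &     & L_{N-1} & D_{N-1} & U_{N-1} \\
    &     &        & L_N     & D_N
\end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_{N-1} \\ x_N \end{bmatrix}
=
\begin{bmatrix} b_1 \\ b_2 \\ \vdots \\ b_{N-1} \\ b_N \end{bmatrix}
$$

Each block row $i$ reads $L_i x_{i-1} + D_i x_i + U_i x_{i+1} = b_i$, so every unknown block couples only to its two neighbors. In estimation and control problems, the index $i$ typically corresponds to a time step.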

Recursive Algorithm Accelerates Block-Tridiagonal Solves

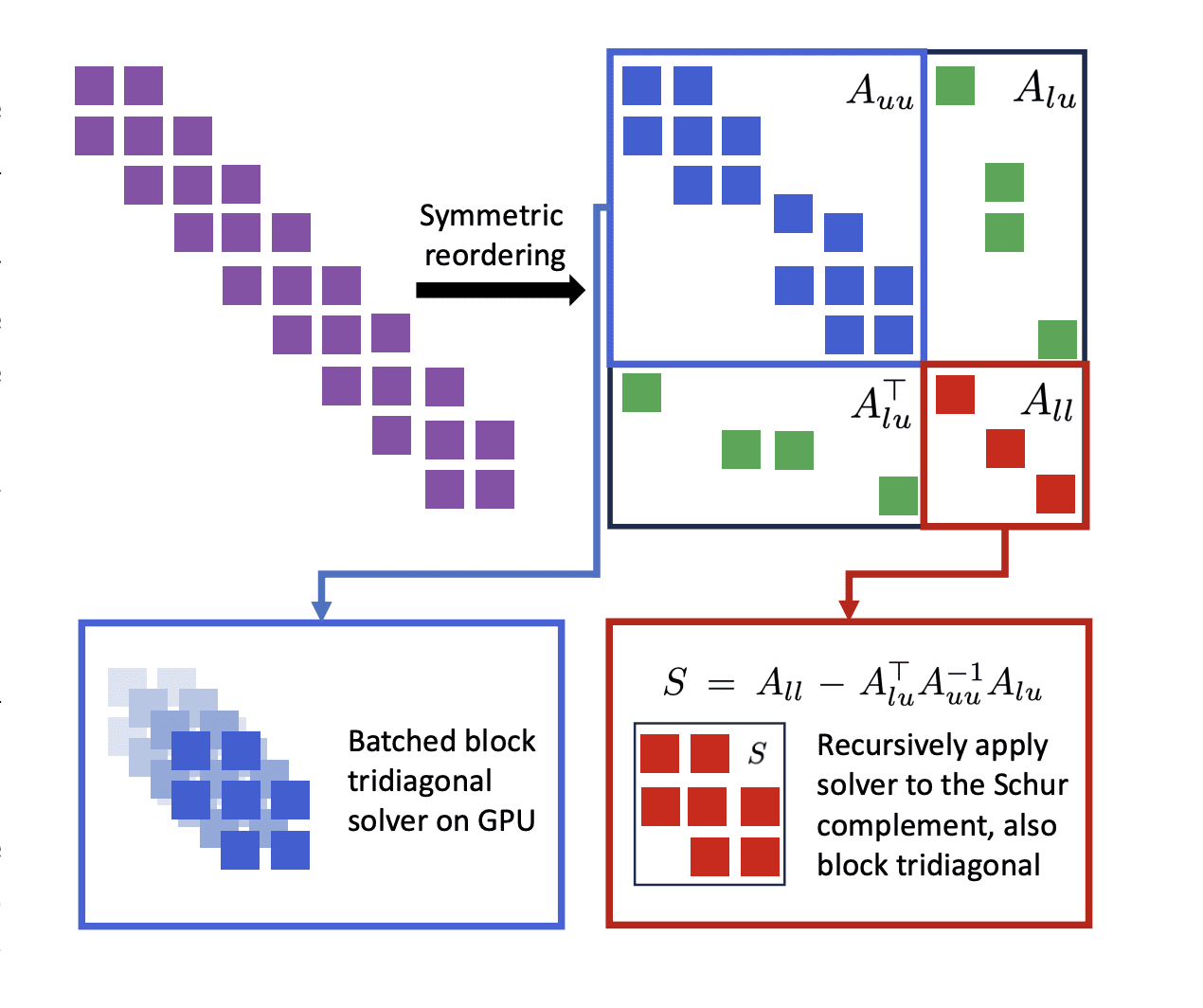

Researchers have developed a new method for solving block-tridiagonal systems of equations, which frequently arise in time-dependent estimation and control problems, and report substantial performance gains on modern graphics processing units (GPUs). The approach centers on a recursive algorithm that transforms a sparse problem into a hierarchy of dense kernels well suited to parallel execution on GPUs. At its core is the recursive application of Schur complement reduction, which eliminates a subset of the unknown blocks and leaves a smaller system of the same form, so that the eliminated blocks at each level can be processed concurrently. Because these operations are issued through batched BLAS/LAPACK routines, the method reduces kernel launch overhead and keeps GPU utilization high, particularly when working with many small to medium-sized matrices.
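A minimal sketch of the idea, not the TBD-GPU implementation: the code below uses block cyclic reduction, one standard way to apply Schur complement reduction recursively, and uses NumPy's stacked `np.linalg.solve` and `@` on the CPU as stand-ins for batched LAPACK/BLAS kernels on a GPU. The function name `solve_block_tridiagonal` and the array layout are assumptions made for illustration. The sketch also assumes every pivot block is invertible, which holds for example when the matrix is block diagonally dominant.

```python
import numpy as np


def solve_block_tridiagonal(L, D, U, b):
    """Solve L[i] x[i-1] + D[i] x[i] + U[i] x[i+1] = b[i] for i = 0..N-1.

    L, D, U have shape (N, n, n); b has shape (N, n, m).
    Convention (assumed here): L[0] and U[N-1] are zero blocks.
    """
    N, n = D.shape[0], D.shape[1]
    if N == 1:
        return np.linalg.solve(D, b)

    # Split block rows into even (eliminated) and odd (kept) indices.
    De, Le, Ue, be = D[0::2], L[0::2], U[0::2], b[0::2]
    Do, Lo, Uo, bo = D[1::2], L[1::2], U[1::2], b[1::2]
    ne, no = De.shape[0], Do.shape[0]

    # One batched solve: De^{-1} applied to [Le | Ue | be] for all even rows.
    W = np.linalg.solve(De, np.concatenate([Le, Ue, be], axis=2))
    WL, WU, Wb = W[:, :, :n], W[:, :, n:2 * n], W[:, :, 2 * n:]

    # Pad with a zero block so "right even neighbor" always has an index.
    def pad(A):
        return np.concatenate([A, np.zeros_like(A[:1])], axis=0)

    WLr, WUr, Wbr = pad(WL)[1:no + 1], pad(WU)[1:no + 1], pad(Wb)[1:no + 1]

    # Batched Schur complement update: a block-tridiagonal system in the odd rows.
    D2 = Do - Lo @ WU[:no] - Uo @ WLr
    L2 = -(Lo @ WL[:no])
    U2 = -(Uo @ WUr)
    b2 = bo - Lo @ Wb[:no] - Uo @ Wbr

    # Recurse on the reduced system (about half as many block rows).
    xo = solve_block_tridiagonal(L2, D2, U2, b2)

    # Back-substitute for the even rows, again as one batched operation.
    zero = np.zeros_like(xo[:1])
    xop = np.concatenate([zero, xo, zero], axis=0)
    xe = Wb - WL @ xop[:ne] - WU @ xop[1:ne + 1]

    x = np.empty_like(b, dtype=xe.dtype)
    x[0::2], x[1::2] = xe, xo
    return x
```

Each recursion level halves the number of block rows, so the depth grows logarithmically with `N`, and every level consists of a handful of batched dense operations over all blocks at that level. This does more arithmetic than a sequential block Thomas sweep but exposes far more parallelism.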

Experiments show that this structure-aware use of batched kernels delivers performance competitive with state-of-the-art solvers like cuDSS, while significantly outperforming established CPU direct solvers, including CHOLMOD and HSL MA57. The researchers implemented their algorithm in an open-source solver, TBD-GPU, designed for flexibility and portability: it runs on both CUDA and ROCm backends and supports single and double precision arithmetic. The design accepts higher computational complexity in exchange for more parallelism, which raises GPU throughput and allows large block sizes to be handled efficiently.

TBD-GPU Accelerates Block-Tridiagonal System Solutions

Researchers present TBD-GPU, a new solver for large-scale block-tridiagonal linear systems, which frequently arise in estimation and control problems. The approach combines a recursive algorithm with batched dense linear algebra routines and is designed to use modern GPU hardware efficiently. Performance benchmarks show that TBD-GPU consistently outperforms sequential GPU solvers and remains competitive with NVIDIA’s cuDSS, particularly for problems with larger block sizes. Its key advantage is a structure-aware design that lets it make effective use of batched BLAS and LAPACK kernels, which removes the sequential bottlenecks of traditional algorithms and maximizes GPU utilization. The authors acknowledge that the performance of TBD-GPU is currently limited by the overhead of launching multiple GPU kernels. Future work will focus on fusing the recursive algorithm into a single kernel to reduce this overhead and improve performance on smaller block sizes.

👉 More information

🗞 Harnessing Batched BLAS/LAPACK Kernels on GPUs for Parallel Solutions of Block Tridiagonal Systems

🧠 ArXiv: https://arxiv.org/abs/2509.03015