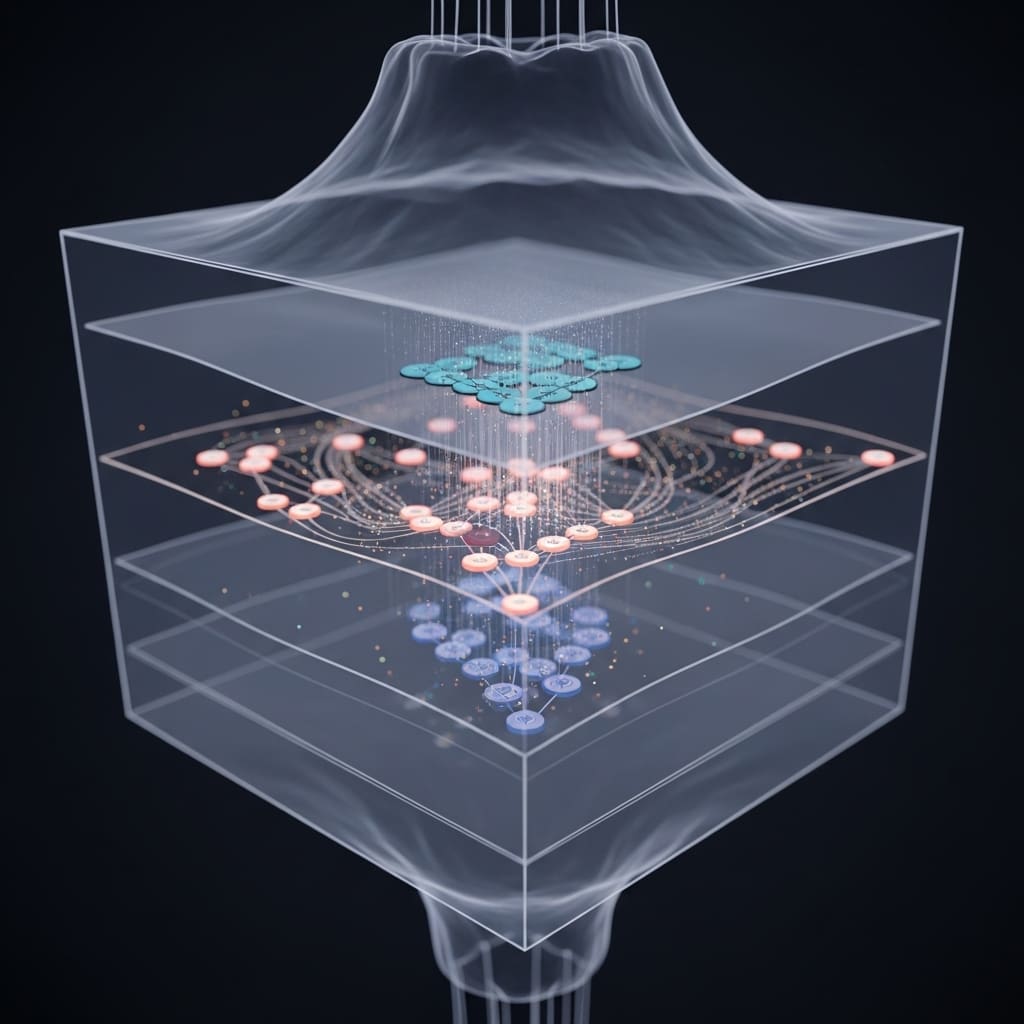

Graph neural networks (GNNs) excel at analysing relational data, but their substantial size hampers deployment on limited hardware. Researchers Chenyu Liu, Haige Li, and Luca Rossi, all from The Hong Kong Polytechnic University, address this challenge with a new technique called Low-Rank Aggregation Prompting (LoRAP). Their work improves the performance of quantized GNNs, that is, low-precision versions designed for faster inference, by manipulating the input data through prompt learning. Unlike quantization for large language models, quantizing GNNs requires particular attention to node features, and LoRAP targets the critical aggregation step by injecting lightweight, adaptable prompts into each aggregated feature. Extensive testing across multiple quantization frameworks and datasets shows that LoRAP consistently boosts the accuracy of low-bit GNNs with minimal added computational cost, paving the way for more efficient graph data analysis.

GNNs process graph data by capturing relationships between nodes, with applications in fields such as biology, chemistry, and recommender systems. However, their computational demands have limited their use on mobile phones, autonomous vehicles, and other edge devices. This work tackles that limitation by focusing on feature quantization, which matters more for GNNs than for large language models. The study finds that conventional quantization methods often cause accuracy drops in GNNs because information in node features and graph structure is lost, particularly as the bit width is reduced.
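
To make the bit-width effect concrete, here is a minimal Python sketch of symmetric uniform quantization applied to a single node feature vector. It is a generic scheme chosen for illustration, not necessarily the quantizer used by any of the QAT frameworks in the study, and the feature values are made up. The reconstruction error grows as the bit width shrinks.

```python
def quantize_dequantize(values, num_bits):
    """Symmetric uniform fake quantization: snap values to a signed k-bit
    integer grid, then map them back to floats."""
    q_max = 2 ** (num_bits - 1) - 1          # largest integer level, e.g. 7 for 4 bits
    scale = max(abs(v) for v in values) / q_max
    if scale == 0:                            # all-zero input: nothing to quantize
        return list(values)
    return [max(-q_max, min(q_max, round(v / scale))) * scale for v in values]


def mean_abs_error(a, b):
    """Average absolute difference between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Hypothetical 8-dimensional node feature vector (made-up values).
features = [0.91, -0.37, 0.05, 0.62, -0.88, 0.14, 0.29, -0.53]

errors = {}
for bits in (8, 4, 2):
    errors[bits] = mean_abs_error(features, quantize_dequantize(features, bits))
    print(f"{bits}-bit mean absolute error: {errors[bits]:.4f}")
```

At 2 bits only three levels (-1, 0, 1 times the scale) remain. Small features collapse to zero, which is the kind of information loss the article describes.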

To mitigate this, the researchers turned to prompt learning, a technique that manipulates input data by injecting learnable parameters into the feature space. The key idea is where the prompts go: they are added during the aggregation phase, where they reduce quantization errors in node representations. This aggregation prompting strategy sets the work apart from existing GNN quantization methods, which are largely weight-focused, and points to a new direction that addresses the unique challenges of graph data. The work could enable more efficient and scalable graph analytics on edge devices, supporting areas such as personalised medicine, smart cities, and fraud detection, where real-time processing of complex graph data is crucial.
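
The following is a minimal sketch of where an aggregation prompt sits in a message-passing layer. It assumes mean aggregation over neighbours and a single shared prompt vector. Both are simplifications, and the function name `aggregate_with_prompt` is hypothetical. The prompt is added to each node's aggregated feature rather than to the raw input features.

```python
def aggregate_with_prompt(features, neighbors, prompt):
    """Mean-aggregate each node's neighbour features, then add a prompt vector.

    Simplified sketch: one shared prompt vector for all nodes, whereas LoRAP's
    prompts are low-rank and input-dependent.
    """
    dim = len(features[0])
    prompted = []
    for nbrs in neighbors:
        agg = [0.0] * dim
        for j in nbrs:                        # sum the neighbours' features
            for k in range(dim):
                agg[k] += features[j][k]
        if nbrs:                              # mean aggregation (isolated nodes keep zeros)
            agg = [v / len(nbrs) for v in agg]
        # Inject the prompt into the aggregated feature; under our assumption,
        # this prompted vector is what a quantized layer would consume next.
        prompted.append([a + p for a, p in zip(agg, prompt)])
    return prompted


# Toy path graph 0 - 1 - 2 with 2-dimensional features (hypothetical values).
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
neighbors = [[1], [0, 2], [1]]
prompt = [0.1, -0.1]   # would be learned during QAT; fixed here for illustration

for node, vec in enumerate(aggregate_with_prompt(features, neighbors, prompt)):
    print(node, vec)
```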

LoRAP enables efficient quantized Graph Neural Networks

Recognizing that quantization errors originate primarily from feature quantization and accumulate in deeper layers, the research team developed a prompt learning approach to mitigate the resulting performance degradation. They adapted a technique previously successful in Large Language Models (LLMs) to the particular challenges of GNNs. Initial experiments applied GPF-plus, a node feature prompting method, within Quantization-Aware Training (QAT), adding a prompt to the features of each node in the graph. LoRAP's own prompts are built from low-rank basis matrices. This keeps their computational cost and latency low, a crucial consideration for real-world deployments.
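
GPF-plus, as we understand its original formulation, adds to each node's features a prompt formed as an attention-weighted mix of k learnable basis vectors. The sketch below illustrates that idea for one node. The names `gpf_plus_prompt`, `basis_prompts`, and `attn_vectors` and the toy values are hypothetical, and the training loop is omitted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def gpf_plus_prompt(x, basis_prompts, attn_vectors):
    """Return x plus an attention-weighted mix of k basis prompts (GPF-plus style).

    x:             one node's feature vector (length d)
    basis_prompts: k learnable prompt vectors p_j, each of length d
    attn_vectors:  k learnable vectors q_j; score_j = q_j . x decides p_j's weight
    """
    weights = softmax([dot(q, x) for q in attn_vectors])
    prompt = [sum(w * p[k] for w, p in zip(weights, basis_prompts)) for k in range(len(x))]
    return [xi + pi for xi, pi in zip(x, prompt)], weights


# Hypothetical toy values: d = 3 features, k = 2 basis prompts.
x = [1.0, 0.0, 2.0]
basis_prompts = [[0.5, 0.0, 0.0], [0.0, 0.5, 0.0]]
attn_vectors = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]   # equal scores give equal weights
prompted, weights = gpf_plus_prompt(x, basis_prompts, attn_vectors)
print("weights:", weights)
print("prompted features:", prompted)
```

Because the weights come from the node's own features, different nodes receive different mixtures of the same k shared basis prompts.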

LoRAP's prompts are input-dependent: they adapt to the specific data being processed, further refining the aggregation step. The method is formulated on a graph with node set $\mathcal{V}$ and edge set $\mathcal{E}$, where each node $i$ has a feature vector $\mathbf{x}_i \in \mathbb{R}^d$. The features of all $N = |\mathcal{V}|$ nodes are stacked into a matrix $\mathbf{X} \in \mathbb{R}^{N \times d}$, and an adjacency matrix $\mathbf{A} \in \mathbb{R}^{N \times N}$ defines node connectivity. In the message-passing mechanism central to GNNs, the message along edge $(i, j)$ is computed by a message function $\phi$ as $\mathbf{m}_{ij} = \phi\big(\mathbf{h}_i^{(l)}, \mathbf{h}_j^{(l)}, \mathbf{e}_{ij}\big)$. Here $\mathbf{h}_i^{(l)}$ and $\mathbf{h}_j^{(l)}$ are node features at layer $l$ and $\mathbf{e}_{ij}$ denotes edge features. These messages are then aggregated, and that aggregation is the step LoRAP prompts. Because the prompts are low-rank, they add little computation, keeping inference fast and the technique practical for resource-constrained environments.

To validate LoRAP's effectiveness, the study evaluated it with four leading QAT frameworks on nine diverse datasets. Combining GPF-plus with LoRAP consistently increased model performance, offering a robust way to deploy efficient and accurate GNNs.
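
One plausible reading of an input-dependent, low-rank aggregation prompt is a two-step map. First, project the aggregated feature down to r coefficients. Then mix r basis prompts back up to d dimensions. The sketch below assumes exactly that parameterisation. The names `down`, `up`, `low_rank_prompt`, and `prompt_aggregated` are hypothetical, and LoRAP's actual formulation may differ.

```python
def matvec(matrix, vec):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def low_rank_prompt(aggregated, down, up):
    """Hypothetical input-dependent low-rank prompt: p = up @ (down @ a).

    down: r x d matrix that turns the aggregated feature a into r coefficients
    up:   d x r matrix whose r columns act as basis prompt vectors
    """
    coeffs = matvec(down, aggregated)   # r coefficients that depend on the input
    return matvec(up, coeffs)           # weighted mix of the r basis prompts (length d)


def prompt_aggregated(aggregated_rows, down, up):
    """Add each node's own low-rank prompt to its aggregated feature."""
    return [
        [a + p for a, p in zip(row, low_rank_prompt(row, down, up))]
        for row in aggregated_rows
    ]


# Hypothetical sizes: d = 4 feature dimensions, rank r = 1.
down = [[0.5, 0.5, 0.0, 0.0]]              # r x d
up = [[0.2], [0.0], [0.0], [-0.2]]         # d x r
aggregated_rows = [[1.0, 1.0, 0.0, 0.0],   # node whose features activate the basis prompt
                   [0.0, 0.0, 3.0, 3.0]]   # node that receives a zero prompt
out = prompt_aggregated(aggregated_rows, down, up)
for row in out:
    print(row)
```

The two nodes get different prompts from the same parameters, which is what "input-dependent" means here. With r much smaller than d, the two matrices hold 2dr trainable values instead of the d² of a full d × d map.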

LoRAP boosts quantized Graph Neural Network performance significantly

The research addresses the challenge of deploying GNNs on resource-constrained devices through quantization, which represents data at lower precision to reduce model size and accelerate inference. The authors show that feature quantization is particularly critical in GNNs, where feature representations can account for up to 98.44% of the total memory footprint. In LLMs, by contrast, model weights dominate, accounting for 87%. This calls for quantization strategies tailored to GNNs. The team obtained significant improvements by using prompt learning, which manipulates the input data, to strengthen quantization-aware training (QAT).
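
A back-of-the-envelope count shows why features dominate GNN memory. The sketch below assumes a 2-layer GCN with hidden size 16 on a Cora-sized graph (2,708 nodes, 1,433 input features, 7 classes) and counts stored values only. It ignores the adjacency matrix and is not meant to reproduce the paper's 98.44% figure. The helper `gcn_memory_split` is hypothetical.

```python
def gcn_memory_split(num_nodes, in_dim, hidden_dim, num_classes):
    """Count stored values for a 2-layer GCN, split into node features/activations
    and layer weights (biases included, adjacency matrix ignored)."""
    # Input features X, hidden activations H, and output logits, one row per node.
    feature_values = num_nodes * (in_dim + hidden_dim + num_classes)
    # Layer 1: in_dim x hidden_dim weights + bias; layer 2: hidden_dim x num_classes + bias.
    weight_values = (in_dim * hidden_dim + hidden_dim) + (hidden_dim * num_classes + num_classes)
    return feature_values, weight_values


# Cora-sized graph (2,708 nodes, 1,433 features, 7 classes); hidden size 16 is an assumption.
feature_count, weight_count = gcn_memory_split(2708, 1433, 16, 7)
share = feature_count / (feature_count + weight_count)
print(f"features/activations: {feature_count:,} values")
print(f"weights and biases:   {weight_count:,} values")
print(f"feature share of memory: {share:.2%}")
```

Because both groups are counted at the same precision, the share is the same in bytes. Quantizing only the weights in this setting would leave over 99% of the counted memory at full precision.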

LoRAP stays computationally efficient because its prompts are built from low-rank basis matrices. Measurements confirm that LoRAP reduces the quantization errors that accumulate in deeper layers. The results also show consistent gains across diverse datasets and quantization frameworks. Together, these findings are a substantial step toward efficient and accurate graph-based machine learning on a wider range of platforms.

Quantization reduces model size and accelerates inference, but in GNNs it often degrades performance, particularly through errors in feature representations. LoRAP is more parameter-efficient than full-rank prompting strategies, requiring fewer trainable parameters. Combining LoRAP with node feature prompting, a variant called GPF-LoRAP, yields the strongest gains and matches the accuracy of full-precision models in several cases. The authors acknowledge that the gains depend on the specific GNN architecture and quantization framework used. Future work could apply LoRAP to other graph-based machine learning models and test it with different prompting strategies. Overall, the approach offers a new and effective way to improve GNN performance in resource-constrained settings. It could enable deployment on edge devices and in other applications where computational efficiency is paramount.
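
The parameter saving from a low-rank prompt can be checked with simple arithmetic. This sketch assumes that a full-rank prompt map uses one d × d matrix per layer and a rank-r one uses a d × r plus an r × d matrix. The function name and the sizes (d = 256, r = 8, three layers) are hypothetical, not taken from the paper.

```python
def prompt_parameter_counts(dim, rank, num_layers):
    """Trainable parameters of a per-layer prompt map, full-rank vs. low-rank.

    full-rank: one dim x dim matrix per layer
    low-rank:  a dim x rank matrix plus a rank x dim matrix per layer
    (Hypothetical accounting; the paper's prompt parameterisation may differ.)
    """
    full_rank = num_layers * dim * dim
    low_rank = num_layers * 2 * dim * rank
    return full_rank, low_rank


# Hypothetical configuration: 256-dimensional features, rank 8, three GNN layers.
full, low = prompt_parameter_counts(dim=256, rank=8, num_layers=3)
print(f"full-rank: {full:,}  low-rank: {low:,}  ratio: {low / full:.4f}")
```

Under these assumptions the low-rank version needs a fraction 2r/d of the full-rank parameters, here one sixteenth, and the saving grows as d increases relative to r.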

👉 More information

🗞 LoRAP: Low-Rank Aggregation Prompting for Quantized Graph Neural Networks Training

🧠 ArXiv: https://arxiv.org/abs/2601.15079