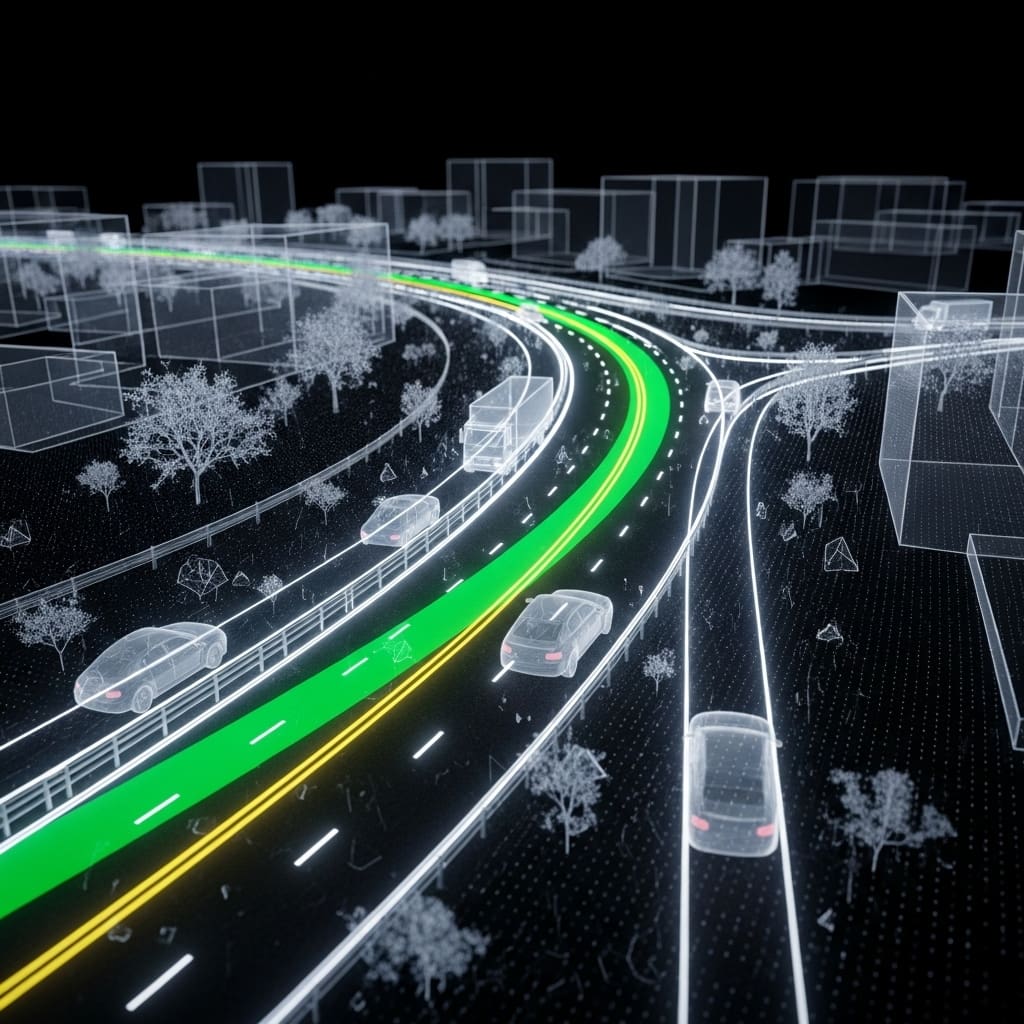

Researchers are tackling the challenge of improving autonomous driving through self-supervised learning from video data. Linhan Wang of XPENG Motors, Zichong Yang of Purdue University, and Chen Bai, together with Guoxiang Zhang, Xiaotong Liu, and Xiaoyin Zheng, all of XPENG Motors, present Drive-JEPA, a framework that combines Video Joint-Embedding Predictive Architecture (V-JEPA) pretraining with multimodal trajectory distillation. The work addresses limitations of current video world models by learning from diverse trajectories, both human and simulated. It achieves state-of-the-art performance on the challenging NAVSIM benchmark, surpassing previous methods by a considerable margin, which could support more robust and safer self-driving systems.

Drive-JEPA learns driving via video prediction and distillation

Scientists have developed a new framework, Drive-JEPA, to advance end-to-end autonomous driving. Self-supervised video pretraining is a common route to transferable planning representations, but it has so far yielded only modest improvements. Drive-JEPA addresses this by learning predictive representations from large-scale driving videos with a ViT encoder and aligning those representations with trajectory planning.
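To make the idea of predicting in latent space concrete, here is a minimal toy sketch in plain Python. It is not the authors' implementation: the linear "encoder", the doubling "predictor", and the exponential-moving-average (EMA) target encoder are illustrative assumptions. An EMA target with no gradient flow is one common way JEPA-style methods avoid collapsed representations; the article does not say which technique Drive-JEPA uses.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def encode(weights: Matrix, frame: Vector) -> Vector:
    """Toy linear encoder standing in for a ViT (hypothetical)."""
    return [sum(w * x for w, x in zip(row, frame)) for row in weights]


def l1_latent_loss(predicted: Vector, target: Vector) -> float:
    """Mean absolute error between predicted and target latents."""
    return sum(abs(p - t) for p, t in zip(predicted, target)) / len(target)


def ema_update(target_w: Matrix, online_w: Matrix, momentum: float) -> Matrix:
    """Move the target encoder slowly towards the online encoder."""
    return [
        [momentum * t + (1.0 - momentum) * o for t, o in zip(t_row, o_row)]
        for t_row, o_row in zip(target_w, online_w)
    ]


# Hypothetical two-dimensional "frames" and weights, chosen for easy arithmetic.
online_weights = [[0.5, 0.0], [0.0, 0.5]]
target_weights = [[1.0, 0.0], [0.0, 1.0]]
past_frame, future_frame = [1.0, 2.0], [2.0, 4.0]

context_latent = encode(online_weights, past_frame)
# Toy predictor: guess the future latent by doubling the context latent.
predicted_future = [2.0 * z for z in context_latent]
# In real training the target branch receives no gradient.
target_latent = encode(target_weights, future_frame)

loss = l1_latent_loss(predicted_future, target_latent)
target_weights = ema_update(target_weights, online_weights, momentum=0.9)
```

The loss is computed between latent vectors rather than pixels, which is the defining feature of the joint-embedding predictive approach.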

The core innovation lies in the proposal-centric planner, which distills knowledge from both human and simulator-generated trajectories. This is achieved through a momentum-aware selection mechanism designed to promote stable and safe behaviour during autonomous navigation. By leveraging diverse trajectories, the system overcomes the inherent ambiguity of driving scenarios where a single human trajectory often represents only one possible solution. Experiments conducted on the NAVSIM platform demonstrate the effectiveness of this approach, with the V-JEPA representation, combined with a Transformer-based decoder, surpassing prior methods by 3 PDMS in perception-free settings.

The complete Drive-JEPA framework achieves 93.3 PDMS on NAVSIM v1 and 87.8 EPDMS on v2, setting a new state of the art on both.

The research team first adapted V-JEPA for driving by pretraining a Vision Transformer (ViT) encoder on a large-scale driving video dataset, producing predictive representations aligned with trajectory planning. Pretraining focuses on predicting future latent states and incorporates techniques to keep the learned representations from collapsing.

The team then engineered a proposal-centric planner. Waypoint-anchored proposal generation uses deformable attention to aggregate Bird’s Eye View features at key waypoints and refine trajectory proposals iteratively. A momentum-aware selection module assigns scores to candidate trajectories based on collision risk, traffic rule compliance, and comfort, reducing frame-to-frame jitter and improving overall driving quality. The planner is trained with multimodal trajectory distillation, which draws on both human and simulator-generated trajectories and applies safety and comfort constraints to the simulator data, addressing the inherent ambiguity of driving scenarios.
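The distillation of human and simulator trajectories can be illustrated with a small sketch. Everything here is an assumption made for illustration: the `SimTrajectory` flags, the L1 distance, and the "closest proposal per target" loss are not taken from the paper. The sketch only shows how filtering simulator rollouts by safety and comfort, then supervising several proposals against several targets, lets the planner cover more than one valid manoeuvre.

```python
from dataclasses import dataclass
from typing import List, Tuple

Trajectory = List[Tuple[float, float]]  # (x, y) waypoints


@dataclass
class SimTrajectory:
    waypoints: Trajectory
    is_safe: bool         # hypothetical flag, e.g. no collision in simulation
    is_comfortable: bool  # hypothetical flag, e.g. bounded acceleration


def mean_l1(a: Trajectory, b: Trajectory) -> float:
    """Average per-waypoint L1 distance between two trajectories."""
    total = sum(abs(ax - bx) + abs(ay - by) for (ax, ay), (bx, by) in zip(a, b))
    return total / len(a)


def build_targets(human: Trajectory, sim: List[SimTrajectory]) -> List[Trajectory]:
    """Keep the human trajectory plus simulator rollouts that pass both checks."""
    return [human] + [s.waypoints for s in sim if s.is_safe and s.is_comfortable]


def distillation_loss(proposals: List[Trajectory], targets: List[Trajectory]) -> float:
    """Average, over targets, of the distance to the closest proposal."""
    return sum(min(mean_l1(p, t) for p in proposals) for t in targets) / len(targets)


human = [(1.0, 0.0), (2.0, 0.0)]
simulated = [
    SimTrajectory([(1.0, 1.0), (2.0, 2.0)], is_safe=True, is_comfortable=True),
    SimTrajectory([(1.0, -3.0), (2.0, -6.0)], is_safe=False, is_comfortable=True),
]
proposals = [[(1.0, 0.0), (2.0, 0.0)], [(1.0, 1.0), (2.0, 1.0)]]

targets = build_targets(human, simulated)  # the unsafe rollout is dropped
loss = distillation_loss(proposals, targets)
```

With a single human target, only one proposal would ever receive a useful training signal. Adding filtered simulator targets gives the second proposal something to match.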

The planner employs a waypoint-anchored proposal generation method, utilising deformable attention to aggregate Bird’s Eye View (BEV) features at trajectory waypoints and iteratively refine proposals. To enhance trajectory diversity, the team supervised these proposals using a combination of human demonstrations and simulator-generated trajectories that satisfy safety and comfort constraints, which allows knowledge to be distilled from the simulation environment. The study also introduces a momentum-aware selection mechanism, which assigns scores to candidate trajectories based on predicted collision risk, traffic rule compliance, and passenger comfort.
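A momentum-aware selection step of this kind might look like the following sketch. The score terms, their equal weighting, the `momentum_weight` value, and the use of mean waypoint displacement as the penalty are all assumptions for illustration, not the paper's formulation. A real system would also time-shift the previous trajectory before comparing it with the new candidates, which this toy version skips.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Trajectory = List[Tuple[float, float]]  # (x, y) waypoints


@dataclass
class Candidate:
    waypoints: Trajectory
    collision_risk: float   # predicted, in [0, 1]; lower is better
    rule_compliance: float  # predicted, in [0, 1]; higher is better
    comfort: float          # predicted, in [0, 1]; higher is better


def mean_displacement(a: Trajectory, b: Trajectory) -> float:
    """Average Euclidean distance between matching waypoints."""
    dists = [((ax - bx) ** 2 + (ay - by) ** 2) ** 0.5 for (ax, ay), (bx, by) in zip(a, b)]
    return sum(dists) / len(dists)


def select(
    candidates: List[Candidate],
    previous: Optional[Trajectory] = None,
    momentum_weight: float = 0.5,  # hypothetical weight
) -> Candidate:
    """Pick the best-scoring candidate, penalising jumps away from the last choice."""

    def score(c: Candidate) -> float:
        base = (1.0 - c.collision_risk) + c.rule_compliance + c.comfort
        if previous is None:
            return base
        return base - momentum_weight * mean_displacement(c.waypoints, previous)

    return max(candidates, key=score)


keep_lane = Candidate([(1.0, 0.0), (2.0, 0.0)], 0.10, 0.9, 0.9)
swerve = Candidate([(1.0, 1.0), (2.0, 2.0)], 0.05, 0.9, 0.9)

first_pick = select([keep_lane, swerve])
stable_pick = select([keep_lane, swerve], previous=keep_lane.waypoints)
```

Without a previous trajectory, the marginally safer `swerve` candidate wins. Once the planner has committed to `keep_lane`, the penalty outweighs that small advantage and the selection stays put, which is the stabilising effect the mechanism is meant to provide.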

Furthermore, the selection module incorporates a momentum-aware penalty, designed to minimise frame-to-frame jitter in the selected trajectories, promoting smoother and more stable driving behaviour.

Researchers validated the Drive-JEPA framework on the NAVSIM v1 and v2 datasets, alongside Bench2Drive, demonstrating its performance across diverse driving conditions.

Drive-JEPA achieves state-of-the-art autonomous driving scores

Experiments revealed that the V-JEPA representation, combined with a simple decoder, outperformed prior methods by 3 PDMS in a perception-free setting, demonstrating the strength of the predictive representations learned from large-scale driving videos. The complete Drive-JEPA framework attained 93.3 PDMS on NAVSIM v1 and 87.8 EPDMS on NAVSIM v2, setting a new state of the art in autonomous driving performance.
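For readers unfamiliar with the metric, the sketch below shows how PDMS is aggregated according to the NAVSIM v1 benchmark definition, which is not given in this article. Two pass/fail style terms (no at-fault collision, drivable area compliance) multiply a weighted average of ego progress, time-to-collision, and comfort. The function and argument names are illustrative, the sub-scores are assumed to lie in [0, 1], and reported figures such as 93.3 correspond to this value on a 0 to 100 scale. EPDMS on NAVSIM v2 extends the metric with further sub-scores and is not covered here.

```python
def pdms(
    no_collision: float,     # NC, multiplicative penalty term
    drivable_area: float,    # DAC, multiplicative penalty term
    ego_progress: float,     # EP, weight 5
    time_to_collision: float,  # TTC, weight 5
    comfort: float,          # C, weight 2
) -> float:
    """PDM score for one scenario, per the NAVSIM v1 definition."""
    weighted = (5 * ego_progress + 5 * time_to_collision + 2 * comfort) / 12
    return no_collision * drivable_area * weighted


perfect = pdms(1.0, 1.0, 1.0, 1.0, 1.0)
slow_but_safe = pdms(1.0, 1.0, 0.8, 1.0, 1.0)
collided = pdms(0.0, 1.0, 1.0, 1.0, 1.0)
```

Because the first two terms multiply the rest, a collision or leaving the drivable area zeroes the score for that scenario regardless of progress or comfort.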

Researchers trained the models on two NVIDIA A30 GPUs for 20 epochs, using a total batch size of 64 and the Adam optimiser, with learning rates of 1 × 10⁻⁴ for the main network and 1 × 10⁻⁵ for the ViT encoder. The team used N_p = 32 proposals to balance computational efficiency with strong performance, and processed front-view camera images resized to 512 × 256 pixels. With a ResNet34 backbone, Drive-JEPA achieved a PDMS of 91.5, while the ViT/L version reached 93.3 on NAVSIM v1. The method also maintained high scores across other metrics, including a Diversity score of 90.8 and an Efficiency score of 157.85 on the Bench2Drive benchmark.
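The reported training setup can be collected into a small configuration object, sketched below. The numeric values come from the description above. The class, the field names, the even split of the batch across GPUs, and the `encoder.` parameter-name prefix are hypothetical conventions chosen for illustration, not details from the paper.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TrainConfig:
    num_gpus: int = 2                       # two NVIDIA A30 GPUs
    epochs: int = 20
    total_batch_size: int = 64
    lr_main: float = 1e-4                   # main network
    lr_encoder: float = 1e-5                # pretrained ViT encoder
    num_proposals: int = 32                 # N_p
    image_size: Tuple[int, int] = (512, 256)  # width x height, front camera

    @property
    def per_gpu_batch_size(self) -> int:
        """Assumes the total batch is split evenly across GPUs."""
        return self.total_batch_size // self.num_gpus


def learning_rate_for(param_name: str, cfg: TrainConfig) -> float:
    """Route encoder parameters to the smaller learning rate.

    The "encoder." prefix is a hypothetical naming convention.
    """
    return cfg.lr_encoder if param_name.startswith("encoder.") else cfg.lr_main


cfg = TrainConfig()
encoder_lr = learning_rate_for("encoder.blocks.0.attn.weight", cfg)
planner_lr = learning_rate_for("planner.decoder.weight", cfg)
```

Using a learning rate ten times smaller for the encoder is a common way to fine-tune a pretrained backbone gently while the newly initialised planner layers learn faster.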

Ablation studies confirmed the effectiveness of each component: adding multimodal trajectory distillation and momentum-aware trajectory selection consistently improved performance, culminating in a 3.7% increase in EPDMS. The system also performed strongly on NAVSIM v2, achieving an EPDMS of 87.8 with the ViT/L backbone and surpassing other leading methods such as Transfuser, HydraMDP++, and iPad.

The complete Drive-JEPA framework attained state-of-the-art results, scoring 93.3 PDMS on NAVSIM v1 and 87.8 EPDMS on v2, and also demonstrated improved closed-loop performance on Bench2Drive. The authors acknowledge that the framework has limitations and that further research is needed to address them. The research demonstrates the effectiveness of combining self-supervised video pretraining with multimodal trajectory distillation to overcome the challenges of learning from limited and ambiguous driving data. By using V-JEPA for representation learning and incorporating diverse trajectories, Drive-JEPA mitigates the risk of mode collapse in imitation learning, resulting in more robust and reliable autonomous driving systems. The authors suggest that future work could focus on refining the simulator-guided trajectory generation and exploring alternative momentum-aware selection mechanisms to further enhance performance and safety.

👉 More information

🗞 Drive-JEPA: Video JEPA Meets Multimodal Trajectory Distillation for End-to-End Driving

🧠 ArXiv: https://arxiv.org/abs/2601.22032