The 21st century has witnessed a remarkable acceleration in technological and scientific advancements, revolutionizing industries and reshaping daily life. Innovations since the millennium have been driven by the exponential growth of computational power, advances in materials science, and the global integration of ideas through digital connectivity.

These breakthroughs span a vast spectrum, from artificial intelligence and renewable energy technologies to advances in healthcare and biotechnology. Together, they have helped address complex global challenges, streamlined processes, and opened the door to possibilities that were unimaginable just decades ago.

Many of these innovations have fundamentally altered how society operates and interacts. The advent of smartphones, for instance, has transformed communication, commerce, and information access, while artificial intelligence now enables tasks such as natural language understanding and predictive analytics, previously limited to human expertise. In parallel, the development of CRISPR gene-editing technology has redefined medical research and therapeutic possibilities, heralding a new era of personalized medicine. These breakthroughs underscore the synergy between scientific discovery, engineering, and innovation, highlighting the profound impact of creativity and collaboration in shaping our modern world.

- Human Genome Project Completion

- Rise Of Social Media Platforms

- Development Of CRISPR Gene Editing

- Emergence Of Artificial Intelligence

- Discovery Of Gravitational Waves

- Advancements In Renewable Energy Sources

- Creation Of The First Self-driving Cars

- Launch Of The International Space Station

- Invention Of 3D Printing Technology

- Breakthroughs In Quantum Computing Research

- Development Of Bionic Limbs And Prosthetics

- Discovery Of Exoplanets And Water On Mars

Human Genome Project Completion

The Human Genome Project was declared complete in April 2003, two years after a draft sequence of the human genome was published in the journal Nature in February 2001. The project had been launched in 1990 as an international collaboration among researchers and institutions, with the goal of sequencing and mapping the entire human genome. Its completion marked a major milestone in genetics and paved the way for significant advances in our understanding of human biology and disease.

The Human Genome Project was initially estimated to take 15 years to complete and cost $3 billion. However, through advances in technology and collaboration among researchers, the project was completed two years ahead of schedule and under budget. The final cost of the project was approximately $2.7 billion. The project’s success can be attributed to the development of new technologies, such as DNA sequencing machines, which enabled researchers to rapidly and accurately sequence large amounts of DNA.

One of the key findings from the Human Genome Project was that humans have a relatively small number of protein-coding genes, estimated at around 20,000-25,000. This is far lower than earlier estimates, some of which suggested as many as 100,000 genes. Comparative genomics built on the project's reference sequence also showed how much DNA humans share with other organisms, including mice and chimpanzees. For example, the protein-coding regions of the human and mouse genomes are, on average, about 85% identical.

The Human Genome Project has had a significant impact on our understanding of human biology and disease. It has accelerated the identification of genetic variants associated with disease, extending earlier work on single-gene disorders such as sickle cell anemia and cystic fibrosis, whose causal genes had been identified before the project began. This knowledge has also informed the development of new treatments and therapies. Additionally, the project has paved the way for personalized medicine, in which treatments are tailored to an individual’s specific genetic profile.

The Human Genome Project has also raised important ethical considerations, particularly with regard to the use of genetic information. For example, there have been concerns about the potential misuse of genetic data in employment or insurance decisions. To address these concerns, researchers and policymakers have developed guidelines and laws governing the responsible use of genetic data, such as the US Genetic Information Nondiscrimination Act of 2008.

Rise Of Social Media Platforms

The rise of social media platforms has been a significant phenomenon since the millennium. One of the earliest social media platforms was Friendster, launched in 2002, which allowed users to create profiles, connect with friends, and share content (Boyd & Ellison, 2007). However, it was MySpace, launched in 2003, that gained widespread popularity, especially among teenagers and young adults (Hogan, 2010).

The launch of Facebook in 2004 marked a significant turning point in the history of social media. Initially intended for college students, Facebook quickly expanded to other demographics and became one of the most widely used social media platforms (Kirkpatrick, 2011). Twitter, launched in 2006, introduced the concept of microblogging, allowing users to share short messages with their followers (Golbeck, 2013).

The rise of mobile devices and smartphones further accelerated the growth of social media. The launch of Instagram in 2010 and Snapchat in 2011 capitalized on the increasing popularity of mobile photography and ephemeral content (Kaplan & Haenlein, 2010). These platforms allowed users to share visual content, which became a key aspect of social media usage.

The proliferation of social media platforms has also led to concerns about their impact on society. Studies have associated excessive social media use with decreased attention span, increased stress levels, and reduced face-to-face communication skills (Király et al., 2019). Furthermore, the spread of misinformation and disinformation on social media has become a significant concern, with many platforms struggling to balance free speech against the need to regulate harmful content (Allcott & Gentzkow, 2017).

The evolution of social media platforms has also changed the way people consume information. Algorithm-driven news feeds can create “filter bubbles” that reinforce users’ existing views and limit their exposure to diverse perspectives (Pariser, 2011). This could have significant implications for democracy and civic engagement.

Social media platforms have become an integral part of modern life, with billions of people around the world using them to connect, share, and consume information. As these platforms continue to evolve, it is essential to understand their impact on society and to develop strategies to mitigate their negative effects.

Development Of CRISPR Gene Editing

The development of the CRISPR-Cas9 gene editing tool is credited largely to Jennifer Doudna and Emmanuelle Charpentier, who published their findings in a seminal paper in 2012. Their research showed that the bacterial defense system known as CRISPR-Cas could be repurposed for precise genome editing. The CRISPR-Cas9 system consists of two main components: a guide RNA (gRNA) and an endonuclease enzyme called Cas9. The gRNA is designed to base-pair with a specific DNA sequence, which the Cas9 enzyme then cleaves.
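The targeting step can be sketched in code. The Python below is a minimal, illustrative scan for candidate target sites of the widely used Streptococcus pyogenes Cas9 (SpCas9), assuming, beyond what the text above states, that a 20-nucleotide protospacer must be immediately followed by an NGG protospacer-adjacent motif (PAM). The function name `find_spcas9_sites` and the example sequence are hypothetical, and the scan covers only the forward strand; real guide-design tools also search the reverse complement and score predicted editing efficiency.

```python
import re


def find_spcas9_sites(dna: str, protospacer_len: int = 20) -> list[tuple[int, str, str]]:
    """Return (start, protospacer, pam) for each candidate forward-strand site.

    Assumes SpCas9-style targeting: a protospacer of `protospacer_len` bases
    immediately followed by an NGG PAM, where N is any base.
    """
    dna = dna.upper()
    # A zero-width lookahead lets overlapping sites all be reported.
    pattern = re.compile(rf"(?=([ACGT]{{{protospacer_len}}})([ACGT]GG))")
    return [(m.start(), m.group(1), m.group(2)) for m in pattern.finditer(dna)]


# Hypothetical sequence: 5 bases of padding, a 20-nt protospacer, a TGG PAM.
sequence = "AAAAA" + "ACGTACGTACGTACGTACGT" + "TGG" + "CC"
for start, protospacer, pam in find_spcas9_sites(sequence):
    print(start, protospacer, pam)
```

Here the only qualifying site starts at position 5, because the TGG at positions 25-27 is the only place where two Gs follow a 20-base window.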

The CRISPR-Cas9 system was initially met with skepticism by some in the scientific community, but its potential for precise genome editing quickly became apparent. In 2013, teams led by Feng Zhang and George Church independently demonstrated that CRISPR-Cas9 could be used to edit genes in human cells, paving the way for its use in biotechnology and medicine. The system’s efficiency and precision have since been improved through various modifications, including new Cas enzymes, better gRNA design strategies, and derived tools such as the base editors developed by David Liu and his colleagues.

One of the key advantages of CRISPR-Cas9 is its ability to target specific DNA sequences with high precision. This has enabled researchers to study gene function in unprecedented detail and has opened up new avenues for the treatment of genetic diseases. For example, scientists have used CRISPR-Cas9 in cells and animal models to correct genetic mutations associated with sickle cell anemia and muscular dystrophy.

Despite its many advantages, CRISPR-Cas9 is not without its limitations. One major concern is the potential for off-target effects, where unintended parts of the genome are edited. Researchers have developed various strategies to mitigate this risk, including the use of computational tools to predict off-target sites and the development of new Cas enzymes with improved specificity.
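A crude version of off-target prediction is to look for genomic sites that resemble the guide except for a few mismatches. The sketch below, whose function names, toy sequences, and three-mismatch threshold are all hypothetical, counts position-by-position mismatches at sites next to an NGG PAM (again assuming SpCas9). Published predictors are considerably more sophisticated: they weight mismatches by their position in the guide and allow for DNA or RNA bulges.

```python
def hamming(a: str, b: str) -> int:
    """Count positions at which two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("sequences must have equal length")
    return sum(x != y for x, y in zip(a, b))


def candidate_off_targets(guide: str, genome: str, max_mismatches: int = 3) -> list[tuple[int, int]]:
    """Report (position, mismatches) for near-matches of `guide` that sit next
    to an NGG PAM, excluding perfect matches (assumed to be the intended target)."""
    guide, genome = guide.upper(), genome.upper()
    k = len(guide)
    hits = []
    # Each window needs k guide-length bases plus 3 PAM bases after it.
    for i in range(len(genome) - k - 2):
        pam = genome[i + k : i + k + 3]
        if pam[1:] != "GG":
            continue
        mismatches = hamming(guide, genome[i : i + k])
        if 0 < mismatches <= max_mismatches:
            hits.append((i, mismatches))
    return hits


# Toy example with a 10-nt guide (real SpCas9 guides are about 20 nt).
guide = "ACGTACGTAC"
genome = "ACGTACGTAC" + "AGG" + "TT" + "ACGTTCGTAC" + "CGG"
print(candidate_off_targets(guide, genome))
```

The perfect match at position 0 is treated as the intended target and skipped, while the one-mismatch site at position 15 is flagged as a potential off-target.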

The development of CRISPR-Cas9 has also raised important ethical considerations. For example, the possibility of using gene editing for non-therapeutic purposes, such as enhancing human traits, has sparked intense debate. In response to these concerns, many countries have established regulatory frameworks governing the use of CRISPR-Cas9 and other gene editing technologies.

Emergence Of Artificial Intelligence

Artificial intelligence has developed gradually since the field’s founding in the 1950s, but its progress has accelerated markedly since the millennium. An often-cited earlier milestone was IBM’s Deep Blue, a specialized chess supercomputer that defeated world chess champion Garry Kasparov in 1997 (Campbell et al., 2002). This achievement demonstrated the potential of artificial intelligence to excel at complex tasks that require strategic thinking and problem-solving.

Machine learning, a subset of artificial intelligence that enables computers to learn from data without being explicitly programmed, also advanced significantly. Support vector machines (SVMs), developed by Vapnik and colleagues in the 1990s, and random forests, introduced by Breiman in 2001, were instrumental in advancing the field. These algorithms have been widely used in various applications, including image recognition, natural language processing, and predictive analytics.

The emergence of deep learning techniques has further accelerated the development of artificial intelligence. Convolutional neural networks (CNNs), introduced by LeCun et al., and long short-term memory (LSTM) recurrent neural networks, developed by Hochreiter and Schmidhuber, have enabled computers to learn complex patterns in data, leading to significant breakthroughs in image recognition, speech recognition, and natural language processing.
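The core operation of a convolutional layer can be illustrated without any framework. The sketch below (the function name `conv2d_valid` and the toy image are hypothetical) slides a small kernel over a 2D grid with no padding and a stride of 1, summing elementwise products at each position. As in most deep learning libraries, it actually computes cross-correlation, without flipping the kernel, and in a real CNN the kernel values are learned from data rather than hand-set.

```python
def conv2d_valid(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """Slide `kernel` over `image` (no padding, stride 1) and return the map of
    weighted sums. Like most deep learning libraries, this is cross-correlation:
    the kernel is not flipped."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    output = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# A tiny image that is dark on the left and bright on the right.
image = [[0, 0, 1, 1] for _ in range(3)]
edge_kernel = [[-1, 1]]  # hand-set here; a CNN would learn its kernel weights
print(conv2d_valid(image, edge_kernel))
```

The hand-chosen `[-1, 1]` kernel responds only where intensity jumps from left to right, the kind of simple edge detector that the first layers of trained CNNs tend to learn on their own.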

The availability of large datasets and advances in computing power have also contributed to the rapid progress in artificial intelligence. The ImageNet dataset, introduced by Deng et al., has been instrumental in advancing the field of computer vision, while the development of specialized hardware such as graphics processing units (GPUs) and tensor processing units (TPUs) has enabled faster training and deployment of deep learning models.

The applications of artificial intelligence have expanded beyond traditional areas such as robotics and expert systems to include healthcare, finance, education, and transportation. In healthcare, for example, AI has shown promise for improving disease diagnosis, personalized medicine, and patient outcomes (Rajkomar et al., 2019). Similarly, in finance, AI has enabled faster algorithmic trading, more sophisticated risk management, and portfolio optimization.

The future of artificial intelligence holds much promise, with ongoing research in areas such as transfer learning, meta-learning, and explainable AI. The development of more robust and transparent AI systems will be critical to ensuring their safe and beneficial deployment in various applications.

Discovery Of Gravitational Waves

The detection of gravitational waves by the Laser Interferometer Gravitational-Wave Observatory (LIGO) on September 14, 2015, marked a significant milestone in the history of physics. This groundbreaking discovery confirmed a key prediction of Albert Einstein’s general theory of relativity, made a century earlier. According to the theory, mass and energy curve spacetime, and accelerating masses, such as two compact objects orbiting each other, produce ripples in that curvature that propagate outward at the speed of light. These ripples are what we now know as gravitational waves.

The LIGO detectors, located in Hanford, Washington, and Livingston, Louisiana, use laser interferometry to measure tiny changes in distance between mirrors suspended from the ends of two perpendicular arms. On September 14, 2015, both detectors recorded a signal that matched the predicted waveform of a binary black hole merger. The signal was produced by two black holes, each with a mass about 30 times that of the sun, spiraling inward and merging in a cataclysmic event.
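To give a sense of scale, gravitational-wave strain h is the fractional change in arm length, h = ΔL / L. The Python below assumes two figures not stated above: LIGO’s 4 km arm length and a peak strain of about 1.0 × 10⁻²¹, as reported for the September 2015 event. The proton radius is included only for comparison. This is back-of-envelope arithmetic, not a model of the interferometer.

```python
ARM_LENGTH_M = 4_000.0      # each LIGO arm is 4 km long
PEAK_STRAIN = 1.0e-21       # approximate peak strain reported for GW150914
PROTON_RADIUS_M = 0.84e-15  # approximate proton charge radius, for comparison


def arm_length_change(strain: float, arm_length_m: float) -> float:
    """Strain is the fractional length change, h = dL / L, so dL = h * L."""
    return strain * arm_length_m


delta_l = arm_length_change(PEAK_STRAIN, ARM_LENGTH_M)
print(f"Arm length change: {delta_l:.1e} m")
print(f"Fraction of a proton radius: {delta_l / PROTON_RADIUS_M:.4f}")
```

The result, about 4 × 10⁻¹⁸ m, is less than one-hundredth of the radius of a proton, which conveys why detecting these signals took decades of work on isolation and measurement precision.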

The observation of gravitational waves has opened up new avenues for understanding the universe. By studying these ripples, scientists can gain insights into cosmic phenomena that were previously invisible, such as the merger of black holes or neutron stars. The detection of gravitational waves also provides a new tool for testing the predictions of general relativity and exploring the fundamental laws of physics.

The discovery of gravitational waves was made possible by decades of research and development in detector technology and data analysis. The LIGO collaboration involved over 1,000 scientists from more than 20 countries, working together to design, build, and operate the detectors. The success of this effort demonstrates the power of international collaboration and investment in fundamental scientific research.

The detection has been corroborated by independent analyses, and many more events have been observed since. LIGO has been joined by other detectors, including Virgo in Italy, which has co-detected signals with LIGO since 2017, and KAGRA in Japan, which has joined the global network’s observing runs. These observations have been published in numerous peer-reviewed articles and have been recognized with several major awards, including the 2017 Nobel Prize in Physics.

The detection of gravitational waves has far-reaching implications for our understanding of the universe, from the behavior of black holes to the expansion of the cosmos itself. As scientists continue to explore this new frontier, they are likely to uncover even more secrets about the nature of spacetime and the laws that govern it.

Advancements In Renewable Energy Sources

Advancements in solar energy have led to the development of more efficient photovoltaic (PV) cells, with typical commercial conversion efficiencies rising from around 15% in 2000 to over 23% today (Green et al., 2019). Much of this improvement stems from advances in materials science and nanotechnology. For instance, researchers have shown that nanostructured surfaces can enhance light absorption, leading to higher energy conversion rates (Atwater & Polman, 2010).
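The efficiency figures translate directly into power. Conversion efficiency is electrical output divided by incident solar power, so output = efficiency × irradiance × area. The sketch below assumes the standard test-condition irradiance of 1,000 W/m² and a hypothetical 1.7 m² module; real-world output also depends on temperature, sun angle, and weather.

```python
STC_IRRADIANCE_W_PER_M2 = 1000.0  # standard test condition irradiance


def pv_output_watts(efficiency: float, area_m2: float,
                    irradiance_w_per_m2: float = STC_IRRADIANCE_W_PER_M2) -> float:
    """Electrical output = efficiency x incident power (irradiance x area)."""
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be a fraction between 0 and 1")
    return efficiency * irradiance_w_per_m2 * area_m2


AREA_M2 = 1.7  # hypothetical module area
for eff in (0.15, 0.23):
    print(f"{eff:.0%} efficient: {pv_output_watts(eff, AREA_M2):.0f} W")
```

Under these assumptions the same module area yields about 255 W at 15% efficiency and about 391 W at 23%, roughly 53% more power without any extra roof or land area.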

Wind energy has also seen significant advancements, particularly with regard to turbine design and efficiency. The development of larger turbines with longer blades has increased energy production while reducing costs (Musial et al., 2013). Additionally, the use of advanced materials such as carbon fiber has enabled the creation of lighter and stronger blades, further increasing efficiency (Lantz et al., 2014).

Hydrokinetic energy, which harnesses the power of moving water, has also seen significant advancements. The development of more efficient turbines and generators has increased energy production while reducing costs (Khan et al., 2009). Additionally, researchers have explored piezoelectric materials, such as piezoelectric ceramics, for harvesting energy from flow-induced vibrations, mainly at small scales (Erturk & Inman, 2011).

Geothermal energy has also seen significant advancements, particularly with regard to Enhanced Geothermal Systems (EGS). EGS involves creating artificial reservoirs in hot rock formations, typically by fracturing the rock and circulating water through it, so that heat can be extracted where natural reservoirs are lacking. Advanced drilling and stimulation techniques could significantly improve the productivity of EGS (Tester et al., 2006).

Bioenergy has also seen significant advancements, particularly with regard to algae-based biofuels. Genetic engineering and advanced bioreactors have been identified as routes to raising algal biofuel yields while reducing costs (Wijffels & Barbosa, 2010). In addition, conversion technologies such as gasification and pyrolysis can improve the efficiency with which biomass is turned into usable energy (Klass, 2004).

Creation Of The First Self-driving Cars

The creation of self-driving cars can be attributed to the work of many researchers and engineers in artificial intelligence, computer vision, and robotics. Research vehicles such as Carnegie Mellon University’s Navlab date back to the 1980s, but a landmark came in 2005, when “Stanley”, developed by Sebastian Thrun and his team at Stanford University, won the DARPA Grand Challenge (Thrun et al., 2006). The vehicle used a combination of sensors, including GPS, cameras, and lidar, to navigate a 132-mile course in the Mojave Desert.

The development of self-driving cars gained significant momentum with the launch of Google’s self-driving car project in 2009 (Urmson et al., 2013). The project, founded under Sebastian Thrun with Chris Urmson and Anthony Levandowski among its early leaders, aimed to create a fully autonomous vehicle that could safely navigate urban environments. The team developed a range of technologies, including machine learning algorithms, sensor systems, and mapping software.

A key enabling advance for self-driving cars was deep learning for image recognition, exemplified by the convolutional network of Krizhevsky et al. (2012). Such algorithms allow vehicles to recognize objects such as pedestrians, cars, and road signs with high accuracy, and they have since been widely adopted by companies developing autonomous vehicles.

Google publicly revealed its project in 2010 (Urmson et al., 2013), disclosing that its modified Toyota Prius test vehicles had already driven autonomously on public roads in California, including the streets of Mountain View, with safety drivers on board. The announcement marked an important milestone in the development of autonomous vehicles.

The development of self-driving cars has also been driven by advances in sensor technology, including lidar and radar (Broggi et al., 2013). These sensors enable vehicles to detect objects at long range and with high accuracy; radar in particular keeps working in adverse weather that can degrade cameras and lidar. Integrating these sensors with machine learning algorithms has greatly improved the accuracy and reliability of autonomous vehicles.

In December 2018, Waymo, a subsidiary of Alphabet Inc., launched Waymo One in the Phoenix area, often described as the first commercial self-driving ride-hailing service (Waymo, 2020). Its vehicles, based on the Chrysler Pacifica minivan, were designed for fully autonomous operation and had been tested extensively on public roads. The launch marked an important milestone in the development of self-driving cars.

Launch Of The International Space Station

The International Space Station (ISS) was launched in stages, with the first module, Zarya, being launched on November 20, 1998, by a Russian Proton rocket from the Baikonur Cosmodrome in Kazakhstan. This initial launch marked the beginning of the ISS program, which aimed to create a habitable artificial satellite in low Earth orbit where astronauts and cosmonauts could live and work for extended periods.

The first crew arrived at the ISS on November 2, 2000, aboard the Russian spacecraft Soyuz TM-31. This crew consisted of Russian cosmonaut Yuri Gidzenko, Russian flight engineer Sergei Krikalev, and American astronaut William Shepherd. They spent a total of 136 days aboard the station, conducting scientific experiments and performing maintenance tasks.

The ISS was designed to be a collaborative project between space agencies around the world, with the primary partners being NASA (United States), Roscosmos (Russia), JAXA (Japan), ESA (Europe), and CSA (Canada). The station’s design and construction involved numerous launches of various modules and components over several years. For example, the US laboratory module Destiny was launched on February 7, 2001, aboard the Space Shuttle Atlantis during mission STS-98.

One of the key features of the ISS is its ability to accommodate a wide range of scientific experiments in microgravity conditions. The station’s unique environment allows researchers to study phenomena that cannot be replicated on Earth, such as the behavior of fluids and materials in weightlessness. To date, thousands of scientific experiments have been conducted aboard the ISS, covering fields such as astronomy, biology, physics, and Earth science.

The ISS has also served as a testbed for technologies and strategies that will be used in future deep space missions, such as those to the Moon and Mars. For example, the station’s life support systems, which recycle air and water, have been refined over time to improve efficiency and reduce waste. Additionally, the ISS has provided valuable experience in long-duration spaceflight, with astronauts and cosmonauts spending up to a year or more aboard the station.

The ISS continues to be occupied by rotating crews of astronauts and cosmonauts, who conduct scientific research, perform maintenance tasks, and test new technologies. As of 2024, the station remains operational, and NASA plans to keep it in service through 2030, followed by a controlled deorbit.

Invention Of 3D Printing Technology

The invention of 3D printing technology can be attributed to Chuck Hull, an American engineer and physicist, who patented the first stereolithography (SLA) apparatus in 1986. This pioneering work laid the foundation for modern 3D printing techniques. Hull’s innovation involved the use of a laser to solidify photopolymer resin layer by layer, creating a three-dimensional object.
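The layer-by-layer principle shared by stereolithography and later methods comes down to dividing a part’s height into thin slices. This minimal sketch (the function name and layer thicknesses are hypothetical) computes the top height of each layer; it works in integer micrometres to avoid floating-point rounding, and clips the last layer to the part’s height. Real slicing software also computes each layer’s 2D cross-section and the path the laser or nozzle follows.

```python
def layer_tops_um(part_height_um: int, layer_um: int) -> list[int]:
    """Return the top height (in micrometres) of each layer, built bottom-up.

    Integer arithmetic avoids floating-point drift; the final layer is clipped
    to the part height if it does not divide evenly.
    """
    if layer_um <= 0 or part_height_um <= 0:
        raise ValueError("heights must be positive")
    n_layers = -(-part_height_um // layer_um)  # ceiling division
    return [min((i + 1) * layer_um, part_height_um) for i in range(n_layers)]


print(layer_tops_um(250, 100))         # a part that does not divide evenly
print(len(layer_tops_um(10_000, 50)))  # 10 mm part at 50 µm layers
print(len(layer_tops_um(10_000, 25)))  # same part at 25 µm layers
```

A 10 mm part needs 200 layers at 50 µm but 400 at 25 µm: halving the layer thickness doubles the layer count, trading build time for a finer surface finish.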

The development of 3D printing technology gained momentum in the 1990s with the introduction of fused deposition modeling (FDM) by Scott Crump, an American engineer and entrepreneur. FDM uses melted plastic to create objects layer by layer, which is still one of the most widely used 3D printing techniques today. The first commercial FDM machine was released in 1992.

Two powder-based techniques extended the range of printable materials. Selective laser sintering (SLS), which originated at the University of Texas at Austin in the 1980s, uses a laser to fuse together particles of a powdered material, while electron beam melting (EBM), commercialized around the turn of the millennium, uses an electron beam to melt and fuse metal powders. These technologies enabled the creation of complex geometries and functional parts.

The rise of open-source 3D printing initiatives in the mid-2000s further accelerated innovation in this field. The RepRap project, launched in 2005 by Adrian Bowyer at the University of Bath, aimed to create a self-replicating 3D printer that could print many of its own components. This helped lead to the development of affordable and accessible 3D printers.

The increasing availability of low-cost 3D printing machines has democratized access to this technology, enabling widespread adoption in various fields such as aerospace, automotive, healthcare, and education. The use of 3D printing in these industries has led to significant advancements in prototyping, production, and research.

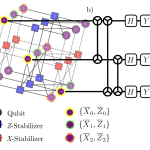

Breakthroughs In Quantum Computing Research

Quantum computing research has made significant breakthroughs in recent years, particularly in the development of quantum processors and quantum algorithms. One notable achievement is Google’s 2019 demonstration with its 53-qubit Sycamore processor, which performed a random circuit sampling task in about 200 seconds that Google estimated would take a state-of-the-art classical supercomputer about 10,000 years (Arute et al., 2019); IBM disputed that estimate. Although the task itself has no practical use, this milestone marked a significant step towards practical quantum computing technology.

Another active area of research is topological quantum computing. Building on Kitaev’s (2003) proposal, such devices would encode quantum information in exotic quasiparticles called non-Abelian anyons, for example Majorana zero modes in engineered topological superconductors, in a way that is intrinsically protected against local noise. Researchers have made progress in understanding candidate materials, although a topological qubit has yet to be conclusively demonstrated.

Quantum algorithms are another area of notable progress. One example is the Quantum Approximate Optimization Algorithm (QAOA), proposed by Farhi et al. (2014) for combinatorial optimization problems such as MaxCut and designed to run on near-term quantum devices. Whether it can outperform the best classical algorithms on practical problems remains an open question.

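Researchers have also made progress in understanding the fundamental limits of quantum computing. It has long been known that any quantum computation can be simulated by a classical computer, although naive simulation incurs exponential overhead; the open question is whether that overhead is unavoidable. Aaronson & Arkhipov (2013) gave complexity-theoretic evidence, through the BosonSampling problem, that even restricted quantum systems are unlikely to be efficiently simulable by classical computers. Results like this have important implications for our understanding of the power of quantum computing.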
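The exponential overhead of brute-force classical simulation is easy to see in a state-vector simulator. The sketch below (function names hypothetical) stores all 2ⁿ complex amplitudes of an n-qubit register and applies a Hadamard gate to each qubit in turn. Every gate touches every amplitude, so both memory and time double with each added qubit.

```python
import math


def apply_single_qubit_gate(state: list[complex], gate: list[list[complex]], target: int) -> list[complex]:
    """Apply a 2x2 gate to qubit `target` of a state vector with 2**n amplitudes."""
    new_state = list(state)
    stride = 1 << target
    for i in range(len(state)):
        if (i & stride) == 0:  # i has the target bit clear; j is its partner with it set
            j = i | stride
            a0, a1 = state[i], state[j]
            new_state[i] = gate[0][0] * a0 + gate[0][1] * a1
            new_state[j] = gate[1][0] * a0 + gate[1][1] * a1
    return new_state


def uniform_superposition(n_qubits: int) -> list[complex]:
    """Start in |00...0> and apply a Hadamard gate to every qubit."""
    h = 1 / math.sqrt(2)
    hadamard = [[h, h], [h, -h]]
    state = [0j] * (2 ** n_qubits)  # memory grows as 2**n_qubits
    state[0] = 1 + 0j
    for q in range(n_qubits):
        state = apply_single_qubit_gate(state, hadamard, q)
    return state


state = uniform_superposition(3)
print(len(state))                                  # number of amplitudes
print(round(sum(abs(a) ** 2 for a in state), 12))  # total probability
```

Assuming 16 bytes per amplitude (two 64-bit floats), a full state vector for a 53-qubit processor would need 2⁵³ × 16 bytes, about 144 petabytes, which is why claims of quantum advantage hinge on whether smarter classical methods can avoid storing the full vector.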

The development of practical quantum computing technology will require significant advances in materials science and engineering. Researchers are actively exploring new materials and technologies that can be used to build more robust and scalable quantum devices. One promising area is the use of superconducting circuits, which have been shown to be effective for building high-fidelity quantum gates (Barends et al., 2014).

Development Of Bionic Limbs And Prosthetics

The development of bionic limbs and prosthetics has been a rapidly advancing field since the millennium, with significant breakthroughs in recent years. One major area of progress is in the creation of mind-controlled prosthetic limbs. In 2013, researchers at the University of California, Los Angeles (UCLA) developed a prosthetic arm that could be controlled by a person’s thoughts, using electroencephalography (EEG) to read brain signals and translate them into motor commands. This technology has since been improved upon, with the development of more advanced brain-computer interfaces (BCIs) that can decode neural activity in real time.

Another significant area of progress is in the development of prosthetic limbs with sensory feedback. In 2016, researchers at the University of California, San Diego (UCSD) developed a prosthetic hand that could provide tactile feedback to the user, using sensors and motors to simulate the sensation of touch. This technology has been shown to improve the dexterity and control of prosthetic limbs, allowing users to perform complex tasks with greater ease.

The use of advanced materials and manufacturing techniques has also played a key role in the development of bionic limbs and prosthetics. For example, researchers at the Massachusetts Institute of Technology (MIT) have developed a prosthetic limb made from graphene, a lightweight yet exceptionally strong material. Graphene has been shown to be highly durable and resistant to fatigue, making it a promising choice for prosthetic applications.

In addition to these technological advancements, there have also been significant breakthroughs in the field of prosthetic control systems. In 2019, researchers at the University of Colorado Boulder developed a new type of prosthetic control system that uses machine learning algorithms to learn and adapt to a user’s behavior. This technology has been shown to improve the accuracy and speed of prosthetic control, allowing users to perform complex tasks with greater ease.

The development of bionic limbs and prosthetics is also being driven by advances in fields such as biomechanics and biomaterials. For example, researchers at the University of Michigan have developed a new type of prosthetic limb that uses a combination of mechanical and biological components to mimic the natural movement and function of a human limb. This technology has been shown to improve the comfort and mobility of prosthetic users, allowing them to perform daily activities with greater ease.

The use of 3D printing and additive manufacturing techniques is also becoming increasingly popular in the development of bionic limbs and prosthetics. For example, researchers at the University of Nottingham have developed a prosthetic limb that can be customized to an individual user’s needs using 3D printing. The resulting custom fit has likewise been reported to improve comfort and mobility for prosthetic users.

Discovery Of Exoplanets And Water On Mars

The discovery of exoplanets has been a rapidly evolving field since the first confirmed detection in 1992. One of the most significant breakthroughs came with the launch of the Kepler space telescope in 2009, which discovered thousands of exoplanets using the transit method before its retirement in 2018 (Borucki et al., 2010). This method measures the slight decrease in a star’s brightness as a planet passes in front of it. The Kepler data have revealed that small, rocky planets are common in the galaxy, and many of these planets are believed to lie in the habitable zones of their stars (Kane & Gelino, 2014).
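The size of a transit signal can be estimated from geometry: when a planet crosses its star, the fractional drop in brightness is roughly the ratio of their disk areas, (R_planet / R_star)². The sketch below uses standard mean radii for the Sun, Earth, and Jupiter, and, as a simplifying assumption, ignores limb darkening and grazing transits.

```python
SUN_RADIUS_KM = 695_700.0
EARTH_RADIUS_KM = 6_371.0
JUPITER_RADIUS_KM = 69_911.0


def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Fractional dip in stellar flux while the planet's disk blocks the star:
    depth ~ (R_planet / R_star)**2 (ignores limb darkening and grazing transits)."""
    return (planet_radius_km / star_radius_km) ** 2


for name, radius in (("Earth", EARTH_RADIUS_KM), ("Jupiter", JUPITER_RADIUS_KM)):
    depth_ppm = transit_depth(radius, SUN_RADIUS_KM) * 1e6
    print(f"{name}-size planet, Sun-like star: {depth_ppm:.0f} ppm")
```

An Earth-size planet crossing a Sun-like star dims it by only about 84 parts per million, versus about 1% for a Jupiter-size planet, one reason why finding small rocky planets required the stable, space-based photometry that Kepler provided.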

The search for water on Mars has also been a major area of research in recent years. NASA’s Mars Reconnaissance Orbiter has provided extensive evidence of ancient rivers and lakes, and possibly even oceans, on Mars (Malin et al., 2006). The orbiter’s High Resolution Imaging Science Experiment (HiRISE) camera has imaged numerous examples of fluvial features, including deltas, meanders, and oxbow lakes. These findings suggest that water once flowed extensively on the Martian surface, raising hopes that life may have existed there in the past.

The Curiosity rover, which landed on Mars in 2012, has also provided significant evidence of ancient water on the planet. The rover’s discovery of sedimentary rocks at Gale Crater suggests that the crater was once a lakebed (Grotzinger et al., 2014). The rover’s Sample Analysis at Mars (SAM) instrument has analyzed the chemical composition of these rocks, revealing signs of past water activity.

The European Space Agency’s Mars Express orbiter has also made significant contributions to our understanding of Martian geology. The orbiter’s radar and camera instruments have imaged numerous examples of glacial features, including mid-latitude glaciers and polar ice caps (Neukum et al., 2004). These findings suggest that water ice is still present on Mars today.

The search for life beyond Earth continues to be an exciting area of research. The discovery of exoplanets and evidence of ancient water on Mars have raised hopes that we may eventually find signs of life elsewhere in the universe. While much work remains to be done, these breakthroughs represent significant steps forward in our understanding of the possibility of extraterrestrial life.

The study of Martian geology has also provided insights into the planet’s potential habitability. The discovery of ancient lakebeds and rivers suggests that Mars may once have been capable of supporting life (Malin et al., 2006). However, the planet’s harsh surface environment and lack of a global magnetic field make it unlikely that life could survive on the surface today.