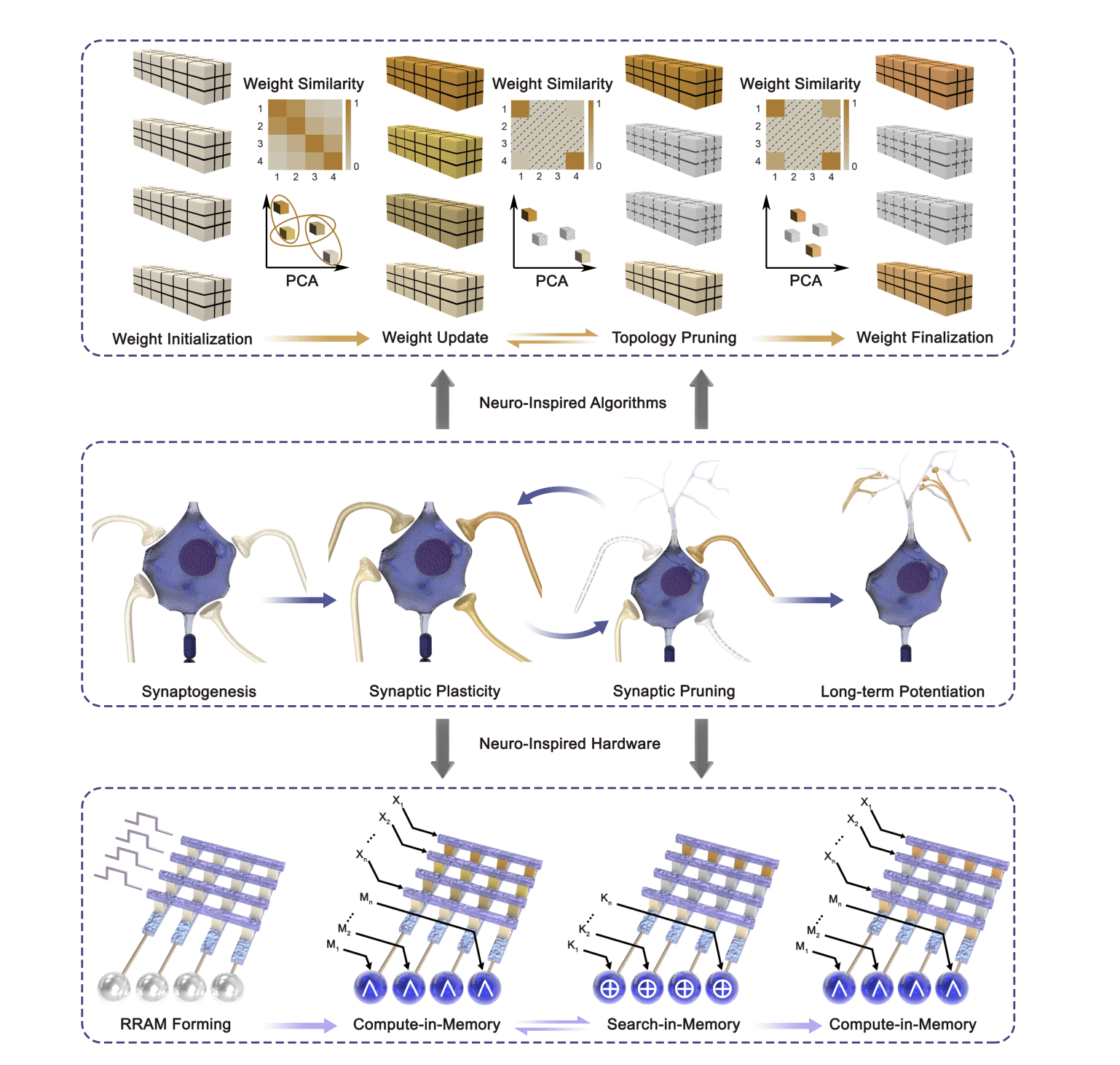

A novel software-hardware co-design achieves substantial gains in energy efficiency and silicon area by mimicking brain-inspired computation. Real-time weight pruning reduces operations by up to 59.94% without loss of accuracy, while a reconfigurable compute-in-memory chip utilising resistive random-access memory (RRAM) lowers energy consumption by up to 86.53% compared with an RTX 4090 graphics processing unit.

The pursuit of artificial intelligence increasingly focuses on mimicking the efficiency of the human brain, where computation and memory are intrinsically linked and neural connections are dynamically refined through learning. Current AI systems, however, typically rely on separate processing and memory units, creating a significant energy bottleneck. Researchers are now demonstrating a novel approach to address this limitation, integrating both learning and structural adaptation within a single hardware architecture.

Songqi Wang, Jichang Yang, Yingjie Yu, Yi Li, Zhongrui Wang, Xiaojuan Qi, Han Wang, Jia Chen, Xinyuan Zhang, Yi Li, Ning Lin, Yangu, and Yue Zhang detail their work in the article “Reconfigurable Digital RRAM Logic Enables In-Situ Pruning and Learning for Edge AI”. They present a software-hardware co-design that performs real-time weight pruning and learning directly within memory, utilising resistive random-access memory (RRAM) technology. Their system, based on a fully digital compute-in-memory chip, aims to significantly reduce energy consumption and silicon area, offering a scalable pathway towards more efficient edge intelligence.
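To make the idea of pruning concrete, the sketch below shows one way redundant weights could be removed and the resulting saving in operations estimated. It is only an illustration under stated assumptions: the article does not specify the pruning criterion, so the code assumes that binary kernels which closely match an already retained kernel are treated as redundant. The function names, the bit-packed kernel encoding, and the threshold are hypothetical and are not taken from the paper.

```python
from typing import List


def similarity(a: int, b: int, n_bits: int) -> float:
    """Fraction of bit positions on which two bit-packed kernels agree."""
    mask = (1 << n_bits) - 1
    differing = bin((a ^ b) & mask).count("1")  # XOR marks mismatching bits
    return (n_bits - differing) / n_bits


def prune_similar_kernels(kernels: List[int], n_bits: int, threshold: float) -> List[int]:
    """Return indices of kernels to keep.

    Assumption (not from the article): a kernel is pruned when its similarity
    to any kernel already kept is at least `threshold`.
    """
    kept: List[int] = []
    for idx, kernel in enumerate(kernels):
        redundant = any(
            similarity(kernel, kernels[j], n_bits) >= threshold for j in kept
        )
        if not redundant:
            kept.append(idx)
    return kept


def operation_reduction(total: int, kept: int) -> float:
    """Fraction of kernel evaluations avoided after pruning."""
    return 1.0 - kept / total


# Hypothetical 8-bit kernels: two near-duplicates of the first, one distinct.
kernels = [0b11110000, 0b11110001, 0b00001111, 0b11110000]
kept_indices = prune_similar_kernels(kernels, n_bits=8, threshold=0.875)
saving = operation_reduction(len(kernels), len(kept_indices))
print(kept_indices, saving)
```

In this toy case half of the kernels are skipped. The 59.94% figure reported in the article comes from the authors' own method and networks, not from this sketch.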

The pursuit of energy-efficient computation is driving interest in architectures that mirror the human brain’s inherent efficiency. Conventional computing systems based on the von Neumann architecture suffer a performance bottleneck because data must constantly move between processing units and memory, which also increases energy consumption. Compute-in-memory (CIM), an emerging paradigm, processes data directly within the memory itself, circumventing this bottleneck and promising substantial gains in both speed and energy efficiency.

Researchers are developing CIM chips that utilise resistive random-access memory (RRAM), prioritising accuracy and scalability while addressing challenges inherent in analogue implementations. RRAM, a type of non-volatile memory, alters its resistance based on applied voltage, allowing it to store data and perform computations. The presented work details a fully digital CIM chip fabricated using 180 nm one-transistor-one-resistor (1T1R) RRAM arrays, achieving zero bit-error rates and offering advantages over analogue RRAM CIM implementations. This chip embeds flexible Boolean logic, specifically NAND, AND, XOR, and OR gates, enabling both convolution and similarity evaluation directly within the memory itself and thereby eliminating the overhead of analogue-to-digital converters (ADCs) and digital-to-analogue converters (DACs). ADCs and DACs convert signals between analogue and digital formats, processes which consume energy and introduce latency.
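As a rough illustration of how simple Boolean gates can stand in for arithmetic, the sketch below computes a similarity score and two kinds of dot product (the core operation of a convolution) using only XOR, AND, and a bit count. It assumes binary vectors packed into Python integers; the function names and encodings are illustrative choices, and the chip's actual dataflow is not described in the article.

```python
def popcount(x: int) -> int:
    """Number of set bits in a non-negative integer."""
    return bin(x).count("1")


def hamming_similarity(a: int, b: int, n_bits: int) -> float:
    """Similarity from XOR: the fraction of positions where the bits agree."""
    mask = (1 << n_bits) - 1
    differing = popcount((a ^ b) & mask)
    return (n_bits - differing) / n_bits


def binary_dot(a: int, b: int, n_bits: int) -> int:
    """Dot product of two {0, 1} vectors: AND each bit pair, then count ones."""
    mask = (1 << n_bits) - 1
    return popcount(a & b & mask)


def bipolar_dot(a: int, b: int, n_bits: int) -> int:
    """Dot product of two {-1, +1} vectors encoded as bits (1 -> +1, 0 -> -1).

    Matching positions contribute +1 and mismatching positions -1, so the
    result is n_bits minus twice the XOR bit count.
    """
    mask = (1 << n_bits) - 1
    differing = popcount((a ^ b) & mask)
    return n_bits - 2 * differing


window = 0b1011  # hypothetical 4-bit input patch
kernel = 0b1001  # hypothetical 4-bit weight kernel
print(hamming_similarity(window, kernel, 4))
print(binary_dot(window, kernel, 4))
print(bipolar_dot(window, kernel, 4))
```

Because every step is a bitwise gate followed by counting, no analogue current summation is needed, which is consistent with the article's point that a fully digital design avoids conversion overhead.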

Comparative analysis shows improvements in both silicon area and energy efficiency. The digital CIM design reduces silicon area by 72.30% and overall energy consumption by 57.26% when contrasted with analogue RRAM CIM implementations. Furthermore, the system achieves energy reductions of 75.61% and 86.53% on the MNIST and ModelNet10 datasets respectively, relative to a high-performance RTX 4090 graphics processing unit. MNIST is a widely used dataset of handwritten digits, while ModelNet10 comprises 3D CAD models; both are commonly used for benchmarking machine learning algorithms.
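Percentage reductions can be easier to compare when restated as improvement factors. The short sketch below converts the figures quoted above into "times lower" ratios; the helper name and the dictionary labels are illustrative, and the only inputs are the percentages reported in the article.

```python
def reduction_to_factor(percent_reduction: float) -> float:
    """Convert a percentage reduction into an improvement factor.

    A reduction of p% leaves (100 - p)% of the baseline, so the baseline is
    100 / (100 - p) times larger than the reduced value.
    """
    if not 0.0 <= percent_reduction < 100.0:
        raise ValueError("reduction must be in the range [0, 100)")
    remaining = 1.0 - percent_reduction / 100.0
    return 1.0 / remaining


# Percentages as reported in the article.
reported = {
    "energy vs RTX 4090 (MNIST)": 75.61,
    "energy vs RTX 4090 (ModelNet10)": 86.53,
    "silicon area vs analogue RRAM CIM": 72.30,
    "energy vs analogue RRAM CIM": 57.26,
}

factors = {label: reduction_to_factor(pct) for label, pct in reported.items()}
for label, factor in factors.items():
    print(f"{label}: {factor:.2f}x lower")
```

Read this way, the 86.53% energy reduction on ModelNet10 corresponds to roughly 7.4 times less energy than the GPU baseline, and the 75.61% reduction on MNIST to roughly 4.1 times less.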

This CIM technology targets applications in which intelligent devices must perform complex tasks with minimal energy consumption. It holds particular potential for edge computing, enabling AI capabilities in resource-constrained environments such as mobile devices and IoT sensors. Energy-efficient CIM chips could also support more sustainable AI by reducing the environmental impact of increasingly complex machine learning models.

This work builds upon a growing trend in neuromorphic computing, which seeks to emulate the structure and function of the human brain to achieve greater efficiency and performance. By moving computation closer to memory, CIM technologies overcome the limitations of the von Neumann architecture and enable more efficient processing of data.

The development of this CIM technology required a multidisciplinary approach, bringing together experts in computer architecture, circuit design, and machine learning. This collaborative effort highlights the importance of interdisciplinary research in addressing complex challenges in the field of artificial intelligence.

Future research will focus on scaling up the CIM chip to accommodate larger and more complex neural networks, improving the energy efficiency of the system, and exploring new applications for this technology. Researchers also plan to investigate advanced materials and fabrication techniques to further enhance the performance and reliability of the CIM chip.

This research represents a significant step towards realising the promise of brain-inspired computing, offering a path towards more efficient, scalable, and sustainable artificial intelligence. By overcoming the limitations of the von Neumann architecture and embracing the principles of neuromorphic computing, researchers are paving the way for a new era of intelligent systems.

👉 More information

🗞 Reconfigurable Digital RRAM Logic Enables In-Situ Pruning and Learning for Edge AI

🧠 DOI: https://doi.org/10.48550/arXiv.2506.13151