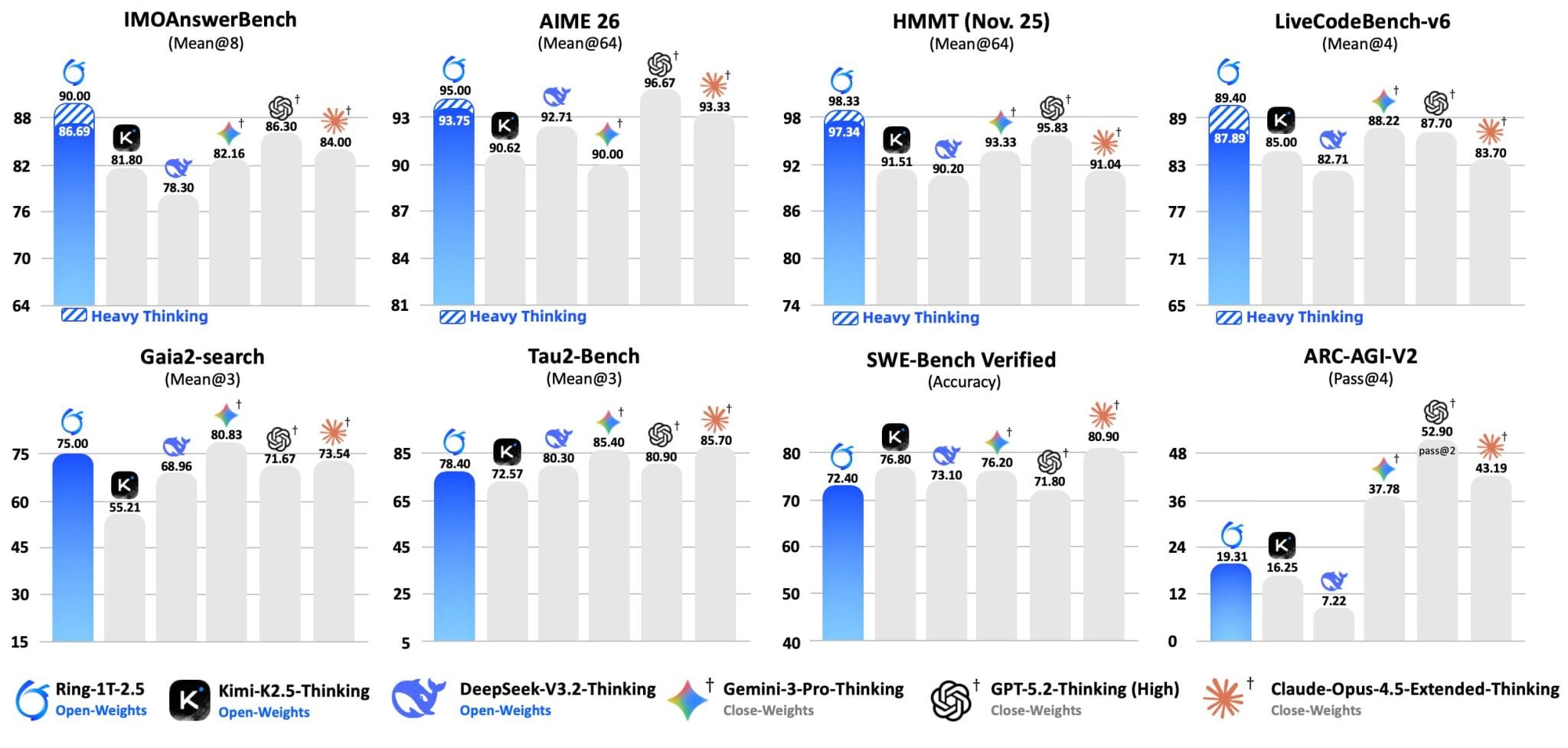

Ant Group has released Ring-1T-2.5, which it describes as the world’s first hybrid linear-architecture “thinking model” with a 1T-scale parameter design. According to the company, the model runs faster than conventional 32B models while using over 10 times less memory, without giving up reasoning quality. Ant Group reports gold-tier results on both IMO 2025 (35/42) and CMO 2025 (105/126), with the CMO score surpassing the cutoff for China’s national team. Designed for complex problem-solving and agentic workflows, the model is positioned against closed-source alternatives such as Gemini-3.0-Pro-thinking and GPT-5.2-thinking, and Ant Group claims open-source state-of-the-art performance across reasoning, mathematics, code, and agents.

Hybrid Linear Architecture Enables Ring-1T-2.5’s 1T Parameter Scale

Ring-1T-2.5 combines two attention mechanisms to process long inputs quickly and efficiently. Ant Group describes the design as a 1:7 blend of Multi-head Latent Attention (MLA) and Lightning Linear attention, coupled with partial RoPE and QK normalization. The company says this architecture delivers more than three times the throughput of existing systems at context lengths greater than 32K, which significantly reduces computational costs for complex reasoning tasks. The design also targets practical use: the model integrates with both Claude Code and the OpenClaw agent framework.
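To make the 1:7 ratio concrete, here is a minimal sketch of how such a layer schedule could be laid out. The function name, the layer labels, the 16-layer depth, and the choice to place the MLA layer at the end of each group of eight are all assumptions for illustration; the article does not describe Ring-1T-2.5's actual layer ordering or depth.

```python
def hybrid_layer_schedule(num_layers: int, linear_per_mla: int = 7) -> list[str]:
    """Build a hypothetical layer-type list with one MLA layer per
    `linear_per_mla` Lightning Linear layers (1:7 by default).

    Assumption: the MLA layer closes each group; the real ordering is not stated.
    """
    group = linear_per_mla + 1  # 7 linear layers + 1 MLA layer = 8 per group
    return [
        "mla" if (i + 1) % group == 0 else "lightning_linear"
        for i in range(num_layers)
    ]


# Illustrative depth only; not the model's real layer count.
schedule = hybrid_layer_schedule(16)
mla_count = schedule.count("mla")
linear_count = schedule.count("lightning_linear")
print(mla_count, linear_count)
```

With 16 layers the sketch yields 2 MLA layers and 14 Lightning Linear layers, which preserves the 1:7 ratio.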

The model’s capabilities extend to autonomous task completion: Ant Group says that in internal testing it built a functional TinyOS, powered by the ASystem RL engine. According to the company, Ring-1T-2.5 has a 1T-scale parameter design but activates only 63B parameters during inference. Even though that is roughly twice the active parameter count of a conventional 32B model, Ant Group claims the model runs faster and uses over 10 times less memory.
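The parameter figures above can be put in proportion with a little arithmetic. This is only a sketch: it treats "1T-scale" as exactly 10^12 parameters and "32B" as exactly 32 billion, both of which are rounding assumptions, and the constant names are made up for illustration.

```python
# Assumed round numbers; the article gives only "1T-scale", "63B", and "32B".
TOTAL_PARAMS = 1_000_000_000_000   # "1T-scale" total parameters
ACTIVE_PARAMS = 63_000_000_000     # active parameters during inference
DENSE_BASELINE = 32_000_000_000    # a conventional 32B model

# Share of the full model that participates in each forward pass.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

# How the active parameter count compares with the 32B baseline.
active_vs_baseline = ACTIVE_PARAMS / DENSE_BASELINE

print(f"active fraction: {active_fraction:.1%}")
print(f"active params vs 32B baseline: {active_vs_baseline:.2f}x")
```

Under these assumptions about 6.3% of the parameters are active per token, and the active count is about 1.97 times that of the 32B baseline. The claimed speed and memory advantage therefore cannot come from a smaller active parameter count alone, which is consistent with the article attributing it to the attention design.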

Dense-Reward RLVR Training Rivals Gemini & GPT-5.2-thinking

Ant Group attributes Ring-1T-2.5’s performance to its training methodology: dense-reward Reinforcement Learning with Verifiable Rewards (RLVR). According to the company, this approach lets the 1T-scale model rival Gemini-3.0-Pro-thinking and GPT-5.2-thinking on complex problem-solving tasks while activating only 63B parameters during inference. The result is less about scale than about how that scale is used, with Ant Group claiming the model runs faster than conventional 32B models while demanding over ten times less memory.

Ant Group also reports strong results on demanding academic challenges: a score of 35/42 on IMO 2025, which meets the gold medal threshold, and 105/126 on CMO 2025, “surpassing China’s national team cutoff,” according to the company. This suggests broad applicability beyond narrowly defined tasks.

With a 1T-scale parameter design and 63B active parameters during inference, Ring-1T-2.5 runs faster than conventional 32B models while using over 10x less memory.

Ant Group