On April 15, 2025, researchers Peng Du, Shuolei Wang, Shicheng Li, and Jinjing Shi introduced QAMA, a quantum annealing-based attention mechanism intended to make deep learning models more efficient by reducing memory consumption and energy costs while maintaining real-time responsiveness.

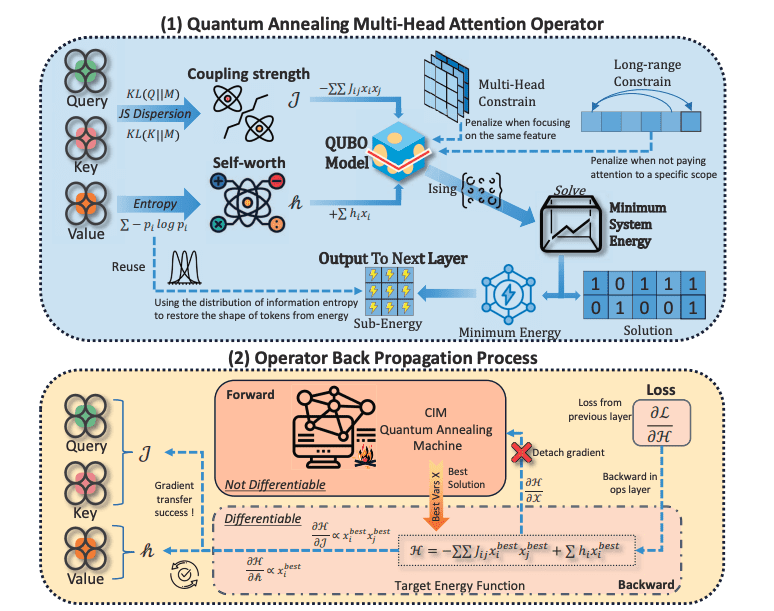

As large language models grow, conventional attention mechanisms face mounting memory and energy costs. This study introduces Quantum Annealing-based Multi-head Attention (QAMA), which uses quadratic unconstrained binary optimization (QUBO) and Ising models to bring resource consumption down to linear scaling (standard attention grows quadratically with sequence length) while maintaining accuracy. QAMA is designed to exploit the optical computing advantages of coherent Ising machines for millisecond-level responsiveness, and it uses a soft selection mechanism to overcome limitations of traditional attention. In the reported experiments, QAMA reaches accuracy comparable to classical methods with reduced inference time and improved efficiency, which the authors present as a pioneering architectural integration of quantum principles into deep learning.
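To make the QUBO and Ising formulations mentioned above more concrete, here is a minimal Python sketch. It is not the paper's formulation: the toy "relevance scores", the cardinality penalty, and all function names (`build_selection_qubo`, `qubo_to_ising`, `brute_force_qubo`) are hypothetical choices made for illustration, and an exhaustive search stands in for an annealer or coherent Ising machine. The sketch builds a small QUBO that picks the `k` most relevant keys, converts it to an equivalent Ising model via the substitution x = (1 + s) / 2, and solves it by brute force.

```python
import itertools
import numpy as np

def build_selection_qubo(scores, k, penalty):
    """Toy QUBO (an assumption, not QAMA's): reward high scores, penalize picking != k items.

    Minimizes  -sum_i scores[i]*x_i + penalty*(sum_i x_i - k)^2  up to the constant penalty*k^2.
    """
    scores = np.asarray(scores, dtype=float)
    n = len(scores)
    Q = penalty * (np.ones((n, n)) - np.eye(n))      # pairwise terms from the squared constraint
    Q += np.diag(-scores + penalty * (1 - 2 * k))    # linear terms live on the diagonal (x_i^2 = x_i)
    return Q

def qubo_energy(Q, x):
    """Energy x^T Q x for a binary vector x in {0, 1}^n."""
    x = np.asarray(x, dtype=float)
    return float(x @ Q @ x)

def qubo_to_ising(Q):
    """Rewrite x^T Q x as s^T J s + h.s + offset with spins s in {-1, +1}, using x = (1 + s) / 2."""
    S = (np.asarray(Q, dtype=float) + np.asarray(Q, dtype=float).T) / 2.0  # symmetrize
    J = S / 4.0
    np.fill_diagonal(J, 0.0)                         # s_i^2 = 1, so diagonal terms become constants
    h = S.sum(axis=1) / 2.0
    offset = S.sum() / 4.0 + np.trace(S) / 4.0
    return J, h, offset

def ising_energy(J, h, offset, s):
    """Ising energy for a spin vector s in {-1, +1}^n."""
    s = np.asarray(s, dtype=float)
    return float(s @ J @ s + h @ s + offset)

def brute_force_qubo(Q):
    """Exhaustive minimizer; a stand-in for annealing hardware, feasible only for tiny n."""
    n = Q.shape[0]
    best_x = min(itertools.product([0, 1], repeat=n), key=lambda x: qubo_energy(Q, x))
    return best_x, qubo_energy(Q, best_x)

# Hypothetical relevance scores for four keys; select the two most relevant.
Q = build_selection_qubo([0.9, 0.1, 0.7, 0.3], k=2, penalty=2.0)
best_x, best_energy = brute_force_qubo(Q)
print("selected keys:", best_x, "energy:", best_energy)
```

The exhaustive search scales as 2^n, which is exactly the cost that annealing-style hardware is meant to sidestep; the conversion function shows why a QUBO and an Ising model can be treated as two views of the same problem.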

At the core of this advancement lies parameterized Hamiltonian learning, a method that utilizes quantum circuits to learn Hamiltonians—mathematical descriptions of physical systems—for machine learning applications. This approach has been successfully applied to classify handwritten digits from the MNIST dataset and fashion items from the Fashion-MNIST dataset, both widely used benchmarks in machine learning.

The process involves encoding input data into quantum states, which are then processed by a parameterized quantum circuit. The circuit’s parameters are iteratively adjusted through an optimization algorithm, enabling it to learn the Hamiltonian that best represents the data. This method not only enhances classification accuracy but also provides insights into the underlying structure of the data.
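The encode, process, and optimize loop described above can be sketched with a classically simulated single qubit. This is only an illustration under stated assumptions, not the method from the paper: the angle encoding, the single trainable rotation, the toy data set, and the function names are all hypothetical. The trainable gate RY(theta) = exp(-i * theta * Y / 2) is generated by the Hamiltonian (theta / 2) * Y, so fitting `theta` amounts to learning one Hamiltonian coefficient. Gradients use the parameter-shift rule, which is exact for this kind of rotation gate.

```python
import numpy as np

def ry(angle):
    """Matrix of a Y rotation, RY(angle) = exp(-i * angle * Y / 2); real-valued for this gate."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]])

def expectation_z(x, theta):
    """Encode feature x as RY(x)|0>, apply trainable RY(theta), return the expectation of Z."""
    state = ry(theta) @ ry(x) @ np.array([1.0, 0.0])
    return float(state[0] ** 2 - state[1] ** 2)

def loss(xs, ys, theta):
    """Mean squared error between <Z> and the +1 / -1 labels."""
    preds = np.array([expectation_z(x, theta) for x in xs])
    return float(np.mean((preds - ys) ** 2))

def loss_gradient(xs, ys, theta):
    """Chain rule on the loss, with d<Z>/dtheta from the parameter-shift rule."""
    grad = 0.0
    for x, y in zip(xs, ys):
        f = expectation_z(x, theta)
        shift = np.pi / 2
        df = 0.5 * (expectation_z(x, theta + shift) - expectation_z(x, theta - shift))
        grad += 2.0 * (f - y) * df
    return grad / len(xs)

def train(xs, ys, theta=1.0, lr=0.2, steps=100):
    """Plain gradient descent on the single circuit parameter."""
    for _ in range(steps):
        theta -= lr * loss_gradient(xs, ys, theta)
    return theta

# Hypothetical toy data: small angles are class +1, angles near pi are class -1.
xs = np.array([0.1, 0.4, 2.8, 3.0])
ys = np.array([1.0, 1.0, -1.0, -1.0])

theta_trained = train(xs, ys)
print("loss before:", loss(xs, ys, 1.0), "loss after:", loss(xs, ys, theta_trained))
```

On real hardware each expectation value would be estimated from repeated measurements rather than computed exactly, which is where device noise enters the training loop.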

The implications of this innovation extend beyond image recognition. Parameterized Hamiltonian learning could significantly enhance various machine learning tasks by offering a more efficient alternative to classical algorithms. It holds particular promise for optimization problems, which are prevalent in fields such as logistics, finance, and drug discovery.

Moreover, this approach bridges the gap between quantum computing and practical applications, demonstrating that quantum methods can be effective even with current noisy intermediate-scale quantum (NISQ) devices. This marks a crucial step toward making quantum computing accessible and applicable to real-world challenges.

Despite these promising developments, several challenges remain. The noise inherent in NISQ devices can degrade performance, necessitating robust error mitigation, since full quantum error correction is beyond the reach of such devices. Additionally, efficient optimization algorithms for parameterized circuits are needed to scale these methods to more complex problems. Addressing these challenges will require collaboration across disciplines, combining insights from quantum physics and machine learning to unlock the full potential of this technology.

The integration of quantum computing with machine learning represents a significant leap forward in computational capabilities. Parameterized Hamiltonian learning exemplifies how quantum methods can enhance traditional algorithms, offering new possibilities for solving intricate problems. As research progresses, we anticipate further advancements that will bring us closer to realizing the transformative potential of quantum computing across various domains.

👉 More information

🗞 QAMA: Quantum annealing multi-head attention operator with classical deep learning framework

🧠 DOI: https://doi.org/10.48550/arXiv.2504.11083