The AMD CEO’s keynote in Las Vegas charts an ambitious course for artificial intelligence infrastructure, with compute demands set to grow 10,000-fold by decade’s end.

When the lights dimmed at the Venetian’s sprawling convention hall on Monday evening, AMD Chair and CEO Lisa Su took the stage to deliver the opening keynote of CES 2026. What followed was not merely a product announcement but a sweeping declaration about the future of computing itself: one in which the limiting factor for human progress is no longer imagination but raw computational capacity.

“AI is the most important technology of the last 50 years, and I can say it’s absolutely our number one priority at AMD,” Su proclaimed to an audience of thousands. Her message was unambiguous: artificial intelligence has transcended the domain of technologists and entered a new phase where it touches healthcare, science, manufacturing, commerce, and virtually every facet of modern life. And yet, according to Su, this transformation has barely begun.

From One Million to Five Billion: The Explosive Trajectory of AI Adoption

The statistical picture Su painted was nothing short of staggering. Since the launch of ChatGPT just a few years ago, the number of active AI users has exploded from approximately one million to over one billion. Su placed this trajectory in historical context, noting that the internet took decades to reach a similar milestone. But her projections extend far beyond current figures.

AMD anticipates AI adoption growing to over five billion active users—a number that would position artificial intelligence alongside the mobile phone and internet as technologies that are genuinely indispensable to daily life across the globe. This isn’t merely market optimism; it represents a fundamental thesis about how human beings will interact with technology in the coming years.

The compute infrastructure required to service this demand has already undergone a transformation that strains comprehension. Global computational capacity for AI has grown from approximately one zettaflop in 2022 to more than one hundred zettaflops in 2025, a hundredfold increase in just three years. Yet Su made clear this represents only a waypoint on a much longer journey.

Introducing the Yottaflop: Computing at Civilisational Scale

In one of the keynote’s more memorable moments, Su asked the audience how many were familiar with the term “yottaflop.” The sparse show of hands confirmed what she suspected: this unit of computational measurement, representing a one followed by twenty-four zeros in floating-point operations per second, remains exotic even to technology professionals.

That obscurity is about to end. AMD’s roadmap calls for global AI compute capacity to reach ten yottaflops within the next five years—ten thousand times the computational capacity available in 2022. Su did not understate the significance of this projection. “There’s just never, ever been anything like this in the history of computing,” she observed. “And that’s really because there’s never been a technology like AI.”
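The growth figures quoted in the keynote can be checked with a few lines of arithmetic. The following is a minimal Python sketch, not anything AMD presented: the 2030 end year is an assumption standing in for "within the next five years", and the constant yearly multiple is only an illustrative way to read the totals.

```python
# Figures quoted in the keynote, expressed in FLOP/s.
ZETTA = 10**21
YOTTA = 10**24

capacity = {
    2022: 1 * ZETTA,    # "approximately one zettaflop"
    2025: 100 * ZETTA,  # "more than one hundred zettaflops"
    2030: 10 * YOTTA,   # "ten yottaflops"; the year 2030 is an assumption
}

def growth(start_year, end_year):
    """Return (total multiple, implied constant yearly multiple)."""
    total = capacity[end_year] / capacity[start_year]
    years = end_year - start_year
    return total, total ** (1 / years)

for start, end in [(2022, 2025), (2025, 2030), (2022, 2030)]:
    total, yearly = growth(start, end)
    print(f"{start}-{end}: {total:,.0f}x overall, about {yearly:.2f}x per year")
```

Under these assumptions, the hundredfold rise from 2022 to 2025 works out to roughly 4.6x per year, while a further hundredfold rise spread over five years needs only about 2.5x per year. The ten-thousand-fold headline figure is simply the product of the two hundredfold steps.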

This yottascale vision requires AI capabilities across every compute platform: cloud infrastructure delivering intelligence globally, personal computers enabling smarter work and personalised experiences, and edge devices powering real-time decision-making in the physical world. AMD’s value proposition, as Su framed it, lies in being the only company with the full spectrum of compute engines—GPUs, CPUs, NPUs, and custom accelerators—necessary to realise this vision.

Helios: The Architecture of Yottascale AI

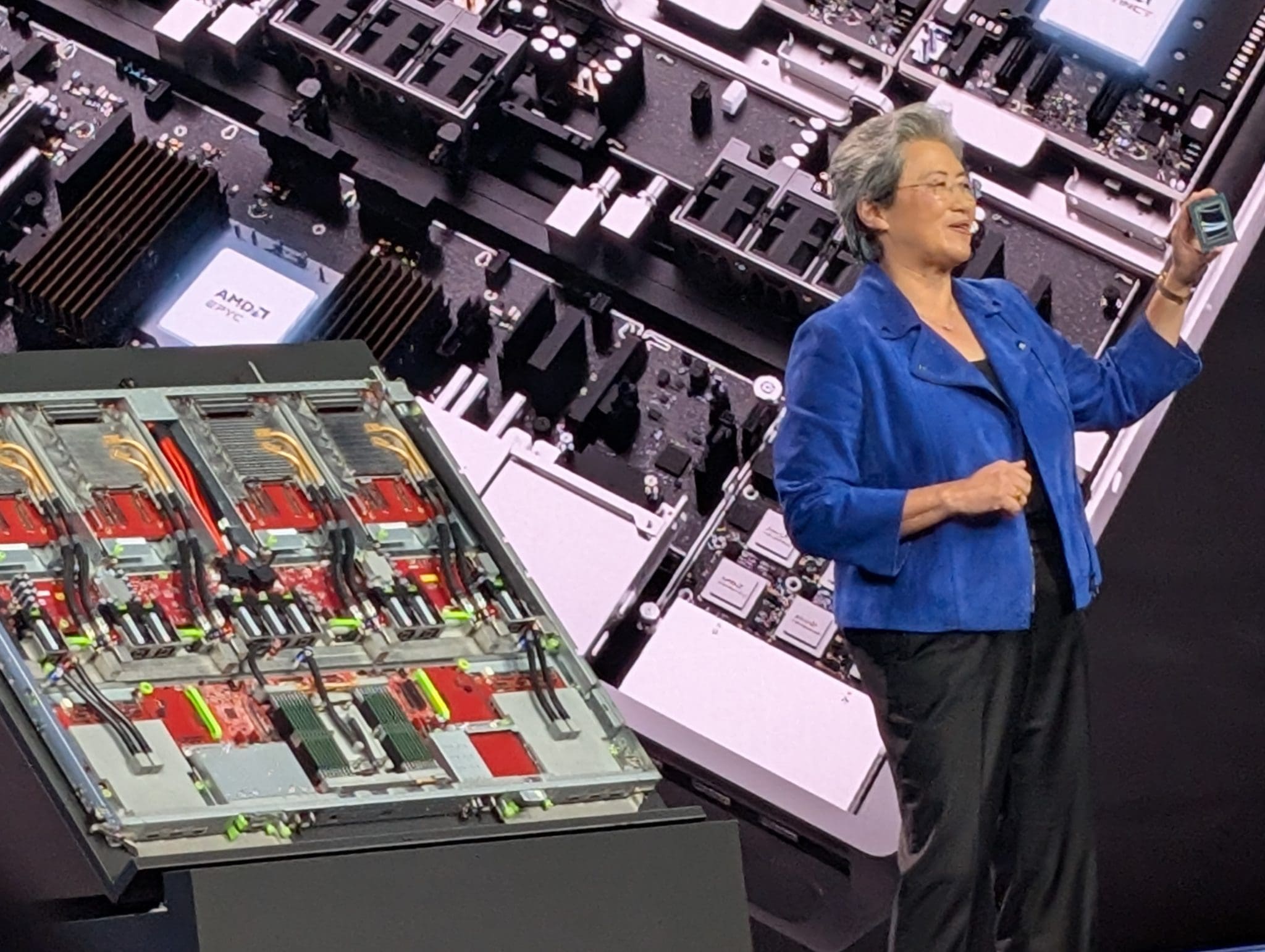

The centrepiece of AMD’s announcements was Helios, the company’s next-generation rack-scale platform designed specifically for the yottascale era. Rather than simply describing it, Su arranged for the physical infrastructure to be brought to the CES stage—a decision that required considerable logistics, given that a single Helios rack weighs nearly seven thousand pounds, more than two compact cars combined.

“Helios is a monster of a rack,” Su acknowledged. “This is no regular rack.” The system utilises a double-wide design based on the OCP Open Rack Wide standard, developed in collaboration with Meta, and represents AMD’s approach to integrating high-performance computing, advanced accelerators, and networking into a unified, scalable platform.

The technical specifications are formidable. Each Helios rack incorporates more than eighteen thousand CDNA5 GPU compute units and over forty-six hundred Zen 6 CPU cores, delivering up to 2.9 exaflops of performance. The system includes thirty-one terabytes of HBM4 memory with 260 terabytes per second of scale-up bandwidth and 43 terabytes per second of aggregate scale-out bandwidth. The rack’s seventy-two GPUs are connected via the high-speed Ultra Accelerator Link (UALink) protocol tunnelled over Ethernet, enabling them to function as a single unified compute unit. From there, thousands of Helios racks can be interconnected using industry-standard Ethernet networking and AMD’s Pensando programmable DPUs.

At the heart of Helios sits the MI455X, AMD’s most advanced chip to date. Su held up the processor for the audience to see: a component featuring 320 billion transistors, seventy percent more than its predecessor, the MI355. The chip incorporates twelve compute tiles fabricated on leading-edge two-nanometre and three-nanometre process technologies, paired with 432 gigabytes of ultra-fast HBM4 memory, all connected through AMD’s next-generation 3D chip-stacking architecture.
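The rack-level and chip-level numbers quoted above can be cross-checked against each other. The sketch below is a rough sanity check in Python that uses only the figures stated in this article. The per-GPU values are simple divisions and should be read as implied averages, not as published AMD specifications, and the article does not say which numeric precision the 2.9 exaflops figure refers to.

```python
# Stated figures from the keynote coverage above.
GPUS_PER_RACK = 72               # GPUs in one Helios rack
HBM4_PER_GPU_GB = 432            # HBM4 per MI455X
RACK_EXAFLOPS = 2.9              # "up to"; precision format not given
RACK_COMPUTE_UNITS_MIN = 18_000  # "more than eighteen thousand"
MI455X_TRANSISTORS_BN = 320      # billions of transistors
UPLIFT_OVER_PREDECESSOR = 0.70   # "seventy percent more"

# Derived values (illustrative only, not official specifications).
rack_hbm_tb = GPUS_PER_RACK * HBM4_PER_GPU_GB / 1000  # decimal terabytes
per_gpu_petaflops = RACK_EXAFLOPS * 1000 / GPUS_PER_RACK
per_gpu_cus_min = RACK_COMPUTE_UNITS_MIN / GPUS_PER_RACK
implied_predecessor_bn = MI455X_TRANSISTORS_BN / (1 + UPLIFT_OVER_PREDECESSOR)

print(f"HBM4 per rack: {rack_hbm_tb:.1f} TB")
print(f"Peak per GPU: about {per_gpu_petaflops:.0f} petaflops")
print(f"Compute units per GPU: at least {per_gpu_cus_min:.0f}")
print(f"Implied predecessor transistors: about {implied_predecessor_bn:.0f} billion")
```

Seventy-two GPUs at 432 gigabytes each gives about 31.1 terabytes, which matches the thirty-one terabytes quoted for the rack, so the memory figures are at least internally consistent.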

The performance claims are substantial. While the MI355, launched just six months prior, delivered up to three times more inference throughput than its predecessor, the MI455 extends this curve further, offering up to ten times more performance across a range of models and workloads. AMD expects Helios to set new benchmarks for AI performance when it launches later this year.

Powering the MI455 accelerators is Venice, AMD’s next-generation EPYC CPU. Built on two-nanometre process technology, Venice features up to 256 Zen 6 cores and has been specifically designed to serve as the optimal AI CPU, with doubled memory and GPU bandwidth compared to the prior generation. This allows Venice to feed MI455 with data at full speed, even at rack scale.
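The CPU figures allow a similar back-of-the-envelope check. The Python sketch below assumes every CPU socket in the rack is a fully populated 256-core Venice part, which the keynote coverage does not state, so the resulting socket count and GPU-to-CPU ratio are inferences and not confirmed specifications.

```python
import math

RACK_CPU_CORES_MIN = 4_600  # "over forty-six hundred Zen 6 CPU cores"
CORES_PER_VENICE = 256      # "up to 256 Zen 6 cores"; assumed for every socket
GPUS_PER_RACK = 72          # GPUs in one Helios rack

# Smallest number of 256-core CPUs that reaches the stated core count.
cpus = math.ceil(RACK_CPU_CORES_MIN / CORES_PER_VENICE)
total_cores = cpus * CORES_PER_VENICE
gpus_per_cpu = GPUS_PER_RACK / cpus

print(f"Implied Venice CPUs per rack: {cpus}")
print(f"Total cores at that count: {total_cores}")
print(f"GPUs served per CPU: {gpus_per_cpu:.0f}")
```

Under that assumption, eighteen CPUs would supply 4,608 cores, consistent with "over forty-six hundred", and each CPU would feed four GPUs.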

OpenAI’s Greg Brockman on the Compute Imperative

To underscore the urgency of AMD’s compute roadmap, Su welcomed Greg Brockman, president and co-founder of OpenAI, to the stage. Their conversation illuminated the practical realities facing organisations at the frontier of AI development.

Brockman described OpenAI’s compute situation in stark terms. Despite tripling their computational capacity and revenue annually over the past several years, demand consistently outpaces supply. “Every time we want to release a new feature, we want to produce a new model, we want to bring this technology to the world, we have a big fight internally over compute,” he explained. “Because there are so many things we want to launch and produce for all of you that we simply cannot because we’re in a compute constraint.”

His vision for the future proved even more expansive. Brockman articulated a world where computing becomes so integral to economic activity that GDP growth itself becomes a function of available computational capacity. “I think we’re moving to a world where GDP growth will itself be driven by the amount of compute that is available in a particular country, in a particular region,” he said. “And I think that we’re starting to see the first inklings of this.”

The OpenAI president offered concrete examples of AI’s transformative potential, including several healthcare anecdotes. He described a colleague whose husband experienced leg pain that emergency room doctors diagnosed as a pulled muscle. After symptoms worsened at home, they consulted ChatGPT, which suggested the possibility of a blood clot and recommended returning to the emergency room immediately. The diagnosis proved correct—deep vein thrombosis in the leg, along with two blood clots in the lungs. “If they just waited it out, that would have been likely fatal,” Brockman noted.

The conversation also touched on the evolving nature of AI workflows. Brockman described a shift from simple question-and-answer interactions to “objective workflows” where AI models might work autonomously for minutes, hours, or even days on complex tasks. He envisioned users operating entire “fleets of agents” simultaneously—ten different work streams running in parallel on a single developer’s behalf. The ultimate goal, as Brockman described it, is a world where “you wake up in the morning, and ChatGPT has taken items off your to-do list at home and at work.”

Reasoning Video and the Future of Multimodal AI

The keynote also featured Amit Jain, CEO and co-founder of Luma AI, who demonstrated advancements in video generation that point toward a more capable multimodal AI future. Jain articulated Luma’s mission as building “multimodal and general intelligence so AI can understand our world and help assimilate and improve it.”

He noted that most AI video models today remain in early stages, primarily focused on generating pixels and producing aesthetically pleasing imagery. Luma’s approach aims higher: training systems that simulate physics and causality, conduct research, utilise tools, and render results across audio, video, image, and text as appropriate. “In short, we are modelling and generating worlds,” Jain explained.

The demonstration featured Ray 3, which Jain described as “the world’s first reasoning video model.” The system can evaluate whether its intended output meets quality standards before rendering, and represents the first model capable of generating content in 4K and HDR formats.

Software Strategy: The Open Ecosystem Imperative

Su devoted significant attention to AMD’s software strategy, arguing that hardware represents only part of the equation. “We believe an open ecosystem is essential to the future of AI,” she stated. “Time and time again, we’ve seen that innovation actually gets faster when the industry comes together and aligns around an open infrastructure and shared technology standards.”

AMD’s software approach centres on ROCm, described as “the industry’s highest performance open software stack for AI.” The platform offers native support for major open-source projects including PyTorch, vLLM, SGLang, and Hugging Face—tools that collectively see more than one hundred million downloads monthly and are designed to work out-of-the-box on AMD hardware.

Quantum Doesn’t Get a Mention... Yet

The keynote did not explicitly address quantum computing or quantum-adjacent technologies. However, several elements resonate with themes relevant to the quantum computing community.

The MI430X platform, mentioned as part of AMD’s MI400 series portfolio, was specifically highlighted for “sovereign AI and supercomputing, where extreme accuracy matters the most.” Su described it as delivering “leadership hypercomputing capabilities for both high-precision scientific and AI data centres.” This emphasis on extreme precision computing for scientific applications represents territory where classical and quantum approaches may increasingly intersect.

The broader infrastructure vision—particularly the scale of computational requirements being projected and the emphasis on interconnecting thousands of accelerators into unified systems—parallels challenges familiar to quantum computing researchers. The networking architectures being developed for classical AI acceleration, including high-bandwidth, low-latency interconnects, may eventually inform hybrid classical-quantum system designs.

Additionally, Brockman’s comments about AI bridging scientific disciplines that “humanity has been unable to bridge” echo arguments frequently made about quantum computing’s potential role in molecular simulation, materials science, and drug discovery. As classical AI increasingly addresses problems in these domains, the question of where quantum advantage might emerge becomes more precisely defined.

That said, quantum computing enthusiasts should note the implicit message: the classical computing industry is mounting an extraordinary mobilisation to address AI’s computational demands. The yottascale vision represents a commitment to pushing classical architectures to their limits, potentially extending the timeline before quantum computing becomes essential for certain workloads.

Key Takeaways

First, the scale of classical compute investment is unprecedented: a hundredfold increase in three years, with projections of another hundredfold increase over the next five. This represents the most aggressive infrastructure buildout in computing history. For quantum computing advocates, this defines both the opportunity (the eventual limitations of classical scaling) and the competition for capital and attention.

Second, the convergence of AI and high-performance computing is accelerating. AMD’s portfolio strategy, spanning from consumer devices to exascale infrastructure, suggests these markets are increasingly unified around AI workloads. Quantum computing’s path to relevance likely runs through demonstrating advantage on problems that matter to this converged ecosystem.

Third, the “agentic computing” paradigm described by Brockman, in which AI systems operate autonomously on complex, extended tasks, creates new categories of computational demand. This may open opportunities for hybrid approaches where quantum resources handle specific subroutines within larger classical workflows.

Fourth, the emphasis on open standards and interoperability suggests that successful quantum computing platforms will need to integrate smoothly with classical infrastructure. AMD’s ROCm strategy, prioritising compatibility with widely-used frameworks, offers a model for quantum software ecosystem development.

Closing Remarks

Lisa Su’s CES 2026 keynote painted a picture of an industry in hypergrowth, racing to build infrastructure for a technological transformation that is still in its early chapters. The yottascale vision—requiring ten thousand times more compute than existed four years ago—represents both an engineering challenge of historic proportions and a statement about AI’s anticipated role in human civilisation.

For observers in adjacent fields, including quantum computing, the message is dual-edged. The classical computing industry’s mobilisation demonstrates the magnitude of opportunity in serving AI workloads, while simultaneously raising the bar for what quantum systems must achieve to claim practical advantage. As AMD’s Helios racks begin shipping later this year, the infrastructure for the next generation of AI capabilities will take physical form, setting the stage for what promises to be an intensely competitive decade in computing. The race to yottascale has begun. The question for quantum computing is whether, and when, the classical road runs out.