A team of researchers led by Mohammad Zarei has developed an approach for identifying rare failure modes in autonomous vehicle perception systems. Their paper, "AUTHENTICATION: Identifying Rare Failure Modes in Autonomous Vehicle Perception Systems using Adversarially Guided Diffusion Models," published on April 23, 2025, introduces a method that uses adversarially guided diffusion models to generate diverse environments that expose AI vulnerabilities, with the goal of improving the reliability of autonomous vehicles.

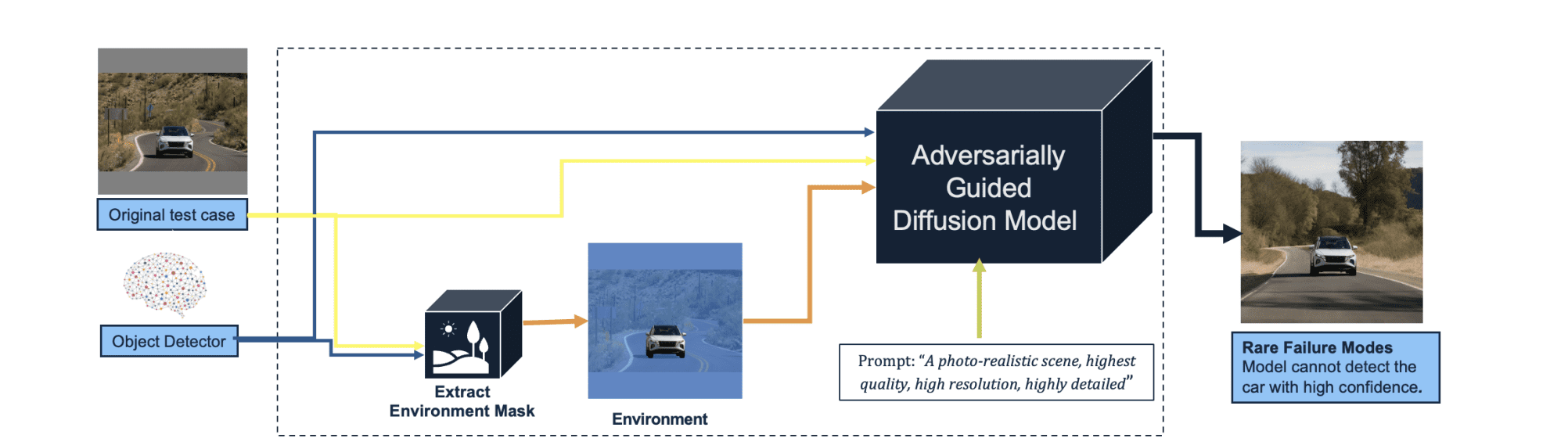

Autonomous vehicle perception systems often miss rare failure modes, even when trained on extensive data. This paper applies generative AI techniques to that long-tail challenge. The authors create segmentation masks for objects of interest, invert them into environmental masks, and combine these with text prompts to condition a custom diffusion model. The model generates diverse environments designed to evade object detection systems, exposing vulnerabilities in the underlying AI. Natural language descriptions of the resulting failure cases are intended to guide developers and policymakers in improving AV safety and reliability.
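The mask inversion step can be illustrated with a minimal sketch. It assumes a binary segmentation mask in which 1 marks the object of interest; inverting it yields an environmental mask that marks everything except the object as the region a generative model may repaint. The names `invert_mask` and `editable_fraction` and the toy 4x4 mask are hypothetical and are not taken from the paper.

```python
from typing import List

Mask = List[List[int]]  # binary mask: 1 = selected pixel, 0 = unselected


def invert_mask(object_mask: Mask) -> Mask:
    """Return the environmental mask: 1 where the object is absent, 0 where it is present."""
    return [[1 - pixel for pixel in row] for row in object_mask]


def editable_fraction(env_mask: Mask) -> float:
    """Share of pixels that the environmental mask leaves open for regeneration."""
    total = sum(len(row) for row in env_mask)
    return sum(sum(row) for row in env_mask) / total


# Toy example: a 2x2 object (e.g. a pedestrian) in the middle of a 4x4 image.
object_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

env_mask = invert_mask(object_mask)
fraction = editable_fraction(env_mask)
```

In this toy case the object pixels stay protected while the surrounding pixels are free to change, which is the property that lets the generated scene vary without altering the object the detector is supposed to find.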

The article explores how diffusion models, traditionally used to generate high-quality images, can create adversarial examples that test AI systems for vulnerabilities. Here’s a structured summary of the key points and insights:

- Introduction to Diffusion Models and Adversarial Attacks:
  - Diffusion models, such as Stable Diffusion, are known for their image generation capabilities.
  - Researchers are now using these models to create adversarial examples, which are inputs altered to mislead AI classifiers.

- Methodology:
  - Modification of diffusion models involves training them to generate images with perturbations that cause misclassification.
  - A loss function is introduced to balance misclassification encouragement and image realism.
  - Segmentation masks are used to target specific image regions, ensuring subtle and localized changes.

- Results:
  - Adversarial examples were effective in causing misclassifications across various classifiers.
  - The images remained visually plausible, making them hard to distinguish from genuine inputs.
  - Targeted perturbations via segmentation masks enhanced attack precision, highlighting potential vulnerabilities.

- Conclusion and Implications:
  - This approach marks a significant advancement in AI security, revealing system weaknesses and offering insights for improvement.
  - As diffusion models evolve, they could play dual roles in both attacking and defending AI systems.
  - Collaboration among researchers, developers, and security experts is crucial for enhancing AI robustness.

- Questions and Considerations:
  - The balance between misclassification encouragement and image realism in loss functions requires further exploration.
  - Understanding the types of classifiers tested (e.g., image recognition) could provide more context on attack applicability.
  - The implementation details of segmentation masks in model training are areas for deeper investigation.
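The Methodology points on balancing misclassification against realism and on confining changes to masked regions can be sketched as follows. The article does not give the paper's actual loss, so the weighted sum below is only an assumed, generic form, and `combined_loss`, `masked_blend`, and `adv_weight` are hypothetical names introduced for illustration.

```python
from typing import List

Image = List[List[float]]  # toy single-channel image
Mask = List[List[int]]     # 1 = pixel may be edited, 0 = pixel must be kept


def combined_loss(realism_loss: float,
                  detector_confidence: float,
                  adv_weight: float = 1.0) -> float:
    """Assumed objective: lower is better for the attacker.

    realism_loss        -- how far the image is from looking natural (>= 0)
    detector_confidence -- detector's confidence in the true object, in [0, 1]
    adv_weight          -- trade-off between fooling the detector and realism
    """
    if not 0.0 <= detector_confidence <= 1.0:
        raise ValueError("detector_confidence must lie in [0, 1]")
    if adv_weight < 0:
        raise ValueError("adv_weight must be non-negative")
    return realism_loss + adv_weight * detector_confidence


def masked_blend(original: Image, candidate: Image, edit_mask: Mask) -> Image:
    """Take candidate pixels where edit_mask is 1 and original pixels elsewhere."""
    return [
        [cand if m else orig for orig, cand, m in zip(o_row, c_row, m_row)]
        for o_row, c_row, m_row in zip(original, candidate, edit_mask)
    ]


# Two candidates with equal realism: the one the detector misses scores lower.
missed = combined_loss(realism_loss=0.2, detector_confidence=0.1)
detected = combined_loss(realism_loss=0.2, detector_confidence=0.9)

# Only the masked (environment) pixels are replaced; the rest stay untouched.
blended = masked_blend(
    original=[[1.0, 2.0], [3.0, 4.0]],
    candidate=[[9.0, 9.0], [9.0, 9.0]],
    edit_mask=[[1, 0], [0, 1]],
)
```

A larger `adv_weight` pushes the search toward images the detector misses at the cost of realism, while a smaller value favors natural-looking images; how the paper actually sets this trade-off is one of the open questions listed above.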

In summary, the article underscores the dual potential of diffusion models in AI security, emphasizing the need for ongoing research to enhance system resilience.

👉 More information

🗞 AUTHENTICATION: Identifying Rare Failure Modes in Autonomous Vehicle Perception Systems using Adversarially Guided Diffusion Models

🧠 DOI: https://doi.org/10.48550/arXiv.2504.17179