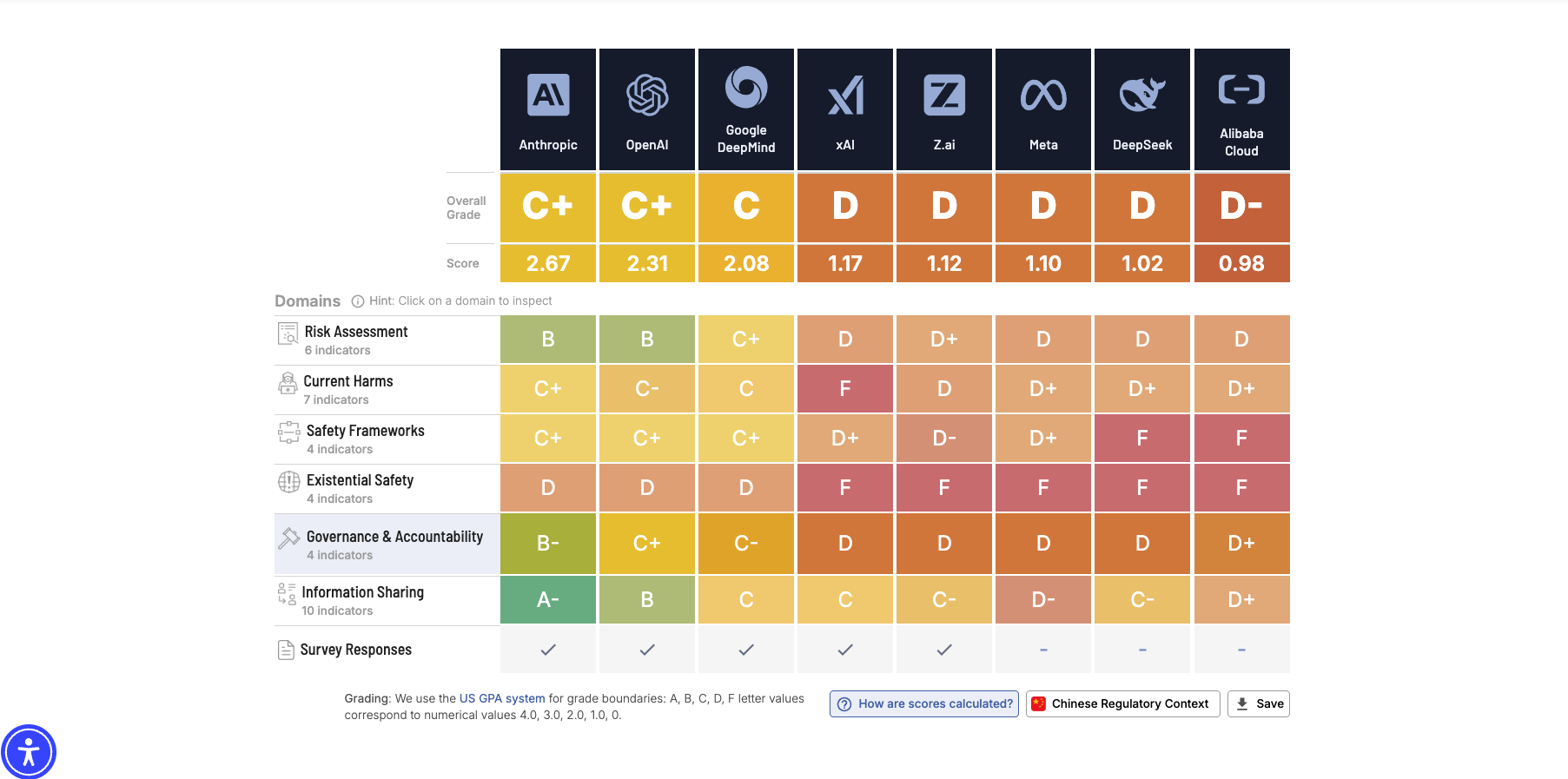

The Future of Life Institute (FLI) conducted an independent assessment, using an expert review panel, of how eight leading AI companies manage both immediate harms and catastrophic risks from advanced AI systems. The results, published in the Winter 2025 edition of the AI Safety Index, reveal a significant performance gap within the industry: Anthropic, OpenAI, and Google DeepMind consistently outperform xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud. FLI’s grading, based on the US GPA system, highlights Anthropic’s sustained lead, driven by transparent risk assessment and substantial investment in safety research, although reviewers also noted areas where its practices had deteriorated.

AI Safety Index Overview

The Future of Life Institute’s AI Safety Index assesses eight leading AI companies on how they manage both immediate harms and catastrophic risks. The evaluation, conducted by an expert review panel, reveals a sector struggling to match safety planning to rapid capability advances. The findings highlight a widening gap between ambition and safeguards, with even the top performers lacking concrete, long-term risk-management strategies. Grades follow the US GPA scale, from A+ (4.3) to F (0).

Anthropic, OpenAI, and Google DeepMind lead in safety practices, with Anthropic scoring highest in every domain. Even these leaders have room to improve; Anthropic, for example, did not include a human uplift trial in its latest risk assessment. A significant gap separates these three from the remaining companies (xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud). Although some of the latter show promising improvements, they still lag in risk-assessment disclosure, safety framework completeness, and robust governance structures such as whistleblowing policies.

Existential safety remains a core structural failure across the industry: no company has demonstrated a credible plan to prevent catastrophic misuse or loss of control, and for the second consecutive edition, none scored above a D in this domain. Because the evaluation relies on evidence collected up to November 8, 2025, it does not reflect recent releases such as Gemini 3 Pro or Claude Opus 4.5. The Index urges all companies to move beyond statements of concern and build evidence-based safeguards with clear triggers and thresholds.

Executive Summary Findings

The Future of Life Institute’s AI Safety Index reveals a concerning lag between AI capability and safety commitment across the industry. Anthropic, OpenAI, and Google DeepMind lead in safety practices, yet even these top performers lack the concrete safeguards, independent oversight, and long-term risk-management strategies that increasingly powerful AI systems require. Grades follow the US GPA system and reflect performance across domains such as risk assessment, current harms, and existential safety.

A significant gap exists between the top three companies and the rest of the field, including xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud. Though some progress is evident in the next tier – Meta’s new safety framework and Z.ai’s development of an existential risk plan – major gaps remain in risk assessment disclosure, framework completeness, and governance structures like whistleblowing policies. No company achieved a score above a ‘D’ in the critical domain of existential safety, highlighting a sector-wide structural failure.

Existential safety remains the core structural failure, with companies accelerating AGI/superintelligence ambitions without credible plans for preventing catastrophic misuse or loss of control. Reviewers noted that even stated commitments to existential safety haven’t translated into measurable safety plans, concrete alignment strategies, or internal control interventions. The evidence collected for this index is current up to November 8, 2025, and does not reflect recent releases like Google’s Gemini 3 Pro or OpenAI’s GPT-5.1.

Methodology of the AI Safety Index

The AI Safety Index assesses leading AI companies’ safety practices using indicators grouped into six domains: Risk Assessment, Current Harms, Safety Frameworks, Existential Safety, Governance & Accountability, and Information Sharing. Companies are graded on the standard US GPA scale (A+ to F) using evidence collected up to November 8, 2025. The Index aims to identify gaps between AI capabilities and safety preparedness, and it finds a widening disparity that demands immediate attention.

The grading process draws on both quantitative and qualitative evidence. Companies are evaluated on 30 indicators, covering aspects such as whistleblowing policies, external safety testing, and the completeness of safety frameworks. The assessment found a consistent failure in Existential Safety, with no company scoring above a ‘D’ in this domain for the second consecutive evaluation. The Index also notes that its data do not reflect releases after November 8, 2025, such as new Gemini, Grok, GPT, or Claude models.

Findings reveal a significant performance gap; Anthropic, OpenAI, and Google DeepMind consistently rank highest, while xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud lag behind. The report emphasizes the need for concrete safeguards, measurable thresholds, and independent oversight. Companies are urged to move beyond rhetoric and develop evidence-based plans for mitigating catastrophic risks, particularly concerning AGI/ASI development, or clarify their intentions regarding such systems.

Indicator and Company Selection

The AI Safety Index assesses leading AI companies on indicators across domains such as risk assessment, current harms, and existential safety. Under the Index’s US GPA grading, Anthropic, OpenAI, and Google DeepMind consistently rank highest. Even these top companies show gaps, however, such as Anthropic’s recent shift toward training on user interactions and the absence of a human uplift trial from its latest risk assessment. A significant disparity separates this leading tier from xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud.

A core structural failure across the sector is the lack of credible plans for existential safety; for the second consecutive edition, no company scored above a ‘D’ in this domain. Although companies articulate concerns about catastrophic risks, those concerns have not translated into concrete safeguards or alignment strategies. Recent releases such as Google’s Gemini 3 Pro, xAI’s Grok 4.1, OpenAI’s GPT-5.1, and Anthropic’s Claude Opus 4.5 are not reflected in the evaluation, which used data collected up to November 8, 2025.

There has been some progress: Meta and xAI have published structured safety frameworks, although these remain limited in scope, measurability, and independent oversight. Domestic regulations in China give some Chinese firms stronger baseline accountability on certain indicators than their Western counterparts. Overall, the Index highlights a persistent gap between published frameworks and actual safety practices, noting failures to meet requirements such as independent oversight and transparent threat modeling, and a widening disconnect between capability and safety.

We’ve published research and joined a broader working paper urging against optimizing on chains of thought: As we noted in the GPT-5 system card, “our commitment to keep our reasoning models’ CoTs as monitorable as possible (i.e., as faithful and legible as possible) allows us to conduct studies into our reasoning models’ behavior by monitoring their CoTs.” (OpenAI)

Evidence Collection Process

The AI Safety Index assesses companies based on indicators across several domains including Risk Assessment, Current Harms, Safety Frameworks, Existential Safety, Governance & Accountability, and Information Sharing. Grading utilizes the US GPA system, ranging from A+ to F, with numerical values corresponding to letter grades. The evaluation revealed a significant performance gap; Anthropic, OpenAI, and Google DeepMind led, while xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud lagged behind. This assessment is based on evidence collected up to November 8, 2025.

A core finding is the sector’s failure in existential safety, with no company scoring above a ‘D’ in this domain for the second consecutive evaluation. Companies discuss existential risks, but that discussion has not translated into concrete safety plans, alignment strategies, or internal controls. Reviewers noted a widening gap between capability ambition and safety commitment, even among top performers. The Index calls for evidence-based safeguards with clear triggers and realistic thresholds to address catastrophic risks, or for companies to clarify their intentions regarding AGI/ASI systems.

Several companies are taking steps to improve. Meta introduced a comprehensive safety framework with outcome-based thresholds, and xAI formalized its framework with quantitative thresholds. Reviewers nonetheless found both efforts limited in scope, measurability, and independent oversight. Likewise, while more companies now conduct risk assessments, these often lack breadth, validity, and genuinely independent review. Reviewers also noted that domestic regulations in China mandate content labeling and incident reporting, giving Chinese firms stronger baseline accountability on these points.

Grading System Explained

The AI Safety Index utilizes a grading system mirroring the US GPA scale, with letter grades (A+, A, A-, etc.) corresponding to numerical values (4.3, 4.0, 3.7, etc.) down to an F (0). This system is employed to assess eight leading AI companies on their management of both immediate harms and catastrophic risks associated with advanced AI. The evaluation, conducted with an expert review panel, reveals a gap between rapidly advancing AI capabilities and the development of adequate risk management and safety planning.
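The letter-to-number mapping described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the Index confirms only the endpoints (A+ = 4.3, F = 0) and the first steps (A = 4.0, A- = 3.7), so the remaining values follow the standard US GPA steps and are assumed. The helper names (`to_points`, `to_letter`, `average_grade`, `none_above`) are hypothetical, and the equal-weight averaging in `average_grade` is an illustrative assumption, not FLI’s published aggregation method.

```python
# Assumed letter-to-point mapping on the standard US GPA scale.
# Only A+ = 4.3, A = 4.0, A- = 3.7, and F = 0 are stated in the text;
# the intermediate steps (down to D- = 0.7) are assumptions.
GPA_POINTS = {
    "A+": 4.3, "A": 4.0, "A-": 3.7,
    "B+": 3.3, "B": 3.0, "B-": 2.7,
    "C+": 2.3, "C": 2.0, "C-": 1.7,
    "D+": 1.3, "D": 1.0, "D-": 0.7,
    "F": 0.0,
}


def to_points(letter: str) -> float:
    """Convert a letter grade such as 'B-' to its GPA value."""
    return GPA_POINTS[letter.strip().upper()]


def to_letter(points: float) -> str:
    """Return the highest letter grade whose value does not exceed `points`."""
    # Dicts preserve insertion order (Python 3.7+), so this scans from A+ down.
    for letter, value in GPA_POINTS.items():
        if points >= value - 1e-9:  # small tolerance for float rounding
            return letter
    return "F"


def average_grade(letters: list[str]) -> tuple[float, str]:
    """Hypothetical equal-weight average of domain grades (not FLI's formula)."""
    mean = sum(to_points(g) for g in letters) / len(letters)
    return round(mean, 2), to_letter(mean)


def none_above(letters: list[str], ceiling: str = "D") -> bool:
    """True if every grade is at or below `ceiling` (e.g. 'no score above a D')."""
    limit = to_points(ceiling)
    return all(to_points(g) <= limit for g in letters)


if __name__ == "__main__":
    # Hypothetical domain grades, not taken from the Index.
    print(average_grade(["B", "C+", "D"]))
    print(none_above(["D", "D-", "F"]))
```

With the hypothetical grades B, C+, and D, the equal-weight mean is 2.1, which maps back to a C because it falls below the C+ step at 2.3. The `none_above` check expresses the Index’s recurring finding that no company scored above a D in Existential Safety: every grade must map to at most 1.0 point.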

Findings indicate a significant performance disparity among companies. Anthropic, OpenAI, and Google DeepMind consistently rank highest, though even these leaders have areas to improve. A substantial gap separates this top tier from xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud, which show major deficits in risk assessment, safety frameworks, and governance structures such as whistleblowing policies. Overall grades run from Anthropic at the top down to markedly lower grades for the trailing companies, reflecting uneven commitment to AI safety.

Existential safety remains a core failure across the sector. No company has demonstrated a credible plan for preventing catastrophic misuse or loss of control of advanced AI. Notably, none achieved a score above a ‘D’ in this domain for the second consecutive evaluation. Despite increasing rhetoric about existential risks from leaders at firms like Anthropic and OpenAI, this hasn’t translated into concrete safety plans, mitigation strategies, or internal control interventions. The evidence used for this evaluation was collected up to November 8, 2025.

We maintain a bug bounty program through BugCrowd, and welcomes responsible disclosures from third parties via our coordinated vulnerability disclosure policy. In addition, OpenAI runs a Cybersecurity Grant Program to support research and development focused on protecting AI systems and infrastructure. (OpenAI)

Key Findings Summary

The AI Safety Index reveals a significant performance gap among leading AI companies. Anthropic, OpenAI, and Google DeepMind consistently lead in safety practices, with Anthropic receiving the highest scores in every domain. Even these top performers have areas for improvement, such as Anthropic’s missing human uplift trial and its shift toward training on user interactions. The remaining companies (xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud) lag behind, particularly in risk-assessment disclosure, safety framework completeness, and governance structures such as whistleblowing policies.

A core structural failure across the sector is existential safety; no company has demonstrated a credible plan for preventing catastrophic misuse or loss of control of advanced AI. While companies express ambition regarding AGI/superintelligence, this has not translated into concrete safety plans, alignment strategies, or internal monitoring. Reviewers noted that even the strongest performers lack safeguards and independent oversight, while the broader industry struggles to meet basic transparency and governance obligations, creating a widening gap between capability and safety.

Several companies have taken positive, if limited, steps forward. xAI and Meta have published structured safety frameworks, though these remain limited in scope and independent oversight. Internal and external evaluations of AI risks are also increasing, with xAI and Z.ai sharing more about their risk assessment processes. Chinese companies also benefit from domestic regulations requiring content labeling and incident reporting, which give them stronger baseline accountability on some indicators.

Company Performance Highlights

The Future of Life Institute’s AI Safety Index assesses eight leading AI companies, revealing a significant gap between capability advancement and safety preparedness. Anthropic, OpenAI, and Google DeepMind lead in safety practices, with Anthropic receiving the highest scores across all domains. However, even these top performers lack concrete safeguards and independent oversight for long-term risk management. The Index uses a US GPA system, with scores ranging from A+ to F, to evaluate performance across indicators like risk assessment and existential safety.

A substantial performance gap separates the leading three companies from the remaining tier: xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud. Although some of these companies show promising signs of improvement, they still have major gaps in key areas such as risk-assessment disclosure and governance structures, including whistleblowing policies. Meta and xAI have published safety frameworks, but these are limited in scope, measurability, and independent oversight.

Existential safety remains a core failure across the sector, with no company demonstrating a credible plan to prevent catastrophic misuse or loss of control of advanced AI. No company achieved a score above a ‘D’ in this domain for the second consecutive evaluation. The Index highlights a widening gap between accelerating AGI/superintelligence ambitions and the lack of corresponding safety plans, leaving the industry structurally unprepared for the risks it is creating.

Domain-Level Findings Analysis

Domain-level findings reveal a significant gap between leading AI companies on safety practices. Anthropic, OpenAI, and Google DeepMind consistently score highest, and Anthropic leads in every domain, although even Anthropic has slipped in some areas, for example by omitting a human uplift trial. The next tier (xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud) lags substantially, particularly in risk-assessment disclosure, comprehensive safety frameworks, and robust governance structures such as whistleblowing policies.

A core structural failure across the sector is existential safety; no company has demonstrated a credible plan to prevent catastrophic misuse or loss of control of advanced AI. While companies articulate ambitions around AGI and superintelligence, this has not translated into quantifiable safety plans, concrete alignment strategies, or internal control interventions. No company achieved a score above a ‘D’ in the existential safety domain, indicating a widespread lack of preparedness for extreme risks.

Despite some progress, companies’ safety practices generally fall short of emerging standards, including the EU AI Code of Practice. Reviewers found a consistent gap between published frameworks and actual implementation, noting failures in independent oversight, transparent threat modeling, measurable thresholds, and clearly defined mitigation triggers. Domestic regulations in China are noted as providing stronger baseline accountability for some indicators compared to Western counterparts, though overall the sector’s safety commitment lags behind its capability ambition.

Existential Safety Concerns

Existential safety is a core structural failure within the sector, with no company achieving above a ‘D’ grade in this domain for the second consecutive evaluation. While companies like Anthropic, OpenAI, Google DeepMind, and Z.ai discuss existential risks, this has not translated into concrete safety plans, alignment strategies, or internal monitoring. The findings reveal a widening gap between ambitions regarding AGI/superintelligence and the absence of credible control mechanisms, leaving the industry structurally unprepared for potential catastrophic risks.

The AI Safety Index highlights a significant gap between capability and safety commitment across the industry. Even leading companies lack concrete safeguards, independent oversight, and long-term risk management strategies necessary for powerful AI systems. All companies are urged to move beyond statements about existential safety and develop evidence-based safeguards with clear triggers and realistic thresholds to reduce catastrophic risk—or clarify their intentions regarding the pursuit of AGI/ASI.

Reviewers found that current risk assessments remain narrow in scope and lack external validity. Though some companies like xAI and Z.ai shared more about their risk assessment processes, disclosures still fall short in addressing key risk categories and demonstrating adequate testing. Truly independent external reviews are also lacking, hindering effective oversight. The findings underscore the need for increased transparency and robust evaluation of frontier AI risks.

Governance & Accountability Practices

The AI Safety Index assesses companies’ governance and accountability through four indicators, revealing significant disparities. While Anthropic received a B- in this domain, other companies—OpenAI, Google DeepMind, xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud—all received lower grades, ranging from C- to D. This evaluation considers factors like whistleblowing policies and internal safety practices, highlighting a need for improved transparency and structured accountability across the industry, particularly regarding risk mitigation strategies.

A key finding is the gap between stated commitments to existential safety and demonstrable safeguards. Despite rhetoric about existential risks from leaders at companies like Anthropic, OpenAI, and Google DeepMind, no company scored above a D in the “Existential Safety” domain. The index stresses a need for concrete, evidence-based safeguards, clear triggers, realistic thresholds, and monitoring mechanisms to address catastrophic risks, or a clear articulation of intentions not to pursue potentially dangerous systems.

The evaluation notes that companies’ safety practices generally fall short of emerging standards, including the EU AI Code of Practice. Reviewers consistently found deficiencies in independent oversight, transparent threat modeling, measurable thresholds, and clearly defined mitigation triggers. Even top performers lack credible long-term risk-management strategies, indicating a wider systemic issue where capability advances outpace safety commitments, leaving the frontier-AI ecosystem structurally unprepared.

Information Sharing and Transparency

The AI Safety Index reveals a significant disparity in information sharing and transparency among leading AI companies. Anthropic leads in this domain with an A-, while others, such as Meta and Alibaba Cloud, receive lower grades, including a D+. The Index assessed ten indicators in this domain and found that even top performers need to move beyond high-level statements about safety and demonstrate concrete safeguards with clear triggers and thresholds. Overall, companies lag in openly detailing their safety practices.

While some companies like xAI and Z.ai have begun sharing more about their risk assessment processes – joining Anthropic, OpenAI, and Google DeepMind – reviewers found disclosures incomplete. Key risk categories remain under-addressed, and external validity isn’t adequately tested, with concerns about the true independence of external reviewers. This lack of robust, verifiable information hinders independent evaluation and accountability within the rapidly evolving AI landscape, impacting overall transparency.

Notably, Chinese companies benefit from domestic regulations requiring content labeling and incident reporting, providing a stronger baseline accountability for certain indicators compared to Western counterparts. However, across the industry, reviewers underscore a gap between published governance frameworks and actual safety practices. Companies consistently fail to meet basic requirements like independent oversight, transparent threat modeling, and clearly defined mitigation triggers, indicating a broad need for improved information sharing and demonstrable safety measures.

Current Harms Assessment

The Future of Life Institute’s AI Safety Index assesses companies on managing both immediate harms and catastrophic risks from advanced AI. The evaluation, conducted with expert review, reveals a gap between rapidly advancing AI capabilities and adequate risk management planning. Findings highlight a structural failure in existential safety, with no company scoring above a D in this domain for the second consecutive edition. This suggests a concerning lack of credible plans to prevent misuse or loss of control as AGI/superintelligence ambitions accelerate.

Grades in the Current Harms domain, which the Index assesses through seven indicators, are low across the board: most companies received a D or F, and even Anthropic, OpenAI, and Google DeepMind scored no higher than C+. While Meta and xAI have begun publishing safety frameworks, these are limited in scope, measurability, and independent oversight. Reviewers note that disclosures often fall short, leaving key risk categories under-addressed and external validity untested by truly independent reviewers.

Despite some progress, the AI sector’s safety practices remain below emerging standards like the EU AI Code of Practice. Reviewers consistently point to a disconnect between published governance frameworks and actual safety practices. Companies often fail to meet basic requirements, including independent oversight, transparent threat modeling, measurable thresholds, and clearly defined mitigation triggers. The index emphasizes a need for concrete, evidence-based safeguards with realistic thresholds, monitoring, and control mechanisms.

Risk Assessment Procedures

The Future of Life Institute’s AI Safety Index assesses leading AI companies on managing both immediate harms and catastrophic risks. Grades follow the US GPA system, with letter grades mapped to numerical scores (A+ = 4.3, F = 0). The findings reveal a sector struggling to keep pace with rapid advances, with critical gaps in risk management. Anthropic, OpenAI, and Google DeepMind lead, but even these companies lack the concrete safeguards and independent oversight needed for increasingly powerful AI systems.

Existential safety represents a core structural failure across the industry. While companies pursue advanced AGI and superintelligence, none have demonstrated a credible plan to prevent misuse or loss of control; no company scored above a ‘D’ in this domain for the second consecutive evaluation. Rhetoric regarding existential risks has not translated into quantitative safety plans, concrete alignment strategies, or effective internal monitoring. Companies are urged to develop evidence-based safeguards with clear triggers and realistic thresholds.

Reviewers noted recent improvements in risk-assessment disclosure by xAI and Z.ai, which joined Anthropic, OpenAI, and Google DeepMind in sharing their processes. However, key risk categories remain under-addressed, external validity is untested, and external reviewers lack true independence. Chinese firms show stronger baseline accountability in some areas, owing to domestic regulations that require content labeling and incident reporting, as well as voluntary national technical standards.

OpenAI has developed and continues to improve incident response programs across key areas of its operations, including by improving and iterating on our AI safety incident-specific protocols that are tailored to our operations and technology. (OpenAI)

Internal & External Evaluations of AI Risks

The Future of Life Institute’s AI Safety Index assesses eight leading AI companies, revealing a sector struggling to manage rapidly advancing capabilities. The evaluation uses a US GPA system, with Anthropic consistently scoring highest across all domains. However, even top performers lack concrete safeguards and independent oversight needed for powerful AI systems. A core structural failure exists in existential safety, with no company achieving above a ‘D’ in this domain for the second consecutive edition, despite increasing ambition around AGI/superintelligence.

Recent steps show some promise: xAI and Meta have published structured safety frameworks, although these remain limited in scope and measurability. Meta’s framework includes outcome-based thresholds, but its mitigation triggers are set too high. Several companies, including Anthropic, OpenAI, Google DeepMind, xAI, and Z.ai, have increased transparency by conducting both internal and external evaluations of AI risks. Even so, reviewers note that the scope of risks covered remains narrow and that external reviews lack true independence.

The Index highlights a widening gap between capability and safety, underscoring the need for concrete, evidence-based safeguards. Companies need clear triggers, realistic thresholds, and demonstrated monitoring to show how they would align AGI/ASI, or else they should clarify that they will not pursue such systems. Evidence collection closed on November 8, 2025, so the Index does not reflect releases such as Google’s Gemini 3 Pro or Anthropic’s Claude Opus 4.5 in a rapidly evolving landscape.

Source: https://futureoflife.org/