Slot filling, a vital component of systems that understand spoken language, traditionally relies on separate speech recognition and natural language understanding processes. Kadri Hacioglu from Uniphore, Manjunath K E, and Andreas Stolcke from Uniphore now demonstrate a new approach using SpeechLLMs, powerful models that directly process speech and text together. This unified method promises greater efficiency and the ability to recognize a wider range of requests, including slot categories never seen during training, without requiring additional training data. The team establishes a benchmark for performance, identifies areas for improvement, and proposes enhancements to model design and training techniques, ultimately paving the way for more adaptable and effective spoken language understanding systems.

SpeechLLM Training for Conversational Slot Filling

This research details the development and training of a SpeechLLM, a large language model specifically designed for understanding spoken language and accurately identifying key information, known as slots, within conversations. The team focused on improving slot filling accuracy, a crucial task for building effective spoken dialogue systems, by exploring innovative training strategies and data annotation techniques. The core of the research involved multi-stage fine-tuning, a process where the model is progressively trained on increasingly specific data. This approach was compared to single-stage joint fine-tuning to determine its effectiveness.

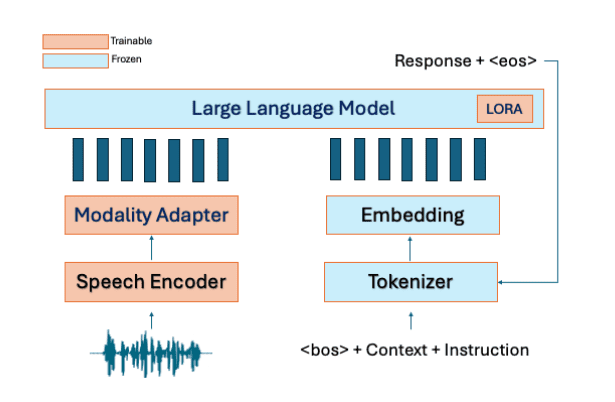

A key element was the use of GPT-4o to annotate the training data, extracting slots and their corresponding values from dialogue transcripts. Carefully designed prompts guided this annotation process, ensuring accuracy and consistency. The team also investigated parameter-efficient fine-tuning techniques, such as LoRA and QLoRA, to reduce the computational demands of training such a large model. Experiments demonstrated that multi-stage fine-tuning consistently outperformed single-stage approaches, while parameter-efficient techniques effectively reduced computational costs. The model achieved state-of-the-art performance on slot filling tasks. Because transformer training can be unstable, reaching that result required careful learning rate scheduling and warm-up periods. This research provides valuable insights into building more robust and accurate speech-based conversational AI systems.
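To make the cost saving from LoRA concrete, here is a minimal sketch of the arithmetic behind it. LoRA freezes a weight matrix of shape d_out x d_in and trains two low-rank factors of shapes d_out x r and r x d_in instead. QLoRA additionally stores the frozen base weights in 4-bit precision. The function name and the layer sizes used below are illustrative assumptions, not values reported for this work.

```python
def lora_param_counts(d_out, d_in, rank):
    """Return (full, lora) trainable-parameter counts for one weight matrix.

    full: parameters updated when the whole d_out x d_in matrix is fine-tuned.
    lora: parameters in the two low-rank factors B (d_out x rank) and
          A (rank x d_in) that LoRA trains while the base matrix stays frozen.
    """
    full = d_out * d_in
    lora = rank * (d_in + d_out)
    return full, lora


# Hypothetical layer size and rank, chosen only for illustration.
full, lora = lora_param_counts(d_out=4096, d_in=4096, rank=8)
print(full, lora, lora / full)
```

For this hypothetical 4096 x 4096 layer with rank 8, the low-rank factors hold 65,536 trainable parameters against 16,777,216 for the full matrix, which is about 0.39% of the original.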

GPT-4o Annotates Large Call Center Dataset

This study addresses the critical task of slot filling in spoken language understanding by systematically investigating methods to maximize the performance and generalization ability of speech-based language models, known as speechLLMs. Researchers curated a large dataset, CallCenter-A, comprising approximately 31,000 calls, totaling 2,100 hours of speech and nearly one million conversational turns, covering banking, telecommunications, insurance, and retail domains. To annotate this extensive corpus, the team harnessed the capabilities of GPT-4o, instructing it to identify and label slots representing real-world entities, dates, times, and numerals within each turn of the conversations. This approach enabled the creation of a richly annotated dataset without predefining a fixed set of slot labels, promoting broad and open-ended slot filling capabilities.

To further enhance model training, the researchers transformed the slot filling data into an instruction-based format, crucial for guiding speechLLMs. Instructions were carefully crafted to describe the task in natural language, and a diverse set of prompts was employed to improve generalization to unseen prompts during testing. The team introduced strategies to vary the context provided to the model, randomizing the number of preceding turns considered and incorporating distractors when querying specific slots, further increasing the robustness of the system. In addition to the primary slot filling dataset, the study incorporated auxiliary datasets for automatic speech transcription, spoken instruction tasks, and spoken query instruction tasks, facilitating modality alignment and preventing overfitting.
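The context randomization and distractor strategy described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' pipeline: the function `build_example`, its parameters, the instruction wording, and the choice to mark absent slots with `None` are all hypothetical.

```python
import random


def build_example(turns, target_index, gold_slots, label_pool,
                  max_context=4, max_distractors=3, rng=None):
    """Build one instruction-style slot-filling example (illustrative only)."""
    if rng is None:
        rng = random.Random()

    # Randomize how many preceding turns are supplied as context.
    n_context = rng.randint(0, min(max_context, target_index))
    context = turns[target_index - n_context:target_index]

    # Distractors are labels from the pool that do not occur in the target turn.
    candidates = sorted(set(label_pool) - set(gold_slots))
    n_distractors = rng.randint(0, min(max_distractors, len(candidates)))
    distractors = rng.sample(candidates, n_distractors)

    # Mix gold and distractor labels so their order carries no signal.
    queried = list(gold_slots) + distractors
    rng.shuffle(queried)

    instruction = (
        "Given the conversation context and the current turn, "
        "extract values for these slots: " + ", ".join(queried) + "."
    )
    # Distractor labels have no value in the turn, so they map to None.
    target = {label: gold_slots.get(label) for label in queried}
    return {
        "instruction": instruction,
        "context": context,
        "turn": turns[target_index],
        "target": target,
    }
```

Passing a seeded `random.Random` makes the sampled context size and distractors reproducible, which helps when comparing training runs.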

To rigorously evaluate performance, the team created a second evaluation dataset, CallCenter-B, consisting of 80 real call-center conversations, annotated using GPT-4o. This dataset differed acoustically from CallCenter-A and contained slot labels with only 48% overlap, allowing for assessment of out-of-domain generalization. Experiments were conducted using four NVIDIA A10G GPUs, leveraging the Hugging Face ecosystem for model development and training. The team systematically explored architectural decisions, training methods, and multitask learning strategies to approach optimal performance, ultimately demonstrating significant improvements in slot filling accuracy and generalization capabilities.
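The article does not say how the 48% label overlap was computed. One plausible reading, shown here purely as an assumption, is the fraction of distinct evaluation-set labels that also appear in the training set. The function name and the toy labels are hypothetical.

```python
def label_overlap(train_labels, eval_labels):
    """Fraction of distinct evaluation labels that also occur in training.

    This is one possible definition of "overlap"; the source does not
    specify which one was used.
    """
    train = set(train_labels)
    evaluation = set(eval_labels)
    if not evaluation:
        return 0.0
    return len(train & evaluation) / len(evaluation)


# Toy label sets, invented for illustration.
train = ["amount", "due_date", "city", "account_number"]
evaluation = ["amount", "city", "plan_name", "device_model"]
print(label_overlap(train, evaluation))
```

Under this definition, the remaining evaluation labels are the ones the model must handle zero-shot.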

LLM Fine-tuning Boosts Call Center Slot Filling

This work demonstrates substantial advancements in speech-based slot filling, a crucial task for spoken dialogue understanding. Researchers achieved a peak F1 score of 0.9160 on the CallCenter-A dataset using a fine-tuned large language model (LLM), significantly outperforming a vanilla LLM. This improvement was realized through a carefully designed training pipeline, incorporating data preparation, architectural decisions, and training strategies. The team meticulously prepared a dataset of approximately 31,000 call-center conversations, totaling almost 1 million turns and 2,100 hours of speech, annotated with slot labels and values using GPT-4o.
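For readers unfamiliar with the metric, the sketch below shows one common way to compute a slot-filling F1 score: micro-averaged over exact-match (label, value) pairs across turns. The article does not specify the matching rules used in the study, so the exact-match criterion, the one-value-per-label dictionary format, and the function name are assumptions.

```python
def slot_f1(gold_turns, pred_turns):
    """Micro-averaged F1 over exact-match (label, value) pairs.

    gold_turns and pred_turns are parallel lists of dicts that map a
    slot label to its value for each turn.
    """
    tp = fp = fn = 0
    for gold, pred in zip(gold_turns, pred_turns):
        gold_pairs = set(gold.items())
        pred_pairs = set(pred.items())
        tp += len(gold_pairs & pred_pairs)   # correct label and value
        fp += len(pred_pairs - gold_pairs)   # predicted but not in gold
        fn += len(gold_pairs - pred_pairs)   # in gold but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy data: one wrong date value costs both a false positive and a false negative.
gold = [{"amount": "120 dollars", "due_date": "March 3rd"}, {"city": "Austin"}]
pred = [{"amount": "120 dollars", "due_date": "March 4th"}, {"city": "Austin"}]
print(slot_f1(gold, pred))
```

In the toy data, two of three predicted pairs are correct and two of three gold pairs are recovered, so precision, recall, and F1 all equal 2/3.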

To enhance generalization, the annotation process avoided priming the LLM with specific slot labels, instead instructing it to identify mentions of real-world entities, events, dates, times, and numerals. This instruction-based training dataset incorporated randomized context sizes and varying numbers of distractor slots to improve robustness and adaptability. Experiments revealed the importance of modality adapters, demonstrating that their complexity directly impacts performance. Furthermore, the team found that multi-stage training is essential for faster and better modality alignment, enabling the system to effectively integrate speech and textual information.

Starting from foundational speechLLMs resulted in a significant performance advantage. To assess real-world performance, the researchers created a new evaluation dataset, CallCenter-B, comprising 80 calls and 4,495 turns, annotated similarly to CallCenter-A. This dataset differed acoustically and contained slot labels with only 48% overlap with CallCenter-A, providing a challenging test of the system’s generalization capabilities. These results demonstrate a clear path toward more accurate and robust speech-based slot filling systems for practical applications.

SpeechLLMs Excel at Open-Ended Slot-Filling

This research successfully demonstrates the potential of speechLLMs for open-ended slot-filling, a crucial task in spoken language understanding. Through systematic experimentation with architectural components, training strategies, and multitask learning, the team achieved substantial performance improvements over a simple baseline system.

👉 More information

🗞 SpeechLLMs for Large-scale Contextualized Zero-shot Slot Filling

🧠 ArXiv: https://arxiv.org/abs/2510.15851