Researchers demonstrate a fully integrated photonic neural network trained on-chip with backpropagation, the standard algorithm for training digital neural networks. Because all linear and nonlinear operations run on a single chip, training remains scalable and robust despite device variations, and the network matches the performance of ideal digital models in data classification tasks.

The pursuit of more efficient computation increasingly focuses on photonic neural networks (PNNs), which use light rather than electrons to perform calculations and so offer potential advantages in speed and energy consumption. Realising that potential requires effective training methods, and these have traditionally been hampered by the difficulty of implementing error backpropagation, a core algorithm for training digital neural networks, directly on a chip. Farshid Ashtiani, Mohamad Hossein Idjadi, and Kwangwoong Kim of Nokia Bell Labs now report an advance on this front in their article, “Integrated photonic deep neural network with end-to-end on-chip backpropagation training”. Their work demonstrates a fully integrated photonic deep neural network that performs all linear and nonlinear operations, including those required for error backpropagation, entirely on a single chip. Training on the chip itself overcomes limitations imposed by device variations and paves the way for scalable and robust photonic computing systems.

Integrated photonic neural networks (PNNs) offer a potential alternative to conventional electronic systems, and this work demonstrates a fully integrated deep neural network that trains on-chip using the backpropagation (BP) algorithm. Backpropagation is a supervised learning algorithm for training artificial neural networks: it adjusts the network’s internal parameters, the weights, to minimise the difference between predicted and actual outputs. Running it on-chip addresses a key limitation in the field, namely the difficulty of implementing scalable on-chip activation gradients, which are essential for effective training. Previous PNNs have frequently depended on digital co-processors for BP or employed gradient-free algorithms, which restricts their capabilities.

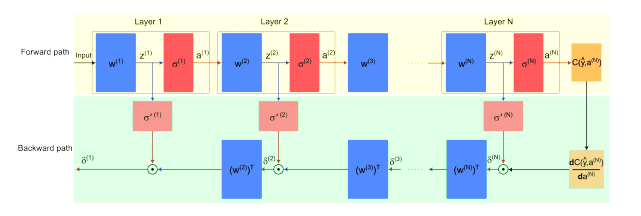

The demonstrated system executes all linear and nonlinear operations directly on a single chip, enabling robust and scalable training despite typical fabrication-induced device variations. Researchers achieve this through an architecture prioritising hardware reuse, substantially reducing the complexity and size of the photonic circuit. Specifically, nonlinear activation functions – both sigmoid and rectified linear unit (ReLU) – are implemented using Mach-Zehnder Interferometers (MZIs) and Microring Resonators (MRRs). MZIs split light into two paths and recombine them, creating interference patterns dependent on the phase difference between the paths, while MRRs are circular waveguides that resonate at specific wavelengths. Critically, these same circuits perform the gradient calculation required for backpropagation, accomplished through mode switching, where electrical or optical control alters the circuit’s function depending on whether the network is in the forward or backward pass.
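The idea of one circuit serving both passes can be pictured in software. The Python sketch below is only an analogy, not a model of the photonic hardware: the class name `ActivationBlock`, its `mode` attribute and the string mode labels are hypothetical, and the sigmoid and ReLU formulas are the textbook definitions rather than the measured response of the chip's MZIs or MRRs.

```python
import math


class ActivationBlock:
    """Toy stand-in for one activation circuit that is reused in both passes.

    In "forward" mode it returns the activation f(z); in "backward" mode the
    same object returns the gradient f'(z) needed by backpropagation.
    """

    def __init__(self, kind):
        if kind not in ("sigmoid", "relu"):
            raise ValueError("kind must be 'sigmoid' or 'relu'")
        self.kind = kind
        self.mode = "forward"

    def set_mode(self, mode):
        # Hypothetical analogue of the electrical or optical control signal.
        if mode not in ("forward", "backward"):
            raise ValueError("mode must be 'forward' or 'backward'")
        self.mode = mode

    def __call__(self, z):
        if self.kind == "sigmoid":
            s = 1.0 / (1.0 + math.exp(-z))
            return s if self.mode == "forward" else s * (1.0 - s)
        # ReLU: pass positive inputs through; gradient is a step function.
        if self.mode == "forward":
            return max(0.0, z)
        return 1.0 if z > 0 else 0.0


block = ActivationBlock("sigmoid")
forward_value = block(0.0)    # 0.5
block.set_mode("backward")
backward_value = block(0.0)   # 0.25, i.e. 0.5 * (1 - 0.5)
```

Keeping the activation and its gradient in one object mirrors the hardware-reuse argument: nothing extra has to be built for the backward pass, only the mode changes.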

The system employs a standard backpropagation algorithm, calculating errors and updating weights through successive forward and backward passes. A Hadamard (element-wise) product block supports the weight updates by multiplying the transposed output, element by element, with the gradient of the activation function. Data is loaded via a microcontroller and custom electronics, using digital-to-analog converters (DACs) and analog-to-digital converters (ADCs). Intermediate results, such as weighted sums and neural outputs, are stored in on-chip memory.
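As a rough software illustration of these forward and backward passes, the Python sketch below trains a tiny two-layer sigmoid network with textbook backpropagation. It is not the authors' photonic implementation: the XOR toy dataset, the layer sizes, the starting weights, the learning rate and all function names are assumptions chosen for the example. The stored `zs` and `acts` lists play the role of the intermediate weighted sums and neural outputs that the text says are kept in memory.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)


def forward(x, layers):
    """Forward pass; keeps weighted sums (zs) and outputs (acts) for later."""
    zs, acts = [], [x]
    for W, b in layers:
        z = [sum(w * a for w, a in zip(row, acts[-1])) + bj
             for row, bj in zip(W, b)]
        zs.append(z)
        acts.append([sigmoid(v) for v in z])
    return zs, acts


def backward(zs, acts, target, layers):
    """Backward pass; returns (weight gradient, bias gradient) per layer."""
    # Output error: loss gradient (a - t) times the activation gradient.
    delta = [(a - t) * sigmoid_grad(z)
             for a, t, z in zip(acts[-1], target, zs[-1])]
    grads = []
    for k in range(len(layers) - 1, -1, -1):
        W, _ = layers[k]
        grads.append(([[d * a for a in acts[k]] for d in delta], list(delta)))
        if k > 0:
            # Transposed weights carry the error back one layer ...
            back = [sum(W[j][i] * delta[j] for j in range(len(W)))
                    for i in range(len(W[0]))]
            # ... then a Hadamard product with the activation gradient.
            delta = [bi * sigmoid_grad(z) for bi, z in zip(back, zs[k - 1])]
    grads.reverse()
    return grads


def train_epoch(data, layers, lr):
    """One full-batch gradient-descent step; returns the loss before it."""
    n = len(data)
    total = [([[0.0] * len(W[0]) for _ in W], [0.0] * len(b))
             for W, b in layers]
    loss = 0.0
    for x, t in data:
        zs, acts = forward(x, layers)
        loss += 0.5 * sum((a - ti) ** 2 for a, ti in zip(acts[-1], t))
        for (aW, ab), (gW, gb) in zip(total, backward(zs, acts, t, layers)):
            for j in range(len(gW)):
                ab[j] += gb[j]
                for i in range(len(gW[j])):
                    aW[j][i] += gW[j][i]
    for (W, b), (aW, ab) in zip(layers, total):
        for j in range(len(W)):
            b[j] -= lr * ab[j] / n
            for i in range(len(W[j])):
                W[j][i] -= lr * aW[j][i] / n
    return loss / n


# Toy nonlinear task (XOR) and fixed, arbitrary starting weights.
xor = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]),
       ([1.0, 0.0], [1.0]), ([1.0, 1.0], [0.0])]
layers = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.1, -0.1]),  # hidden layer: 2 inputs -> 2
    ([[0.7, -0.6]], [0.05]),                   # output layer: 2 -> 1
]
losses = [train_epoch(xor, layers, lr=0.5) for _ in range(200)]
```

Comparing `losses[0]` with `losses[-1]` shows whether the updates are reducing the error; the sketch makes no claim about reaching any particular accuracy.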

Experimental results demonstrate the network’s ability to perform two nonlinear data classification tasks with accuracy and robustness comparable to an ideal digital model. The successful on-chip gradient descent training indicates that the approach could generalise across photonic neural network architectures, paving the way for next-generation AI systems capable of reliable and efficient operation.

The approach contrasts with previous photonic neural networks, which often rely on off-chip digital processing for training or employ gradient-free algorithms that limit versatility. The core innovation lies in the complete on-chip implementation of the backpropagation algorithm, with photonic circuits performing both the weighted sums and the nonlinear activation functions. This minimises data movement, a major source of energy consumption in traditional neural network training, and allows for scalable training despite variations in device performance. Hardware reuse, achieved through modified weight blocks and mode-switching activation, further reduces chip area and complexity, contributing to a more compact and efficient design.

👉 More information

🗞 Integrated photonic deep neural network with end-to-end on-chip backpropagation training

🧠 DOI: https://doi.org/10.48550/arXiv.2506.14575