Research demonstrates that GraphLLMs, which combine large language models with graph data, are significantly vulnerable to adversarial attacks on textual node attributes, graph structure, and prompts. Experiments across six datasets show that even minor textual alterations can mislead these models, and the authors investigate data-augmented training and adversarial training as potential defences.

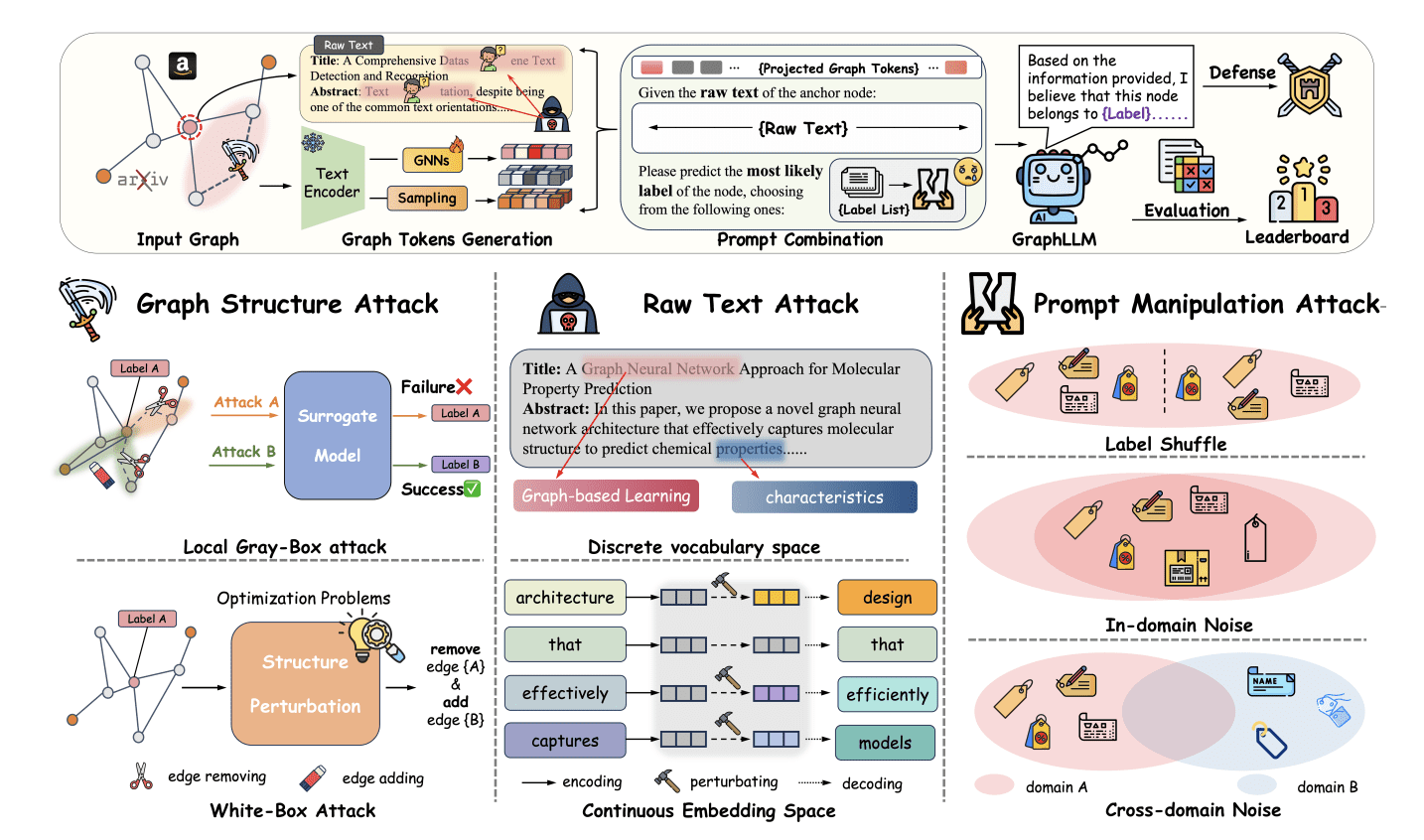

Large language models (LLMs) are increasingly applied beyond natural language processing to graph-structured data, giving rise to GraphLLMs, which combine textual node attributes, network topology, and task-specific prompts to reason over complex relationships. However, their susceptibility to deliberate manipulation, or adversarial attacks, remains largely unexamined, which poses a significant risk for deployment in critical applications. Researchers at New York University and NYU Shanghai, including Qihai Zhang, Yuanfu Sun, Xinyue Sheng, and Qiaoyu Tan, now present a comprehensive evaluation of GraphLLM robustness in their paper, TrustGLM: Evaluating the Robustness of GraphLLMs Against Prompt, Text, and Structure Attacks. The work systematically assesses vulnerabilities arising from perturbations to node text, graph structure, and prompt design, and explores mitigation strategies based on data augmentation and adversarial training.

Graph large language models (GraphLLMs), which integrate the capabilities of LLMs with structured graph data, are a promising avenue for innovation in fields such as knowledge discovery and pharmaceutical design. However, a systematic evaluation reveals significant vulnerabilities to adversarial attacks, a critical concern as deployment expands into sensitive areas. The study assesses GraphLLMs along three dimensions, namely the textual attributes of nodes, the integrity of the graph structure, and the prompt, and finds them susceptible in all three.

The investigation begins with alterations to the textual descriptions attached to nodes. Even minor changes, such as replacing a few words with synonyms, readily cause incorrect predictions. This suggests that current GraphLLMs rely heavily on surface-level textual cues rather than a deeper semantic understanding of the content, which could allow malicious actors to manipulate outputs: the models appear to prioritise lexical matching over genuine comprehension of the concepts the nodes represent.
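The synonym-substitution idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the attack used in the paper: the synonym table, the substitution budget, and the keyword-based "classifier" standing in for a GraphLLM are all hypothetical. It shows how a model that keys on a surface word changes its prediction when that word is swapped for a near-synonym.

```python
from typing import Dict

# Hypothetical synonym table; practical attacks usually draw candidates
# from word embeddings or a thesaurus and check semantic similarity.
SYNONYMS: Dict[str, str] = {
    "neural": "connectionist",
    "method": "approach",
    "graph": "network",
}


def synonym_substitute(text: str, synonyms: Dict[str, str], budget: int) -> str:
    """Replace at most `budget` whitespace-separated words with a listed synonym."""
    words = text.split()
    replaced = 0
    for i, word in enumerate(words):
        if replaced >= budget:
            break
        if word.lower() in synonyms:
            words[i] = synonyms[word.lower()]
            replaced += 1
    return " ".join(words)


def keyword_classifier(text: str) -> str:
    """Hypothetical toy stand-in for a model that relies on one surface keyword."""
    return "machine_learning" if "neural" in text.lower().split() else "other"


original = "A neural method for graph learning"
attacked = synonym_substitute(original, SYNONYMS, budget=2)
print(attacked)                      # A connectionist approach for graph learning
print(keyword_classifier(original))  # machine_learning
print(keyword_classifier(attacked))  # other
```

The sentence means almost the same thing after the edit, yet the toy prediction flips; this is the failure mode described above, reduced to its simplest form.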

The researchers then turn to the graph structure itself. A graph represents data as nodes (entities) connected by edges (relationships), and a GraphLLM's reasoning depends on that structure being correct. Perturbing the connections between nodes causes substantial performance degradation, indicating that the models rely on precise connectivity and generalise poorly to even slight deviations from the graphs they were trained on. An adversary could exploit this by strategically adding or removing edges, disrupting the flow of information between nodes and compromising predictive accuracy.
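Edge perturbation can likewise be sketched in Python. The sketch assumes a plain undirected graph stored as a set of node pairs and uses a hypothetical neighbour-majority-vote predictor in place of a GraphLLM. `flip_edges` is only a random baseline that toggles randomly chosen node pairs; optimised structure attacks choose which pairs to flip far more carefully. The targeted example shows how removing one edge and adding another can flip a structure-dependent prediction.

```python
import itertools
import random
from collections import Counter
from typing import Dict, FrozenSet, List, Set

Edge = FrozenSet[int]  # undirected edge stored as an unordered node pair


def flip_edges(nodes: List[int], edges: Set[Edge], budget: int, seed: int = 0) -> Set[Edge]:
    """Random structure perturbation: toggle `budget` distinct node pairs.

    An absent pair becomes an edge (insertion); an existing edge is removed.
    """
    rng = random.Random(seed)
    candidates = [frozenset(pair) for pair in itertools.combinations(nodes, 2)]
    perturbed = set(edges)
    for pair in rng.sample(candidates, budget):
        if pair in perturbed:
            perturbed.remove(pair)
        else:
            perturbed.add(pair)
    return perturbed


def neighbour_vote(node: int, edges: Set[Edge], labels: Dict[int, str]) -> str:
    """Hypothetical toy predictor: majority label among labelled neighbours."""
    neighbours = [next(iter(e - {node})) for e in edges if node in e]
    votes = Counter(labels[n] for n in neighbours if n in labels)
    return votes.most_common(1)[0][0] if votes else "unknown"


labels = {1: "A", 2: "A", 3: "B", 4: "B"}
edges = {frozenset({0, 1}), frozenset({0, 2}), frozenset({0, 3})}
print(neighbour_vote(0, edges, labels))  # A (two A neighbours vs one B)

# Targeted attack: remove one edge and insert another.
attacked = (edges - {frozenset({0, 1})}) | {frozenset({0, 4})}
print(neighbour_vote(0, attacked, labels))  # B (one A neighbour vs two B)

# Random baseline: exactly three node pairs end up different from the original.
noisy = flip_edges(list(range(5)), edges, budget=3, seed=0)
print(len(noisy ^ edges))  # 3
```

Two edge edits out of three are enough to change node 0's prediction here, which mirrors the sensitivity to connectivity described above.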

The study also shows that GraphLLMs can be manipulated through the prompt itself: carefully altered prompts can elicit unintended or incorrect responses. Such prompt attacks exploit the models' sensitivity to input phrasing and reveal a lack of robustness to subtle variations in how a question is posed, underlining the need for security considerations across every aspect of model design and deployment.
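One simple prompt perturbation, assumed here for illustration, keeps the task and the candidate labels identical and only reorders the labels listed in the prompt. The Python sketch below uses a hypothetical template and two hypothetical toy "models": one answers from the node text alone, the other always picks the first listed label. Checking every cyclic rotation of the label list is one cheap way to test whether a model's answer depends on label order.

```python
import random
from typing import Callable, List

# Hypothetical node-classification prompt template.
TEMPLATE = (
    "Node description: {text}\n"
    "Which category does this node belong to? Choose one of: {choices}.\n"
    "Answer:"
)


def build_prompt(text: str, labels: List[str]) -> str:
    return TEMPLATE.format(text=text, choices=", ".join(labels))


def shuffled_label_prompt(text: str, labels: List[str], seed: int) -> str:
    """Same task and label set; only the order of the candidate labels changes."""
    order = labels[:]
    random.Random(seed).shuffle(order)
    return build_prompt(text, order)


def listed_choices(prompt: str) -> List[str]:
    """Recover the candidate labels from a prompt built with TEMPLATE."""
    return prompt.split("Choose one of: ")[1].split(".\n")[0].split(", ")


def order_invariant(model: Callable[[str], str], text: str, labels: List[str]) -> bool:
    """True if the model answers the same for every cyclic rotation of the labels."""
    rotations = [labels[k:] + labels[:k] for k in range(len(labels))]
    answers = {model(build_prompt(text, order)) for order in rotations}
    return len(answers) == 1


def content_model(prompt: str) -> str:
    # Toy model that answers from the node text only.
    return "Machine Learning" if "neural" in prompt else "Theory"


def first_listed_model(prompt: str) -> str:
    # Toy model with a position bias: always picks the first listed label.
    return listed_choices(prompt)[0]


labels = ["Databases", "Machine Learning", "Networking", "Theory"]
text = "A neural method for graph learning"
print(shuffled_label_prompt(text, labels, seed=1))
print(order_invariant(content_model, text, labels))       # True
print(order_invariant(first_listed_model, text, labels))  # False
```

Nothing about the question changes between rotations, so any change in the answer comes purely from prompt presentation.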

To support ongoing evaluation and further work, the researchers release an open-source library alongside the paper. It provides tools for generating adversarial attacks, evaluating model robustness, and implementing defence mechanisms, with the aim of fostering collaboration and accelerating progress toward secure and reliable GraphLLMs.

The study details the experimental setup, including the datasets, model architectures, and evaluation metrics. To support the generality of the findings, the researchers use a diverse range of datasets and GraphLLM architectures. Datasets included knowledge graphs and biological networks, while model architectures spanned various transformer-based approaches adapted for graph data.
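The paper's exact metrics are not reproduced here, but robustness evaluations of this kind commonly compare accuracy on clean inputs with accuracy on attacked inputs. The Python sketch below computes clean accuracy, attacked accuracy, their difference, and an attack success rate, defined here (as an assumption) as the fraction of originally correct predictions that the attack turns into errors. The predictions are made-up toy values, not results from the study.

```python
from typing import Dict, Sequence


def accuracy(preds: Sequence[str], gold: Sequence[str]) -> float:
    if len(preds) != len(gold) or not gold:
        raise ValueError("need equal-length, non-empty prediction and label lists")
    return sum(p == g for p, g in zip(preds, gold)) / len(gold)


def robustness_report(clean: Sequence[str], attacked: Sequence[str],
                      gold: Sequence[str]) -> Dict[str, float]:
    clean_acc = accuracy(clean, gold)
    attacked_acc = accuracy(attacked, gold)
    # Indices the model got right before the attack.
    was_correct = [i for i, (p, g) in enumerate(zip(clean, gold)) if p == g]
    # Assumed definition: share of those that the attack turned into errors.
    flipped = sum(attacked[i] != gold[i] for i in was_correct)
    return {
        "clean_acc": clean_acc,
        "attacked_acc": attacked_acc,
        "drop": clean_acc - attacked_acc,
        "attack_success_rate": flipped / len(was_correct) if was_correct else 0.0,
    }


# Toy predictions for four nodes (illustrative values only).
gold = ["A", "B", "A", "B"]
clean = ["A", "B", "A", "A"]
attacked = ["B", "B", "A", "A"]
print(robustness_report(clean, attacked, gold))
```

Reporting the drop alongside both accuracies keeps a model that was already weak on clean data from looking deceptively robust.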

The researchers also analyse the computational cost of different defence mechanisms, which bears directly on their practicality for real-world deployment. Some defences that substantially improve robustness also significantly increase computational cost, highlighting the need for efficient and scalable solutions. Adversarial training, for example, exposes the model to perturbed data during training; it can improve robustness but requires substantial additional computation, because the perturbed examples must be generated and processed on top of the clean ones.
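The two defence families mentioned in the paper, data-augmented training and adversarial training, differ mainly in how the perturbed training examples are chosen, and that choice drives the extra cost. The Python sketch below is a simplified illustration under assumptions: examples are (node text, label) pairs, `drop_word` is a hypothetical random perturbation, and `loss` is any function scoring how badly the current model handles an input. Augmentation adds random perturbed copies; the adversarial inner step instead keeps the candidate with the highest loss, following the usual min-max formulation of adversarial training rather than any specific procedure from the paper.

```python
import random
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (node text, label)


def drop_word(text: str, rng: random.Random) -> str:
    """Hypothetical random perturbation: delete one word if more than one remains."""
    words = text.split()
    if len(words) > 1:
        del words[rng.randrange(len(words))]
    return " ".join(words)


def augment(dataset: List[Example], perturb: Callable[[str, random.Random], str],
            copies: int, seed: int = 0) -> List[Example]:
    """Data-augmented training set: originals plus `copies` perturbed variants each.

    Labels are kept unchanged, so each epoch processes (1 + copies) times as many examples.
    """
    rng = random.Random(seed)
    out = list(dataset)
    for text, label in dataset:
        for _ in range(copies):
            out.append((perturb(text, rng), label))
    return out


def adversarial_pick(text: str, label: str, candidates: List[str],
                     loss: Callable[[str, str], float]) -> str:
    """Inner maximisation of adversarial training: keep the hardest candidate.

    Scoring every candidate with the current model is what makes this step
    costlier than random augmentation.
    """
    return max(candidates, key=lambda c: loss(c, label))


def toy_loss(text: str, label: str) -> float:
    # Hypothetical model that is only confident when it sees "neural" (label ignored).
    return 0.1 if "neural" in text.split() else 2.0


data = [("neural nets for graphs", "ml"), ("b-tree index design", "db")]
augmented = augment(data, drop_word, copies=2)
print(len(augmented))  # 6 = 2 originals + 2 * 2 perturbed copies
print(adversarial_pick("neural nets for graphs", "ml",
                       ["neural nets for graphs", "connectionist nets for graphs"],
                       toy_loss))  # connectionist nets for graphs
```

With `copies=k`, each epoch costs roughly (1 + k) times as much as clean training, and the adversarial step adds one model evaluation per candidate, which is where the overhead discussed above comes from.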

The study stresses the trade-offs between robustness, accuracy, and computational cost when designing secure GraphLLM systems. The researchers show that a significant gain in robustness is often achievable without sacrificing accuracy or substantially increasing computational cost. Optimisation techniques and efficient model architectures can help offset the computational overhead of robustness enhancements.

The researchers acknowledge the limitations of their study: they consider only a limited number of attack algorithms and defence strategies, and the evaluation covers a relatively small number of datasets. They nonetheless present the work as a valuable starting point for future research in this area.

The study concludes by highlighting the growing importance of security and robustness in the field of graph machine learning. As GraphLLMs become increasingly prevalent in critical applications, it is essential to address the potential vulnerabilities and develop effective defence mechanisms. Researchers hope that their work will contribute to the development of more secure and reliable GraphLLM systems, enabling the widespread adoption of this powerful technology.

👉 More information

🗞 TrustGLM: Evaluating the Robustness of GraphLLMs Against Prompt, Text, and Structure Attacks

🧠 DOI: https://doi.org/10.48550/arXiv.2506.11844