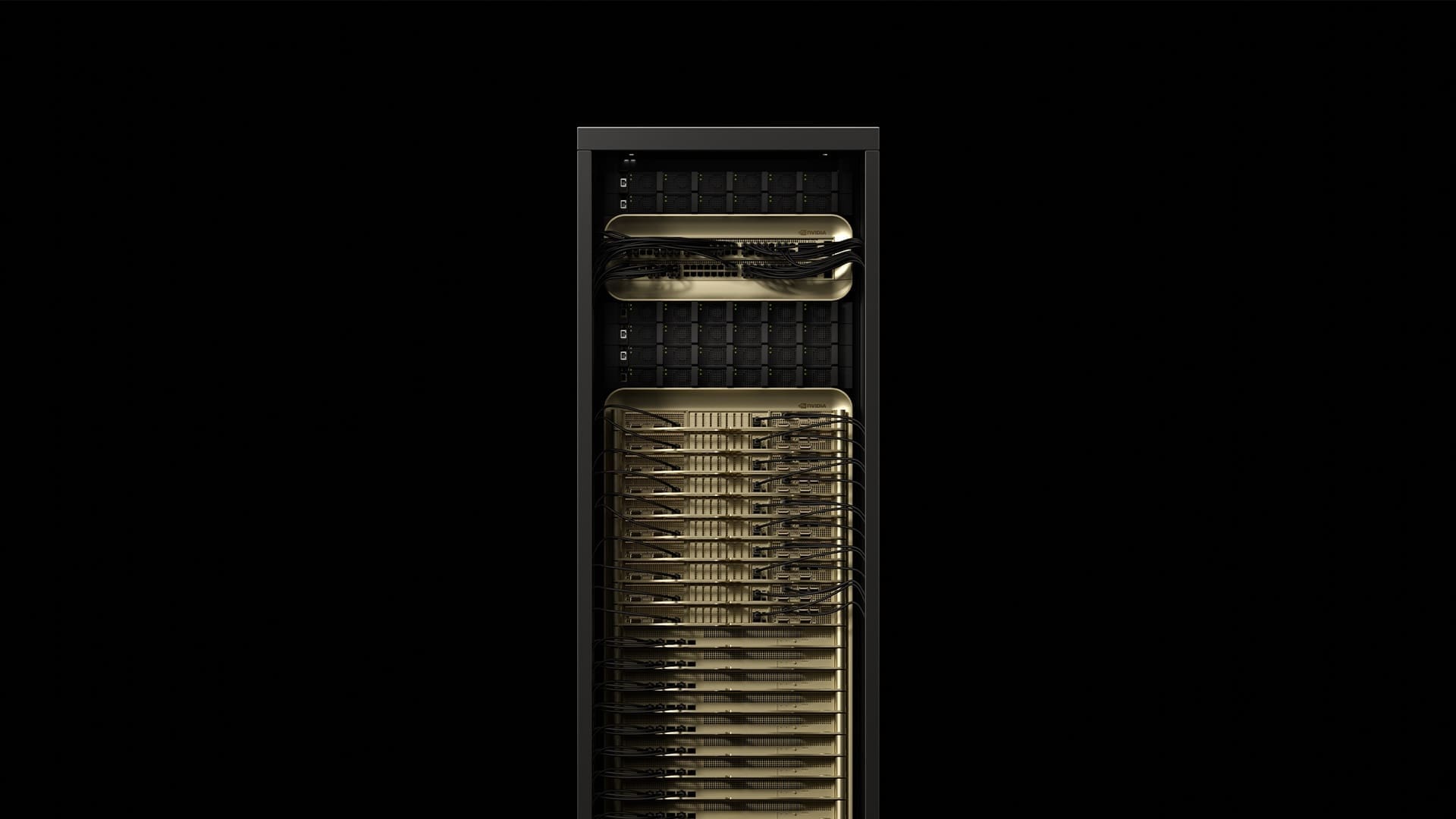

NVIDIA announced its Blackwell Ultra AI Factory Platform at GTC on March 18, 2025. The platform is designed to advance AI reasoning, agentic AI, and physical AI applications. It boosts both training and test-time scaling inference, in which additional compute is applied during inference to improve accuracy and efficiency on complex workloads. The platform includes the GB300 NVL72 rack-scale solution and the HGX B300 NVL16 system, both of which offer significant performance improvements over previous generations.

Blackwell Ultra integrates with NVIDIA Spectrum-X 800G Ethernet networking to reduce latency and jitter. It also supports NVIDIA Dynamo, an open-source inference framework that increases throughput and reduces the cost of running AI services. The platform is supported by leading hardware providers such as Cisco and Dell Technologies, and by cloud service providers including Amazon Web Services, Google Cloud, and Microsoft Azure. NVIDIA founder and CEO Jensen Huang emphasized Blackwell Ultra’s versatility in meeting the growing demand for advanced AI capabilities.

NVIDIA Blackwell Ultra AI Factory Platform

NVIDIA has introduced its Blackwell Ultra AI Factory Platform, designed to advance AI reasoning, agentic AI, and physical AI. This platform includes hardware solutions like the GB300 NVL72 and HGX B300 NVL16 systems, which offer significant performance improvements over previous generations.

On the software side, NVIDIA Dynamo helps AI services built on the platform scale efficiently. On the networking side, Spectrum-X 800G Ethernet reduces latency and jitter in AI infrastructure.

Blackwell Ultra is backed by a broad set of hardware partners and cloud providers, which points to wide adoption across the industry. The platform also fits into NVIDIA’s existing ecosystem of tools and software, so developers and enterprises can build on components they already use.

For enterprises deploying production-grade AI, this means access to NVIDIA’s CUDA-X libraries and a developer community of over 6 million professionals.

NVIDIA Blackwell Ultra Enables AI Reasoning

NVIDIA’s Blackwell Ultra platform introduces new hardware for AI reasoning tasks. The GB300 NVL72 rack-scale system is built to handle the heavy computational demands of these workloads, and the HGX B300 NVL16 system delivers a significant performance improvement over previous generations.

The platform uses NVIDIA Dynamo, a software framework that helps AI services scale. Dynamo improves GPU utilization through disaggregated serving: it splits large language model inference into its prompt-processing (prefill) and token-generation (decode) phases and runs them on separate GPUs, so each phase can be optimized independently.
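In LLM inference, the two phases are commonly called prefill, which processes the whole prompt in one parallel pass and builds the attention key/value cache (KV cache), and decode, which generates output tokens one at a time while reusing that cache. The toy Python sketch below illustrates the idea of disaggregated serving under simplifying assumptions: the `Request`, `PrefillWorker`, `DecodeWorker`, and `serve` names are hypothetical, the "model" is a placeholder arithmetic rule, and a queue stands in for the KV-cache transfer between GPU pools. It is not Dynamo's API or implementation.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Request:
    """Hypothetical inference request: a prompt plus a decode budget."""
    req_id: int
    prompt_tokens: List[int]
    max_new_tokens: int
    kv_cache: Optional[List[int]] = None  # toy stand-in for the real KV cache
    output: List[int] = field(default_factory=list)


class PrefillWorker:
    """Stands in for a worker in the prefill pool: handles the whole prompt at once."""

    def run(self, req: Request) -> Request:
        # Toy KV cache: one entry per prompt token. A real engine stores
        # per-layer key/value tensors in GPU memory.
        req.kv_cache = list(req.prompt_tokens)
        return req


class DecodeWorker:
    """Stands in for a worker in the decode pool: emits one token per step."""

    def run(self, req: Request) -> Request:
        if req.kv_cache is None:
            raise ValueError("decode requires a KV cache produced by prefill")
        for _ in range(req.max_new_tokens):
            # Placeholder for a model forward pass over the cached context.
            next_token = sum(req.kv_cache) % 100
            req.output.append(next_token)
            req.kv_cache.append(next_token)  # cache grows by one entry per step
        return req


def serve(requests, prefill_pool, decode_pool):
    """Route every request through the prefill pool, then the decode pool."""
    handoff = deque()  # models the KV-cache transfer between the two pools
    for i, req in enumerate(requests):
        handoff.append(prefill_pool[i % len(prefill_pool)].run(req))
    finished = []
    i = 0
    while handoff:
        finished.append(decode_pool[i % len(decode_pool)].run(handoff.popleft()))
        i += 1
    return finished


if __name__ == "__main__":
    reqs = [Request(0, [1, 2, 3], 2), Request(1, [10, 20], 1)]
    for r in serve(reqs, [PrefillWorker(), PrefillWorker()], [DecodeWorker()]):
        print(r.req_id, r.output)
```

Running the script prints `0 [6, 12]` and `1 [30]`. Note that the two pools have different sizes (two prefill workers, one decode worker): because the phases never share a worker, each pool can be scaled on its own.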

Spectrum-X 800G Ethernet networking reduces latency and jitter across distributed systems. Fast, predictable data transfer and synchronization between GPUs is critical for maintaining performance in complex AI tasks.
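Jitter matters as much as average latency because synchronous operations across many GPUs, such as a collective that exchanges gradients or activations, cannot finish until the slowest participant does. The minimal Python sketch below illustrates this with made-up per-link latencies: both scenarios have the same 10 µs average link latency, but the high-jitter one has a much slower average step. The numbers and function names are hypothetical and are not Spectrum-X measurements.

```python
def step_time(link_latencies_us):
    """A synchronous step finishes only when its slowest link finishes."""
    return max(link_latencies_us)


def mean_step_time(steps):
    """Average step time across steps; each step is a list of per-link latencies."""
    return sum(step_time(links) for links in steps) / len(steps)


def mean_link_latency(steps):
    """Average latency over every link in every step (ignores jitter entirely)."""
    samples = [lat for links in steps for lat in links]
    return sum(samples) / len(samples)


# Hypothetical latencies in microseconds: 3 steps, 4 links per step.
# Both scenarios average exactly 10 us per link; only the jitter differs.
LOW_JITTER = [[9, 10, 11, 10], [10, 10, 10, 10], [11, 9, 10, 10]]
HIGH_JITTER = [[4, 6, 10, 20], [5, 5, 10, 20], [2, 8, 10, 20]]

if __name__ == "__main__":
    for name, steps in (("low jitter", LOW_JITTER), ("high jitter", HIGH_JITTER)):
        print(f"{name}: mean link {mean_link_latency(steps):.1f} us, "
              f"mean step {mean_step_time(steps):.1f} us")
```

With identical average link latency, the low-jitter case averages about 10.7 µs per step while the high-jitter case averages 20.0 µs, nearly double, because every step waits on its worst link.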

NVIDIA Scale-Out Infrastructure for Optimal Performance

The platform’s scale-out architecture is designed to handle the computational demands of advanced workloads efficiently. NVIDIA Dynamo contributes through disaggregated serving: it runs the separate processing phases of model inference on separate GPUs, which improves GPU utilization and lets AI services scale more efficiently.

Because each phase runs on its own set of GPUs, resources can be allocated to match each phase’s demand rather than sized for the combined workload, improving the overall performance and scalability of distributed AI operations.
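One way to picture per-phase allocation is a simple proportional split of a fixed GPU budget. The Python sketch below is a hypothetical heuristic, not how Dynamo actually schedules GPUs: `size_pools` and all throughput figures are illustrative assumptions. Each pool receives GPUs in proportion to how many GPUs its phase would need at full load, with at least one GPU per pool.

```python
def size_pools(total_gpus, prefill_demand, decode_demand,
               prefill_rate_per_gpu, decode_rate_per_gpu):
    """Split a fixed GPU budget between hypothetical prefill and decode pools.

    Demands are the tokens/s the service must handle in each phase; rates are
    the tokens/s a single GPU sustains in that phase (all values assumed).
    Returns (prefill_gpus, decode_gpus).
    """
    if total_gpus < 2:
        raise ValueError("need at least one GPU per pool")
    prefill_need = prefill_demand / prefill_rate_per_gpu  # GPUs needed at full load
    decode_need = decode_demand / decode_rate_per_gpu
    share = prefill_need / (prefill_need + decode_need)
    # Round the proportional share, but keep at least one GPU in each pool.
    prefill_gpus = min(max(round(total_gpus * share), 1), total_gpus - 1)
    return prefill_gpus, total_gpus - prefill_gpus


if __name__ == "__main__":
    # Hypothetical workload: prompt-heavy traffic, slow per-GPU decode rate.
    print(size_pools(16, 400_000, 60_000, 50_000, 2_500))
    # Same prefill load, more decode traffic: only the split shifts.
    print(size_pools(16, 400_000, 80_000, 50_000, 2_500))
```

The first call prints `(4, 12)` and the second `(3, 13)`: when decode demand grows, GPUs move to the decode pool without any change to how prefill itself runs, which is the flexibility a shared, non-disaggregated pool lacks.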

Blackwell Ultra benefits from partnerships with leading hardware manufacturers and cloud providers, which makes it broadly available across many sectors. Its integration into NVIDIA’s ecosystem also gives developers tools such as the CUDA-X libraries, along with production-grade AI software through platforms such as NVIDIA AI Enterprise.

