Reconstructing detailed 3D scenes from collections of images remains a major challenge in computer vision, and a new approach called YoNoSplat takes a significant step forward. Botao Ye, along with Boqi Chen and Haofei Xu from ETH Zurich, and Daniel Barath and Marc Pollefeys from ETH Zurich and Microsoft, present a feedforward model that builds high-quality 3D representations from any number of images, even without prior knowledge of camera positions or calibration. This versatility stems from YoNoSplat’s ability to predict the 3D structure of a scene and the camera poses of its input images at the same time, a task traditionally complicated by the interdependence of the two. By introducing a novel training strategy and a method for resolving scale ambiguity, the team achieves state-of-the-art reconstruction quality at high speed, building a scene from 100 images in just seconds on modern hardware and opening new possibilities for rapid 3D modelling.

Pose-Free 3D Reconstruction via Gaussian Splatting

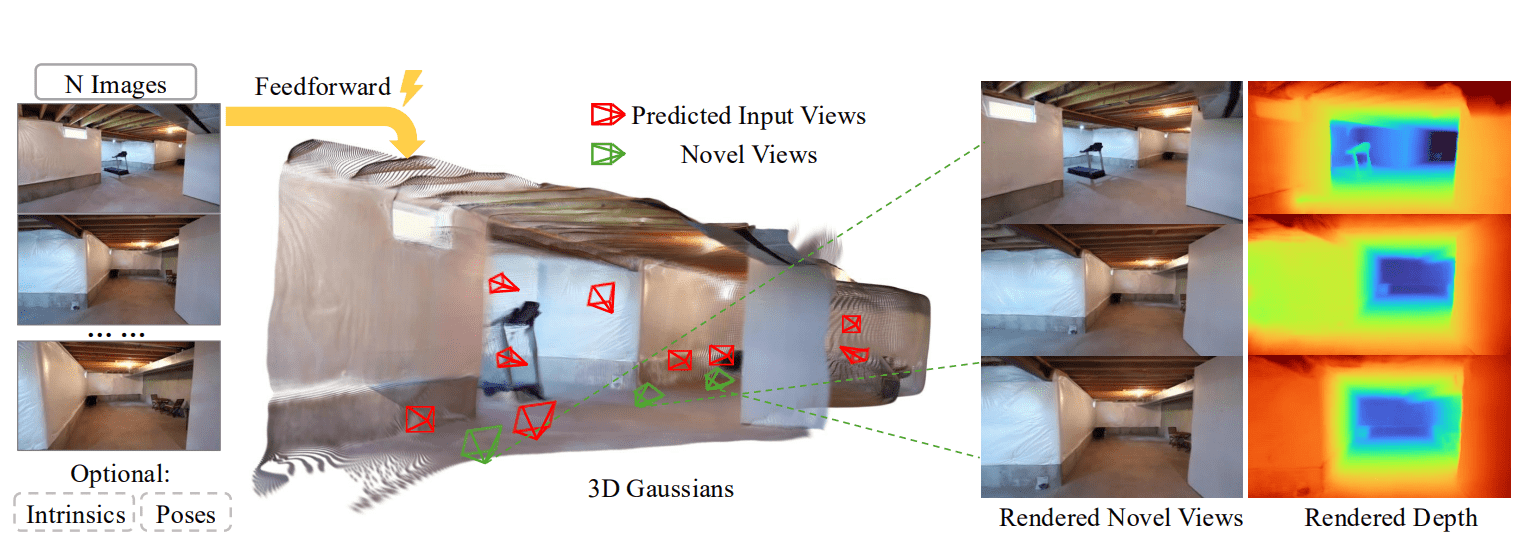

The research introduces YoNoSplat, a feedforward model that reconstructs high-quality 3D scenes from collections of images, even when camera poses and calibration data are unavailable. It overcomes limitations of existing methods by accepting an arbitrary number of unposed and uncalibrated images, while still making use of ground-truth camera information when it is provided. The researchers use a local-to-global design: the model first predicts per-view local Gaussians and the corresponding camera poses, then aggregates the Gaussians into a single global coordinate system using those poses, which keeps the approach scalable and versatile. A key innovation is the mix-forcing training strategy, which addresses the challenge of jointly learning camera poses and 3D geometry.
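The aggregation step of the local-to-global design can be illustrated with a minimal sketch. It assumes each view supplies Gaussian centers in its own camera frame together with a camera-to-world rotation and translation; the function names (`local_to_world`, `aggregate_views`) and the toy data are hypothetical and not taken from the paper. Only the centers are transformed here, whereas a full implementation would also need to rotate each Gaussian's orientation.

```python
from typing import List, Sequence, Tuple

Vec3 = Sequence[float]
Mat3 = Sequence[Sequence[float]]
Pose = Tuple[Mat3, Vec3]  # (rotation, translation), camera-to-world (assumed convention)


def local_to_world(points: List[Vec3], rotation: Mat3, translation: Vec3) -> List[List[float]]:
    """Map points from a camera frame to the world frame: x_world = R @ x_local + t."""
    return [
        [sum(rotation[r][c] * p[c] for c in range(3)) + translation[r] for r in range(3)]
        for p in points
    ]


def aggregate_views(per_view_centers: List[List[Vec3]], poses: List[Pose]) -> List[List[float]]:
    """Merge per-view Gaussian centers into one list expressed in the world frame."""
    merged: List[List[float]] = []
    for centers, (rotation, translation) in zip(per_view_centers, poses):
        merged.extend(local_to_world(centers, rotation, translation))
    return merged


# Toy example: view 0 has the identity pose; view 1 is rotated 90 degrees
# about the z-axis and shifted by 2 units along x.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
rot_z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]

views = [[[0.0, 0.0, 1.0]], [[1.0, 0.0, 0.0]]]
poses = [(identity, [0.0, 0.0, 0.0]), (rot_z_90, [2.0, 0.0, 0.0])]

world_centers = aggregate_views(views, poses)
print(world_centers)
```

Because the merge only needs one pose per view, the same code path works whether the poses are ground truth or predicted, which is the property the local-to-global design relies on.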

Training begins with teacher-forcing, which uses ground-truth poses during aggregation to establish a stable geometric foundation, and then gradually introduces the model’s own predicted poses. This curriculum balances training stability with robustness, enabling YoNoSplat to operate effectively with either ground-truth or predicted poses at inference. Experiments show that a naive self-forcing approach, in which aggregation always uses the predicted poses, leads to unstable training and poor performance, while pure teacher-forcing introduces exposure bias, because the model never sees its own imperfect poses during training. To resolve scale ambiguity, particularly when ground-truth depth is unavailable, the researchers developed a pipeline that predicts camera intrinsics, enabling reconstruction from uncalibrated images.
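One way to picture such a curriculum is as a schedule for the probability of aggregating with predicted rather than ground-truth poses. The sketch below is an assumption for illustration only: the linear ramp, the parameter names (`warmup_steps`, `ramp_steps`, `max_prob`) and their default values are hypothetical, and the article does not state the schedule the authors actually use.

```python
import random


def predicted_pose_probability(step: int, warmup_steps: int, ramp_steps: int, max_prob: float) -> float:
    """Probability of using the model's own predicted poses at a training step.

    Hypothetical schedule: pure teacher-forcing during warmup, then a linear
    ramp up to max_prob over ramp_steps.
    """
    if step < warmup_steps:
        return 0.0  # teacher-forcing only: always aggregate with ground-truth poses
    progress = min(1.0, (step - warmup_steps) / ramp_steps)
    return max_prob * progress


def choose_pose_source(
    step: int,
    rng: random.Random,
    warmup_steps: int = 1000,   # illustrative value, not from the paper
    ramp_steps: int = 4000,     # illustrative value, not from the paper
    max_prob: float = 0.5,      # illustrative value, not from the paper
) -> str:
    """Sample which poses to aggregate with for the current training step."""
    p = predicted_pose_probability(step, warmup_steps, ramp_steps, max_prob)
    return "predicted" if rng.random() < p else "ground_truth"


rng = random.Random(0)
for step in (0, 3000, 10000):
    p = predicted_pose_probability(step, 1000, 4000, 0.5)
    print(step, p, choose_pose_source(step, rng))
```

Setting `max_prob` to 0 in this sketch recovers pure teacher-forcing, and setting `warmup_steps` to 0 with `max_prob` at 1 approaches the self-forcing regime that the experiments found unstable.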

They systematically evaluated scene-normalization strategies and found that normalizing by the maximum pairwise camera distance proved most effective, aligning with the relative pose supervision used during training. The system achieves exceptional efficiency, reconstructing a scene from 100 images at 280×518 resolution in just 2.69 seconds on a GH200 GPU, and outperforms prior pose-dependent methods even without ground-truth camera inputs. This demonstrates the strong geometric and appearance priors learned through the innovative training strategy.
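Normalizing by the maximum pairwise camera distance can be sketched in a few lines. The version below assumes camera centers and scene points are given as 3D coordinates in a shared frame; the function names are hypothetical, and how the authors apply the scale inside their pipeline is not described in the article.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple

Vec3 = Sequence[float]


def max_pairwise_distance(centers: List[Vec3]) -> float:
    """Largest Euclidean distance between any two camera centers."""
    if len(centers) < 2:
        raise ValueError("need at least two cameras to define a pairwise distance")
    return max(math.dist(a, b) for a, b in combinations(centers, 2))


def normalize_scene(
    camera_centers: List[Vec3], points: List[Vec3]
) -> Tuple[List[List[float]], List[List[float]], float]:
    """Rescale cameras and points so the two farthest cameras are 1 unit apart."""
    scale = max_pairwise_distance(camera_centers)
    if scale == 0.0:
        raise ValueError("all cameras coincide; scale is undefined")

    def rescale(vectors: List[Vec3]) -> List[List[float]]:
        return [[coord / scale for coord in v] for v in vectors]

    return rescale(camera_centers), rescale(points), scale


cameras = [[0.0, 0.0, 0.0], [3.0, 4.0, 0.0], [1.0, 0.0, 0.0]]
scene_points = [[5.0, 0.0, 10.0]]

norm_cameras, norm_points, scale = normalize_scene(cameras, scene_points)
print(scale)        # the farthest pair is (0,0,0) and (3,4,0)
print(norm_points)
```

After normalization the farthest camera pair sits at unit distance, so scenes captured at very different physical scales present the model with comparable pose and depth magnitudes.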

Unposed 3D Reconstruction via Mix-Forcing Training

YoNoSplat is a feedforward model that achieves state-of-the-art 3D scene reconstruction from an arbitrary number of images, regardless of whether camera poses and calibrations are known. It handles posed and unposed, calibrated and uncalibrated inputs, and makes use of ground-truth camera information when available. Experiments show that YoNoSplat reconstructs a scene from 100 views, at a resolution of 280×518, in just 2.69 seconds on a GH200 GPU.

A key achievement of this work lies in addressing the entanglement of 3D geometry and camera poses: errors in one corrupt the learning signal for the other. The researchers developed the mix-forcing training strategy, which begins with ground-truth poses to establish a stable geometric foundation and then gradually incorporates the model’s predicted poses. This curriculum balances stability with robustness, allowing YoNoSplat to operate effectively with either ground-truth or predicted poses during testing. The team also resolved the problem of scale ambiguity by predicting camera intrinsics and normalizing scenes by the maximum pairwise camera distance.
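The article does not spell out where the scale ambiguity comes from. One standard source, in any pinhole camera model, is that focal length and depth trade off against each other: a point twice as far away seen through a lens with twice the focal length lands on the same pixel. The sketch below illustrates that general fact only; the `project` helper and the numeric values, including the principal point, are hypothetical and not taken from the paper.

```python
from typing import Sequence, Tuple


def project(point: Sequence[float], focal: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection of a camera-frame point (X, Y, Z) to pixel coordinates (u, v)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    return focal * x / z + cx, focal * y / z + cy


# Hypothetical principal point, chosen only for illustration.
cx, cy = 259.0, 140.0

near = project([1.0, 0.5, 2.0], focal=500.0, cx=cx, cy=cy)
far = project([1.0, 0.5, 4.0], focal=1000.0, cx=cx, cy=cy)

print(near, far)  # both projections land on the same pixel
```

Without knowing or estimating the intrinsics, a model cannot tell these two configurations apart from the pixel location alone, which is why predicting intrinsics helps pin down depth for uncalibrated images.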

Measurements confirm that YoNoSplat outperforms prior pose-dependent methods, even without ground-truth camera inputs, highlighting the strong geometric and appearance priors learned through the training strategy. The approach generalizes across datasets and varying numbers of views, demonstrating a significant advancement in flexible and robust 3D reconstruction. This work introduces the first feedforward model to achieve state-of-the-art performance in both pose-free and pose-dependent settings, paving the way for more versatile and efficient 3D scene understanding.

YoNoSplat Achieves Fast, Flexible 3D Reconstruction

YoNoSplat represents a significant advance in 3D scene reconstruction, creating high-quality 3D Gaussian Splatting representations from a flexible number of images. The model works with posed and unposed, calibrated and uncalibrated input images, broadening its applicability to diverse datasets and capture scenarios. The researchers addressed two key challenges in this field: the difficulty of learning 3D geometry and camera poses simultaneously, and the problem of establishing a consistent scale for reconstructions. To overcome the first, the team developed a training strategy that balances the use of ground-truth and predicted camera poses, promoting both training stability and accurate results.

To overcome the second, they introduced a normalization scheme based on pairwise camera distances, alongside a network module that embeds camera intrinsics, enabling reconstruction from uncalibrated images. The method achieves state-of-the-art performance on standard benchmarks and reconstructs scenes efficiently, processing 100 views in 2.69 seconds on a GH200 GPU. Future work could apply YoNoSplat to larger and more complex scenes and investigate ways to further improve reconstruction quality and efficiency.

👉 More information

🗞 YoNoSplat: You Only Need One Model for Feedforward 3D Gaussian Splatting

🧠 ArXiv: https://arxiv.org/abs/2511.07321