The development of quantum computing is a complex and challenging task that requires significant advances in materials science, software, and programming. Unlike the information revolution, which was driven by the widespread availability of affordable and scalable technology, quantum computing relies on exotic materials with specific properties, such as superconducting materials that can operate at very low temperatures.

The economic benefits of quantum computing are also uncertain due to the lack of clear use cases and the high development cost. While some companies have made significant investments in quantum computing research, it is unclear whether these investments will yield tangible returns. Furthermore, the potential for job displacement is another concern, as quantum computers may automate certain tasks and displace human workers.

Quantum hardware is also complex enough to require sophisticated software tools for simulation, design, and testing, and its dependence on exotic materials and advanced components is a major obstacle to widespread adoption. Unlike the information revolution, which was characterized by rapid innovation and widespread adoption, quantum computing is likely to advance gradually and incrementally, with significant technical and economic hurdles to overcome before it can achieve mainstream success.

Quantum Computing’s Limited Applicability

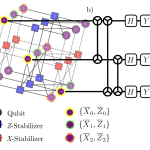

Quantum computing’s limited applicability is rooted in the noisy nature of quantum systems, which makes it challenging to maintain coherence and control over the qubits. This inherent noise leads to errors in computations, making it difficult to achieve reliable results (Nielsen & Chuang, 2010). Furthermore, the fragility of quantum states necessitates the use of complex error correction techniques, which can significantly increase the overhead required for practical computation (Gottesman, 1997).

Another significant limitation is the lack of a clear path towards scalability. Currently, most quantum computing architectures rely on a small number of qubits, and it remains unclear how to effectively scale up these systems while maintaining control over the quantum states (DiVincenzo, 2000). This challenge is exacerbated by the need for precise calibration and control over the quantum gates, which becomes increasingly difficult as the system size grows.

Quantum algorithms also have limited applicability because they depend on specific problem structures. For instance, Shor’s algorithm reduces factoring to finding the period of the modular exponentiation function f(x) = a^x mod N, a specific periodic structure (Shor, 1997). Similarly, Grover’s search algorithm requires a well-defined oracle function that can be evaluated efficiently (Grover, 1996). These requirements restrict the range of problems that quantum computers can tackle.
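The division of labor in Shor's algorithm can be made concrete. In the sketch below, which illustrates the idea rather than implementing Shor's algorithm, the period of f(x) = a^x mod N is found by brute force, standing in for the quantum period-finding step, and the standard classical post-processing then turns that period into factors. The function names `find_period` and `factor_from_period` are hypothetical.

```python
from math import gcd


def find_period(a: int, n: int) -> int:
    """Return the smallest r > 0 with a**r % n == 1, by brute force.

    This is the step Shor's algorithm performs on a quantum computer;
    classically its cost grows with n, so this loop only suits toy moduli.
    """
    if gcd(a, n) != 1:
        raise ValueError("a must be coprime to n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_period(a: int, n: int):
    """Classical post-processing: derive two factors of n from the period of a."""
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: retry with a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # a^(r/2) = -1 mod n: retry with a different a
    return gcd(half - 1, n), gcd(half + 1, n)
```

`factor_from_period(7, 15)` finds the period 4 and returns `(3, 5)`. The brute-force loop is exactly the part a quantum computer is meant to replace, and the periodic structure it relies on is what confines the algorithm to problems of this shape.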

Moreover, the development of practical quantum algorithms is hindered by the lack of a clear understanding of the underlying quantum mechanics. The study of quantum systems is still an active area of research, and many fundamental questions remain unanswered (Weinberg, 2015). This uncertainty makes it challenging to design efficient quantum algorithms that can be reliably executed on near-term devices.

The limited applicability of quantum computing also stems from its high resource requirements. Quantum computers demand a vast amount of resources, including cryogenic cooling systems, precise control electronics, and sophisticated software frameworks (Devitt et al., 2016). These demands make it difficult to deploy quantum computers in practical settings, limiting their potential impact.

The current state of quantum computing is characterized by significant scientific and engineering challenges. While researchers continue to explore new architectures and algorithms, the path towards practical applications remains uncertain.

Different From Classical Computing Revolution

Quantum computing differs fundamentally from classical computing in its approach to processing information. Classical computers use bits that are either 0 or 1, whereas quantum computers use qubits that can exist in a superposition of 0 and 1. A register of n qubits is described by 2^n complex amplitudes, one for each classical bit string, but measuring it yields only a single bit string. Quantum algorithms therefore rely on interference, arranging for the amplitudes of wrong answers to cancel and those of right answers to reinforce, which can make quantum computers much faster than their classical counterparts for certain types of calculations.
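A small state-vector simulation shows both sides of superposition. The sketch below, in plain Python with hypothetical helper names, puts n qubits into an equal superposition of all 2^n bit strings by applying one Hadamard gate per qubit, then samples a measurement. The list holding the state doubles with every added qubit, yet a measurement returns just one index.

```python
import math
import random


def apply_hadamard(state, qubit):
    """Apply a Hadamard gate to one qubit of a state vector (list of complex)."""
    s = 1 / math.sqrt(2)
    new_state = state[:]
    step = 1 << qubit
    for i in range(len(state)):
        if i & step == 0:  # pair the basis states that differ only in this qubit
            a, b = state[i], state[i | step]
            new_state[i] = s * (a + b)
            new_state[i | step] = s * (a - b)
    return new_state


def uniform_superposition(n):
    """Start in |0...0> and apply H to every qubit: 2**n equal amplitudes."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    for q in range(n):
        state = apply_hadamard(state, q)
    return state


def measure(state, rng=random):
    """Sample one basis-state index with probability |amplitude|**2."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(range(len(state)), weights=probs, k=1)[0]
```

`uniform_superposition(3)` holds 8 amplitudes of about 0.354 each, and `measure` returns a single integer between 0 and 7. At 16 bytes per complex amplitude, a 50-qubit state would need 2^50 amplitudes, roughly 18 petabytes, which is why classical simulation becomes impractical; the same example shows that superposition alone does not hand back 2^n answers.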

The principle of entanglement is another key feature distinguishing quantum computing from classical computing. When two or more qubits are entangled, the state of one qubit cannot be described independently of the others, even when they are separated by large distances. Entanglement lets a quantum computer create correlations among many qubits that no collection of independent bits can reproduce, and it is widely regarded as an essential resource for quantum speedups. However, managing and controlling entanglement is a complex task that poses significant technical challenges.
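For two qubits, entanglement has a simple test: a pure state c00|00> + c01|01> + c10|10> + c11|11> is a product of single-qubit states exactly when c00·c11 - c01·c10 = 0. The sketch below, using hypothetical helper names and a hand-applied Hadamard and CNOT rather than any quantum library, builds the Bell state (|00> + |11>)/√2 and applies that test.

```python
import math


def bell_state():
    """Build (|00> + |11>)/sqrt(2) by applying H to qubit A, then CNOT(A -> B).

    Amplitudes are ordered |00>, |01>, |10>, |11>, with qubit A as the left bit.
    """
    s = 1 / math.sqrt(2)
    after_h = [s, 0.0, s, 0.0]       # H on A: |00> -> (|00> + |10>)/sqrt(2)
    c00, c01, c10, c11 = after_h
    return [c00, c01, c11, c10]      # CNOT swaps the |10> and |11> amplitudes


def product_state(a, b):
    """Tensor product of single-qubit states a = (a0, a1) and b = (b0, b1)."""
    return [a[0] * b[0], a[0] * b[1], a[1] * b[0], a[1] * b[1]]


def is_entangled(state, tol=1e-12):
    """A pure two-qubit state is a product state iff c00*c11 - c01*c10 == 0."""
    c00, c01, c10, c11 = state
    return abs(c00 * c11 - c01 * c10) > tol
```

The Bell state gives 0.5 for the determinant and is reported as entangled, while a product such as |+>|0> gives 0. The test holds only for pure two-qubit states; characterizing entanglement among many qubits, or in noisy mixed states, is much harder.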

Quantum computing also relies heavily on quantum algorithms, which are specifically designed to exploit the unique properties of qubits. One notable example is Shor’s algorithm, which factors large numbers in polynomial time, superpolynomially faster than the best known classical algorithms. This has significant implications for cryptography and data security, as widely used encryption methods such as RSA rely on the difficulty of factoring large numbers.

In contrast to the classical computing revolution, which was driven by the development of smaller, faster, and cheaper transistors, the quantum computing revolution is focused on harnessing the strange and counterintuitive properties of quantum mechanics. This requires a fundamentally different approach to computer design, materials science, and software engineering. As a result, the development of practical quantum computers is proceeding at a slower pace than many experts had initially predicted.

Despite these challenges, significant progress has been made in recent years, with several companies and research institutions demonstrating functional quantum processors and exploring their applications. However, much work remains to be done before quantum computing can be widely adopted and have a transformative impact on society.

The development of quantum computing is also raising important questions about the potential risks and unintended consequences of this technology. For example, the ability of quantum computers to break certain types of encryption could compromise data security and privacy. As with any powerful new technology, it is essential to carefully consider these implications and develop strategies for mitigating them.

No Moore’s Law For Quantum Computers

Classical computers benefited for decades from Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years. Quantum computers are not bound by the same scaling laws, but they face their own set of challenges and limitations. The idea of “No Moore’s Law” for quantum computers holds that the number of qubits in a quantum computer cannot be increased indefinitely without encountering significant technical hurdles.

The main challenge lies in maintaining control over the fragile quantum states as the number of qubits increases. As the system grows, so does the complexity of the control systems required to manipulate and measure the qubits: every added qubit needs its own control and readout signals, and crosstalk between them becomes harder to suppress, making it difficult to maintain precise control over the quantum states (Nielsen & Chuang, 2010). Furthermore, as the number of qubits increases, so does the likelihood of errors due to decoherence and other noise sources, which can quickly overwhelm any potential benefits of scaling up the system (Preskill, 1998).
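A back-of-the-envelope model makes the scaling problem concrete. Assume, purely for illustration, that every qubit suffers an error independently with probability p in each layer of a circuit and that nothing corrects it. The chance of an error-free run is then (1 - p)^(n*d) for n qubits and depth d, which the hypothetical function below evaluates.

```python
def error_free_probability(p: float, qubits: int, depth: int) -> float:
    """Probability that no error occurs anywhere in an uncorrected circuit.

    Toy model (an assumption, not a device model): each qubit suffers an
    error independently with probability p in each of `depth` layers,
    and nothing corrects it.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    return (1.0 - p) ** (qubits * depth)


if __name__ == "__main__":
    p = 1e-3  # illustrative per-qubit, per-layer error rate (assumed)
    for n in (10, 50, 100):
        # assume circuit depth equal to the number of qubits
        print(n, error_free_probability(p, n, depth=n))
```

With an assumed p = 0.001 and depth equal to the qubit count, the error-free probability is about 0.90 for 10 qubits, about 0.08 for 50, and below 0.0001 for 100. Real noise is neither independent nor uniform, but this exponential decay is why uncorrected devices cannot simply be scaled up.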

Another significant challenge is the issue of quantum error correction. As the number of qubits increases, so does the need for robust methods to correct errors that inevitably arise during computation. However, current methods for quantum error correction are still in their infancy and require a large overhead in terms of additional qubits and control systems (Gottesman, 1996). This makes it difficult to scale up quantum computers while maintaining reliable operation.
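The overhead can be estimated with a widely used rule of thumb for the surface code, a leading quantum error-correcting code: the logical error rate falls roughly as a·(p/p_th)^((d+1)/2) for physical error rate p, threshold p_th, and code distance d, while the rotated surface code uses 2d² - 1 physical qubits per logical qubit. The sketch below assumes p_th = 1% and a = 0.1; these constants are illustrative, not measurements of any device, and the function names are hypothetical.

```python
def logical_error_rate(p: float, d: int, p_th: float = 1e-2, a: float = 0.1) -> float:
    """Rule-of-thumb surface-code logical error rate per round.

    p_L ~ a * (p / p_th) ** ((d + 1) / 2). The defaults for p_th and a are
    illustrative assumptions, not measured values for any device.
    """
    return a * (p / p_th) ** ((d + 1) / 2)


def physical_qubits_per_logical(d: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


def smallest_distance(p: float, target: float, p_th: float = 1e-2, a: float = 0.1) -> int:
    """Smallest odd code distance whose estimated logical error rate meets target."""
    if p >= p_th:
        raise ValueError("below-threshold operation is required for scaling to help")
    d = 3
    while logical_error_rate(p, d, p_th, a) > target:
        d += 2  # surface-code distances are conventionally odd
    return d
```

For an assumed physical error rate of 0.2% and a target logical error rate of 10^-9 per round, `smallest_distance(2e-3, 1e-9)` returns 23, which corresponds to 1,057 physical qubits for a single logical qubit. Under these assumptions, an algorithm needing thousands of logical qubits would need physical qubits on the order of millions.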

In addition to these technical challenges, there are also fundamental limits imposed by the laws of physics. For example, the no-cloning theorem states that it is impossible to create a perfect copy of an arbitrary quantum state (Wootters & Zurek, 1982). This has significant implications for quantum computing: the classical strategy of protecting data by keeping redundant copies is unavailable, which is one reason quantum error correction requires more elaborate encodings, and intermediate quantum states cannot be duplicated for inspection or reuse.
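The linearity argument behind the no-cloning theorem can be seen in a few lines. A CNOT gate copies the basis states |0> and |1> onto a blank qubit, so it looks like a cloner, but because quantum operations are linear it maps |+> = (|0> + |1>)/√2 to the entangled state (|00> + |11>)/√2 instead of |+>|+>. The sketch below, with hypothetical helper names and real amplitudes only, compares the two. It illustrates the theorem for one circuit; it is not a proof, which must cover every possible operation.

```python
import math

PLUS = (1 / math.sqrt(2), 1 / math.sqrt(2))  # amplitudes of |+>


def cnot_copy(a0, a1):
    """CNOT(control=input, target=blank |0>) applied to (a0|0> + a1|1>)|0>.

    Amplitudes are ordered |00>, |01>, |10>, |11> (input qubit is the left bit).
    |input>|0> = a0|00> + a1|10>, and CNOT maps |10> -> |11>.
    """
    return [a0, 0.0, 0.0, a1]


def ideal_copy(a0, a1):
    """What a perfect cloner would have to output: |psi>|psi>."""
    return [a0 * a0, a0 * a1, a1 * a0, a1 * a1]


def fidelity(u, v):
    """|<u|v>|**2 for real amplitude vectors of equal length."""
    return abs(sum(x * y for x, y in zip(u, v))) ** 2
```

Copying |0> with `fidelity(cnot_copy(1.0, 0.0), ideal_copy(1.0, 0.0))` gives 1, while copying `PLUS` gives 0.5.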

Despite these challenges, researchers are actively exploring new architectures and technologies to overcome the limitations of current quantum computers. For example, topological quantum computers, which would store information in nonlocal degrees of freedom that local noise cannot easily disturb, have been proposed as a potential solution to the problem of maintaining control over large numbers of qubits (Kitaev, 2003). However, significant technical hurdles remain before such systems can be realized in practice.

The development of practical quantum computers will likely require significant advances in multiple areas, including materials science, quantum control systems, and quantum error correction. While it is difficult to predict exactly when or if these challenges will be overcome, it is clear that the path forward for quantum computing will be shaped by a deep understanding of the fundamental laws of physics.

Quantum Noise And Error Correction Challenges

Quantum noise is a major challenge in the development of quantum computing, as it can cause errors in the computation process. Quantum noise refers to the random fluctuations in the quantum states of qubits, which are the fundamental units of quantum information (Nielsen & Chuang, 2010). These fluctuations can arise from various sources, including thermal noise, electromagnetic interference, and imperfections in the fabrication of quantum devices.

One of the main challenges in mitigating quantum noise is the development of robust methods for error correction. Quantum error correction codes are designed to detect and correct errors that occur during quantum computation (Gottesman, 1996). However, these codes require a significant overhead in qubits and quantum gates, which can be difficult to implement in practice. The accuracy threshold theorem offers some reassurance: if the error rate per gate is below a certain threshold, arbitrarily long computations can be performed with arbitrarily small error (Aliferis et al., 2006). However, the theorem relies on assumptions about the noise, such as errors being local and only weakly correlated, which may not hold in practice.
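The idea behind a threshold can be illustrated with a classical toy: a three-bit repetition code decoded by majority vote, assuming each bit flips independently with probability p. Decoding fails only when two or three bits flip, so the logical error rate is 3p²(1 - p) + p³, which is smaller than p exactly when p < 1/2. The sketch below computes this and checks it by sampling; the function names are hypothetical. Real quantum codes must also handle phase errors and cannot simply copy states, so their thresholds are far lower, and correlated errors break the independence assumption the formula relies on.

```python
import random


def logical_error_exact(p: float) -> float:
    """Majority vote over 3 copies fails when 2 or 3 bits flip."""
    return 3 * p**2 * (1 - p) + p**3


def logical_error_sampled(p: float, trials: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate of the same failure probability."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:  # the majority is wrong, so decoding fails
            failures += 1
    return failures / trials
```

At p = 0.01 the encoded error rate is about 0.0003, while at p = 0.6 encoding makes matters worse (0.648).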

Another challenge in quantum noise mitigation is the development of methods for characterizing and modeling quantum noise. Quantum process tomography is a technique used to characterize the dynamics of quantum systems (Chuang & Nielsen, 1997). However, the number of measurements it requires grows exponentially with the number of qubits, which makes it impractical beyond a few qubits. Furthermore, models of quantum noise are often based on simplifying assumptions, which may not accurately capture the behavior of real-world quantum systems.
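The cost of process tomography follows from counting parameters. A completely positive, trace-preserving process on n qubits, with d = 2^n, has d⁴ - d² independent real parameters, all of which full tomography must estimate; a state alone has d² - 1. The helper functions below (hypothetical names) tabulate both.

```python
def state_tomography_parameters(n_qubits: int) -> int:
    """Real parameters of an n-qubit density matrix: d**2 - 1 with d = 2**n."""
    d = 2 ** n_qubits
    return d**2 - 1


def process_tomography_parameters(n_qubits: int) -> int:
    """Real parameters of an n-qubit completely positive, trace-preserving map.

    With d = 2**n this is d**4 - d**2; full process tomography has to
    estimate every one of them from measurement data.
    """
    d = 2 ** n_qubits
    return d**4 - d**2


if __name__ == "__main__":
    for n in range(1, 6):
        print(n, state_tomography_parameters(n), process_tomography_parameters(n))
```

One qubit needs 12 process parameters, two need 240, and five need 1,047,552, growing by roughly a factor of 16 per added qubit.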

Quantum error correction codes have been experimentally demonstrated in various quantum computing architectures, including superconducting qubits (Barends et al., 2014) and trapped ions (Lanyon et al., 2009). However, these experiments are typically performed in highly controlled environments and may not accurately reflect the behavior of quantum systems in more realistic scenarios.

The development of robust methods for mitigating quantum noise is an active area of research. Various approaches have been proposed, including the use of dynamical decoupling (Viola et al., 1999) and noise spectroscopy (Alvarez & Suter, 2011). However, these approaches are still in the early stages of development and require further experimental validation.
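The intuition behind dynamical decoupling shows up in its simplest form, the Hahn spin echo. The sketch below simulates an ensemble of qubits, each with a fixed random frequency offset (an assumed quasi-static noise model): during free evolution their phases spread and the average coherence decays, but a single π pulse halfway through reverses the phase accumulation so that static offsets cancel. The function name and parameters are hypothetical.

```python
import cmath
import random


def echo_coherence(total_time: float, echo: bool, sigma: float = 1.0,
                   samples: int = 20_000, seed: int = 7) -> float:
    """Ensemble coherence |<exp(i * phase)>| under quasi-static frequency noise.

    Assumed noise model: each qubit has a fixed detuning delta ~ Normal(0, sigma**2)
    that does not change during the sequence. With echo=True a single pi pulse at
    total_time / 2 (a Hahn echo, the simplest dynamical-decoupling sequence)
    inverts the phase accumulated so far.
    """
    rng = random.Random(seed)
    total = 0j
    for _ in range(samples):
        delta = rng.gauss(0.0, sigma)
        phase = delta * total_time / 2       # free evolution before the pulse
        if echo:
            phase = -phase                   # pi pulse inverts the accumulated phase
        phase += delta * total_time / 2      # free evolution after the pulse
        total += cmath.exp(1j * phase)
    return abs(total) / samples
```

With unit noise strength and total time 2, the coherence without the echo is close to the analytical value exp(-2) ≈ 0.135, while the echo restores it to 1. Real noise drifts during the sequence, so practical decoupling uses many pulses and recovers coherence only partially.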

The challenges posed by quantum noise highlight the need for a more nuanced understanding of the limitations of quantum computing. While quantum computing has the potential to revolutionize various fields, it is unlikely to be a straightforward replacement for classical computing.

Limited Quantum Algorithm Development

Quantum algorithm development is hindered by the limited understanding of quantum noise and error correction. Quantum systems are prone to decoherence, which causes the loss of quantum coherence due to interactions with the environment (Nielsen & Chuang, 2010). This makes it challenging to develop robust quantum algorithms that can withstand the noisy nature of quantum systems.

The development of quantum algorithms is also limited by the lack of a general-purpose quantum programming language. Currently, most quantum algorithms are implemented using low-level quantum circuit models, which are difficult to scale and optimize (Mermin, 2007). The absence of a high-level programming language makes it challenging to develop complex quantum algorithms that can be executed on various quantum architectures.

Quantum algorithm development is further constrained by the limited availability of quantum resources. Quantum computers require a large number of qubits to perform complex computations, but current quantum systems are limited in their scalability (DiVincenzo, 2000). The lack of reliable and efficient methods for generating and manipulating entangled states also hinders the development of quantum algorithms that rely on these resources.

Theoretical models of quantum computation, such as the circuit model and the adiabatic model, provide a framework for developing quantum algorithms. However, these models are based on simplifying assumptions that may not accurately reflect the behavior of real-world quantum systems (Aharonov et al., 2006). As a result, quantum algorithm development is often limited by the need to adapt theoretical models to experimental realities.

Experimental demonstrations of quantum algorithms have been successful in small-scale systems, but scaling up these demonstrations to larger systems remains a significant challenge. Quantum error correction and noise reduction techniques are essential for large-scale quantum computation, but developing these techniques is an active area of research (Gottesman, 2009).

No Clear Path To Quantum Supremacy

The concept of quantum supremacy, first introduced by John Preskill in 2012, refers to the point at which a quantum computer can perform a calculation that is beyond the capabilities of a classical computer. However, recent studies have shown that achieving this milestone may be more challenging than initially thought.

One major obstacle is the issue of noise and error correction in quantum systems. As the number of qubits increases, so does the complexity of the system, making it harder to maintain control over the quantum states. This has led some researchers to question whether it’s possible to achieve a large-scale, fault-tolerant quantum computer. For instance, a study published in Physical Review X found that even with advanced error correction techniques, the resources required to achieve reliable quantum computation are significantly higher than previously estimated.

Another challenge is the lack of a clear path towards achieving quantum supremacy. While some researchers have proposed various architectures and algorithms for demonstrating quantum supremacy, these proposals often rely on unproven assumptions or require significant advances in materials science and engineering. For example, a paper published in Nature Reviews Physics highlighted the need for further research into the development of robust and scalable quantum computing architectures.

Furthermore, there is ongoing debate about what exactly constitutes quantum supremacy. Some researchers argue that it’s not just about demonstrating a calculation that’s beyond classical capabilities but also about showing that the quantum computer can perform a useful task. This has led to discussions around the need for more practical applications of quantum computing and less focus on abstract demonstrations of quantum supremacy.

Theoretical models of quantum noise, which degrades the accuracy of quantum computations, have also been developed to study the limitations of quantum computing. These models suggest that even if a large-scale quantum computer is built, it may not be able to perform certain calculations due to the inherent noise in the system.

In summary, while the idea of quantum supremacy has generated significant excitement and investment in the field of quantum computing, there are many challenges and uncertainties surrounding its achievement. Further research is needed to overcome these obstacles and develop a clear path towards achieving reliable and practical quantum computation.

Quantum Computing Not A General Purpose Technology

Quantum computing is not a general-purpose technology, but rather a specialized tool designed to solve specific problems that are intractable or require an unfeasible amount of time on classical computers. This is because quantum computers are based on the principles of quantum mechanics, which allow them to process certain types of information much faster than classical computers. However, this speedup comes at the cost of increased complexity and sensitivity to noise, making it difficult to control and maintain the fragile quantum states required for computation.

One major limitation of current quantum computers is their lack of fault tolerance. Classical hardware stores each bit with large noise margins, so errors are rare and easily handled, whereas quantum computers are extremely sensitive to errors caused by decoherence, the loss of quantum coherence due to interactions with the environment. This means that even small amounts of noise can cause a quantum computation to fail, making it difficult to scale up to large numbers of qubits.

Another limitation of quantum computing is its limited algorithmic scope. While quantum computers can solve certain problems much faster than classical computers, such as simulating quantum systems or factoring large numbers, they are not well suited to many other types of computation. For example, they offer little advantage for tasks dominated by memory access or input/output, because loading large classical datasets into quantum states and extracting results through measurement are slow; this makes them less useful for data-heavy applications such as data analysis or machine learning.

Furthermore, the development of practical quantum algorithms is still in its infancy. While there have been some notable successes, such as Shor’s algorithm for factoring large numbers and Grover’s algorithm for searching unsorted databases, these algorithms are highly specialized and not widely applicable. Moreover, many proposed quantum algorithms rely on unrealistic assumptions about the noise tolerance of quantum computers or the availability of certain types of quantum gates.
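Grover's algorithm is simple enough to simulate directly for a small search space. In the sketch below, which uses hypothetical function names and real amplitudes, the oracle is modeled as a sign flip on the marked item and each iteration then reflects all amplitudes about their mean. It also shows why the algorithm is specialized: it needs the oracle, and it takes about (π/4)√N iterations, a quadratic rather than exponential improvement over checking items one by one.

```python
import math


def grover_search(n_items: int, marked: int, iterations: int):
    """Simulate Grover's algorithm on a list of real amplitudes.

    The oracle is modeled as a sign flip on the marked index; the diffusion
    step reflects every amplitude about the mean amplitude.
    """
    amps = [1 / math.sqrt(n_items)] * n_items   # uniform superposition
    for _ in range(iterations):
        amps[marked] = -amps[marked]            # oracle
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]     # inversion about the mean
    return amps


def optimal_iterations(n_items: int) -> int:
    """About (pi / 4) * sqrt(N) iterations maximize the success probability."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))
```

For 16 items, `optimal_iterations(16)` is 3, and after three iterations the marked item is measured with probability about 0.96, up from 1/16.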

In addition to these technical limitations, there are also significant engineering challenges associated with building large-scale quantum computers. For example, maintaining the fragile quantum states required for computation requires extremely low temperatures and sophisticated control systems, making it difficult to scale up to large numbers of qubits. Moreover, the development of practical quantum error correction techniques is still an active area of research.

The limitations of quantum computing are not just technical, but also fundamental. Quantum mechanics imposes strict limits on the types of computations that can be performed efficiently, and these limits cannot be circumvented by clever algorithm design or engineering tricks. This means that quantum computers will always be specialized tools, rather than general-purpose machines like classical computers.

Limited Industry Adoption And Investment

The limited industry adoption and investment in quantum computing can be attributed to the significant technical challenges that need to be overcome before these systems can be widely adopted. One of the main hurdles is the development of robust and reliable quantum control systems, which are essential for maintaining the fragile quantum states required for quantum computation (Nielsen & Chuang, 2010). Currently, most quantum computing architectures rely on complex and bespoke control systems that require significant expertise to operate.

Another major challenge facing the industry is the need for standardized quantum software frameworks. The lack of standardization in this area makes it difficult for developers to create applications that can run across different quantum platforms (LaRose, 2019). This has resulted in a fragmented market with multiple competing standards and frameworks, which hinders the development of a robust ecosystem.

The high cost of developing and maintaining quantum computing hardware is also a significant barrier to industry adoption. The production of high-quality superconducting qubits, for example, requires specialized equipment and expertise (Gambetta et al., 2017). This has resulted in a limited supply of reliable quantum computing hardware, which in turn has driven up costs.

Furthermore, the lack of clear use cases and applications for quantum computing is also hindering industry adoption. While there have been significant advances in recent years, many potential applications are still in the early stages of development (Bharti et al., 2020). This makes it difficult for companies to justify investments in quantum computing technology.

The limited availability of skilled personnel with expertise in quantum computing is another challenge facing the industry. The development and operation of quantum computing systems require a deep understanding of quantum mechanics, computer science, and engineering (Devitt, 2016). However, there is currently a shortage of professionals with this unique combination of skills.

Quantum Computing Requires Specialized Expertise

Quantum computing requires specialized expertise in quantum mechanics, linear algebra, and quantum programming tools such as the Q# language or the Qiskit framework. This is because quantum computers operate on the principles of superposition, entanglement, and interference, which are fundamentally different from those of classical computers (Nielsen & Chuang, 2010). As a result, developing software for quantum computers demands a deep understanding of these principles and how to harness them to solve complex problems.

One of the key challenges in programming quantum computers is dealing with the noisy nature of quantum systems. Quantum bits, or qubits, are prone to errors due to decoherence, which can quickly destroy the fragile quantum states required for computation (Preskill, 1998). To mitigate this, developers must implement sophisticated error correction techniques, such as quantum error correction codes and noise reduction algorithms. This requires a strong background in quantum information theory and programming skills.

Another critical aspect of quantum computing is the need for specialized hardware. Quantum computers require highly controlled environments to operate, including cryogenic cooling systems and precise control over quantum gates (Ladd et al., 2010). Developing and maintaining this hardware demands expertise in materials science, electrical engineering, and cryogenics. Furthermore, optimizing quantum algorithms for specific hardware architectures is an active area of research, requiring close collaboration between software developers and hardware engineers.

The complexity of quantum computing also necessitates the development of new programming paradigms and tools. Quantum programming languages and frameworks, such as Q# and Qiskit, provide a high-level interface for developing quantum algorithms, but they still require a deep understanding of quantum mechanics and linear algebra (Qiskit, 2020). Additionally, debugging and testing quantum programs pose unique challenges due to the probabilistic nature of quantum computation.

The expertise required for quantum computing extends beyond technical skills. Developing practical applications for quantum computers demands domain-specific knowledge in fields such as chemistry, materials science, and optimization (McClean et al., 2016). This requires collaboration between experts from diverse backgrounds, including physicists, computer scientists, mathematicians, and engineers.

Cybersecurity Risks With Quantum Computing

Quantum computing poses significant cybersecurity risks due to its potential to break certain classical encryption algorithms. The most notable example is Shor’s algorithm, which factors large numbers superpolynomially faster than the best known classical algorithms (Shor, 1997). This has severe implications for public-key cryptography, as many widely used protocols rely on the difficulty of factoring large numbers or of computing discrete logarithms, a problem Shor’s algorithm also solves.
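Why factoring matters can be shown with the textbook-sized RSA key p = 61, q = 53, e = 17. In the sketch below, trial division stands in for the factoring step that Shor's algorithm would perform on real key sizes; once the modulus is factored, the private exponent follows from a modular inverse. The key is completely insecure, the function names are hypothetical, and `pow(e, -1, phi)` requires Python 3.8 or later.

```python
from math import isqrt


def trial_division(n: int):
    """Find a nontrivial factorization of n by brute force (toy sizes only)."""
    for f in range(2, isqrt(n) + 1):
        if n % f == 0:
            return f, n // f
    raise ValueError("n is prime")


def rsa_private_exponent(p: int, q: int, e: int) -> int:
    """Private exponent d = e^-1 mod (p-1)(q-1), computable once n is factored."""
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)


def toy_rsa_demo():
    p, q, e = 61, 53, 17            # textbook toy key: completely insecure
    n = p * q                       # public modulus
    ciphertext = pow(65, e, n)      # anyone can encrypt with the public key (n, e)
    f1, f2 = trial_division(n)      # stand-in for Shor's factoring step
    d = rsa_private_exponent(f1, f2, e)
    return n, d, ciphertext, pow(ciphertext, d, n)
```

`toy_rsa_demo()` returns `(3233, 2753, 2790, 65)`: the modulus, the recovered private exponent, the ciphertext of the message 65, and the decrypted message. Real RSA moduli of 2,048 bits are far beyond trial division or any known classical factoring method, which is the gap Shor's algorithm would close.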

The impact of quantum computing on symmetric key encryption is less clear-cut. Grover’s algorithm speeds up brute-force key search only quadratically, and the best known quantum algorithms for attacking block ciphers are not significantly faster than their classical counterparts (Kutin et al., 2006); a quadratic speedup can be offset by doubling key lengths. However, a future breakthrough could still lead to a significant speedup.
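The size of Grover's advantage for key search is easy to estimate: searching 2^k keys takes about (π/4)·2^(k/2) oracle queries, roughly the work of a classical search over half the key length. The sketch below (hypothetical function names) counts only these queries; it ignores the cost of implementing the cipher as a quantum circuit and the fact that Grover's algorithm parallelizes poorly, both of which make real attacks more expensive.

```python
import math


def grover_queries(key_bits: int) -> float:
    """Approximate oracle queries to find one key among 2**key_bits keys."""
    return math.pi / 4 * 2 ** (key_bits / 2)


def effective_bits(key_bits: int) -> float:
    """log2 of the query count: roughly half the key length."""
    return math.log2(grover_queries(key_bits))


if __name__ == "__main__":
    for bits in (128, 192, 256):
        print(bits, round(effective_bits(bits), 2))
```

For a 128-bit key the estimate is about 2^63.7 queries, and for a 256-bit key about 2^127.7, so a 256-bit key keeps roughly 128 bits of security against this attack.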

Another area of concern is the potential for side-channel attacks on quantum computers. These attacks exploit information about the implementation of a cryptographic algorithm, rather than the algorithm itself. Quantum computers are particularly vulnerable to these types of attacks due to their fragile quantum states (Lidar et al., 2013).

The development of quantum-resistant cryptography is an active area of research. One approach is to use lattice-based cryptography, which is thought to be resistant to attacks by both classical and quantum computers (Regev, 2009). Another approach is to use code-based cryptography, which has been shown to be secure against certain types of quantum attacks (Sendrier, 2013).
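Lattice-based schemes build on problems such as learning with errors (LWE), the basis of Regev's construction: given many noisy linear equations b = A·s + e (mod q), recover the secret s. The sketch below is a toy single-bit encryption in that style, with hypothetical function names and parameters (n = 8, m = 20, q = 257) that are far too small to be secure. It shows why decryption works: the accumulated noise stays below q/4, so rounding recovers the bit.

```python
import random


def keygen(n: int = 8, m: int = 20, q: int = 257, seed: int = 0):
    """Toy LWE key pair. Parameters are illustrative and far too small to be secure."""
    rng = random.Random(seed)
    s = [rng.randrange(q) for _ in range(n)]                       # secret vector
    a = [[rng.randrange(q) for _ in range(n)] for _ in range(m)]   # public matrix
    e = [rng.choice((-1, 0, 1)) for _ in range(m)]                 # small noise
    b = [(sum(x * y for x, y in zip(row, s)) + err) % q for row, err in zip(a, e)]
    return (a, b, q), s


def encrypt(public_key, bit: int, rng: random.Random):
    """Encrypt one bit by summing a random subset of the public equations."""
    a, b, q = public_key
    rows = [i for i in range(len(a)) if rng.random() < 0.5]
    u = [sum(a[i][j] for i in rows) % q for j in range(len(a[0]))]
    v = (sum(b[i] for i in rows) + bit * (q // 2)) % q
    return u, v


def decrypt(secret, ciphertext, q: int) -> int:
    """v - <u, s> equals (sum of a few noise terms) + bit * (q // 2), mod q."""
    u, v = ciphertext
    noisy = (v - sum(x * y for x, y in zip(u, secret))) % q
    # With the default m = 20 and noise in {-1, 0, 1}, noisy stays within 20 of 0 or q // 2.
    return 1 if q // 4 < noisy < 3 * q // 4 else 0
```

Encrypting a bit sums a random subset of the public equations and shifts by q // 2 for a 1; decryption subtracts u·s and checks whether the result lies nearer to 0 or to q/2. Real schemes use much larger dimensions and carefully chosen noise distributions.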

The transition to quantum-resistant cryptography will likely be a complex and time-consuming process. It will require significant changes to existing cryptographic protocols and infrastructure, as well as the development of new standards and guidelines (National Institute of Standards and Technology, 2020).

Quantum computing also raises concerns about the security of random number generators, which are used in many cryptographic applications. Quantum computers can generate truly random numbers more efficiently than classical computers, but they can also be used to predict the output of certain types of random number generators (Herrero-Collantes et al., 2017).

Dependence On Exotic Materials And Hardware

The development of quantum computing relies heavily on the availability of exotic materials with unique properties. For instance, superconducting qubits are built from materials that remain superconducting at their operating temperature, typically around 10 to 20 millikelvin, just above absolute zero (about -273°C). Currently, niobium (critical temperature about 9.2 K) and aluminum (about 1.2 K) are widely used because both superconduct well above this operating temperature and are relatively easy to fabricate.
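A short calculation shows why qubits are cooled to millikelvin temperatures, well below the point at which their materials become superconducting. In thermal equilibrium, a two-level system with transition frequency f is found in its excited state with probability 1/(1 + exp(hf/kT)). The sketch below evaluates this for an assumed qubit frequency of 5 GHz, a typical order of magnitude for superconducting qubits, treating the qubit as an ideal two-level system; the function name is hypothetical.

```python
import math

PLANCK = 6.62607015e-34      # J*s (exact SI value)
BOLTZMANN = 1.380649e-23     # J/K (exact SI value)


def excited_state_population(frequency_hz: float, temperature_k: float) -> float:
    """Thermal-equilibrium probability that a two-level system is excited.

    p1 = 1 / (1 + exp(h*f / (k*T))). Treats the qubit as an ideal two-level
    system in equilibrium with its environment (a simplifying assumption).
    """
    x = PLANCK * frequency_hz / (BOLTZMANN * temperature_k)
    return 1.0 / (1.0 + math.exp(x))
```

At 20 mK the excited-state population is about 6 in a million, while at 1 K it is about 0.44, so the qubit would be close to a random thermal mixture even though niobium is still superconducting at that temperature.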

Another crucial component in quantum computing is the Josephson junction, which consists of two superconductors separated by a thin insulating barrier. The materials used for these junctions must have precise control over their thickness and uniformity to ensure reliable operation. Researchers have explored various materials, including aluminum oxide and niobium nitride, but the optimal choice remains an active area of research.

Quantum computing also relies on advanced hardware components, such as cryogenic refrigeration systems, which are necessary for cooling superconducting qubits to extremely low temperatures. These systems require specialized materials with high thermal conductivity, such as copper and silver, to efficiently transfer heat away from the qubits. Furthermore, the development of quantum error correction codes necessitates the use of complex hardware architectures, including multiple-qubit gates and quantum buses, which demand precise control over material properties.

The reliance on exotic materials and advanced hardware components poses significant challenges for the widespread adoption of quantum computing. The cost and availability of these materials can be a limiting factor, particularly for large-scale quantum systems. Moreover, the development of new materials with tailored properties is an ongoing effort, requiring significant investment in research and development.

The complexity of quantum computing hardware also necessitates the use of sophisticated software tools for simulation, design, and testing. These tools require accurate models of material behavior, which can be challenging to develop due to the intricate interactions between materials at the nanoscale. As a result, researchers must carefully balance the need for advanced materials with the complexity of the resulting hardware systems.

The development of quantum computing is further complicated by the need for precise control over material properties during fabrication. This requires sophisticated manufacturing techniques, such as molecular beam epitaxy and atomic layer deposition, which can be time-consuming and expensive. As a result, researchers are exploring alternative approaches, including the use of 3D printing and other additive manufacturing techniques, to simplify the fabrication process.

Uncertain Economic Benefits Of Quantum Computing

The economic benefits of quantum computing are uncertain due to the lack of clear use cases and the high cost of development. While some companies, such as Google and IBM, have made significant investments in quantum computing research, it is unclear whether these investments will yield tangible returns (Dutton, 2018). A study by the Boston Consulting Group found that only a small fraction of companies are actively exploring the use of quantum computing, and most are still in the early stages of experimentation (BCG, 2020).

One of the main challenges facing the development of quantum computing is the need for significant advances in materials science and engineering. The creation of reliable and scalable quantum processors requires the development of new materials with specific properties, such as superconducting materials that can operate at very low temperatures (Wendin, 2017). However, the development of these materials is a complex and time-consuming process, and it is unclear whether they will be available in the near future.

Another challenge facing quantum computing is the need for significant advances in software and programming. Quantum computers require new types of algorithms and programming languages that are specifically designed to take advantage of their unique properties (Mermin, 2007). However, the development of these algorithms and languages is still in its early stages, and it is unclear whether they will be widely adopted.

The economic benefits of quantum computing are also uncertain due to the potential for job displacement. While some jobs may be created in the development and maintenance of quantum computers, others may be displaced by the increased automation and efficiency that these machines provide (Manyika, 2017). A McKinsey Global Institute study estimated that up to 800 million workers worldwide could be displaced by automation by 2030, although that estimate concerns automation broadly and does not isolate the contribution of quantum computing.

The development of quantum computing also raises significant questions about intellectual property and patent law. The creation of new algorithms and programming languages for quantum computers may raise complex issues around ownership and licensing (Lemley, 2019). How these issues will be resolved remains unclear.

The uncertainty surrounding the economic benefits of quantum computing has led some experts to caution against over-investing in this technology. While some companies may see significant returns on their investments, others may not (Dutton, 2018). An analysis in the Harvard Business Review advised companies to approach quantum computing with a “wait and see” attitude rather than rushing to invest in this unproven technology (HBR, 2020).