The concept of computing has undergone significant transformations since the invention of the first electronic computers in the mid-20th century. Computers have shrunk from bulky machines that occupied entire rooms to laptops and smartphones that fit in a bag or the palm of a hand, and they have become an integral part of modern life. However, as we continue to push the boundaries of what is possible with classical computing, a new frontier has emerged: quantum computing.

At its core, a quantum computer is a device that uses the principles of quantum mechanics to perform calculations and operations on data. This is in stark contrast to classical computers, which store and process information in bits that are always either 0 or 1. Quantum computers instead employ qubits, which can exist in a superposition of 0 and 1. Superposition, combined with entanglement and interference, lets quantum algorithms solve certain problems dramatically faster than the best known classical methods, in some cases exponentially faster. For such problems, a sufficiently large quantum computer could deliver answers that would take an impractically long time to obtain with classical computers.

One of the most discussed applications of quantum computing is in cryptography and cybersecurity. Classical computers could in principle break any encryption scheme by brute force, but for well-designed schemes the required time is astronomically long. A large quantum computer would change this for widely used public-key algorithms such as RSA and elliptic-curve cryptography, which Shor’s algorithm could break efficiently. Symmetric ciphers are affected less, since Grover’s algorithm offers only a quadratic speedup for key search. Quantum mechanics also enables new forms of secure communication, such as quantum key distribution, whose security rests on physical principles rather than on assumptions about computational difficulty.

Classical Computers Vs Quantum Computers

Classical computers process information using bits, which are either 0 or 1. Quantum computers process information using qubits, which can exist in a superposition of 0 and 1. When exploited by suitable algorithms, this property allows quantum computers to perform certain calculations much faster than classical computers.

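In a classical computer, a bit is typically represented by a transistor that is either on (1) or off (0). In a quantum computer, a qubit is represented by a physical two-level quantum system, such as a superconducting circuit, a trapped ion, a photon, or an electron spin, that can be in a superposition of its two levels. A single qubit does not by itself perform many calculations at once, because measuring it yields only one bit. The advantage comes from two things. First, a register of many qubits has a joint state that can span exponentially many combinations of 0s and 1s. Second, algorithms use interference to turn this into a useful answer.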

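Quantum computers also use another phenomenon called entanglement, in which the state of a group of qubits cannot be described qubit by qubit. Measurement outcomes on entangled qubits are correlated in ways no classical system can reproduce, even when the qubits are separated by large distances, although these correlations cannot be used to send signals faster than light. Because of entanglement, describing the general state of n qubits classically requires 2^n complex numbers. This is why simulating even modest quantum computers on classical hardware quickly becomes infeasible.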
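The 2^n growth can be made concrete with a minimal pure-Python sketch of a state-vector simulator, the usual way qubits are simulated classically. The helper names (`apply_gate`, `hadamard_all`) are hypothetical. The sketch assumes that qubit 0 is the least significant bit of a basis-state index, and its memory estimate assumes 16 bytes per double-precision complex amplitude.

```python
import math

H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]   # Hadamard gate

def apply_gate(state, gate, target):
    """Apply a 2x2 gate to one qubit of a state vector (qubit 0 is the least significant bit)."""
    new_state = [0j] * len(state)
    for index, amp in enumerate(state):
        bit = (index >> target) & 1
        for out_bit in (0, 1):
            out_index = (index & ~(1 << target)) | (out_bit << target)
            new_state[out_index] += gate[out_bit][bit] * amp
    return new_state

def hadamard_all(n):
    """Start in 00...0 and apply H to every qubit, giving an equal superposition of 2**n states."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    for q in range(n):
        state = apply_gate(state, H, q)
    return state

state = hadamard_all(3)
print(len(state))                                                    # 8 amplitudes for 3 qubits
print(all(math.isclose(abs(a), 1 / math.sqrt(8)) for a in state))    # True

# Memory for a dense state vector at 16 bytes per complex amplitude:
for n in (10, 30, 50):
    print(n, "qubits:", 2 ** n * 16, "bytes")
```

Each extra qubit doubles the list. This is why dense state-vector simulation becomes infeasible somewhere in the range of a few dozen qubits.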

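Classical computers are not strictly sequential, because modern processors have many cores and run many operations in parallel. Each classical operation, however, acts on definite values. A quantum computer, by contrast, can apply an operation to a superposition of many inputs at once. Because measurement returns only one outcome, the speedup for certain types of computation comes from algorithms that use superposition, entanglement, and interference to make the desired answer likely to be observed.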

However, building and maintaining a quantum computer is extremely challenging due to the fragile nature of qubits, which are prone to decoherence, or loss of their quantum properties. This requires sophisticated error correction techniques and advanced cooling systems to maintain the quantum state.

Currently, most quantum computers are small-scale and can only perform limited tasks. Researchers are actively working on scaling up these systems to tackle harder problems, such as simulating molecular interactions or optimizing large systems.

Bits And Qubits: Fundamental Differences

A classical bit is the fundamental unit of information in classical computing, and it is always in one of two distinct states, 0 or 1. In contrast, a qubit, short for quantum bit, is the fundamental unit of information in quantum computing. A qubit can exist in a superposition of 0 and 1, described by two complex amplitudes.
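Standard Dirac notation writes a single qubit's state as a normalized pair of complex amplitudes. This notation is an addition here, since the article otherwise writes states as plain 0 and 1:

```latex
|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle, \qquad \alpha, \beta \in \mathbb{C}, \qquad |\alpha|^2 + |\beta|^2 = 1
```

Reading the qubit gives 0 with probability |α|² and 1 with probability |β|². For example, α = β = 1/√2 gives each outcome half the time.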

The no-cloning theorem, a fundamental result in quantum mechanics, states that an arbitrary unknown quantum state cannot be copied exactly, whereas classical bits can be duplicated freely. This theorem has far-reaching implications for quantum computing and cryptography. An eavesdropper cannot copy qubits in transit without disturbing them, and this underpins the security of quantum key distribution protocols.
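The standard argument for the theorem uses only the linearity of quantum mechanics. Suppose some unitary U could copy any state into a blank qubit prepared in 0. Checking U on 0, on 1, and on their equal superposition gives a contradiction:

```latex
\begin{aligned}
U\,|0\rangle|0\rangle &= |0\rangle|0\rangle, \qquad U\,|1\rangle|0\rangle = |1\rangle|1\rangle \\
U\,\tfrac{1}{\sqrt{2}}\big(|0\rangle + |1\rangle\big)|0\rangle &= \tfrac{1}{\sqrt{2}}\big(|00\rangle + |11\rangle\big) \quad \text{(by linearity)} \\
\text{but a copy would be}\quad |{+}\rangle|{+}\rangle &= \tfrac{1}{2}\big(|00\rangle + |01\rangle + |10\rangle + |11\rangle\big) \neq \tfrac{1}{\sqrt{2}}\big(|00\rangle + |11\rangle\big)
\end{aligned}
```

Copying works for the two basis states. By linearity, however, the same U turns the superposition into an entangled state rather than two copies, so no such U exists.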

Qubits are extremely sensitive to their environment, which leads to decoherence, a process in which a qubit’s superposition is lost through interactions with the outside world. Classical bits, by contrast, are far more robust against environmental influences, making them more reliable for storing and processing information.

The principles of quantum mechanics also allow qubits to be entangled, meaning that the measurement outcomes of one qubit are correlated with those of another, even when the two are separated by large distances. Together with superposition and interference, entanglement is one of the resources that let quantum algorithms outperform classical ones on certain problems.

Another key difference between bits and qubits lies in their measurement outcomes. Reading a classical bit always yields its stored value, 0 or 1. Measuring a qubit in superposition yields 0 or 1 at random, with probabilities given by the squared magnitudes of its amplitudes in the wave function. The measurement then leaves the qubit in the state corresponding to the observed outcome.
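This probabilistic rule, known as the Born rule, can be illustrated with a short simulation. The sketch below uses a hypothetical `measure` helper and models an ideal measurement. It uses Python's pseudo-random generator in place of genuine quantum randomness.

```python
import math
import random

def measure(alpha: complex, beta: complex, shots: int, seed: int = 0) -> dict:
    """Simulate repeated 0/1 measurements of the state alpha|0> + beta|1>."""
    if not math.isclose(abs(alpha) ** 2 + abs(beta) ** 2, 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes must satisfy |alpha|^2 + |beta|^2 = 1")
    p0 = abs(alpha) ** 2                   # Born rule: probability of reading 0
    rng = random.Random(seed)              # fixed seed so runs are reproducible
    counts = {0: 0, 1: 0}
    for _ in range(shots):
        counts[0 if rng.random() < p0 else 1] += 1
    return counts

# A qubit prepared in 0 behaves like a classical bit: always 0.
print(measure(1, 0, 1000))                                  # {0: 1000, 1: 0}
# An equal superposition gives 0 and 1 roughly half the time each.
print(measure(1 / math.sqrt(2), 1 / math.sqrt(2), 1000))
```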

The manipulation of qubits requires sophisticated control over the quantum states, which is achieved through precise application of microwave pulses, optical signals, or other forms of electromagnetic radiation. This level of control is not necessary for classical bits, where simple voltage levels suffice to represent 0 and 1.
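A short sketch shows how pulse timing controls a qubit. It assumes an idealized resonant drive with no decoherence. Under that assumption, a pulse of duration t at angular Rabi frequency Ω rotates the qubit by θ = Ωt, and the probability of reading 1 starting from 0 is sin²(θ/2). The 10 MHz Rabi frequency and the helper names are illustrative assumptions, not parameters of any particular device.

```python
import math

def rx(theta):
    """Ideal rotation about the x axis by angle theta (resonant drive, rotating frame)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -1j * s], [-1j * s, c]]

def excited_population(rabi_frequency, duration):
    """Probability of reading 1 after driving a qubit that starts in 0."""
    theta = rabi_frequency * duration      # pulse area (rotation angle)
    amp1 = rx(theta)[1][0]                 # amplitude of 1 when starting from 0
    return abs(amp1) ** 2                  # equals sin(theta / 2) ** 2

omega = 2 * math.pi * 10e6      # assumed angular Rabi frequency (10 MHz), illustrative only
pi_pulse = math.pi / omega      # duration of a "pi pulse", about 50 ns here
print(pi_pulse)
print(excited_population(omega, pi_pulse))       # close to 1: the qubit is flipped
print(excited_population(omega, pi_pulse / 2))   # close to 0.5: an equal superposition
```

Up to a phase, the π pulse acts as an X gate. The half-length pulse prepares the equal superposition that a Hadamard gate also produces from 0.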

Superposition And Entanglement Explained

In classical computing, information is stored in bits, which have a value of either 0 or 1. In quantum computing, information is stored in qubits, which can exist in a superposition of 0 and 1. A single qubit is not simply a more powerful bit, however, because measuring it still yields one bit of information. The power of superposition appears when many qubits are combined, because their joint state can involve exponentially many combinations of values at once.

Superposition is a fundamental principle of quantum mechanics, in which a quantum system exists in a combination of several states at once. In the standard formulation, a particle at the atomic and subatomic level does not generally have definite values for properties such as spin until they are measured. Its state is instead described by a wave function that assigns a complex amplitude to each possible outcome. The squared magnitude of each amplitude gives the probability of obtaining that outcome.

When two or more qubits are entangled, their joint state cannot be written as a combination of independent single-qubit states, and their measurement outcomes are correlated. Such correlations can be used to establish a shared secret key between two parties, enabling secure communication over long distances. Entanglement is also a crucial component in many quantum protocols, including quantum teleportation and superdense coding.
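The simplest entangled state is the Bell state (|00⟩ + |11⟩)/√2, which a Hadamard gate followed by a CNOT prepares. The sketch below prepares it and samples joint measurements. It uses hypothetical helper names and stores amplitudes in the basis order 00, 01, 10, 11, with the first qubit written first.

```python
import math
import random
from collections import Counter

S2 = 1 / math.sqrt(2)

def h_on_first(state):
    """Hadamard on the first qubit; amplitudes ordered 00, 01, 10, 11."""
    a00, a01, a10, a11 = state
    return [S2 * (a00 + a10), S2 * (a01 + a11), S2 * (a00 - a10), S2 * (a01 - a11)]

def cnot(state):
    """CNOT with the first qubit as control: swaps the amplitudes of 10 and 11."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def sample(state, shots, seed=0):
    """Draw joint measurement outcomes with Born-rule probabilities."""
    rng = random.Random(seed)
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(["00", "01", "10", "11"], weights=probs, k=shots)

bell = cnot(h_on_first([1, 0, 0, 0]))     # start in 00
print(bell)                               # only the 00 and 11 amplitudes are nonzero
print(Counter(sample(bell, 1000)))        # only '00' and '11' appear, roughly 500 each
```

Perfect agreement in this single basis could also come from a shared classical coin flip. What sets entanglement apart is that strong correlations persist when both parties measure in other bases too, which this sketch does not show.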

Entanglement is a fragile property that requires careful control over the environment to maintain. Any interaction with the environment can cause decoherence, which destroys the entangled state and reduces it to a classical mixture of states. This makes it challenging to scale up entangled systems to larger numbers of qubits.
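A common textbook picture of decoherence is pure dephasing. The off-diagonal entries (coherences) of a qubit's density matrix decay while the diagonal populations stay fixed, which turns a superposition into a classical 50/50 mixture. The sketch below assumes a simple exponential decay with a coherence time T2 in arbitrary time units. Real devices show more complicated noise, and the function names are hypothetical.

```python
import math

def dephase(rho, t, t2):
    """Pure dephasing: coherences decay as exp(-t / T2); populations are unchanged."""
    decay = math.exp(-t / t2)
    return [[rho[0][0], rho[0][1] * decay],
            [rho[1][0] * decay, rho[1][1]]]

def purity(rho):
    """Tr(rho^2): 1 for a pure state, 0.5 for a maximally mixed qubit."""
    return sum(rho[i][j] * rho[j][i] for i in range(2) for j in range(2)).real

plus = [[0.5, 0.5], [0.5, 0.5]]     # density matrix of the equal superposition of 0 and 1
for t in (0.0, 0.5, 1.0, 5.0):
    # purity falls from 1 toward 0.5 as the coherences vanish
    print(t, round(purity(dephase(plus, t, t2=1.0)), 4))
```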

Quantum computers rely on superposition and entanglement, together with interference, to act on many possible inputs at once. By manipulating qubits in a controlled manner, quantum algorithms can solve certain problems much faster than their classical counterparts. For example, Shor’s algorithm can factor large numbers superpolynomially faster than any known classical algorithm.

The ability to manipulate and control qubits is essential for the development of practical quantum computers. This requires advanced techniques for preparing, measuring, and manipulating qubits, as well as robust methods for error correction and noise reduction.

Quantum Gates And Circuit Diagrams

Quantum gates are the fundamental building blocks of quantum computing, and they manipulate qubits to perform specific operations. A quantum gate is a reversible operation on one or more qubits, described mathematically by a unitary matrix that acts on the qubits’ amplitudes. Gates are combined into quantum circuits, which are drawn as circuit diagrams showing the sequence of operations performed on the qubits.

The most basic quantum gate is the Pauli-X gate, also known as the bit-flip gate, which flips the state of a qubit from 0 to 1 or vice versa. Another essential gate is the Hadamard gate, denoted H, which turns a qubit in state 0 or 1 into an equal superposition of 0 and 1. The phase gate, S, is another important gate. It leaves the 0 component unchanged and multiplies the 1 component by i, which is a phase shift of π/2.
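These three single-qubit gates can be written as 2×2 matrices acting on the pair (amplitude of 0, amplitude of 1). The sketch below uses plain Python lists rather than a linear-algebra library. The `apply` helper is a hypothetical name.

```python
import math

S2 = 1 / math.sqrt(2)
X = [[0, 1], [1, 0]]          # bit flip
H = [[S2, S2], [S2, -S2]]     # Hadamard
S = [[1, 0], [0, 1j]]         # phase gate: multiplies the 1 component by i

def apply(gate, state):
    """Multiply a 2x2 gate matrix by a state vector [amp0, amp1]."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

zero = [1, 0]
print(apply(X, zero))         # [0, 1]: 0 flipped to 1
plus = apply(H, zero)
print(plus)                   # both amplitudes equal 1/sqrt(2)
print(apply(S, plus))         # the 1 amplitude becomes i/sqrt(2)
print(apply(H, plus))         # H undoes itself: back to [1, 0] up to rounding
```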

Quantum gates can be combined to perform more complex operations. The controlled-NOT gate, also known as the CNOT gate, flips the state of a target qubit if and only if a control qubit is 1. The Toffoli gate, also known as the CCNOT gate, is a three-qubit gate that flips a target qubit only if both of its control qubits are 1.
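On the basis states 0 and 1, CNOT and Toffoli act as reversible permutations, so their behaviour can be listed as truth tables. On superpositions they act on each basis component in the same way, by linearity. The sketch below prints those tables. Its function names are hypothetical.

```python
from itertools import product

def cnot(control: int, target: int) -> tuple:
    """CNOT on basis states: flip the target bit when the control bit is 1."""
    return control, target ^ control

def toffoli(c1: int, c2: int, target: int) -> tuple:
    """Toffoli (CCNOT): flip the target only when both controls are 1."""
    return c1, c2, target ^ (c1 & c2)

for c, t in product((0, 1), repeat=2):
    print("CNOT", (c, t), "->", cnot(c, t))

# With the target initialised to 0, Toffoli writes the AND of its controls into the target.
for a, b in product((0, 1), repeat=2):
    print("Toffoli", (a, b, 0), "->", toffoli(a, b, 0))
```

Both gates are their own inverses, and the Toffoli gate is known to be universal for reversible classical logic.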

Quantum circuit diagrams provide a visual representation of the sequence of quantum gates applied to qubits. These diagrams consist of horizontal lines representing qubits and symbols representing quantum gates. The order of operations is read from left to right, with each gate applied sequentially to the qubits.
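The sketch below illustrates reading a circuit left to right. It encodes a three-qubit circuit (a Hadamard followed by two CNOTs, which prepares the entangled GHZ state) as an ordered list of gates and applies them in that order. The tuple format for gates and the `run` helper are hypothetical conventions chosen for this example. Bit k of a basis-state index holds qubit k.

```python
import math

S2 = 1 / math.sqrt(2)

# Circuit diagram, time running left to right (* = control, X = target):
#   q0: --H--*-----
#   q1: -----X--*--
#   q2: --------X--
CIRCUIT = [("H", 0), ("CNOT", 0, 1), ("CNOT", 1, 2)]

def run(circuit, n):
    """Apply the gates in list order (left to right in the diagram), starting from 00...0."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    for gate in circuit:
        new = [0j] * len(state)
        for i, amp in enumerate(state):
            if amp == 0:
                continue
            if gate[0] == "H":
                q = gate[1]
                bit = (i >> q) & 1
                new[i] += (-S2 if bit else S2) * amp       # diagonal entry of H
                new[i ^ (1 << q)] += S2 * amp              # off-diagonal entry of H
            elif gate[0] == "CNOT":
                control, target = gate[1], gate[2]
                j = i ^ (1 << target) if (i >> control) & 1 else i
                new[j] += amp
        state = new
    return state

state = run(CIRCUIT, 3)
for index, amp in enumerate(state):
    if abs(amp) > 1e-12:
        print(format(index, "03b"), round(abs(amp) ** 2, 3))   # printed as q2 q1 q0
```

Only 000 and 111 survive, each with probability 0.5. Swapping the order of the gates in the list changes the result, just as moving gates along a diagram's time axis would.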

The development of quantum algorithms relies heavily on the design of efficient quantum circuits. For example, Shor’s algorithm for factorizing large numbers relies on a complex sequence of quantum gates to perform the necessary calculations. Similarly, Grover’s algorithm for searching an unsorted database uses a specific sequence of quantum gates to achieve quadratic speedup over classical algorithms.

The implementation of quantum gates and circuits is an active area of research, with various technologies being explored, including superconducting qubits, ion traps, and optical lattices. The development of robust and scalable quantum gate implementations is crucial for the realization of practical quantum computers.

Measuring Quantum States And Errors

Measuring quantum states is a crucial task in quantum computing, as it allows for the verification of the correct operation of quantum gates and the detection of errors that may occur during computation.

One way to measure quantum states is quantum state tomography, which reconstructs the density matrix of a quantum system from measurement outcomes collected in several different measurement bases. The reconstruction can use various techniques, including linear regression estimation and maximum likelihood estimation. The number of measurement settings grows exponentially with the number of qubits (3^n Pauli-basis settings for n qubits), so full tomography is practical only for small systems. It has been used to characterize the quantum states of up to about 10 qubits with high accuracy.
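For a single qubit, linear-inversion tomography is short enough to sketch. First, estimate the expectation values of the Pauli observables X, Y, and Z from repeated ±1 measurements. Then rebuild the density matrix as ρ = (I + ⟨X⟩X + ⟨Y⟩Y + ⟨Z⟩Z)/2. The sketch below simulates the measurement counts for an assumed test state rather than using real data, and its helper names are hypothetical. With finite data, linear inversion can produce a slightly unphysical matrix, which is one reason maximum likelihood estimation is often preferred.

```python
import random

def estimate_expectation(true_value, shots, rng):
    """Simulate measuring a Pauli observable: outcome +1 with probability (1 + <P>) / 2."""
    p_plus = (1 + true_value) / 2
    n_plus = sum(1 for _ in range(shots) if rng.random() < p_plus)
    return (2 * n_plus - shots) / shots        # (n_plus - n_minus) / shots

def reconstruct(x, y, z):
    """Linear inversion: rho = (I + x X + y Y + z Z) / 2 as a 2x2 complex matrix."""
    return [[(1 + z) / 2, (x - 1j * y) / 2],
            [(x + 1j * y) / 2, (1 - z) / 2]]

rng = random.Random(42)
true_bloch = (1.0, 0.0, 0.0)      # <X>, <Y>, <Z> of the equal superposition of 0 and 1
estimates = [estimate_expectation(v, 5000, rng) for v in true_bloch]
rho = reconstruct(*estimates)
for row in rho:
    print([complex(round(v.real, 3), round(v.imag, 3)) for v in row])   # close to 0.5 everywhere
```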

Another approach uses entanglement witnesses, which are observables whose measured expectation value can certify the presence of entanglement between two or more systems without reconstructing the full state. Entanglement witnesses have been used to detect entanglement in systems of up to 20 qubits.
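A standard two-qubit example is the witness W = I/2 - |Φ+⟩⟨Φ+|, where |Φ+⟩ = (|00⟩ + |11⟩)/√2. A negative expectation value Tr(Wρ) certifies entanglement, while a non-negative value is inconclusive. The sketch below evaluates the witness for a few illustrative states. These include a Bell state mixed with white noise, ρ = p|Φ+⟩⟨Φ+| + (1 - p)I/4. Helper names are hypothetical, and a small tolerance absorbs floating-point rounding.

```python
import math

S2 = 1 / math.sqrt(2)
PHI_PLUS = [S2, 0, 0, S2]      # (|00> + |11>)/sqrt(2), basis order 00, 01, 10, 11

def overlap_sq(a, b):
    """|<a|b>|^2 for two 4-component state vectors."""
    inner = sum(x.conjugate() * y for x, y in zip(a, b))
    return abs(inner) ** 2

def witness_pure(psi):
    """Tr(W |psi><psi|) with W = I/2 - |Phi+><Phi+|."""
    return 0.5 - overlap_sq(PHI_PLUS, psi)

def witness_noisy_bell(p):
    """Tr(W rho) for rho = p |Phi+><Phi+| + (1 - p) I/4."""
    fidelity = p + (1 - p) / 4
    return 0.5 - fidelity

def detects(value, tol=1e-9):
    """Only a clearly negative value certifies entanglement."""
    return value < -tol

print(detects(witness_pure(PHI_PLUS)))          # True: Bell state
print(detects(witness_pure([1, 0, 0, 0])))      # False: product state 00
for p in (0.2, 1 / 3, 0.5):
    print(p, detects(witness_noisy_bell(p)))    # detected only for p > 1/3
```

For this noisy Bell state the witness fires exactly when p > 1/3, which is also where such states become entangled. For other states a witness can miss entanglement, so a non-negative result proves nothing.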

Quantum error correction is also essential in quantum computing, because it protects quantum information from decoherence and from errors caused by unwanted interactions with the environment. One popular approach is the surface code, which encodes each logical qubit in a 2D grid of physical qubits. It repeatedly measures parity checks between neighbours to locate errors without measuring the encoded information directly. Experiments on superconducting processors have begun to show the logical error rate of a surface-code qubit decreasing as the code grows.
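The surface code is too large for a short example. The idea it builds on, measuring parity checks (syndromes) instead of the data itself, can be seen in the three-qubit bit-flip repetition code, which is swapped in here as the simplest case. This sketch assumes that only bit-flip errors occur and tracks them classically. It ignores phase errors, which the surface code also handles. The function names are hypothetical.

```python
import random

def encode(bit):
    """Repetition encoding of a logical bit: 0 -> 000, 1 -> 111."""
    return [bit, bit, bit]

def syndrome(block):
    """Parities of qubits (0,1) and (1,2): they locate a flip without revealing the logical value."""
    return (block[0] ^ block[1], block[1] ^ block[2])

# Which qubit to flip back for each syndrome (None means no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(block):
    fix = CORRECTION[syndrome(block)]
    if fix is not None:
        block[fix] ^= 1
    return block

def logical_error_rate(p, trials=50_000, seed=0):
    """Flip each physical bit independently with probability p, decode, count logical failures."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        block = encode(0)
        for i in range(3):
            if rng.random() < p:
                block[i] ^= 1
        if correct(block) != [0, 0, 0]:
            failures += 1
    return failures / trials

for p in (0.01, 0.1):
    # simulated rate vs the exact value 3p^2 - 2p^3 (two or three flips defeat the code)
    print(p, logical_error_rate(p), 3 * p**2 - 2 * p**3)
```

In this toy model, encoding lowers the error rate whenever p < 1/2, because 3p² - 2p³ is then smaller than p.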

A related approach is topological quantum computation, which would store information in non-Abelian anyons so that the physics of the system itself protects it. The surface code is also a topological code, but it is built from ordinary qubits and relies on active correction. Hardware based on non-Abelian anyons is still at an experimental stage.

Machine learning can also assist quantum error correction. Neural networks and Bayesian methods have been used as decoders that infer the most likely error from measured syndromes, and such decoders have been demonstrated on small quantum computers with up to 5 qubits.

Measuring quantum states and correcting errors are essential tasks in building reliable and scalable quantum computers.

Quantum Parallelism And Speedup

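Quantum parallelism is a fundamental concept in quantum computing: a quantum computer can apply a function to a superposition of all its possible inputs in a single step. Combined with interference, this can yield large speedups over classical computers for specific problems, and in some cases the speedup is exponential.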

A classical computer, even one with many processor cores, works on definite values, so evaluating a function on many inputs means computing each result separately. A quantum computer can act on a superposition of exponentially many inputs at once, thanks to superposition and entanglement. A measurement, however, reveals only one outcome, so this parallelism is useful only when an algorithm can concentrate probability on the answer. Problems with that structure, such as simulating quantum systems and factoring large numbers, are the most promising targets. The benefits for general optimization problems are less clear.

Quantum parallelism relies on quantum interference. The amplitudes leading to incorrect answers cancel out while those leading to correct answers reinforce each other, so the correct answer is likely to be observed on measurement. Quantum algorithms such as Shor’s algorithm for factorization and Grover’s algorithm for search exploit this phenomenon. Shor’s algorithm is superpolynomially faster than the best known classical factoring algorithms, and Grover’s algorithm offers a quadratic speedup over classical search. Both are therefore relevant to cryptography and data analysis.
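Deutsch’s algorithm is the smallest algorithm that shows parallelism and interference working together. It is used here as a stand-in because Shor’s and Grover’s algorithms are too large for a short example. Given a function f from {0, 1} to {0, 1}, it decides whether f is constant or balanced with a single call to f, where a classical program needs two. The sketch below simulates the two-qubit circuit with plain Python lists. Helper names are hypothetical, and the first qubit (the input x) is stored as the high bit of each basis index.

```python
import math

S2 = 1 / math.sqrt(2)

def h(state, qubit):
    """Hadamard on qubit 0 (x, high bit) or qubit 1 (y, low bit) of a 2-qubit state."""
    new = [0.0] * 4
    shift = 1 if qubit == 0 else 0
    for i, amp in enumerate(state):
        bit = (i >> shift) & 1
        new[i] += (-S2 if bit else S2) * amp       # diagonal entry of H
        new[i ^ (1 << shift)] += S2 * amp          # off-diagonal entry of H
    return new

def oracle(state, f):
    """U_f |x, y> = |x, y XOR f(x)>: one call to f, applied to every basis state at once."""
    new = [0.0] * 4
    for i, amp in enumerate(state):
        x, y = i >> 1, i & 1
        new[(x << 1) | (y ^ f(x))] += amp
    return new

def deutsch(f):
    state = [0.0, 1.0, 0.0, 0.0]        # x = 0, y = 1
    state = h(h(state, 0), 1)           # superposition over both inputs
    state = oracle(state, f)            # a single call to f
    state = h(state, 0)                 # interference on the input register
    p_x1 = abs(state[2]) ** 2 + abs(state[3]) ** 2
    return "balanced" if p_x1 > 0.5 else "constant"

for name, f in [("f(x)=0", lambda x: 0), ("f(x)=1", lambda x: 1),
                ("f(x)=x", lambda x: x), ("f(x)=1-x", lambda x: 1 - x)]:
    print(name, deutsch(f))
```

For a constant f, the two paths interfere constructively on x = 0. For a balanced f, they cancel there and reinforce on x = 1, so one measurement gives the answer with certainty.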

Quantum parallelism also has potential implications for machine learning and artificial intelligence. Researchers have proposed quantum machine learning algorithms that could speed up subroutines such as linear algebra, under certain assumptions about how data is loaded into the quantum computer. Whether these translate into practical advantages for tasks like pattern recognition and classification remains an open question. One reason is that loading large classical datasets into quantum states can itself be costly.

However, the realization of quantum parallelism is not without its challenges. Quantum computers are prone to errors due to the noisy nature of quantum systems, which can quickly accumulate and destroy the fragile quantum states required for parallel processing. To mitigate these effects, researchers have developed error correction and error suppression techniques, such as quantum error correction codes and dynamical decoupling.

Despite these challenges, the potential benefits of quantum parallelism make it an active area of research, with ongoing efforts to develop more robust and scalable quantum computing architectures that can fully harness the power of quantum parallelism.

Shor’s Algorithm And Factoring Large Numbers

Shor’s algorithm, a quantum algorithm discovered by mathematician Peter Shor in 1994, can factor large numbers superpolynomially faster than any known classical algorithm, a speedup often described as exponential. This breakthrough has significant implications for cryptography, as widely used public-key schemes such as RSA rely on the difficulty of factoring large composite numbers.

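The algorithm uses quantum parallelism and interference to find the period of the function f(x) = a^x mod N. Here N is the number to be factored and a is a randomly chosen number that shares no factor with N. The quantum part has three main steps:

1. A register of qubits is placed in a superposition of many values of x.
2. Modular exponentiation computes a^x mod N for all of these values at once.
3. A quantum Fourier transform turns the periodic structure into an interference pattern, from which the period r can be read out by measurement.

A classical computer then obtains factors of N as the greatest common divisors of N with a^(r/2) - 1 and a^(r/2) + 1.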
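The classical skeleton of the algorithm fits in a few lines. In the sketch below, the period r of a^x mod N is found by brute force. That step takes time proportional to the period, and it is exactly the step a quantum computer would perform with the quantum Fourier transform. Everything else is classical post-processing. Function names are hypothetical, and the sketch is only practical for tiny numbers such as N = 15.

```python
from math import gcd

def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1 (assumes gcd(a, n) == 1).
    Brute force here; this is the step Shor's algorithm does with a quantum Fourier transform."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_period(n, a):
    """Classical post-processing: turn the period of a^x mod n into factors of n."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None               # odd period: retry with a different a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None               # a^(r/2) = -1 mod n: retry with a different a
    return gcd(y - 1, n), gcd(y + 1, n)

print(find_period(7, 15))         # 4, since 7^4 = 2401 = 160*15 + 1
print(factor_from_period(15, 7))  # (3, 5)
```

When the method returns None, that choice of a has failed, and the procedure is repeated with another random a.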

A quantum computer is essential for running Shor’s algorithm. Its qubits can hold a superposition of exponentially many values of x at once, and the quantum Fourier transform can extract the period of f from that superposition with a modest number of measurements. This is what makes it possible to factor large numbers efficiently.

Classical computers, by contrast, must find factors without access to such interference effects. The best known classical algorithm, the general number field sieve, runs in time that grows faster than any polynomial in the number of digits, though slower than a pure exponential. This makes it impractical for numbers of the size used in cryptography.

Shor’s algorithm has been demonstrated experimentally on small-scale quantum systems, and its feasibility has been extensively studied theoretically. However, the implementation of Shor’s algorithm on a large scale remains an active area of research, as it requires the development of robust and scalable quantum computing architectures.

The potential impact of Shor’s algorithm on cryptography is therefore significant. A large-scale quantum computer capable of running it could break RSA. Through a closely related algorithm for discrete logarithms, it could also break elliptic-curve cryptography. This risk is driving the development and standardization of quantum-resistant (post-quantum) cryptographic algorithms.

Grover’s Algorithm And Quantum Search

Grover’s algorithm is a quantum algorithm that provides a quadratic speedup over classical algorithms for searching an unsorted database. The algorithm was first proposed by Lov Grover in 1996 and has since been widely studied and implemented.

The algorithm starts from an equal superposition of all N database indices and repeatedly applies two operations. An oracle flips the sign of the amplitude of the target element, and a diffusion step reflects every amplitude about the mean. Each iteration increases the amplitude of the target element while decreasing the others, so after about (π/4)√N iterations a measurement returns the target with high probability. The oracle acts on all indices in superposition, but the speedup comes from this gradual amplitude amplification rather than from checking every entry at once.

The time complexity of Grover’s algorithm is O(√N) oracle queries, where N is the number of elements in the database, compared with O(N) queries for a classical linear search. This quadratic speedup makes it particularly useful for searching large databases, where the difference between √N and N can be significant. For a million elements, roughly 800 Grover iterations replace about half a million classical checks on average.
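Grover’s loop can be simulated directly for small N. In the standard formulation, each iteration flips the sign of the marked item’s amplitude (the oracle) and then reflects all amplitudes about their mean (diffusion). The sketch below stores the N real amplitudes in a list and uses a hypothetical `grover` helper with one marked index. It runs ⌊(π/4)√N⌋ iterations, a common near-optimal choice.

```python
import math

def grover(n_qubits, marked, iterations=None):
    """Simulate Grover search over N = 2**n_qubits items with one marked index.
    Returns the probability of measuring the marked item."""
    n = 2 ** n_qubits
    if iterations is None:
        iterations = math.floor(math.pi / 4 * math.sqrt(n))   # near-optimal count
    amps = [1 / math.sqrt(n)] * n                              # uniform superposition
    for _ in range(iterations):
        amps[marked] = -amps[marked]                           # oracle: flip the marked sign
        mean = sum(amps) / n
        amps = [2 * mean - a for a in amps]                    # diffusion: inversion about the mean
    return amps[marked] ** 2

print(grover(3, marked=5))        # about 0.945 after 2 iterations (N = 8)
for n_qubits in (10, 20):
    n = 2 ** n_qubits
    print(n, "items:", math.floor(math.pi / 4 * math.sqrt(n)), "Grover iterations vs about",
          n // 2, "classical checks on average")
```

Running more iterations than this overshoots and lowers the success probability again. The iteration count must therefore be chosen from N in advance.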

One of the key challenges in implementing Grover’s algorithm is the need for high-fidelity quantum gates and low error rates. As the number of iterations required to search the database increases, so too does the sensitivity of the algorithm to errors. This has led to significant research efforts focused on developing robust and fault-tolerant implementations of the algorithm.

Grover’s algorithm has been demonstrated experimentally using a variety of quantum systems, including trapped ions, superconducting qubits, and photons. These small-scale experiments have reproduced the expected amplification of the target element’s probability, which illustrates the algorithm’s behaviour. At these sizes, however, they do not yet deliver a practical speedup for real-world applications.

Theoretical studies have also explored the limitations and extensions of Grover’s algorithm, including its application to more complex search problems and its relationship to other quantum algorithms.

Quantum Error Correction And Fidelity

Quantum computers are prone to errors due to the noisy nature of quantum systems, which can lead to decoherence and loss of quantum information. To mitigate this issue, quantum error correction codes have been developed to detect and correct errors in quantum computations.

One popular approach to quantum error correction is the surface code, which encodes logical qubits in a 2D lattice of physical qubits with checks between nearest neighbours. The surface code has a comparatively high error threshold of roughly 0.5-1% per operation, depending on the noise model. Suppose every physical gate, measurement, and idle step fails with a probability below this threshold. Then enlarging the code lattice suppresses the logical error rate further, which protects the encoded quantum information for longer.
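Threshold behaviour is often summarized by the heuristic p_L ≈ A (p/p_th)^((d+1)/2). Here p is the physical error rate, p_th is the threshold, d is the code distance, and A is a constant. The sketch below uses assumed illustrative values p_th = 1% and A = 0.1, which are not measured figures. It shows only the qualitative behaviour: below threshold larger codes help, and above it they hurt. The formula is a rough guide and is not meaningful far above threshold.

```python
def surface_code_logical_rate(p, d, p_th=0.01, prefactor=0.1):
    """Heuristic p_L ~ A * (p / p_th) ** ((d + 1) / 2).
    p_th and prefactor are illustrative assumptions, not measured values."""
    if d < 3 or d % 2 == 0:
        raise ValueError("code distance d should be odd and at least 3")
    return prefactor * (p / p_th) ** ((d + 1) // 2)

for p in (0.001, 0.015):          # one error rate below the assumed threshold, one above
    rates = [surface_code_logical_rate(p, d) for d in (3, 5, 7)]
    print(p, ["%.2e" % r for r in rates])
```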

Another important concept in quantum error correction is fidelity, which measures how close an actual, noisy quantum state is to the ideal one. Fidelity takes values between 0 and 1, where 1 means the states are identical. For two pure states it is commonly defined as the squared magnitude of their overlap. Related distance measures, such as the trace distance and the purified distance, quantify how far apart two states are. High-fidelity operations are essential for reliable quantum computing, as they ensure that the desired quantum states are maintained throughout the computation.
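Two common cases can be computed directly. For pure states, F = |⟨ψ|φ⟩|². For an ideal pure state ψ compared with a noisy density matrix ρ, F = ⟨ψ|ρ|ψ⟩. The sketch below evaluates both for illustrative errors: a small over-rotation and depolarizing noise of strength p. The error models, parameter values, and helper names are assumptions made for the example.

```python
import math

def fidelity_pure(psi, phi):
    """F = |<psi|phi>|^2 for two pure states given as amplitude lists."""
    inner = sum(a.conjugate() * b for a, b in zip(psi, phi))
    return abs(inner) ** 2

def fidelity_with_mixed(psi, rho):
    """F = <psi| rho |psi> for an ideal pure state psi and a density matrix rho."""
    n = len(psi)
    return sum(psi[i].conjugate() * rho[i][j] * psi[j] for i in range(n) for j in range(n)).real

s = 1 / math.sqrt(2)
plus = [s, s]                                    # ideal equal superposition of 0 and 1
eps = 0.05                                       # assumed over-rotation angle (radians)
tilted = [math.cos(math.pi / 4 + eps), math.sin(math.pi / 4 + eps)]
print(fidelity_pure(plus, tilted))               # cos(eps)**2, about 0.9975

p = 0.1                                          # assumed depolarizing strength
rho = [[(1 - p) * 0.5 + p / 2, (1 - p) * 0.5],   # (1 - p)|+><+| + p I/2
       [(1 - p) * 0.5, (1 - p) * 0.5 + p / 2]]
print(fidelity_with_mixed(plus, rho))            # 1 - p/2, about 0.95
```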

Approaches to protecting quantum information can be broadly classified into two categories: active and passive. Active error correction repeatedly measures error syndromes and applies corrections to the qubits in real time. Passive approaches, such as decoherence-free subspaces, choose encodings that are naturally insensitive to particular kinds of noise. Both approaches have their advantages and disadvantages, and researchers continue to explore techniques that combine elements of both.

The development of robust quantum error correction methods is crucial for large-scale quantum computing, as it enables the construction of reliable, fault-tolerant quantum systems. This, in turn, matters for fields such as cryptography, optimization, and simulation, where quantum computers could offer large speedups over classical computers for certain problems.

Researchers have made significant progress in recent years towards developing practical quantum error correction strategies, with several experimental demonstrations of small-scale quantum error correction codes. However, much work remains to be done to scale up these approaches to larger systems while maintaining high fidelity and low error rates.

Adiabatic Quantum Computing And Optimization

Adiabatic quantum computing is a paradigm that utilizes the principles of adiabatic evolution to perform quantum computations. This approach relies on the idea of slowly changing the Hamiltonian of a quantum system to guide it towards a desired outcome, typically the solution to an optimization problem.

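In adiabatic quantum computing, the quantum system is first prepared in the ground state of a simple initial Hamiltonian. The Hamiltonian is then slowly varied into a final Hamiltonian whose ground state encodes the solution to the problem of interest. The adiabatic theorem guarantees that if the change is slow enough, the system remains in its instantaneous ground state throughout the evolution. How slow is slow enough depends on the minimum energy gap between the ground state and the first excited state, with the required time growing roughly as the inverse square of that gap.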
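A one-qubit toy model shows the adiabatic theorem at work. The sketch assumes the interpolation H(s) = (1 - s)H0 + sHP with H0 = -X, whose ground state is the equal superposition of 0 and 1, and HP = -Z, whose ground state is 0. It sweeps s from 0 to 1 over a total time T and reports the probability of ending in the problem’s ground state. These Hamiltonians, the step count, and the helper names are illustrative assumptions. A real optimization problem would be encoded across many qubits.

```python
import math

def step(state, s, dt):
    """Apply exp(-i H(s) dt) exactly for H(s) = -(1 - s) X - s Z, written as bx X + bz Z."""
    bx, bz = -(1 - s), -s
    norm = math.hypot(bx, bz)                  # never zero for 0 <= s <= 1
    c, sn = math.cos(norm * dt), math.sin(norm * dt)
    nx, nz = bx / norm, bz / norm
    a0, a1 = state
    return [(c - 1j * sn * nz) * a0 + (-1j * sn * nx) * a1,
            (-1j * sn * nx) * a0 + (c + 1j * sn * nz) * a1]

def adiabatic_success(total_time, steps=2000):
    """Start in the ground state of -X, sweep s from 0 to 1, and return P(ending in 0)."""
    s2 = 1 / math.sqrt(2)
    state = [s2 + 0j, s2 + 0j]                 # ground state of H(0) = -X
    dt = total_time / steps
    for k in range(steps):
        s = (k + 0.5) / steps                  # midpoint of each small time slice
        state = step(state, s, dt)
    return abs(state[0]) ** 2

for t in (0.01, 1.0, 50.0):
    # fast sweeps stay near 0.5; slow sweeps approach 1
    print(t, round(adiabatic_success(t), 4))
```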

One potential advantage of adiabatic quantum computing is a degree of robustness against certain types of noise and errors. Because the system stays in its ground state, the energy gap to excited states helps protect it from low-energy disturbances and small control imperfections. Noise can still cause errors, however, especially when the gap becomes small.

Adiabatic quantum computing has been applied to a wide range of optimization problems, including spin glasses, scheduling problems, and machine learning tasks. D-Wave systems, for example, implement quantum annealing, a noisy, non-universal variant of adiabatic optimization, and have been used to tackle various optimization problems. Whether they outperform the best classical methods remains a subject of ongoing study.

Adiabatic quantum computation was introduced in 2000 by Farhi, Goldstone, Gutmann, and Sipser, building on earlier work on quantum annealing by Kadowaki and Nishimori. Since then, it has been extensively studied and developed, with significant advances made in recent years.

Adiabatic quantum computers and quantum annealers have also been used or proposed for simulating phenomena in complex quantum systems, such as many-body localization and quantum phase transitions.

Topological Quantum Computing And Braiding

Topological quantum computing is a novel approach to building a robust quantum computer, leveraging the principles of topology to encode and manipulate quantum information.

In traditional quantum computing architectures, quantum bits (qubits) are prone to decoherence, which leads to errors in computation. Topological quantum computing offers an alternative by encoding qubits in non-Abelian anyons, exotic quasiparticles that arise in certain topological systems. These anyons can be braided around each other to perform quantum gates, thereby executing quantum computations.

The concept of braiding comes from topology. When anyons in a two-dimensional system are moved around one another, their paths through space and time form a braid. The effect on the encoded quantum information depends only on the topology of that braid (which particle passes around which, and in which direction), not on the precise paths taken. In topological quantum computing, sequences of such braids implement quantum gates. This approach has sparked significant interest because it could reduce the error correction overhead faced by traditional quantum computing architectures.

One of the key advantages of topological quantum computing is its inherent robustness against decoherence. The quantum information is stored nonlocally in the collective state of several non-Abelian anyons, so local perturbations cannot easily change it, which reduces the likelihood of errors during computation. This property makes topological quantum computing an attractive approach for building a scalable and reliable quantum computer.

Researchers are working toward realizing topological quantum computing experimentally. Braiding of non-Abelian anyons has been emulated on programmable quantum processors, including superconducting and trapped-ion devices. Efforts to create such anyons natively in materials, for example as Majorana zero modes in semiconductor-superconductor structures, are ongoing, and their results are still debated. These advances have brought the vision of a topological quantum computer closer, but a working device has not yet been built.

Theoretical models, such as the toric code and the Fibonacci anyon model, have been developed to describe anyons and their braiding. The toric code supports only Abelian anyons. Fibonacci anyons are non-Abelian and can, in principle, perform any quantum computation by braiding alone. These models provide a framework for understanding the underlying physics of topological quantum computing and have guided experimental efforts in this area.
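The non-Abelian character of the Fibonacci model can be checked numerically. Three Fibonacci anyons share a two-dimensional state space, and exchanging neighbouring pairs acts on it through 2×2 matrices built from the model’s F and R matrices. The sketch below uses a commonly quoted choice of these matrices; conventions differ between references, so this choice is an assumption. It verifies that the exchanges satisfy the braid relation and that their order matters. It says nothing about how such anyons would be realized physically.

```python
import cmath
import math

PHI = (1 + math.sqrt(5)) / 2      # golden ratio

# Assumed conventions for the Fibonacci R (exchange phases) and F (basis change) matrices.
R = [[cmath.exp(-4j * math.pi / 5), 0],
     [0, cmath.exp(3j * math.pi / 5)]]
F = [[1 / PHI, 1 / math.sqrt(PHI)],
     [1 / math.sqrt(PHI), -1 / PHI]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def close(a, b, tol=1e-12):
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))

sigma1 = R                          # exchange anyons 1 and 2
sigma2 = matmul(matmul(F, R), F)    # exchange anyons 2 and 3 (F is its own inverse)

# Braid (Yang-Baxter) relation: sigma1 sigma2 sigma1 == sigma2 sigma1 sigma2
print(close(matmul(matmul(sigma1, sigma2), sigma1),
            matmul(matmul(sigma2, sigma1), sigma2)))           # True
# Non-Abelian: swapping the order of the two exchanges changes the result
print(close(matmul(sigma1, sigma2), matmul(sigma2, sigma1)))  # False
```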

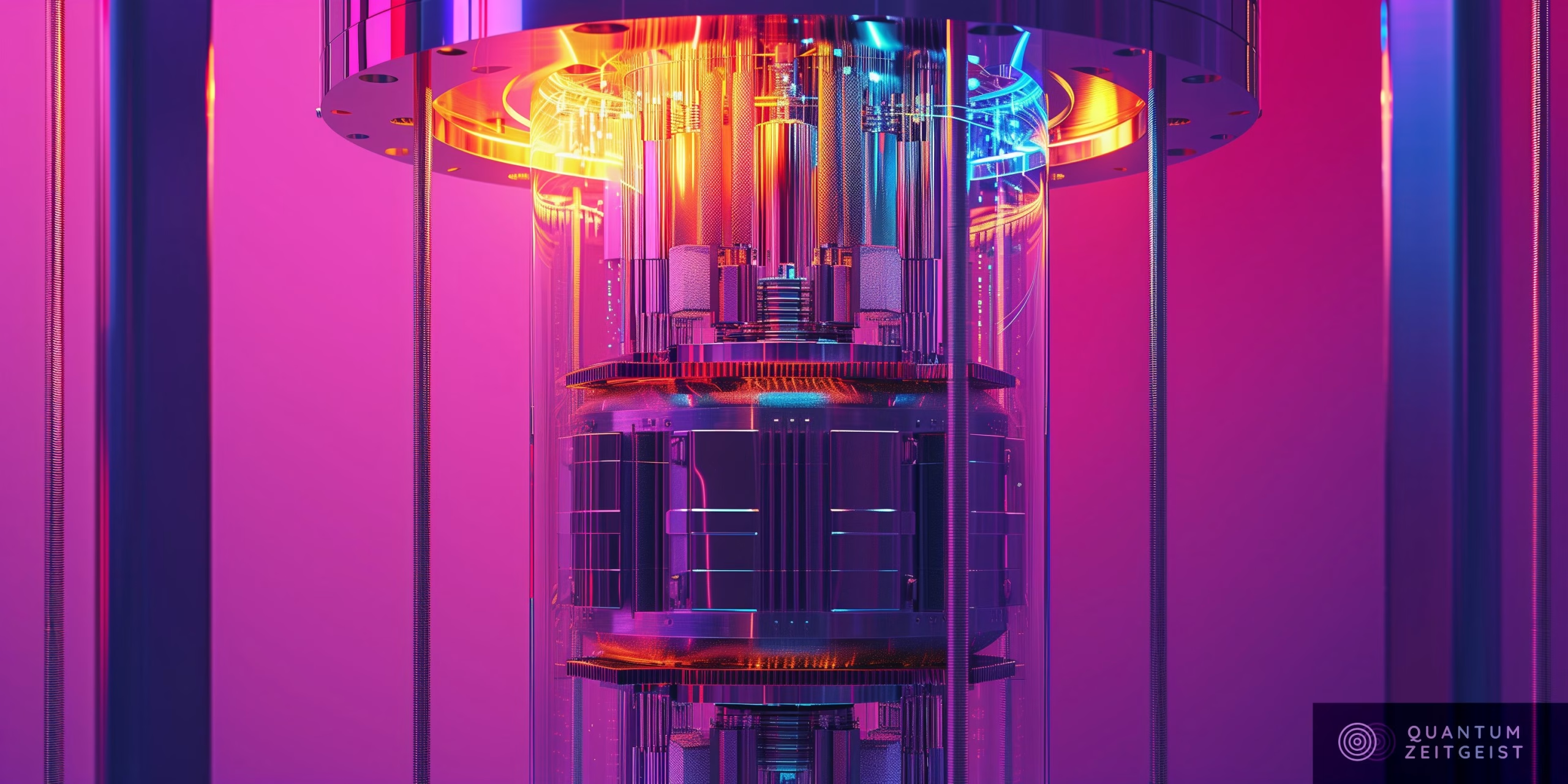

Current State Of Quantum Computing Hardware

Quantum computers rely on the principles of quantum mechanics to perform calculations that are beyond the practical reach of classical computers. Their core component is the quantum bit, or qubit, which can exist in a superposition of states. The central hardware challenge is to build qubits that can be controlled precisely, connected to one another, and kept coherent long enough to run useful algorithms.

Currently, several types of quantum computing hardware are being developed, including superconducting qubits, ion traps, and topological qubits. Superconducting qubits are among the most mature, and companies like IBM and Rigetti Computing have already built functional quantum processors using this technology. Gate fidelities on these processors have improved steadily, although error rates remain too high for large algorithms to run without error correction.

Ion trap quantum computers, on the other hand, use electromagnetic fields to trap individual ions, which serve as the qubits, and manipulate them with lasers or microwaves. This approach has shown great promise, with companies like IonQ and Honeywell Quantum Solutions (now part of Quantinuum) already demonstrating functional ion trap-based quantum processors. Topological quantum computers, still in the early stages of development, would rely on exotic quasiparticles called anyons to store and process quantum information.

One of the major challenges facing quantum computing hardware is noise and error correction. Quantum systems are inherently fragile and prone to decoherence, which can cause errors in calculations. To overcome this, researchers are developing sophisticated techniques, such as quantum error correction codes and error-suppression methods like dynamical decoupling.

Another significant challenge is scaling up current quantum processors to thousands or even millions of qubits while maintaining low error rates. This requires significant advances in materials science, cryogenics, and control systems. Despite these challenges, researchers are making rapid progress, with some predicting that we will see the development of practical quantum computers within the next decade.

Currently, most quantum computing hardware is still in the early stages of development, and much work remains to be done before we can build a large-scale, fault-tolerant quantum computer. However, the progress made so far is promising, and researchers are optimistic about the potential of quantum computing to revolutionize fields like chemistry, materials science, and machine learning.
