Robotic vision systems frequently demand simultaneous processing of multiple tasks, such as depth estimation, object detection, and scene understanding, yet current approaches often rely on redundant computations that strain limited onboard resources. To address this challenge, Jakub Łucki, Jonathan Becktor, and Georgios Georgakis, along with their colleagues, present the Visual Perception Engine (VPEngine), a new framework designed for efficient, flexible multitasking on robotic platforms. VPEngine streamlines processing by sharing a foundational image analysis component across multiple specialised tasks, eliminating unnecessary repetition and enabling dynamic prioritisation based on real-time needs. The architecture achieves up to a threefold increase in speed compared to traditional sequential methods while maintaining a constant memory footprint, and it delivers real-time performance on embedded systems like the Jetson Orin AGX. It promises to significantly enhance the capabilities of robots operating in complex environments.

Designed to enable efficient GPU usage for visual multitasking, the framework prioritises extensibility and developer accessibility. Its architecture uses a shared foundation model to extract image representations once, then distributes them to multiple specialised, task-specific modules running in parallel. The framework targets key challenges in robotics perception, namely GPU utilisation, modularity, and real-time performance, and its experimental evaluation includes comparisons to several baseline approaches. The motivation is straightforward: robotics applications increasingly rely on complex perception pipelines to process sensor data and enable autonomous behaviour, so implementing these pipelines efficiently is crucial for real-time performance and robust robotic systems.
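To make the shared-backbone idea concrete, here is a minimal sketch in plain Python. It is not VPEngine's API: the class `SharedBackbonePipeline`, the function `toy_backbone`, and the three lambda heads are hypothetical stand-ins for a foundation model and its task-specific modules. The point is only that the feature extractor runs once per image, however many heads consume its output.

```python
from typing import Callable, Dict, List


class SharedBackbonePipeline:
    """Run one shared feature extractor, then fan its output out to task heads."""

    def __init__(self, backbone: Callable, heads: Dict[str, Callable]):
        self.backbone = backbone
        self.heads = heads
        self.backbone_calls = 0  # counts how often the expensive step runs

    def run(self, image):
        self.backbone_calls += 1
        features = self.backbone(image)  # computed once per image
        # Every head reuses the same features instead of recomputing them.
        return {name: head(features) for name, head in self.heads.items()}


def toy_backbone(image: List[float]) -> List[float]:
    # Hypothetical stand-in for a foundation model: scale values into [0, 1].
    peak = max(image) or 1.0
    return [p / peak for p in image]


# Hypothetical task heads; real heads would be neural network modules.
heads = {
    "depth": lambda f: [1.0 - x for x in f],
    "detection": lambda f: [i for i, x in enumerate(f) if x > 0.5],
    "segmentation": lambda f: [int(x > 0.25) for x in f],
}

pipeline = SharedBackbonePipeline(toy_backbone, heads)
outputs = pipeline.run([0.0, 2.0, 4.0, 8.0])
print(pipeline.backbone_calls, outputs)
```

Running three separate models would invoke a backbone three times for the same image; here the counter stays at one.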

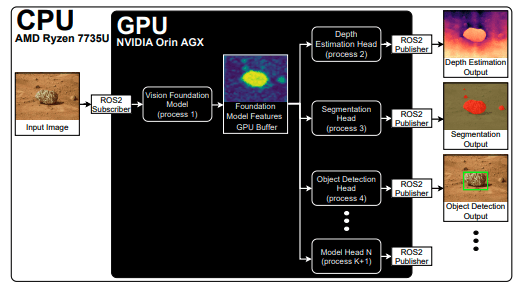

Parallel processing offers a promising solution, but requires careful consideration of GPU utilisation, memory management, and architectural flexibility. Existing robotics frameworks, such as ROS and ROS2, provide tools for building modular and distributed systems, but often lack fine-grained control over GPU utilisation and may not be optimised for parallel processing. VPEngine aims to provide a more general-purpose framework that addresses these challenges, maximising GPU utilisation while maintaining architectural flexibility. To do so, it relies on NVIDIA's CUDA Multi-Process Service (MPS), which allows work submitted by separate processes to run concurrently on the same GPU.

Each processing stage, such as object detection, segmentation, and depth estimation, is implemented as a separate process, allowing for parallel execution. The framework also provides a mechanism for managing data flow between processes and synchronising their execution. The framework was evaluated using a robotics perception pipeline consisting of semantic segmentation, object detection, depth estimation, and a rate limiter. Experiments were conducted on a workstation equipped with an NVIDIA GeForce RTX 3090 GPU and an Intel Core i9-10900K CPU, comparing VPEngine to sequential processing, sequential processing with TensorRT, and parallel processing with ROS2 Dataflow.
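The data flow described above, with one worker per task, a queue feeding each worker, and a synchronisation point at the end, can be sketched with the standard library. Note the substitution: VPEngine uses separate OS processes sharing a GPU, whereas this sketch uses threads to stay short and portable. The names `head_worker` and `run_heads` are hypothetical, and `sum` and `max` stand in for real task heads.

```python
import queue
import threading


def head_worker(name, fn, inbox, results):
    """Consume (frame_id, features) items until a None sentinel arrives."""
    while True:
        item = inbox.get()
        if item is None:  # sentinel: no more frames for this head
            break
        frame_id, features = item
        results.put((frame_id, name, fn(features)))


def run_heads(frames, head_fns):
    """Fan each frame's features out to every head and gather the results."""
    results = queue.Queue()
    inboxes = {name: queue.Queue() for name in head_fns}
    workers = [
        threading.Thread(target=head_worker, args=(name, fn, inboxes[name], results))
        for name, fn in head_fns.items()
    ]
    for worker in workers:
        worker.start()
    for frame_id, features in enumerate(frames):
        for inbox in inboxes.values():
            inbox.put((frame_id, features))  # same features go to every head
    for inbox in inboxes.values():
        inbox.put(None)
    for worker in workers:
        worker.join()  # synchronisation point: wait for all heads to finish
    collected = {}
    while not results.empty():
        frame_id, name, value = results.get()
        collected.setdefault(frame_id, {})[name] = value
    return collected


collected = run_heads([[1, 2, 3], [4, 5, 6]], {"sum": sum, "max": max})
print(collected)
```

A process-based version would replace the threads and queues with their `multiprocessing` counterparts and would need a way to share GPU buffers between processes, which this sketch does not attempt.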

The results demonstrate that VPEngine significantly outperforms the baseline approaches in terms of processing speed, achieving a 3.3x speedup compared to sequential processing and a 1.5x speedup compared to sequential processing with TensorRT. The framework also exhibited good scalability, with performance increasing linearly as the number of tasks increased. VPEngine effectively utilises available GPU resources, achieving an average GPU utilisation of 95%, significantly higher than the baseline approaches.
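A toy cost model helps show where a speedup of this kind can come from. The sketch below is an idealisation, not a reproduction of the reported measurements: all latencies are made-up illustrative numbers, and the functions `sequential_latency` and `shared_parallel_latency` are hypothetical. It assumes each sequential task pays for its own backbone pass, while the shared design pays for the backbone once and runs heads fully concurrently, ignoring inter-process overhead and GPU contention.

```python
def sequential_latency(backbone_ms, head_ms):
    # Each task runs its own full model (backbone + head), one after another.
    return sum(backbone_ms + h for h in head_ms)


def shared_parallel_latency(backbone_ms, head_ms):
    # The backbone runs once; heads run concurrently, so the slowest one dominates.
    return backbone_ms + max(head_ms)


# Illustrative numbers only (assumptions, not measurements from the source).
backbone_ms = 20.0
head_ms = [4.0, 6.0, 10.0]

seq = sequential_latency(backbone_ms, head_ms)
shared = shared_parallel_latency(backbone_ms, head_ms)
speedup = seq / shared
print(f"sequential={seq} ms, shared={shared} ms, speedup={speedup:.2f}x")
```

In this model the gain grows with the share of total time spent in the backbone, which is why reusing a large foundation model across tasks pays off.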

The framework can effectively control the output rate of the pipeline, maintaining a consistent output rate with minimal error. VPEngine exhibits higher CPU usage than the baseline approaches because of the overhead of managing multiple processes and transferring data between them. However, the performance gains outweigh the increased CPU usage in most cases.

The system addresses a key challenge in robotics: the redundant computations and complex integration that arise when deploying separate machine learning models for tasks like depth estimation, object detection, and semantic segmentation. Instead of running each model independently, VPEngine uses a shared foundation model to extract core image features and distributes these features to specialised task-specific modules. This approach reduces computational load and improves speed, achieving up to three times faster performance than traditional sequential execution of separate models. A crucial element of VPEngine’s efficiency is its use of CUDA Multi-Process Service (MPS), which allows for optimised GPU utilisation and a consistent memory footprint.
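The output-rate control mentioned above can be pictured with a small sketch. The source does not say how VPEngine implements it, so this is one simple assumed strategy: drop any frame that arrives sooner than the minimum interval after the last accepted frame. The class `RateLimiter` and its `accept` method are hypothetical names, and timestamps are integer milliseconds to keep the arithmetic exact.

```python
class RateLimiter:
    """Accept a frame only if enough time has passed since the last accepted one."""

    def __init__(self, max_hz: float):
        self.min_interval_ms = 1000.0 / max_hz
        self.last_accepted_ms = None

    def accept(self, timestamp_ms: int) -> bool:
        if (
            self.last_accepted_ms is None
            or timestamp_ms - self.last_accepted_ms >= self.min_interval_ms
        ):
            self.last_accepted_ms = timestamp_ms
            return True
        return False  # too soon after the previous output: drop this frame


# A 100 Hz input stream (one frame every 10 ms) capped at 25 Hz for one second.
limiter = RateLimiter(max_hz=25.0)
timestamps_ms = list(range(0, 1000, 10))
accepted = [t for t in timestamps_ms if limiter.accept(t)]
print(len(timestamps_ms), "frames in,", len(accepted), "frames out")
```

Giving each task head its own limiter of this kind would let a slow-changing output, such as a semantic map, run at a lower rate than a safety-critical one.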

👉 More information

🗞 Visual Perception Engine: Fast and Flexible Multi-Head Inference for Robotic Vision Tasks

🧠 ArXiv: https://arxiv.org/abs/2508.11584