Understanding what objects are doing in videos requires identifying both where they are and what they are. Jasper Uijlings, Xingyi Zhou, Xiuye Gu, and colleagues at Google DeepMind address this challenge with VoCap, a flexible model that takes a video and a prompt (text, a bounding box, or a mask) and generates both a precise outline of the object throughout the video and a descriptive caption. The work tackles three video understanding tasks at once: segmenting objects from visual prompts, locating objects from text descriptions, and automatically generating captions that describe them. To overcome the scarcity of labelled video data, the team created a new dataset, SAV-Caption, by taking existing segmentation footage and using a vision-language model to generate pseudo-captions. The resulting system achieves state-of-the-art results on referring expression video object segmentation, competitive results on semi-supervised video object segmentation, and establishes a new benchmark for video object captioning.

Because labelled video data for this task is limited, the team built a large-scale dataset, SAV-Caption, from existing segmentation data. They highlighted each object in the video frames using its ground-truth mask and then used a vision-language model (VLM) to generate a caption for the highlighted object, which expanded the available training data without manual captioning. For reliable evaluation, they collected manual annotations for a validation set to serve as ground truth.
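The highlighting step can be sketched in a few lines of Python. This is a minimal illustration, not the authors' pipeline: the article does not say how objects were highlighted or which VLM was used, so the tint-and-dim overlay, the function names `highlight_object` and `pseudo_caption`, the prompt string, and the `vlm_caption` callable are all assumptions made for the example.

```python
import numpy as np


def highlight_object(frame, mask, color=(255, 0, 0), alpha=0.5, dim=0.4):
    """Return a copy of `frame` with the masked object tinted and the rest dimmed.

    frame: (H, W, 3) uint8 image; mask: (H, W) boolean ground-truth mask.
    The overlay style is an assumption; the source only says objects were highlighted.
    """
    if frame.shape[:2] != mask.shape:
        raise ValueError("frame and mask must share height and width")
    mask = mask.astype(bool)
    out = frame.astype(np.float32)          # astype copies, so `frame` is untouched
    out[~mask] *= dim                        # darken the background
    tint = np.array(color, dtype=np.float32)
    out[mask] = (1 - alpha) * out[mask] + alpha * tint  # blend the tint into the object
    return np.clip(out, 0, 255).astype(np.uint8)


def pseudo_caption(frames, masks, vlm_caption,
                   prompt="Describe the highlighted object."):
    """Highlight the object in every frame and ask a VLM for one caption.

    `vlm_caption` is a hypothetical callable (images, prompt) -> str that the
    caller supplies; no specific VLM API is implied.
    """
    highlighted = [highlight_object(f, m) for f, m in zip(frames, masks)]
    return vlm_caption(highlighted, prompt)
```

Passing the VLM in as a callable keeps the sketch independent of any particular model, so the same code can be exercised with a stub during testing.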

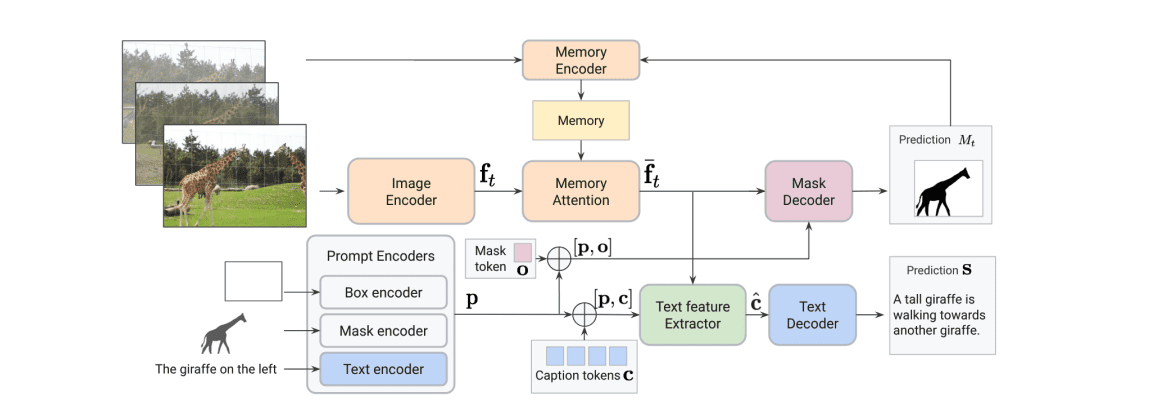

The core of VoCap is its ability to take a video and a prompt, which can be text, a bounding box, or a mask, and produce a spatio-temporal masklet alongside a corresponding object-centric caption. The team trained it jointly, fine-tuning VoCap on the newly created SAV-Caption dataset in combination with existing image and video datasets. To establish a robust benchmark, they compared VoCap against several baselines, including semi-supervised video object segmentation methods coupled with off-the-shelf captioning models. To pass the tracked object to the captioner, these baselines used the segmentation masks directly, extracted bounding boxes from them, or cropped the image around the object.
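The crop-based variant of such a two-stage baseline can be sketched as follows. This is an illustrative assumption rather than the paper's exact setup: the names `mask_to_bbox`, `crop_object`, and `caption_track` are hypothetical, the `captioner` is any caller-supplied function that maps an image crop to text, and captioning only the frame where the object appears largest is a simplification chosen for the example.

```python
import numpy as np


def mask_to_bbox(mask):
    """Return the tight box (x0, y0, x1, y1) around a boolean mask, or None if empty.

    x1 and y1 are exclusive, so the box can be used directly for slicing.
    """
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()) + 1, int(ys.max()) + 1


def crop_object(frame, mask, pad=0):
    """Crop `frame` to the mask's bounding box, optionally padded and clipped to the image."""
    box = mask_to_bbox(mask)
    if box is None:
        return None
    h, w = mask.shape
    x0, y0, x1, y1 = box
    x0, y0 = max(x0 - pad, 0), max(y0 - pad, 0)
    x1, y1 = min(x1 + pad, w), min(y1 + pad, h)
    return frame[y0:y1, x0:x1]


def caption_track(frames, masks, captioner):
    """Caption a tracked object from the frame in which its mask is largest.

    `masks` would come from a video object segmentation method; `captioner`
    is a hypothetical callable (crop) -> caption supplied by the caller.
    """
    areas = [int(np.count_nonzero(m)) for m in masks]
    best = int(np.argmax(areas))
    crop = crop_object(frames[best], masks[best])
    return None if crop is None else captioner(crop)
```

A pipeline like this treats localisation and description as separate stages, which is the contrast with VoCap's single model that produces the masklet and the caption together.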

Results show that VoCap significantly outperforms these baselines on video object captioning on the SAV-Caption validation set. On localised image captioning, it achieves a state-of-the-art score, surpassing previous methods. The system also performs well on several video object segmentation datasets, including referring expression video object segmentation benchmarks.

VoCap Understands and Describes Objects in Video

Researchers have developed VoCap, a new model that understands objects within videos by simultaneously identifying their precise location and describing them in detailed language. The system bridges the gap between visual localisation and semantic comprehension, producing both a segmentation mask and a descriptive caption for each object. VoCap accepts several kinds of input, namely textual descriptions, bounding boxes, or initial masks, which makes it flexible in how it receives instructions. The team overcame a significant hurdle in training such a model by creating a large-scale dataset, SAV-Caption, from existing segmentation data, using a vision-language model (VLM) to automatically generate object-centric captions.

By highlighting objects within videos and utilising the VLM, they successfully created detailed descriptions without requiring extensive manual annotation, thereby significantly reducing the cost and effort of data collection. Human annotation was then performed on a validation set to ensure high-quality evaluation. Experiments demonstrate that VoCap achieves state-of-the-art results on referring expression video object segmentation, a task where the model must identify an object based on a textual description. Furthermore, the model performs competitively in semi-supervised video object segmentation and establishes a new benchmark for video object captioning, demonstrating its ability to both accurately locate and describe objects. This breakthrough delivers a unified model capable of handling multiple tasks, opening up new applications in areas such as video editing, wildlife monitoring, and autonomous driving technology. The researchers have made both the dataset and model publicly available, fostering further research and innovation in the field of video understanding.

VoCap Understands Objects and Describes Video Actions

The research presents VoCap, a new model for understanding objects within videos by identifying their location and describing their semantic properties. VoCap takes a video and a prompt, which can be text, a bounding box, or a mask, and produces a precise spatio-temporal mask alongside a descriptive caption of the object. This simultaneously addresses three tasks: promptable video object segmentation, referring expression segmentation, and object captioning. To facilitate training, the team created SAV-Caption, a large dataset annotated with pseudo-captions generated by processing existing video mask data with a vision-language model.
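The input and output contract described above can be written down as a small set of types. This is only a sketch of the interface as the article describes it: the class names, field names, array shapes, and task labels are assumptions for illustration, not VoCap's actual API.

```python
from dataclasses import dataclass
from typing import Tuple, Union

import numpy as np


@dataclass
class TextPrompt:
    expression: str                       # e.g. a referring expression


@dataclass
class BoxPrompt:
    frame_index: int                      # frame in which the box is given
    box_xyxy: Tuple[int, int, int, int]   # (x0, y0, x1, y1) in pixels


@dataclass
class MaskPrompt:
    frame_index: int                      # frame in which the mask is given
    mask: np.ndarray                      # (H, W) boolean mask


# A prompt is exactly one of the three kinds named in the article.
Prompt = Union[TextPrompt, BoxPrompt, MaskPrompt]


@dataclass
class ObjectResult:
    masklet: np.ndarray                   # (T, H, W) boolean, one mask per frame
    caption: str                          # object-centric description


def task_for(prompt):
    """Map a prompt kind to the segmentation task it corresponds to.

    A caption is produced in every case, so captioning is not listed here.
    """
    if isinstance(prompt, TextPrompt):
        return "referring_expression_segmentation"
    if isinstance(prompt, (BoxPrompt, MaskPrompt)):
        return "promptable_video_object_segmentation"
    raise TypeError(f"unsupported prompt type: {type(prompt).__name__}")


def is_valid_result(video, result):
    """Check that the masklet covers every frame of a (T, H, W, 3) video and a caption exists."""
    return bool(
        result.masklet.shape == video.shape[:3]
        and result.masklet.dtype == bool
        and result.caption.strip() != ""
    )
```

Modelling the prompt as a union of three small types makes it explicit that one model serves all three tasks and that only the form of the instruction changes.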

VoCap, trained on SAV-Caption alongside other image and video datasets, achieves state-of-the-art results in referring expression video object segmentation and competitive performance in semi-supervised video object segmentation. Notably, it also establishes a new benchmark for video object captioning. The team demonstrated the importance of the SAV-Caption dataset by showing that performance dropped significantly when it was left out of training, which indicates that their automatic annotation pipeline generates valuable training data. The authors acknowledge that the model's performance relies heavily on the quality of the pseudo-captions generated for SAV-Caption. Future work could explore methods to refine these captions or investigate alternative approaches to automatic annotation. They hope their model and dataset will encourage further research into fine-grained spatio-temporal video understanding.

👉 More information

🗞 VoCap: Video Object Captioning and Segmentation from Any Prompt

🧠 ArXiv: https://arxiv.org/abs/2508.21809