Detecting increasingly realistic deepfakes presents a major challenge to online trust and information security, yet current detection methods often fail when faced with the complexities of real-world forgeries. Hao Tan, Jun Lan, and Zichang Tan, along with their colleagues, address this critical gap by introducing a new dataset, HydraFake, which more accurately reflects the diverse techniques and challenging conditions found in genuine deepfake scenarios. Building upon this resource, the team proposes Veritas, a novel deepfake detector that leverages multi-modal large language models and, crucially, incorporates a pattern-aware reasoning process inspired by human forensic analysis. The results demonstrate that Veritas significantly outperforms existing detectors when confronted with unseen forgery techniques and data, offering a substantial step towards more reliable and transparent deepfake detection in the wild.

Deepfake detection remains a formidable challenge due to the complex and evolving nature of fake content in real-world scenarios. Existing academic benchmarks often fail to reflect practical conditions, typically featuring limited data diversity and low-quality images, which hinders the deployment of current detectors. To address this gap, researchers introduce HydraFake, a dataset that simulates real-world challenges with hierarchical generalization testing, incorporating diverse deepfake techniques and realistic, in-the-wild forgeries alongside rigorous training and evaluation protocols.

Personalized Prompts Evaluate Deepfake Reasoning

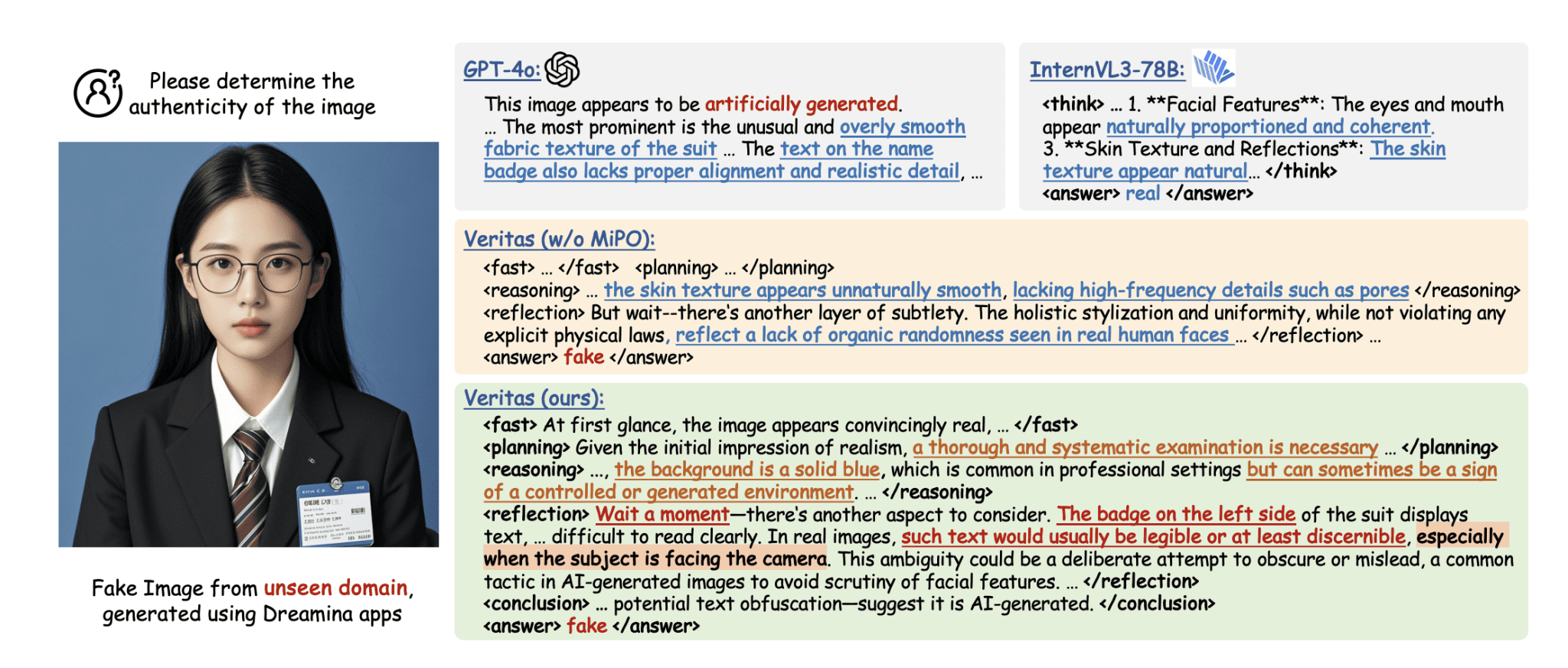

The goal is a robust deepfake detector that explains its reasoning clearly and identifies the subtle artifacts that indicate manipulation. The system generates diverse prompts tailored to each input image, which makes the task both more challenging and more realistic. A vision-language model receives the image and prompt, then generates a reasoning chain explaining its assessment of the image’s authenticity; the chain is structured with tags that categorize different aspects of the analysis. A crucial component is the self-reflection stage, in which the model critically evaluates its own reasoning to identify potential weaknesses and refine its assessment.
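As a rough illustration of what a tag-structured reasoning chain makes possible, the sketch below splits a model response into its tagged sections. The tag names (`planning`, `analysis`, `reflection`, `conclusion`) and the sample response are assumptions made for this example; the article does not list the actual tag set.

```python
import re

# Hypothetical tag names: the article only says the chain is "structured using tags".
TAGS = ("planning", "analysis", "reflection", "conclusion")


def parse_reasoning(text: str) -> dict[str, str]:
    """Return the stripped content of each known tag found in a response."""
    sections = {}
    for tag in TAGS:
        match = re.search(rf"<{tag}>(.*?)</{tag}>", text, flags=re.DOTALL)
        if match:
            sections[tag] = match.group(1).strip()
    return sections


def missing_sections(sections: dict[str, str]) -> list[str]:
    """List the expected tags that the response did not contain."""
    return [tag for tag in TAGS if tag not in sections]


# Invented sample response, for illustration only.
example = (
    "<planning>Check skin texture, then lighting consistency.</planning>"
    "<analysis>Skin is unusually smooth near the jawline.</analysis>"
    "<reflection>Smoothness alone could be a filter; the lighting mismatch "
    "is stronger evidence.</reflection>"
    "<conclusion>fake</conclusion>"
)

sections = parse_reasoning(example)
print(sections["conclusion"])
print(missing_sections(sections))
```

Keeping each aspect in its own tag means a downstream check can confirm that a self-reflection section exists before the verdict is accepted.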

The quality of the model’s reasoning is assessed on several criteria, including precision, comprehensiveness, clarity, creativity, and granularity, as well as through pairwise comparisons between different models. A dedicated reward model evaluates the self-reflection stage, checking that it introduces new insights and providing feedback to guide improvement. Together, these elements combine explainability, critical self-evaluation, and iterative improvement in a single detection model.
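One simple way to picture this kind of evaluation is sketched below: a per-response rubric score averaged over the five named criteria, plus a win rate computed from pairwise judgments. The equal weighting, the 1-to-5 scale, and the half credit for ties are assumptions for the example, not details taken from the paper.

```python
from statistics import mean

# The five criteria named in the text; equal weighting is an assumption.
CRITERIA = ("precision", "comprehensiveness", "clarity", "creativity", "granularity")


def rubric_score(scores: dict[str, float]) -> float:
    """Average the per-criterion scores, requiring every criterion to be present."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing criteria: {missing}")
    return mean(scores[c] for c in CRITERIA)


def win_rate(judgments: list[str], model: str) -> float:
    """Fraction of pairwise comparisons won by `model`; a 'tie' counts as half a win."""
    if not judgments:
        return 0.0
    points = sum(1.0 if j == model else 0.5 if j == "tie" else 0.0 for j in judgments)
    return points / len(judgments)


# Invented scores on an assumed 1-to-5 scale.
scores = {"precision": 5, "comprehensiveness": 4, "clarity": 3, "creativity": 4, "granularity": 4}
print(rubric_score(scores))
print(win_rate(["A", "B", "tie", "A"], "A"))
```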

Reasoning Improves Deepfake Detection Accuracy

This research introduces Veritas, a deepfake detection model built upon a multimodal large language model (MLLM). The core contribution is a new training framework called P-GRPO (Pattern-aware Group Relative Policy Optimization), which enhances the reasoning capabilities of MLLMs for more accurate and robust deepfake detection. The premise is that simply scaling up MLLMs is insufficient; they need to be trained to reason effectively about the visual and textual cues that distinguish real from fake content. The stakes are high, since the proliferation of deepfakes poses significant risks to individuals, organizations, and society.

Traditional detection methods often rely on low-level visual artifacts and are easily fooled by increasingly sophisticated forgeries. MLLMs have the potential to leverage both visual and textual information for more robust detection, but they require specific training to reason effectively: explaining decisions, identifying relevant cues, and generalizing to unseen deepfakes.

The P-GRPO framework consists of three stages. First, the MLLM is fine-tuned on a dataset of real and fake images and videos using standard supervised learning. Second, Mixture Preference Optimization (MiPO) uses preference learning to refine the model’s ability to distinguish between real and fake content. Third, Pattern-aware Reinforcement Learning (P-RL) rewards the model for focusing on the patterns and cues most relevant to deepfake detection, for explaining its decisions, and for generalizing to unseen deepfakes.

Veritas achieves state-of-the-art results on a benchmark dataset, surpassing previous methods. Ablation studies demonstrate the effectiveness of each component of the P-GRPO framework, showing that both MiPO and P-RL contribute to improved performance and that the pattern-aware reward function is crucial for learning robust features. Veritas is also more robust to image and video distortions than other methods and generates more coherent and informative explanations for its decisions.
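To make the idea of a pattern-aware reward more concrete, here is a minimal sketch under stated assumptions. The reward shape (one point for a correct verdict, plus a small bonus per reasoning pattern, granted only when the verdict is correct), the tag names, and the bonus value are all hypothetical; the article does not specify them. The second function shows the group-relative normalization that GRPO-style methods generally apply to rewards sampled for the same input.

```python
from statistics import mean, pstdev

# Hypothetical markers for reasoning patterns; not taken from the paper.
PATTERN_TAGS = ("<planning>", "<reflection>")


def pattern_aware_reward(response: str, predicted: str, label: str,
                         pattern_bonus: float = 0.2) -> float:
    """Assumed reward: 1.0 for a correct verdict plus a bonus per pattern used.

    The bonus is only granted when the verdict is correct, so elaborate
    reasoning that reaches the wrong answer earns nothing.
    """
    if predicted != label:
        return 0.0
    patterns_used = sum(tag in response for tag in PATTERN_TAGS)
    return 1.0 + pattern_bonus * patterns_used


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize rewards within one group of sampled responses (GRPO-style)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Rewards for four hypothetical responses sampled for the same image.
rewards = [
    pattern_aware_reward("<planning>..</planning><reflection>..</reflection>", "fake", "fake"),
    pattern_aware_reward("<planning>..</planning>", "fake", "fake"),
    pattern_aware_reward("no structured reasoning", "fake", "fake"),
    pattern_aware_reward("<planning>..</planning>", "real", "fake"),
]
print(rewards)
print(group_relative_advantages(rewards))
```

Under this assumed design, responses that are both correct and use more reasoning patterns receive the largest advantages within their group, which is one plausible reading of "incentivizing the identification of relevant patterns".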

HydraFake and Veritas Detect Realistic Deepfakes

The research introduces HydraFake, a new dataset designed to more accurately reflect the challenges of detecting deepfakes in real-world scenarios, and Veritas, a deepfake detection model leveraging multi-modal large language models (MLLMs). Existing benchmarks often use simplified, homogeneous data, which hinders practical application; HydraFake addresses this by incorporating diverse forgery techniques and realistic, in-the-wild images. Veritas distinguishes itself through a pattern-aware reasoning framework that mimics human forensic analysis with techniques like planning and self-reflection, and through a two-stage training pipeline that integrates this reasoning into the MLLM. Experiments using HydraFake demonstrate that while existing detectors perform well on known deepfakes, they struggle with unseen forgeries and new data sources.
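The gap between known and unseen conditions is easiest to see when accuracy is reported per evaluation split rather than as a single number. The sketch below shows one way to do that; the split names (`seen`, `unseen_forgery`, `unseen_source`) and the toy records are illustrative assumptions, not HydraFake's actual protocol or results.

```python
from collections import defaultdict


def accuracy_by_split(records: list[tuple[str, str, str]]) -> dict[str, float]:
    """Compute accuracy per split from (split, label, prediction) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for split, label, prediction in records:
        total[split] += 1
        correct[split] += int(label == prediction)
    return {split: correct[split] / total[split] for split in total}


# Toy records with hypothetical split names; not real benchmark data.
records = [
    ("seen", "fake", "fake"),
    ("seen", "real", "real"),
    ("unseen_forgery", "fake", "real"),
    ("unseen_forgery", "fake", "fake"),
    ("unseen_source", "real", "fake"),
    ("unseen_source", "fake", "real"),
]
print(accuracy_by_split(records))
```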

Veritas achieves significant improvements across these challenging scenarios, delivering both accurate detection and transparent explanations for its decisions. The authors emphasize that current MLLMs require substantial reasoning data to perform effectively, and their work focuses on providing this data to enhance the model’s ability to reason specifically for deepfake detection. Future research could further refine the reasoning patterns and develop more robust training methodologies to improve generalization to entirely novel forgery techniques.

👉 More information

🗞 Veritas: Generalizable Deepfake Detection via Pattern-Aware Reasoning

🧠 ArXiv: https://arxiv.org/abs/2508.21048