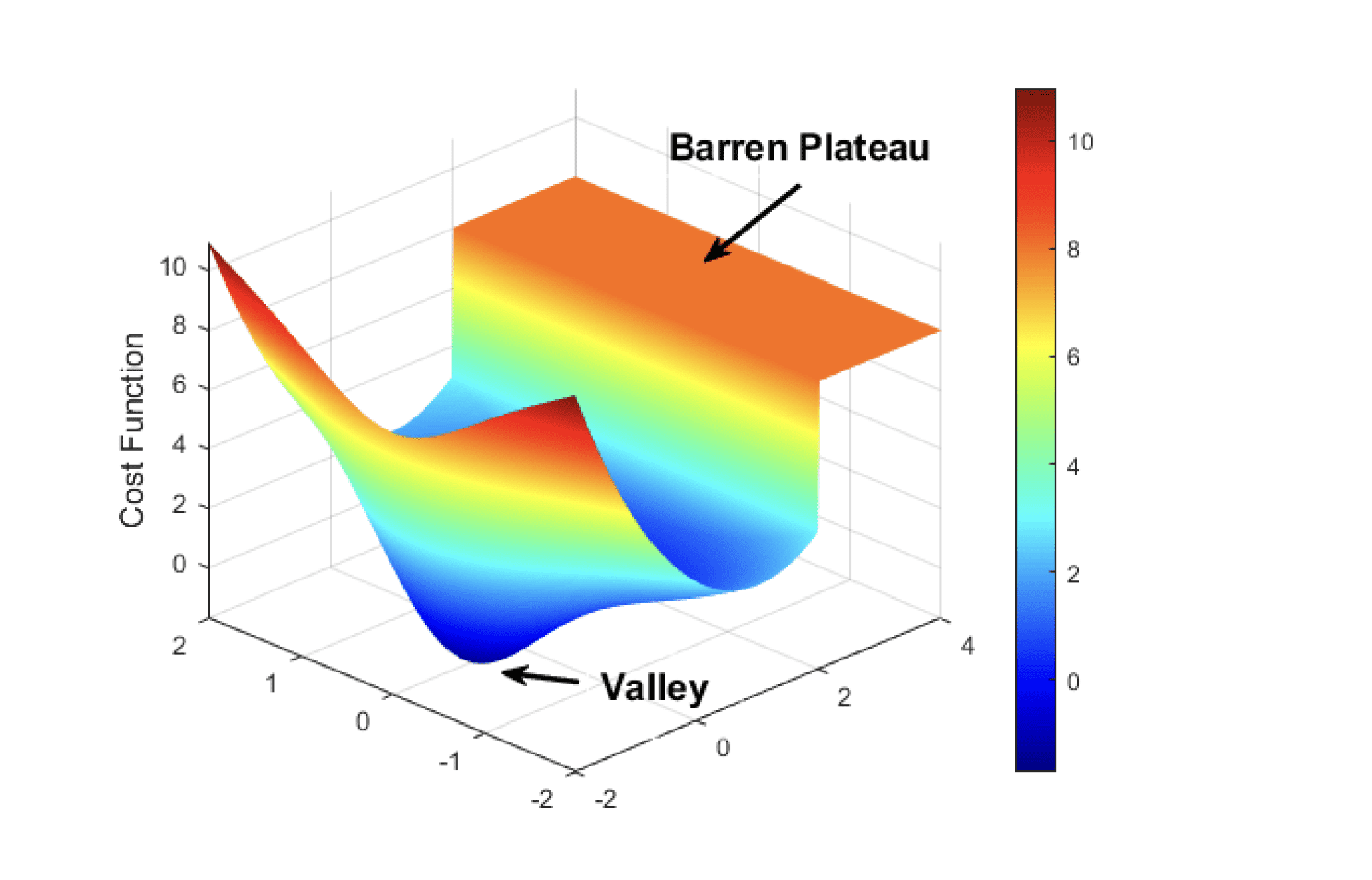

Variational quantum algorithms (VQAs) represent a promising route to utilizing near-term quantum computers, but their effectiveness is often limited by the ‘barren plateau’ problem, in which the gradients used for training vanish exponentially as the number of qubits grows, especially in deep, highly expressive circuits. Yifeng Peng from Stevens Institute of Technology, Xinyi Li, and Zhemin Zhang from Rensselaer Polytechnic Institute, along with Samuel Yen-Chi Chen and colleagues, investigate whether techniques commonly used to improve training in classical artificial neural networks can alleviate this issue in quantum circuits. The team systematically explores the application of classical weight initialization strategies, such as the Xavier and He methods, to variational quantum algorithms, adapting these concepts for quantum systems. Their results show that while these approaches offer some improvement in specific scenarios, the overall benefits remain limited; the work nonetheless provides a valuable baseline and outlines promising avenues for future research into overcoming the barren plateau.

Most current VQA research employs simple initialization schemes, such as zero or Gaussian initialization, whereas classical deep learning has long benefited from more principled weight initialization strategies such as Xavier, He, and orthogonal initialization. These classical methods were designed to counter a similar problem, vanishing gradients in deep networks, and may therefore improve VQA training as well. This work investigates the impact of various classical weight initialization strategies on the training dynamics and final performance of VQAs, with the aim of mitigating the barren plateau problem and making VQAs easier to train at scale.
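For reference, the classical schemes named above differ mainly in how they scale the variance of the initial weights with a layer's fan-in (number of inputs) and fan-out (number of outputs). The sketch below uses the plain normal-distribution variants and an all-zeros baseline; the function names are illustrative only, not taken from the paper or any library, and some libraries use truncated normals instead.

```python
import numpy as np

def init_std(scheme: str, fan_in: int, fan_out: int) -> float:
    """Standard deviation used by the normal variant of each classical scheme."""
    if scheme == "xavier":  # Glorot/Xavier: balances fan_in and fan_out
        return float(np.sqrt(2.0 / (fan_in + fan_out)))
    if scheme == "he":      # He/Kaiming: doubled variance to offset ReLU zeroing half its inputs
        return float(np.sqrt(2.0 / fan_in))
    if scheme == "lecun":   # LeCun: variance 1 / fan_in
        return float(np.sqrt(1.0 / fan_in))
    raise ValueError(f"unknown scheme: {scheme!r}")

def sample_weights(scheme: str, fan_in: int, fan_out: int,
                   rng: np.random.Generator) -> np.ndarray:
    """Draw a (fan_out, fan_in) weight matrix; 'zero' is the all-zeros baseline."""
    if scheme == "zero":
        return np.zeros((fan_out, fan_in))
    return rng.normal(0.0, init_std(scheme, fan_in, fan_out), size=(fan_out, fan_in))

rng = np.random.default_rng(0)
for scheme in ("zero", "xavier", "he", "lecun"):
    w = sample_weights(scheme, fan_in=256, fan_out=128, rng=rng)
    target = 0.0 if scheme == "zero" else init_std(scheme, 256, 128)
    print(f"{scheme:>6}: target std {target:.4f}, sample std {w.std():.4f}")
```

The open question the paper tackles is what `fan_in` and `fan_out` should mean for a parameterized quantum circuit, since a circuit has no direct analogue of a dense layer's input and output widths.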

Can Classical Initialization Mitigate Barren Plateaus?

This research paper investigates how different initialization strategies affect the training of deep variational quantum circuits (VQCs). The core problem is the barren plateau phenomenon, in which gradients vanish during training and stall the learning process. The authors explore whether techniques borrowed from classical deep learning, specifically various initialization methods, can alleviate this issue in the quantum setting. They find that carefully chosen initialization strategies can partially mitigate the barren plateau problem in some settings, improving training performance, although the gains are modest.
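To make the vanishing-gradient problem concrete, the sketch below estimates the variance of one gradient component over random initializations for a small layered circuit simulated directly in NumPy. The ansatz (RY rotations followed by a chain of CZ gates), the cost ⟨Z_0⟩, and all function names are illustrative assumptions, not the circuits used in the paper; gradients are computed exactly with the parameter-shift rule.

```python
import numpy as np

def apply_ry(state, theta, qubit):
    """Apply RY(theta) = exp(-i*theta*Y/2) to one qubit of an n-qubit state tensor."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    ry = np.array([[c, -s], [s, c]])
    state = np.tensordot(ry, state, axes=([1], [qubit]))  # new axis 0 = target qubit
    return np.moveaxis(state, 0, qubit)                   # move it back into place

def apply_cz(state, q1, q2):
    """Apply CZ by flipping the sign of amplitudes where both qubits are |1>."""
    state = state.copy()
    idx = [slice(None)] * state.ndim
    idx[q1], idx[q2] = 1, 1
    state[tuple(idx)] *= -1
    return state

def expectation_z0(params):
    """<Z_0> after a layered RY + CZ-chain ansatz; params has shape (layers, n)."""
    layers, n = params.shape
    state = np.zeros((2,) * n)
    state[(0,) * n] = 1.0  # start in |00...0>
    for layer in range(layers):
        for q in range(n):
            state = apply_ry(state, params[layer, q], q)
        for q in range(n - 1):
            state = apply_cz(state, q, q + 1)
    probs = state ** 2  # RY and CZ are real, so amplitudes stay real
    return probs[0].sum() - probs[1].sum()  # P(qubit 0 = 0) - P(qubit 0 = 1)

def parameter_shift_grad(params, layer, qubit):
    """Exact derivative of <Z_0> w.r.t. one RY angle via the parameter-shift rule."""
    shift = np.zeros_like(params)
    shift[layer, qubit] = np.pi / 2
    return 0.5 * (expectation_z0(params + shift) - expectation_z0(params - shift))

def uniform_init(rng, shape):
    """Baseline initialization: angles drawn uniformly from [0, 2*pi)."""
    return rng.uniform(0.0, 2 * np.pi, size=shape)

def gradient_variance(n, layers, sampler, samples=200, seed=0):
    """Sample variance of d<Z_0>/d(theta[0, 0]) over initializations drawn by `sampler`."""
    rng = np.random.default_rng(seed)
    grads = [parameter_shift_grad(sampler(rng, (layers, n)), 0, 0) for _ in range(samples)]
    return float(np.var(grads))

for n in range(2, 7):
    print(f"n={n}: Var[grad] = {gradient_variance(n, 2 * n, uniform_init):.2e}")
```

Under the barren plateau picture, the printed variance is expected to shrink rapidly as `n` grows. The `sampler` argument is the hook where an alternative, classically inspired initialization could be swapped in for comparison.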

The authors emphasize that classical schemes must be adapted to account for the unique characteristics of quantum circuits, such as gate structure, measurement types, and quantum noise. The paper highlights the difficulty of defining a meaningful number of parameters for a quantum circuit, a quantity the classical formulas need in the form of fan-in and fan-out, and how this choice affects the interpretation of results. The researchers suggest that a more refined definition, one that incorporates the structure of the quantum ansatz, is needed to clarify the relationship between initialization, circuit depth, and performance. The study includes numerical experiments on synthetic and molecular tasks, including the Heisenberg model and molecular geometry optimization, to evaluate the different initialization strategies. These results suggest that initialization is an important consideration when training deep VQCs, and that insights from classical deep learning can be valuable when adapted for quantum machine learning.

Adapting Classical Schemes to Quantum Circuits

Researchers are actively addressing the challenge of barren plateaus, a significant obstacle for near-term quantum computing, and this team asked whether classical weight initialization strategies can help. Inspired by the success of Xavier, He, and LeCun initialization in classical deep learning, the team systematically explored adapting these methods to quantum circuits, aiming to temper gradient decay and speed up convergence. The study surveys classical initialization schemes, proposes heuristic quantum initializations, and compares them in extensive numerical experiments against the common Gaussian and zero initializations across various optimization tasks. While these classically inspired initializations yield moderate improvements in certain scenarios, their overall benefits remain marginal, suggesting a need for more nuanced approaches.

Specifically, the team implemented a chunk-based layerwise approach, partitioning the total parameter vector into per-layer chunks and initializing each chunk with the heuristic setting fan-in = fan-out = $n$, where $n$ is the number of qubits. For example, with the Xavier formula $\sigma = \sqrt{2/(\text{fan-in} + \text{fan-out})}$, this heuristic gives a per-layer standard deviation of $\sigma_l = \sqrt{2/(2n)} = \sqrt{1/n}$. The researchers also explored a global approach, setting fan-in equal to the total number of parameters, and implemented simpler variants without chunk partitioning. The study highlights open challenges, such as refining the definitions of fan-in and fan-out to better suit quantum circuits, and proposes future research directions that build on these insights.
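A minimal NumPy sketch of the two fan-in/fan-out heuristics described above, assuming Xavier-style normal initialization. The function names and the example ansatz shape are hypothetical, and because the article does not say which fan-out the global variant uses, it is left as an explicit argument here.

```python
import numpy as np

def xavier_std(fan_in: int, fan_out: int) -> float:
    """Glorot/Xavier normal standard deviation."""
    return float(np.sqrt(2.0 / (fan_in + fan_out)))

def layerwise_init(num_params: int, num_layers: int, num_qubits: int,
                   rng: np.random.Generator) -> np.ndarray:
    """Chunk-based layerwise heuristic (hypothetical implementation).

    The flat parameter vector is split into one chunk per layer, and each chunk
    uses fan_in = fan_out = num_qubits, i.e. sigma_l = sqrt(1 / n).
    """
    theta = np.empty(num_params)
    for chunk in np.array_split(np.arange(num_params), num_layers):
        # Every layer acts on the same n qubits, so sigma_l is identical here;
        # a layer-dependent fan-in/fan-out would be plugged in at this point.
        sigma_l = xavier_std(num_qubits, num_qubits)
        theta[chunk] = rng.normal(0.0, sigma_l, size=chunk.size)
    return theta

def global_init(num_params: int, fan_out: int, rng: np.random.Generator) -> np.ndarray:
    """Global heuristic: fan_in = total parameter count (fan_out is an assumption)."""
    return rng.normal(0.0, xavier_std(num_params, fan_out), size=num_params)

rng = np.random.default_rng(0)
n_qubits, n_layers, params_per_qubit = 6, 10, 3  # hypothetical ansatz shape
P = n_qubits * n_layers * params_per_qubit
theta_layer = layerwise_init(P, n_layers, n_qubits, rng)
theta_global = global_init(P, fan_out=n_qubits, rng=rng)
print(f"P={P}: layerwise sigma={xavier_std(n_qubits, n_qubits):.3f}, "
      f"global sigma={xavier_std(P, n_qubits):.3f}")
```

The design difference is visible in the formulas: the global variant shrinks σ as the parameter count P grows, so deeper circuits start closer to zero angles, whereas the layerwise variant keeps σ_l = √(1/n) regardless of depth.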

Classical Initialization Fails to Solve Barren Plateaus

This work investigates whether weight initialization strategies commonly used in classical deep learning can mitigate the barren plateau problem in variational quantum algorithms. The researchers adapted initialization methods, including Xavier, He, and orthogonal initialization, to quantum circuits and tested them on various circuit architectures and optimization tasks. These classical strategies offer some improvement, but their overall benefit against barren plateaus remains marginal, and the authors outline several avenues for future research.

These include exploring initialization strategies that more closely align with quantum logic, potentially at the cost of increased computational expense, and applying orthogonal initialization to blocks of parameters to reflect partial entanglement or layered circuit structures. Additionally, the authors propose that over-parameterization, combined with factorization techniques, could generate diverse initial conditions without compromising orthogonality guarantees. These explorations aim to refine initialization strategies and improve the training of variational quantum algorithms.
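The block-wise orthogonal idea is only proposed as future work, so the following is a speculative sketch rather than the authors' method. It assumes the circuit angles are arranged as a (layers × qubits) matrix and gives each group of consecutive layers its own semi-orthogonal block, built with the standard QR-of-a-Gaussian construction used for orthogonal initialization in deep learning; `block_layers` and `gain` are hypothetical knobs.

```python
import numpy as np

def orthogonal(rows, cols, rng, gain=1.0):
    """Semi-orthogonal (rows x cols) matrix from the QR decomposition of a Gaussian matrix."""
    a = rng.normal(size=(max(rows, cols), min(rows, cols)))
    q, r = np.linalg.qr(a)            # reduced QR: q has orthonormal columns
    q = q * np.sign(np.diag(r))       # remove QR's sign bias so q is uniformly distributed
    if rows < cols:
        q = q.T                       # wide case: orthonormal rows instead of columns
    return gain * q

def block_orthogonal_init(num_layers, num_qubits, block_layers, rng, gain=1.0):
    """Hypothetical block scheme: each group of `block_layers` consecutive layers
    receives its own semi-orthogonal (block_layers x num_qubits) parameter block."""
    blocks = []
    for start in range(0, num_layers, block_layers):
        rows = min(block_layers, num_layers - start)  # last block may be smaller
        blocks.append(orthogonal(rows, num_qubits, rng, gain))
    return np.vstack(blocks)          # angles with shape (num_layers, num_qubits)

rng = np.random.default_rng(0)
theta = block_orthogonal_init(num_layers=6, num_qubits=4, block_layers=2, rng=rng)
print(theta.shape)  # (6, 4)
print(np.round(theta[:2] @ theta[:2].T, 6))  # close to the 2x2 identity
```

Choosing `block_layers` to match a repeating sub-structure of the ansatz is one way the "layered circuit structure" mentioned above could be reflected; rows are orthonormal only within a block, not across blocks.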

👉 More information

🗞 Can Classical Initialization Help Variational Quantum Circuits Escape the Barren Plateau?

🧠 ArXiv: https://arxiv.org/abs/2508.18497