The challenge of training highly complex quantum circuits remains a significant hurdle in the development of practical quantum machine learning. Jun Qi, Chao-Han Huck Yang, and Pin-Yu Chen, alongside Min-Hsiu Hsieh et al., have investigated why increasingly expressive variational quantum circuits (VQCs) often fail to train effectively and generalise beyond limited datasets. Their research, conducted across NVIDIA Research, IBM, and Hon Hai Quantum Computing Research Center, reveals a fundamental principle they term ‘simplicity bias’, demonstrating that these circuits naturally gravitate towards producing near-constant outputs. This work establishes that barren plateaus, regions of vanishing gradients, are a result of this bias rather than the primary cause of training difficulties. By applying random matrix theory, the team rigorously proves this collapse in complexity and, crucially, identifies circuit architectures, such as those utilising tensor networks, that can circumvent this limitation and maintain robust performance.

Haar Universality and VQC Trainability Limits

Over-parameterization is commonly used to increase the expressivity of variational quantum circuits (VQCs), yet deeper and more highly parameterized circuits often exhibit poor trainability and limited generalization. This work provides a theoretical explanation for this phenomenon from a function-class perspective, investigating the behaviour of these circuits as their complexity increases. Researchers demonstrate that sufficiently expressive, unstructured variational ansätze enter a Haar-like universality class, a concept borrowed from random matrix theory, with significant implications for optimisation. Specifically, they show that both observable expectation values and parameter gradients concentrate exponentially with system size, suggesting a loss of information as the circuit grows.
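The concentration of expectation values can be checked numerically in the idealised limit. The following is a minimal sketch, not the authors' code: it assumes the circuit output state is exactly Haar-random (the limit the article describes), measures Pauli-Z on one qubit, and compares the sampled variance with the standard Haar value 1/(2^n + 1). The function names `haar_state`, `z0_expectation`, and `empirical_variance` are hypothetical, introduced only for this illustration.

```python
import numpy as np

def haar_state(n_qubits, rng):
    """Sample a Haar-random pure state by normalising a complex Gaussian vector."""
    d = 2 ** n_qubits
    v = rng.normal(size=d) + 1j * rng.normal(size=d)
    return v / np.linalg.norm(v)

def z0_expectation(state):
    """<Z> on the first qubit, taken here as the most significant bit of the index."""
    probs = np.abs(state) ** 2
    half = probs.size // 2
    # First half of the amplitudes has qubit 0 in |0>, second half in |1>.
    return probs[:half].sum() - probs[half:].sum()

def empirical_variance(n_qubits, n_samples=2000, seed=0):
    """Variance of <Z_0> over Haar-random states (assumed idealisation)."""
    rng = np.random.default_rng(seed)
    vals = [z0_expectation(haar_state(n_qubits, rng)) for _ in range(n_samples)]
    return float(np.var(vals))

if __name__ == "__main__":
    for n in (2, 4, 6, 8):
        # Compare the sampled variance with the Haar prediction 1 / (2^n + 1).
        print(n, empirical_variance(n), 1.0 / (2 ** n + 1))
```

Under these assumptions the sampled variance should track 1/(2^n + 1), so each added qubit roughly halves the spread of outputs around zero.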

As a consequence, the hypothesis class induced by such circuits collapses with high probability to a narrow family of near-constant functions, hindering the ability to learn complex relationships within the data. The approach taken involves a rigorous mathematical analysis of the statistical properties of VQC outputs and gradients in the limit of large system size, leveraging tools from random matrix theory and statistical physics to characterise the concentration of these quantities. By establishing a connection to the Haar-like universality class, the researchers are able to derive analytical results describing the scaling of trainability and generalisation performance.

This theoretical framework allows for a deeper understanding of the limitations imposed by over-parameterization in VQCs, moving beyond purely empirical observations. The specific contributions of this work lie in providing a theoretical justification for the observed trainability issues in highly parameterized VQCs, demonstrating for the first time that the collapse of the hypothesis class is not merely an empirical phenomenon but a consequence of fundamental properties of the circuit structure. This understanding offers a pathway towards designing more effective VQC architectures that avoid the pitfalls of over-parameterization, and the established connection to the Haar-like universality class provides a novel perspective on the behaviour of quantum machine learning models and opens up avenues for future research.

VQCs, Expressivity and Random Matrix Theory

The research team investigated the trainability challenges arising in over-parameterized Variational Quantum Circuits (VQCs), focusing on a function-class perspective to explain observed limitations. Their work began by defining over-parameterization not by parameter count alone, but as a regime in which circuit expressivity is sufficient to approximate unitary 2-designs, even with local observables. The analysis considered unstructured, hardware-efficient, or random circuit ansätze that induce Haar-like behaviour, allowing the scientists to model concentration-of-measure phenomena in both circuit outputs and gradients as system size increased.

To rigorously characterise this behaviour, the study harnessed tools from random matrix theory, modelling the statistical properties of circuit outputs and gradients when the induced unitary ensemble approached Haar-randomness. This approach enabled the team to formalise the conditions under which over-parameterized, unstructured VQCs exhibit concentration of measure, demonstrating that such circuits collapse with high probability to a narrow family of near-constant functions, termed ‘simplicity bias’. This collapse, they found, precedes and drives barren plateaus, rather than being a consequence of them, and occurs uniformly over finite datasets.
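To make the finite-dataset statement concrete, here is a hedged back-of-the-envelope version using only standard Haar second moments. It is not the paper's theorem. The symbols are assumptions introduced for illustration: $O$ is a Pauli observable (traceless, $O^2 = I$), $d = 2^n$ is the Hilbert-space dimension, $f_\theta(x) = \langle\psi_\theta(x)|O|\psi_\theta(x)\rangle$ is the circuit output, and $x_1, \dots, x_M$ is the dataset. For each fixed input, the state is assumed to be distributed as a 2-design over the random parameters $\theta$.

```latex
\mathbb{E}_{\theta}\!\left[f_\theta(x)\right] = \frac{\operatorname{Tr}(O)}{d} = 0,
\qquad
\operatorname{Var}_{\theta}\!\left[f_\theta(x)\right]
  = \frac{d\,\operatorname{Tr}(O^{2}) - \operatorname{Tr}(O)^{2}}{d^{2}(d+1)}
  = \frac{1}{2^{n}+1}.

% Chebyshev's inequality for each input, then a union bound over the M inputs:
\Pr_{\theta}\!\left[\max_{1 \le i \le M} \left|f_\theta(x_i)\right| \ge \varepsilon\right]
  \le \sum_{i=1}^{M} \Pr_{\theta}\!\left[\left|f_\theta(x_i)\right| \ge \varepsilon\right]
  \le \frac{M}{(2^{n}+1)\,\varepsilon^{2}}.
```

The union bound needs no independence between inputs, so for any dataset whose size grows slower than $2^n$ the outputs are close to the constant zero on every point simultaneously, with high probability. Sharper, exponentially small tail bounds follow from concentration-of-measure arguments such as Lévy's lemma.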

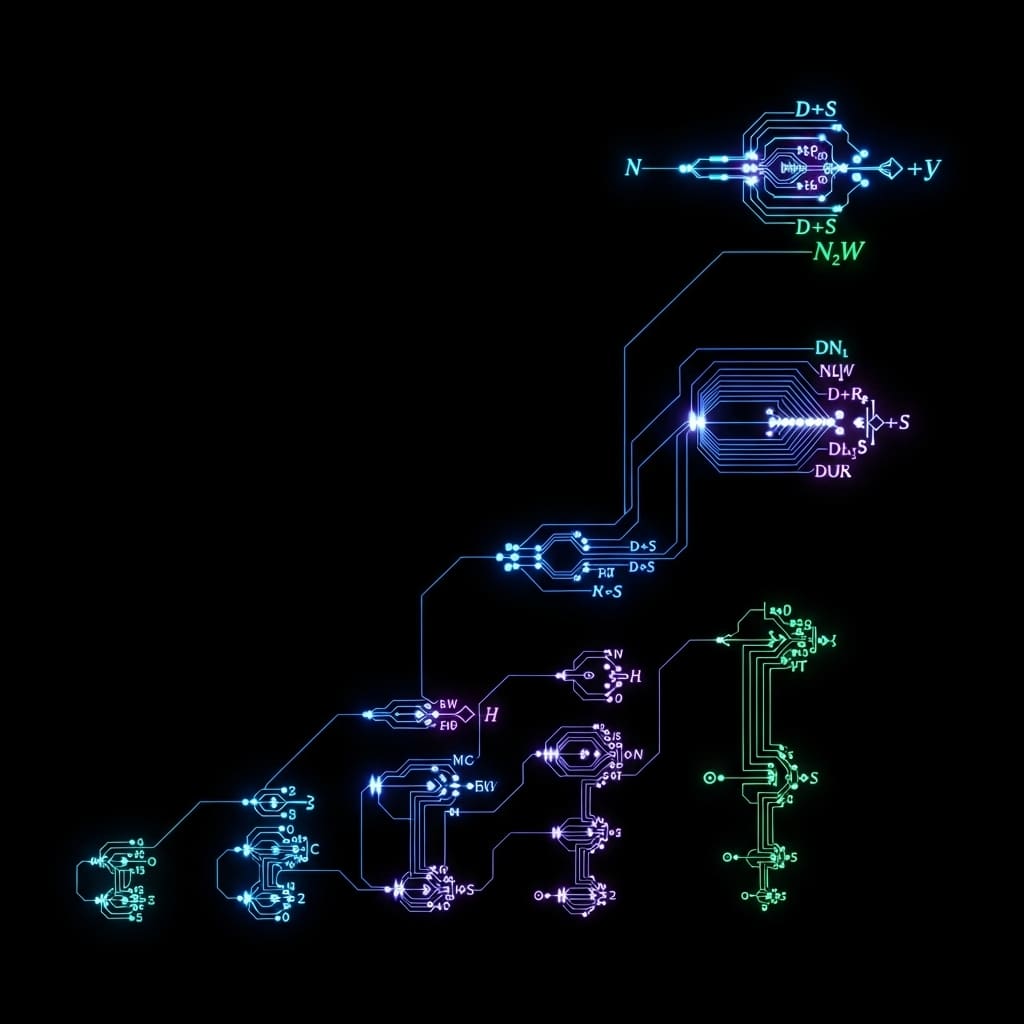

Seeking to overcome these limitations, scientists developed tensor-structured VQCs, explicitly restricting the accessible unitary ensemble through bounded tensor rank or bond dimension. Two architectures were implemented: the Tensor Network VQC (TN-VQC) and the TensorHyper-VQC. The TN-VQC utilises a classical tensor network to generate data-dependent encoding features, mapping inputs to a reduced n-dimensional space before parameterising the data-encoding unitary. Conversely, the TensorHyper-VQC employs a tensor network as a hypernetwork, directly generating the variational circuit parameters from the input using a mapping from a Gaussian random vector.
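The idea of a classical tensor network that compresses an input into n encoding features can be sketched with a tensor-train (matrix product) layer. This is an illustrative assumption rather than the authors' implementation: the core shapes, the `tanh` squashing into rotation angles, and the names `init_tt_cores`, `tt_apply`, and `encoding_angles` are all hypothetical.

```python
import numpy as np

def init_tt_cores(in_dims, out_dims, rank, rng):
    """Random tensor-train cores; core k has shape (r_k, in_k, out_k, r_{k+1})."""
    ranks = [1] + [rank] * (len(in_dims) - 1) + [1]
    return [
        rng.normal(
            scale=1.0 / np.sqrt(ranks[k] * in_dims[k]),
            size=(ranks[k], in_dims[k], out_dims[k], ranks[k + 1]),
        )
        for k in range(len(in_dims))
    ]

def tt_apply(x, cores):
    """Contract the input with the tensor-train matrix, one core at a time."""
    # state axes: (outputs produced so far, bond index, remaining inputs)
    state = x.reshape(1, 1, -1)
    for core in cores:
        r, i, o, s = core.shape
        a = state.shape[0]
        state = state.reshape(a, r, i, -1)
        # Sum over the bond index r and the current input index i.
        state = np.einsum("arib,rios->aosb", state, core)
        state = state.reshape(a * o, s, -1)
    return state.reshape(-1)

def encoding_angles(x, cores):
    """Squash the TT features into rotation angles (an assumed design choice)."""
    return np.pi * np.tanh(tt_apply(x, cores))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # 64 input features -> 4 angles (one per qubit), bond dimension 3.
    cores = init_tt_cores((4, 4, 4), (2, 2, 1), rank=3, rng=rng)
    print(encoding_angles(rng.normal(size=64), cores))
```

In this sketch the bond dimension (`rank`) is the single knob that bounds how much correlation the map can carry between input blocks, which mirrors the bounded-rank restriction described above.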

Crucially, both tensor-structured approaches maintain bounded operator Schmidt rank and entanglement entropy, preventing convergence to Haar-like typicality even in over-parameterized regimes. The team demonstrated that by limiting entanglement growth through controlled tensor rank, these architectures preserve output variability and non-degenerate gradient signals, offering a pathway towards scalable, trainable variational quantum algorithms. Analysis of the operator Schmidt rank, which quantifies how strongly an operator can entangle the two sides of a bipartition, revealed that Haar-random unitaries have a Schmidt rank that grows exponentially with system size, while tensor-network generated circuits maintain a bounded rank independent of system size.
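The operator Schmidt rank across a bipartition can be computed directly for small systems. The sketch below is an illustration under stated assumptions, not the paper's analysis: it reshapes a unitary on subsystems A and B into a matrix indexed by (A-in, A-out) and (B-in, B-out) and counts its nonzero singular values. The helper names `haar_unitary` and `operator_schmidt_rank` and the numerical tolerance are hypothetical choices.

```python
import numpy as np

def haar_unitary(dim, rng):
    """Haar-random unitary via QR of a complex Gaussian matrix, with phase fix."""
    z = (rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    phases = np.diag(r) / np.abs(np.diag(r))
    return q * phases  # scales column j of q by phases[j]

def operator_schmidt_rank(u, dim_a, dim_b, tol=1e-10):
    """Number of nonzero operator Schmidt coefficients of u across the A|B cut."""
    # u[(a b), (a' b')] -> t[a, b, a', b'] -> m[(a a'), (b b')]
    t = u.reshape(dim_a, dim_b, dim_a, dim_b)
    m = t.transpose(0, 2, 1, 3).reshape(dim_a * dim_a, dim_b * dim_b)
    s = np.linalg.svd(m, compute_uv=False)
    return int(np.sum(s > tol * s[0]))

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    product = np.kron(haar_unitary(4, rng), haar_unitary(4, rng))
    generic = haar_unitary(16, rng)
    # A product unitary has rank 1; a generic Haar unitary saturates the
    # maximum min(dim_a, dim_b)^2 for the chosen cut.
    print(operator_schmidt_rank(product, 4, 4), operator_schmidt_rank(generic, 4, 4))
```

For n qubits split in half, the maximum rank is 2^n, which is the exponential growth attributed to Haar-random unitaries, whereas a circuit built from a tensor network with fixed bond dimension cannot exceed a rank set by that bond dimension.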

Variational Circuits Exhibit Exponential Simplicity Bias

Scientists have demonstrated a fundamental limitation in the performance of highly parameterized variational quantum circuits (VQCs). Their work reveals that as circuits become deeper and more complex, they enter a universality class akin to random matrices, leading to an exponential concentration of both observable expectation values and parameter gradients with increasing system size. Experiments revealed that this concentration causes the hypothesis class, the set of functions the circuit can represent, to collapse with high probability into a narrow family of near-constant functions, a phenomenon the researchers term ‘simplicity bias’.

The study rigorously characterized this universality class using tools from random matrix theory and concentration of measure, establishing a uniform collapse of the hypothesis class even with finite datasets. The collapse is not, however, an unavoidable consequence of increasing circuit complexity: the team found that tensor-structured VQCs, including those based on tensor networks and tensor hypernetworks, operate outside this problematic Haar-like universality class. By restricting the accessible unitary ensemble through bounded tensor rank or bond dimension, these architectures effectively prevent the concentration of measure observed in unstructured circuits.

Results demonstrate that these tensor-structured VQCs preserve output variability for local observables and maintain non-degenerate gradient signals, even when significantly over-parameterized. The research unified previously observed issues like barren plateaus, expressivity limits, and generalization collapse under a single structural mechanism rooted in random-matrix universality. Specifically, the team showed that the architectural inductive bias, the inherent assumptions built into the circuit design, plays a central role in determining the circuit’s representational capacity. Further analysis shows that unstructured VQCs, when parameterized randomly, approach a state where their outputs become largely independent of the input data, while tensor-structured VQCs, by limiting the complexity of the unitary transformations, avoid this collapse and retain the ability to represent a wider range of functions.

This breakthrough delivers a new understanding of the interplay between circuit architecture, expressivity, and trainability in variational quantum algorithms, paving the way for the design of more robust and effective quantum machine learning models.

Representational Collapse Drives Barren Plateaus

Researchers have demonstrated a fundamental limitation affecting the trainability and generalisation ability of highly parameterised variational quantum circuits (VQCs). Their work establishes that sufficiently expressive, unstructured VQC designs fall into a universality class analogous to that of random matrices, leading to an exponential concentration of both output values and parameter gradients. This concentration results in a ‘simplicity bias’, where the effective function represented by the circuit collapses to a near-constant value. Barren plateaus then emerge as a consequence of this representational collapse rather than as the primary issue.

The study rigorously characterised this behaviour using tools from random matrix theory and concentration of measure, proving that this collapse occurs consistently across finite datasets. Importantly, the authors demonstrated that this limitation is not inherent to all VQC architectures, as circuits incorporating tensor structures, such as those based on tensor networks or hypernetworks, avoid this universality class by restricting the accessible unitary space through bounded tensor rank or bond dimension.

👉 More information

🗞 Random-Matrix-Induced Simplicity Bias in Over-parameterized Variational Quantum Circuits

🧠 ArXiv: https://arxiv.org/abs/2601.01877