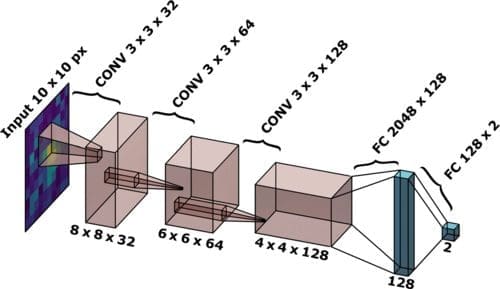

Machine learning has significant potential to improve the measurement (readout) process in quantum computing, enabling faster readout and higher fidelity. Building on convolutional neural networks (CNNs) applied to neutral-atom qubit readout data, researchers can design more sophisticated CNN architectures tailored to this data. They can also explore other algorithms, such as recurrent neural networks (RNNs) or long short-term memory (LSTM) networks, to further improve the accuracy and reliability of qubit state measurements. Promising applications include scalable quantum computation and quantum error correction.
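To make the idea of a CNN "analyzing readout data" concrete, here is a minimal forward pass in plain Python. It assumes the readout data for one atom site is a small camera image of photon counts (5×5 pixels here). This is not the architecture from the paper: the kernel, weight, and bias (`HYPOTHETICAL_KERNEL`, `HYPOTHETICAL_WEIGHT`, `HYPOTHETICAL_BIAS`) are hand-picked for illustration, whereas a real CNN learns many filters across several layers from labeled images.

```python
import math

# Hypothetical, hand-picked parameters for illustration only; a real CNN
# learns many such filters (and deeper layers) from labeled readout images.
HYPOTHETICAL_KERNEL = [
    [0.05, 0.10, 0.05],
    [0.10, 0.40, 0.10],
    [0.05, 0.10, 0.05],
]
HYPOTHETICAL_WEIGHT = 1.0
HYPOTHETICAL_BIAS = -3.0


def conv2d_valid(image, kernel):
    """2D cross-correlation with no padding, as computed by CNN conv layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Elementwise ReLU nonlinearity."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def global_max_pool(feature_map):
    """Collapse the feature map to its single strongest response."""
    return max(v for row in feature_map for v in row)


def bright_probability(image, kernel=HYPOTHETICAL_KERNEL,
                       weight=HYPOTHETICAL_WEIGHT, bias=HYPOTHETICAL_BIAS):
    """Conv -> ReLU -> global max pool -> logistic output: P(atom is bright)."""
    feature = global_max_pool(relu(conv2d_valid(image, kernel)))
    return 1.0 / (1.0 + math.exp(-(weight * feature + bias)))


# Toy 5x5 photon-count images: a fluorescing atom vs. a dark site with one stray count.
bright_image = [
    [0, 0, 0, 0, 0],
    [0, 2, 4, 2, 0],
    [0, 4, 10, 4, 0],
    [0, 2, 4, 2, 0],
    [0, 0, 0, 0, 0],
]
dark_image = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]

print(f"bright site: P(bright) = {bright_probability(bright_image):.3f}")
print(f"dark site:   P(bright) = {bright_probability(dark_image):.3f}")
```

Unlike a single photon-count threshold, the convolution weighs each pixel by its position relative to the atom, which is the kind of spatial information a trained CNN can exploit.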

Can Machine Learning Enhance Quantum Computing?

Yes. In particular, machine learning can help achieve scalability: faster, higher-fidelity readout lets researchers perform more sequential operations on a larger number of qubits, which is critical for scalable quantum computation.
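The trade-off between readout speed and fidelity can be illustrated with a toy model. It assumes detected photon counts are Poisson-distributed and scale linearly with exposure time, and that the state is assigned with a single count threshold (a simple baseline, not necessarily the one used in the paper). The photon rates `BRIGHT_RATE` and `DARK_RATE` are hypothetical. Fidelity is taken as the standard assignment fidelity F = 1 - (ε_bright + ε_dark)/2, where each ε is the probability of misassigning that state.

```python
import math

# Hypothetical photon-detection rates (counts per millisecond of exposure).
BRIGHT_RATE = 10.0  # atom present and fluorescing
DARK_RATE = 0.5     # background counts at an empty or dark site


def poisson_cdf(k, mean):
    """P(N <= k) for N ~ Poisson(mean), summed term by term."""
    if k < 0:
        return 0.0
    term = math.exp(-mean)
    total = term
    for n in range(1, k + 1):
        term *= mean / n
        total += term
    return total


def best_threshold_fidelity(bright_mean, dark_mean, max_threshold=200):
    """Scan thresholds t (call 'bright' when counts >= t); return (t, fidelity)."""
    best_t, best_fid = 0, 0.0
    for t in range(1, max_threshold + 1):
        eps_bright = poisson_cdf(t - 1, bright_mean)    # bright read as dark
        eps_dark = 1.0 - poisson_cdf(t - 1, dark_mean)  # dark read as bright
        fidelity = 1.0 - 0.5 * (eps_bright + eps_dark)
        if fidelity > best_fid:
            best_t, best_fid = t, fidelity
    return best_t, best_fid


def fidelity_at_exposure(exposure_ms):
    """Best single-threshold fidelity for a given exposure time."""
    return best_threshold_fidelity(BRIGHT_RATE * exposure_ms, DARK_RATE * exposure_ms)


for exposure_ms in (0.5, 1.0, 3.0):
    t, fid = fidelity_at_exposure(exposure_ms)
    print(f"{exposure_ms:.1f} ms exposure: threshold {t} counts, fidelity {fid:.4f}")
```

Shorter exposures collect fewer photons, so the bright and dark count distributions overlap more and even the best threshold misassigns more shots. A better classifier aims to recover fidelity at these shorter, faster readout times.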

What are the Challenges Facing Machine Learning in Quantum Computing?

One major challenge is designing more sophisticated CNN architectures specifically suited to neutral-atom qubit readout data. Another is determining whether other machine learning algorithms, such as RNNs or LSTM networks, can further improve the accuracy and reliability of qubit state measurements.

In short, machine learning has significant potential to enhance the measurement process. CNN architectures designed specifically for neutral-atom qubit readout data can improve the accuracy and reliability of qubit state measurements, and this proof-of-concept demonstration has important implications for the development of large-scale quantum computers.

Can Machine Learning Help Achieve Quantum Error Correction?

It can help, though indirectly. Quantum error correction works best when errors on different qubits are uncorrelated, but crosstalk during readout can make the predicted state of one qubit depend on its neighbors. By analyzing the readout data from neighboring qubits together with a multi-qubit CNN architecture, the researchers reduced the correlation between the predicted states of neighboring qubits by up to 78.5%.
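One way to quantify crosstalk-induced correlation is the Pearson correlation between the predicted states of two neighboring qubits over many shots (for 0/1 outcomes this equals the phi coefficient). The sketch below assumes that metric and assumes the 78.5% figure is a relative reduction; the paper's exact definition may differ. The shot data are made up purely for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (phi coefficient for 0/1 data)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def relative_reduction_percent(before, after):
    """Percentage drop in the magnitude of a correlation coefficient."""
    return 100.0 * (abs(before) - abs(after)) / abs(before)


# Made-up predicted states (1 = bright, 0 = dark) over 16 shots for qubit A
# and its neighbor B, when B is classified independently vs. jointly with A.
pred_a = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
pred_b_independent = [0, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
pred_b_joint = [0, 1, 0, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0]

r_before = pearson(pred_a, pred_b_independent)
r_after = pearson(pred_a, pred_b_joint)
print(f"correlation before: {r_before:.3f}, after: {r_after:.3f}")
print(f"relative reduction: {relative_reduction_percent(r_before, r_after):.1f}%")
```

In this toy example the correlation drops from 0.5 to 0.25, a 50% relative reduction; the paper reports reductions of up to 78.5% on its experimental data.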

What are the Future Directions for Machine Learning in Quantum Computing?

Two directions stand out. First, researchers can continue developing more sophisticated CNN architectures tailored to neutral-atom qubit readout data. Second, they can explore other machine learning algorithms, such as RNNs or LSTM networks, to further improve the accuracy and reliability of qubit state measurements.


Publication details: “Enhanced measurement of neutral-atom qubits with machine learning”

Publication Date: 2024-08-05

Authors: L. Phuttitarn, B. M. Becker, Ravikumar Chinnarasu, T. M. Graham, et al.

Source: Physical Review Applied

DOI: https://doi.org/10.1103/physrevapplied.22.024011